This document provides an overview of a course on parallel and distributed computer systems. It covers course logistics: lectures and assessments are in English, and homework and projects count toward the final grade. It lists resources such as textbooks and online materials, explains how to find teaching materials and assignments on the Jusur learning platform, and sets out the policies on cheating and late assignments.

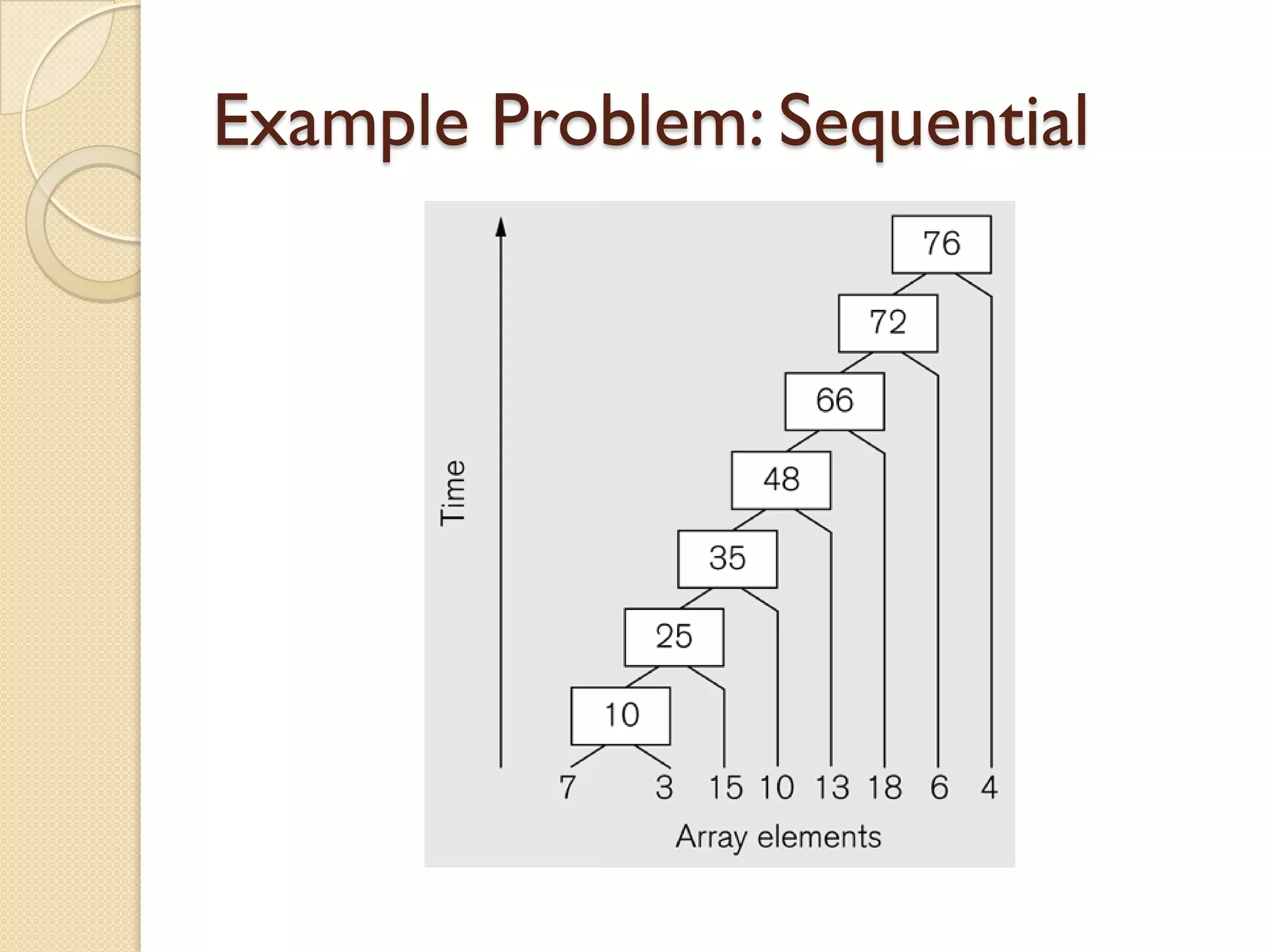

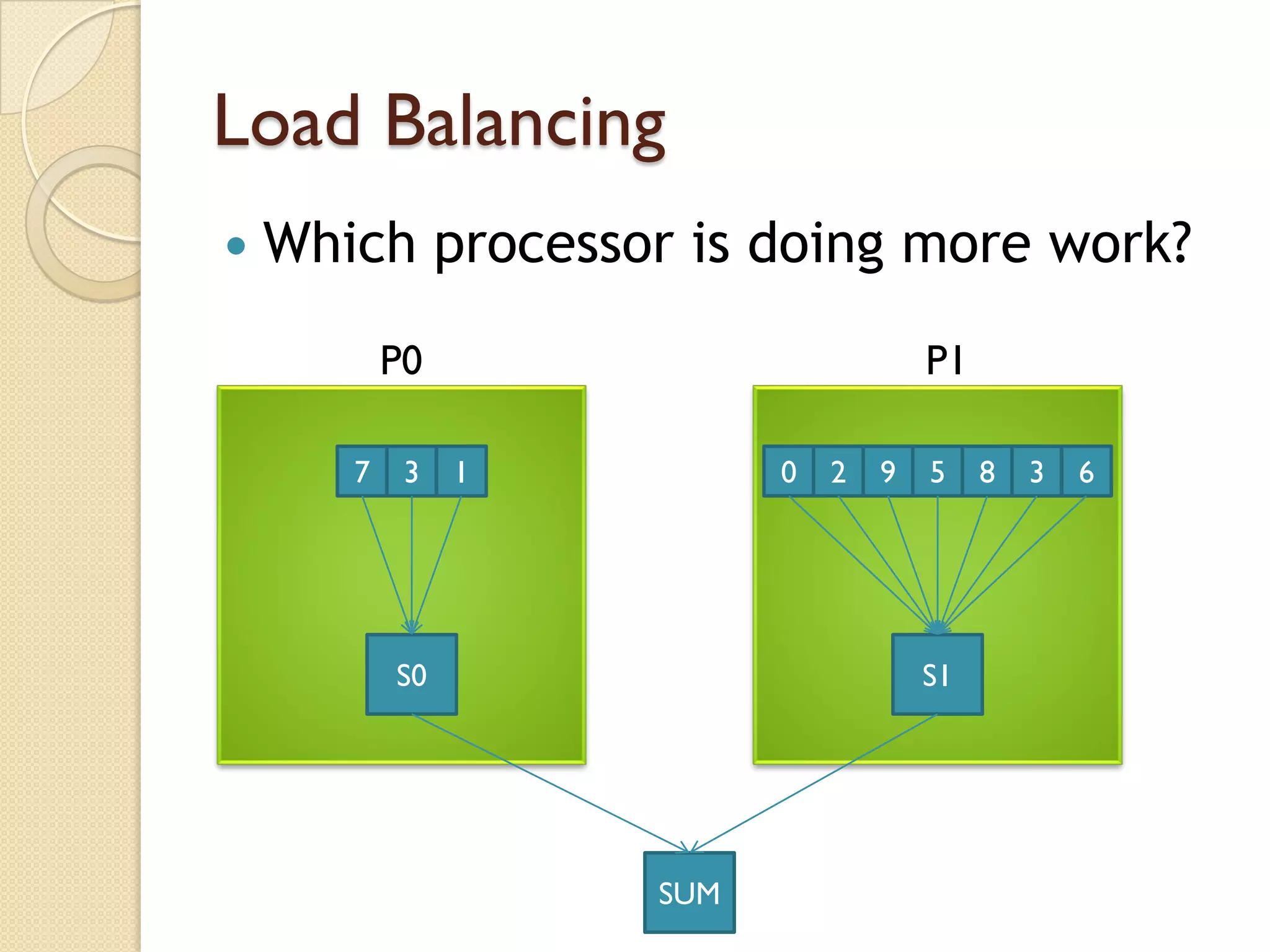

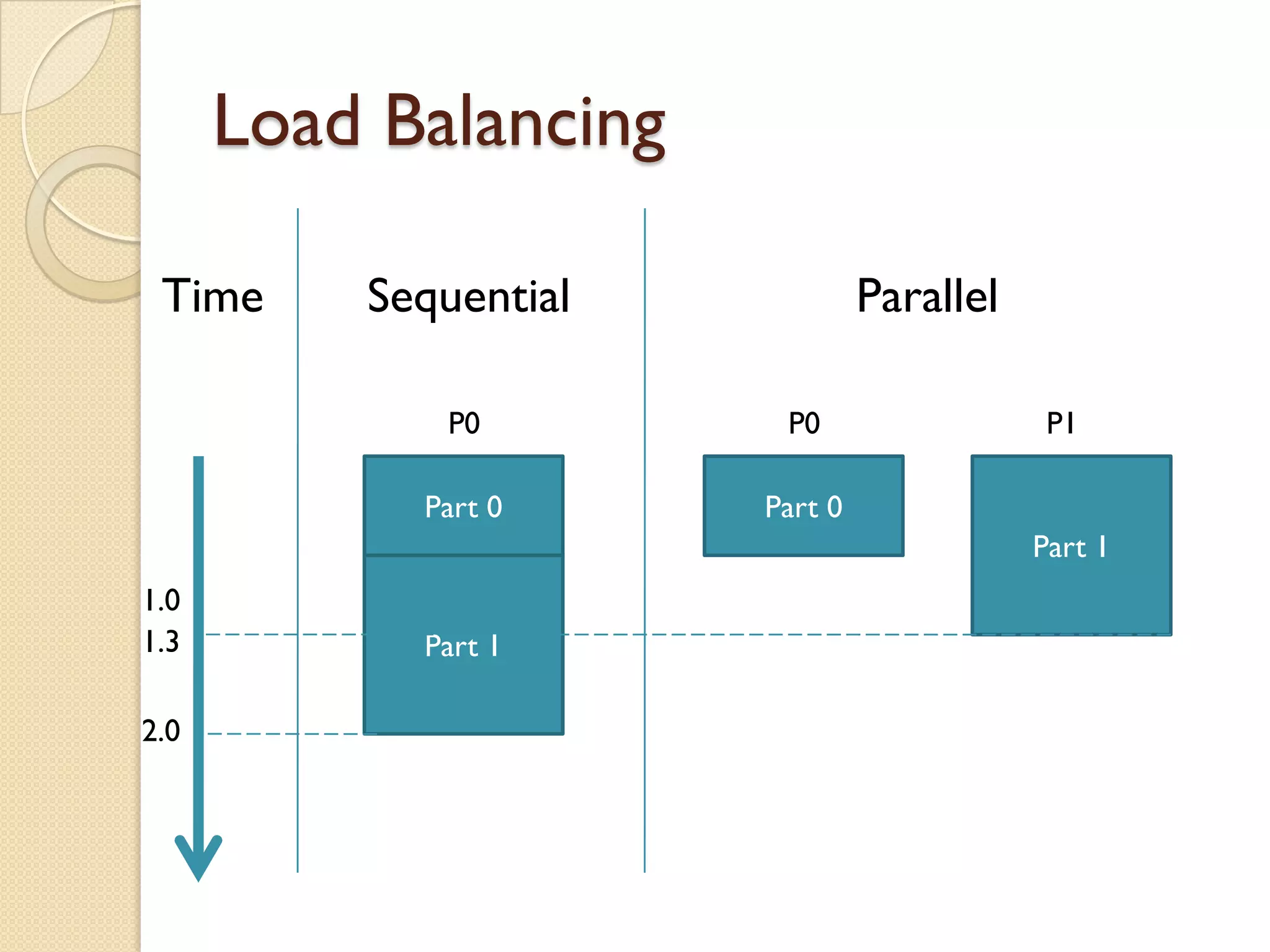

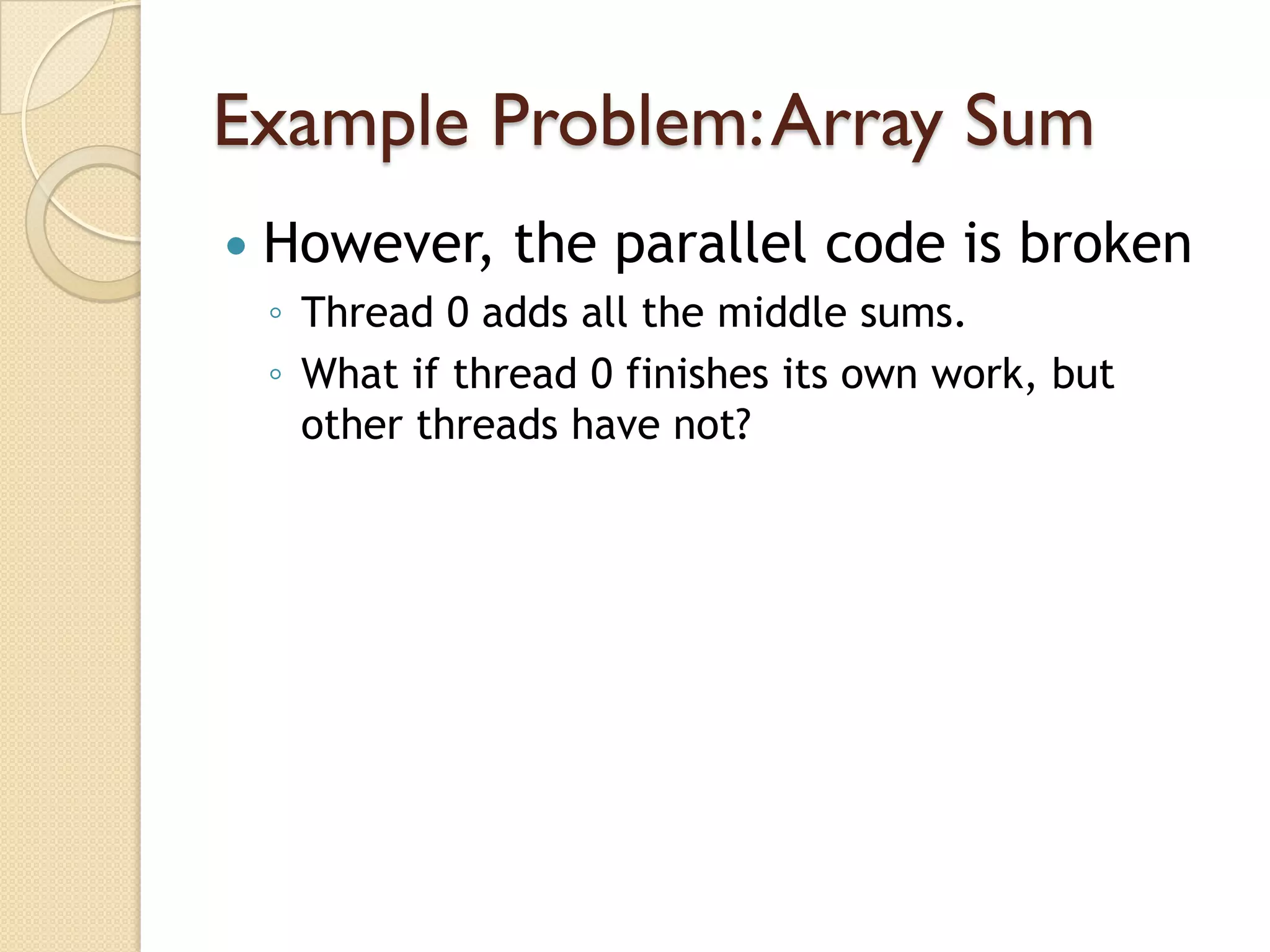

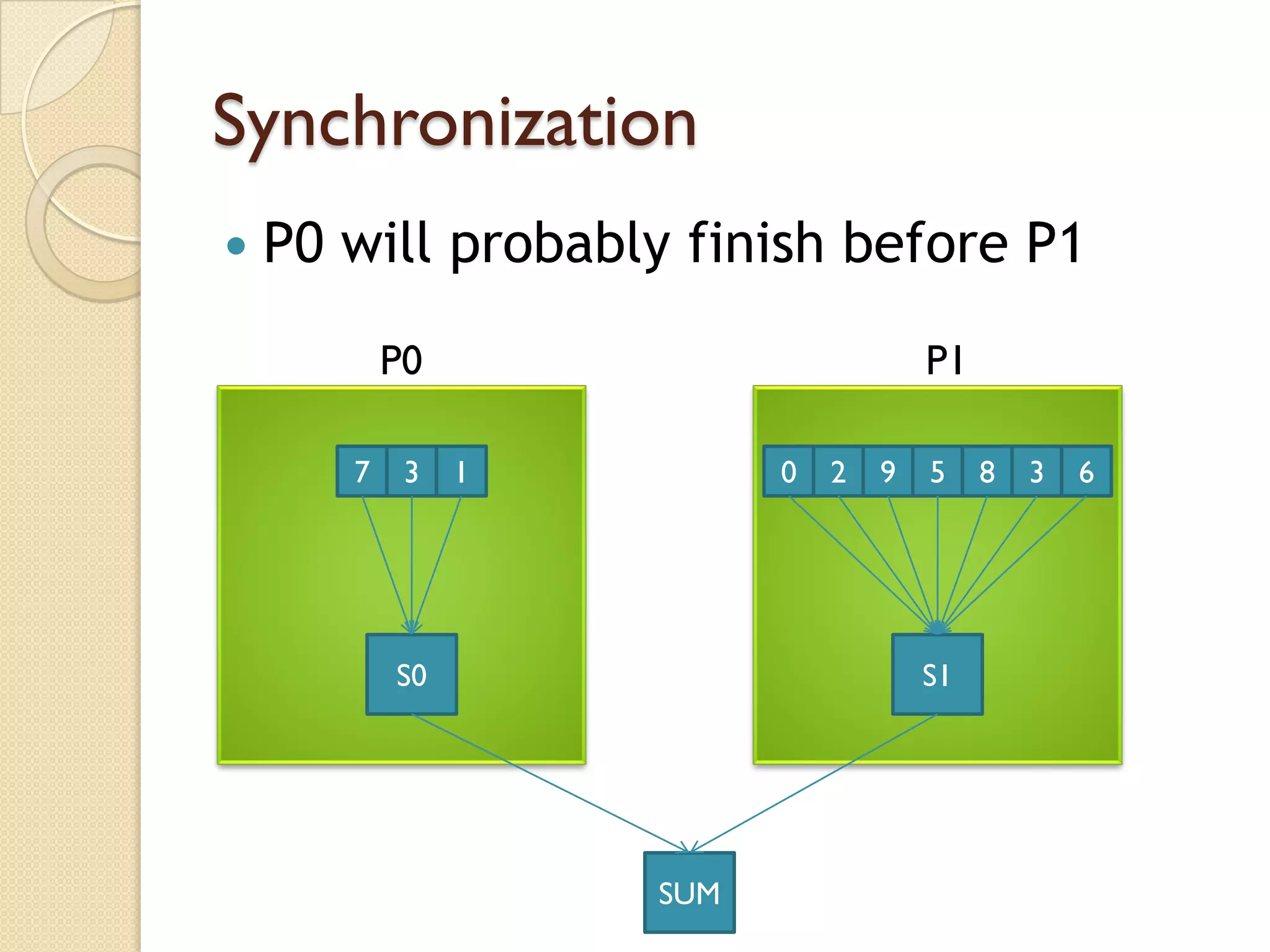

The document then presents an example lecture that parallelizes a simple array-sum problem to introduce core parallel-programming concepts. These are identifying data dependences, balancing the load by splitting the array across processors, and using synchronization to guarantee a correct result. It also briefly touches on the performance implications of parallelization.

![Example Problem: Array Sum
Add all the numbers in a large array
It has 100 million elements
int size = 100000000;
int array[] = {7,3,15,10,13,18,6,4,…};
What code should we write for a sequential program?](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-9-2048.jpg)

![Example Problem: Sequential
int sum = 0;
int i = 0;
for(i = 0; i < size; i++) {
sum += array[i]; //sum=sum+array[i];
}](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-10-2048.jpg)

![Back to Array Sum Example
Does the code have data dependence?
int sum = 0;
for(int i = 0; i < size; i++) {
sum += array[i]; //sum=sum+array[i];
}](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-22-2048.jpg)

![Back to Array Sum Example
Does the code have data dependence?
int sum = 0;
for(int i = 0; i < size; i++) {
sum += array[i]; //sum=sum+array[i];
}
Not so easy to see](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-23-2048.jpg)

![Back to Array Sum Example
Let’s unroll the loop:
int sum = 0;
sum += array[0]; //sum=sum+array[0];
sum += array[1]; //sum=sum+array[1];
sum += array[2]; //sum=sum+array[2];
sum += array[3]; //sum=sum+array[3];
…
Now we can see dependence!](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-24-2048.jpg)

![What Does the Code Look Like?
int numThreads = 2; //Assume one thread per core, and 2 cores
int sum = 0;
int i = 0;
int middleSum[numThreads];
int threadSetSize = size/numThreads;
//Each thread will execute this code with a different threadID
for( i = threadID*threadSetSize; i < (threadID+1)*threadSetSize; i++)
{
middleSum[threadID] += array[i];
}
//Only thread 0 will execute this code
if (threadID==0) {
for(i = 0; i < numThreads; i++) {
sum += middleSum[i];
}
}](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-29-2048.jpg)

![How Can We Fix The Code to GUARANTEE It Works Correctly?
int numThreads = 2; //Assume one thread per core, and 2 cores
int sum = 0;
int i = 0;
int middleSum[numThreads];
int threadSetSize = size/numThreads;
//Each thread will execute this code with a different threadID
for( i = threadID*threadSetSize; i < (threadID+1)*threadSetSize; i++)
{
middleSum[threadID] += array[i];
}
//Only thread 0 will execute this code
if (threadID==0) {
for(i = 0; i < numThreads; i++) {
sum += middleSum[i];
}
}](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-35-2048.jpg)

![Code with Synchronization Fixed
int numThreads = 2; //Assume one thread per core, & 2 cores
int sum = 0;
int i = 0;
int middleSum[numThreads];
int threadSetSize = size/numThreads;
//Each thread will execute this code with a different threadID
for( i = threadID*threadSetSize; i < (threadID+1)*threadSetSize; i++)
{
middleSum[threadID] += array[i];
}
waitForAllThreads(); //Wait for all threads
//Only thread 0 will execute this code
if (threadID==0) {
for(i = 0; i < numThreads; i++) {
sum += middleSum[i];
}
}](https://image.slidesharecdn.com/02exampleproblem-100409080452-phpapp02/75/Example-parallelize-a-simple-problem-37-2048.jpg)