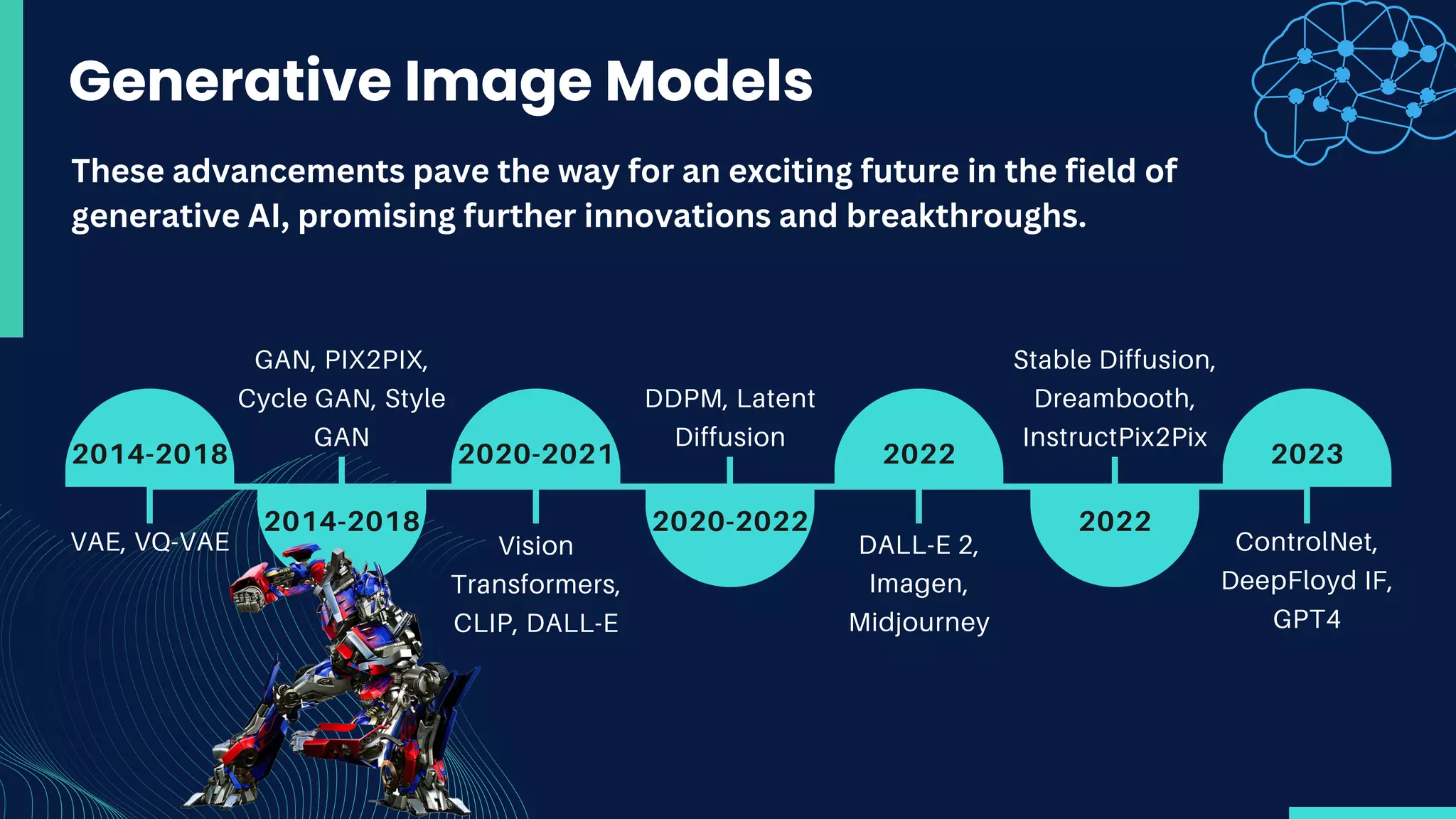

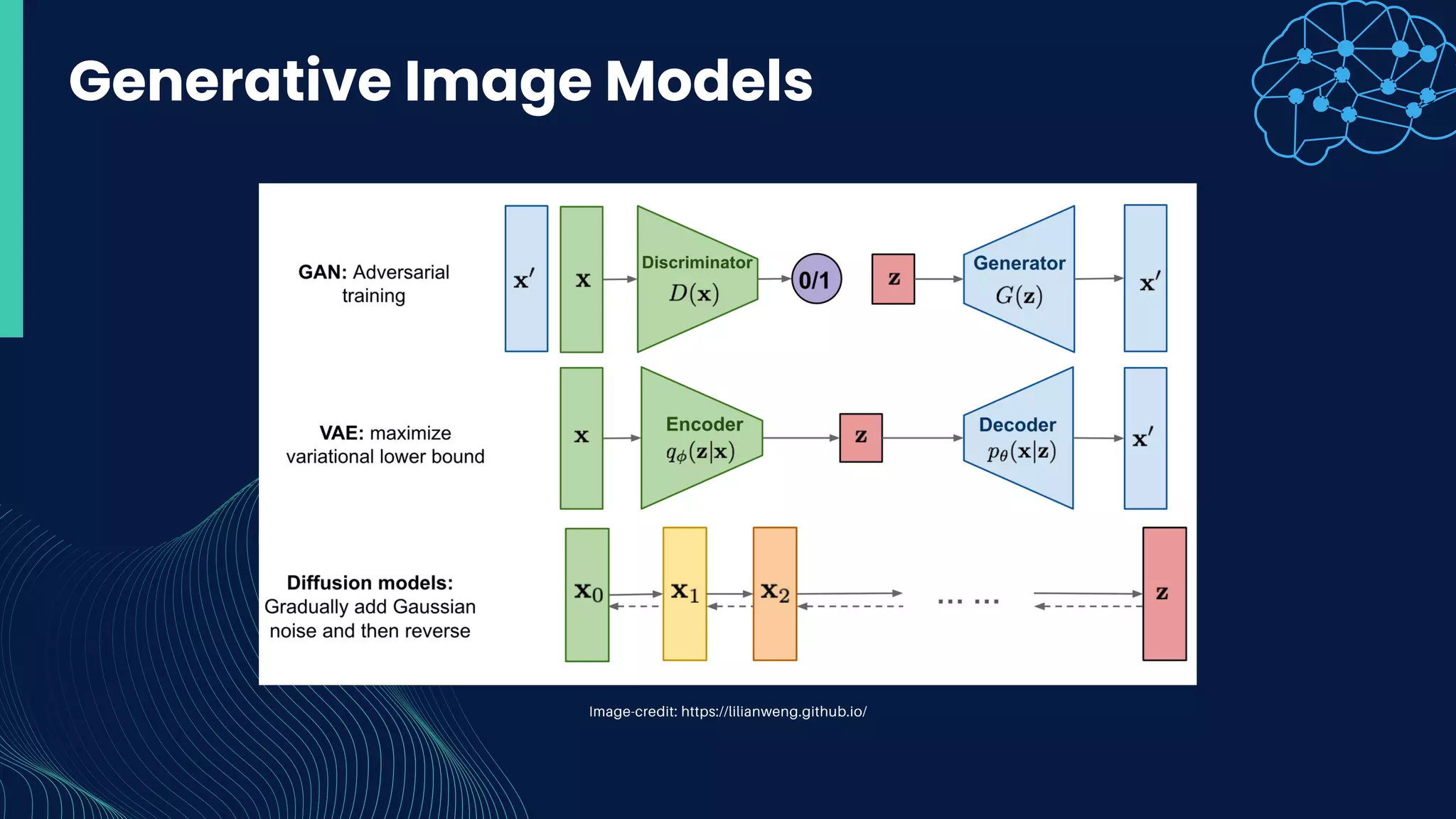

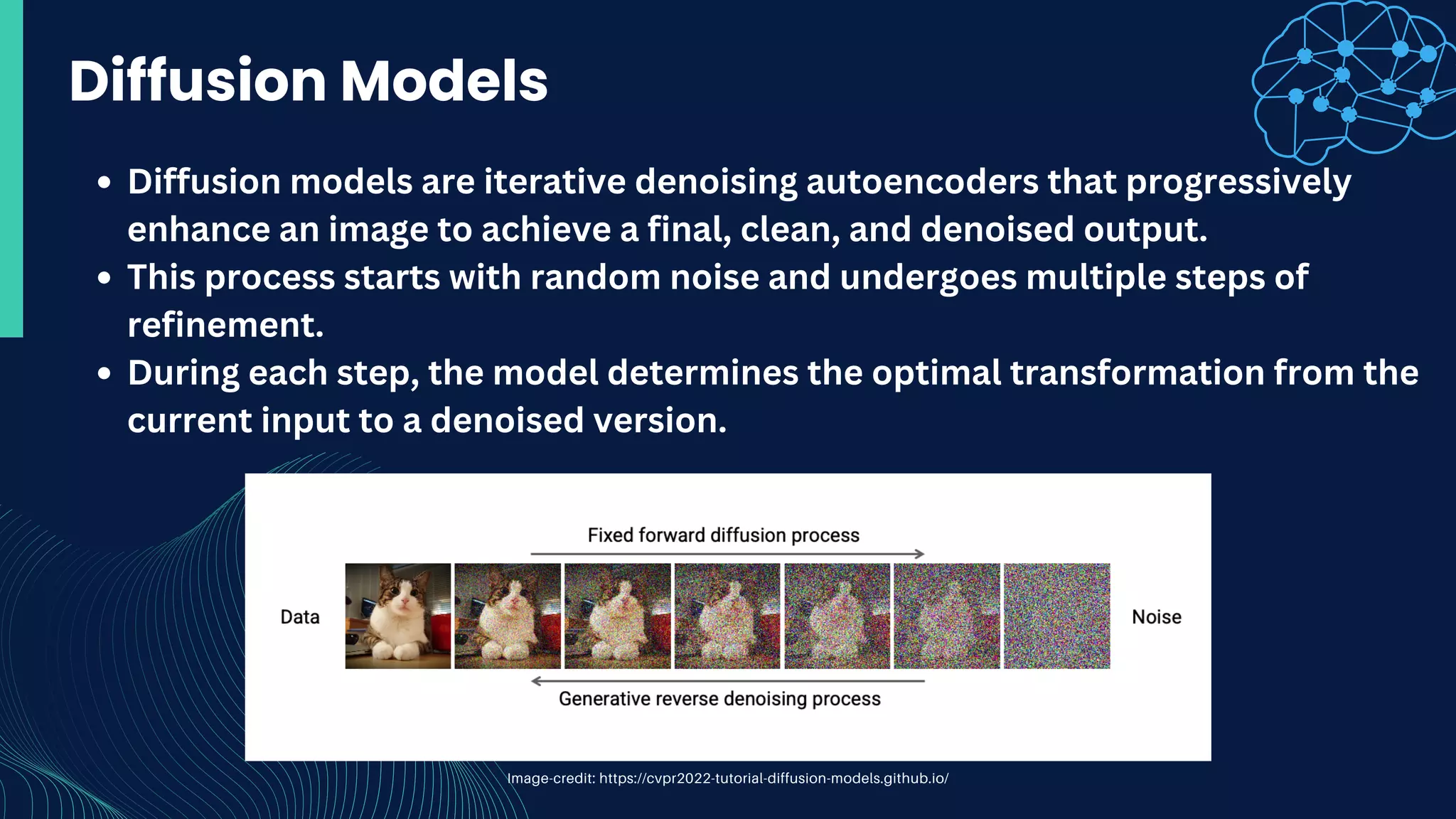

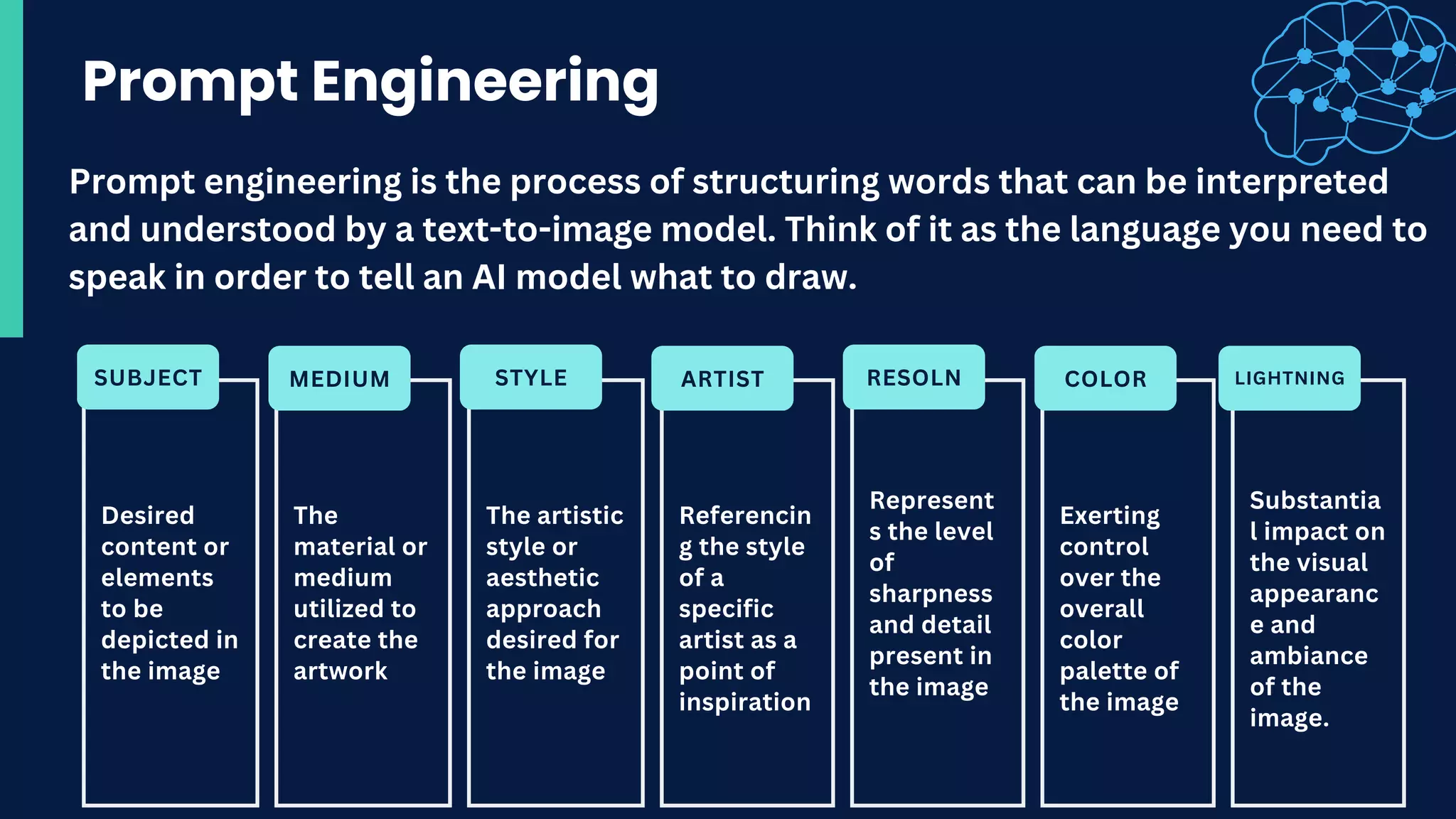

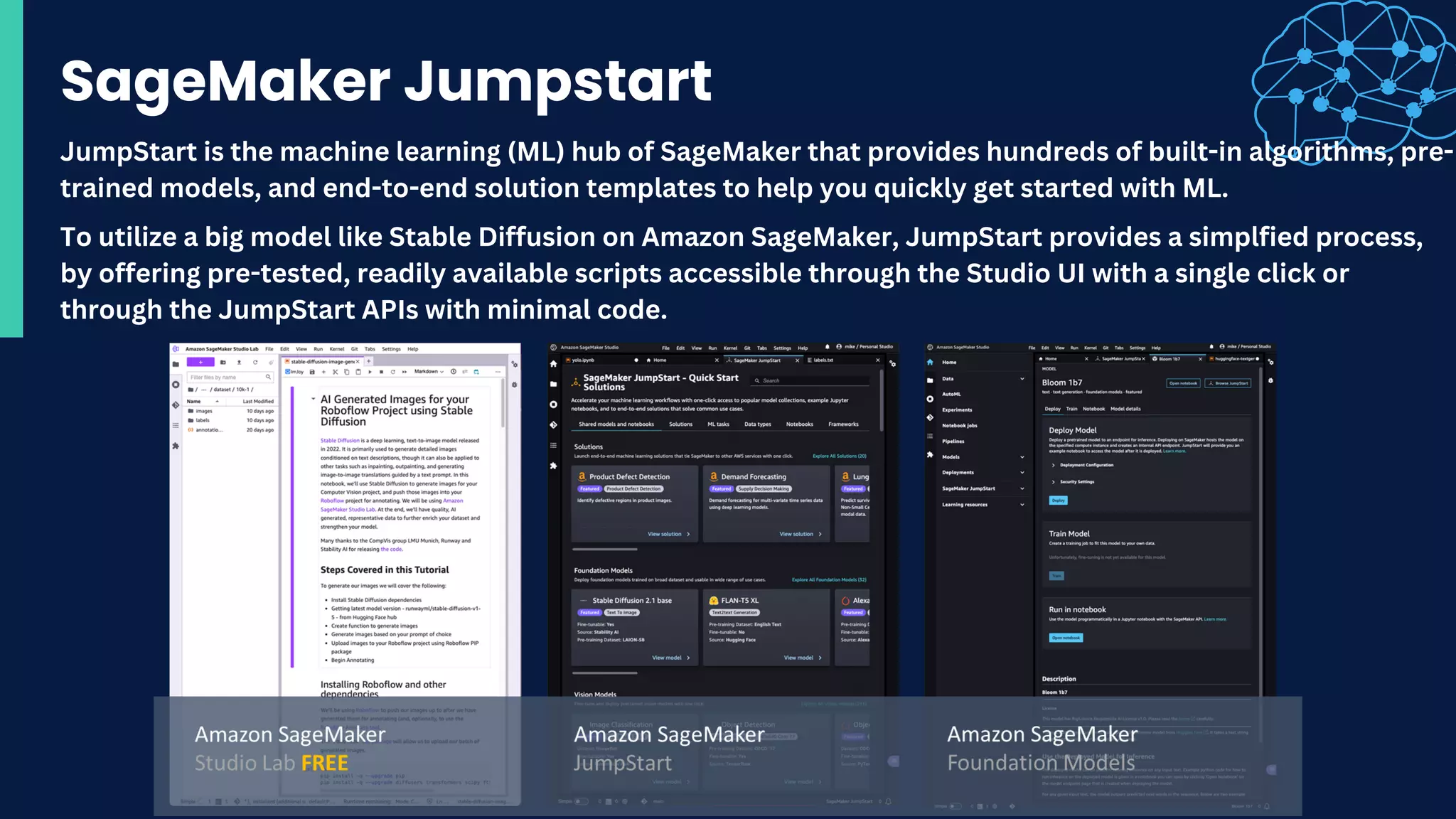

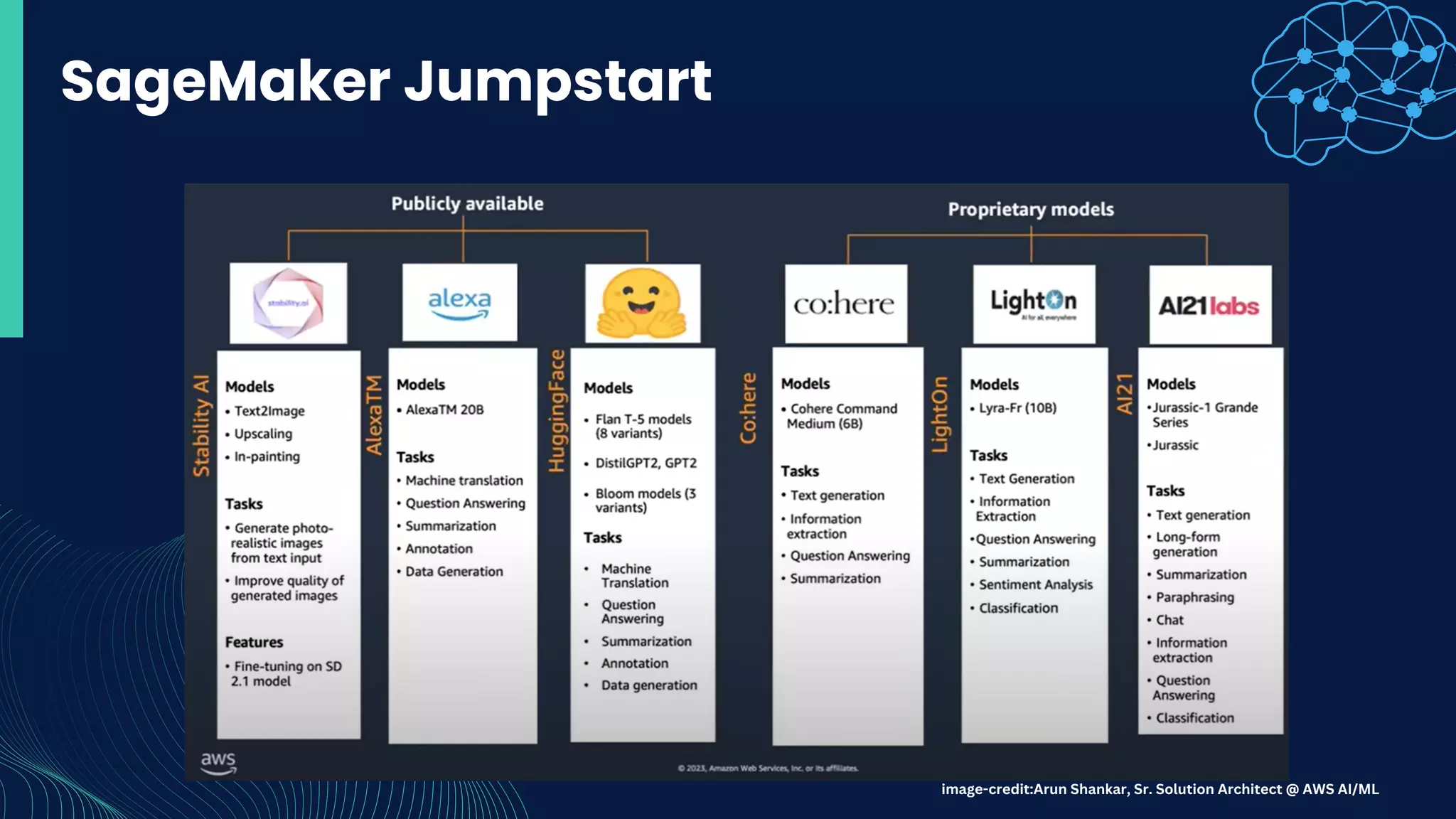

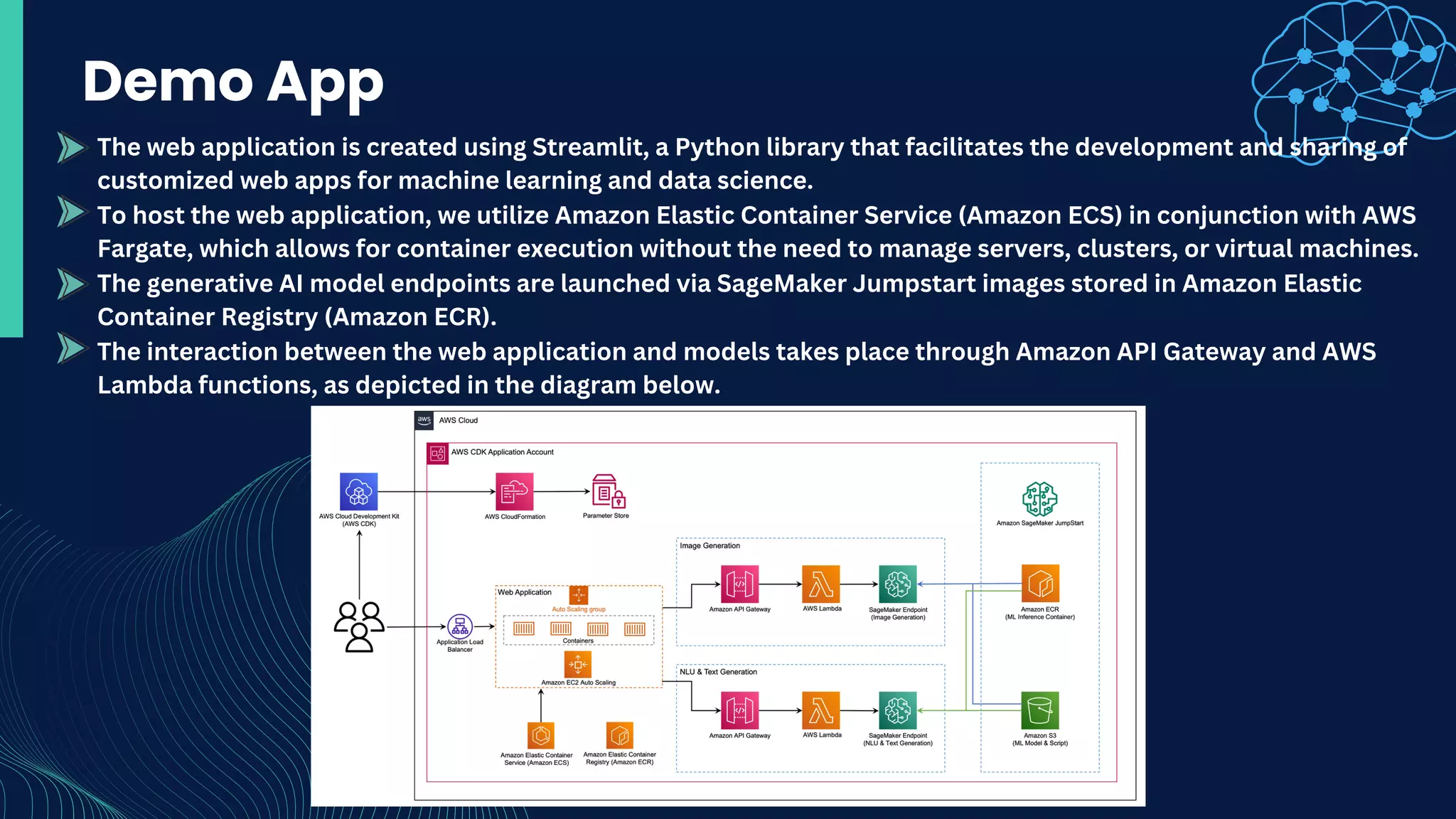

The document discusses generative AI, with a focus on diffusion models and how generative models create new content such as images and text. It outlines the Stable Diffusion process for generating high-quality images and covers prompt engineering as a way to interact effectively with AI models. It also introduces Amazon SageMaker JumpStart as a resource for using machine learning models, highlighting how easily applications can be deployed with AWS services.