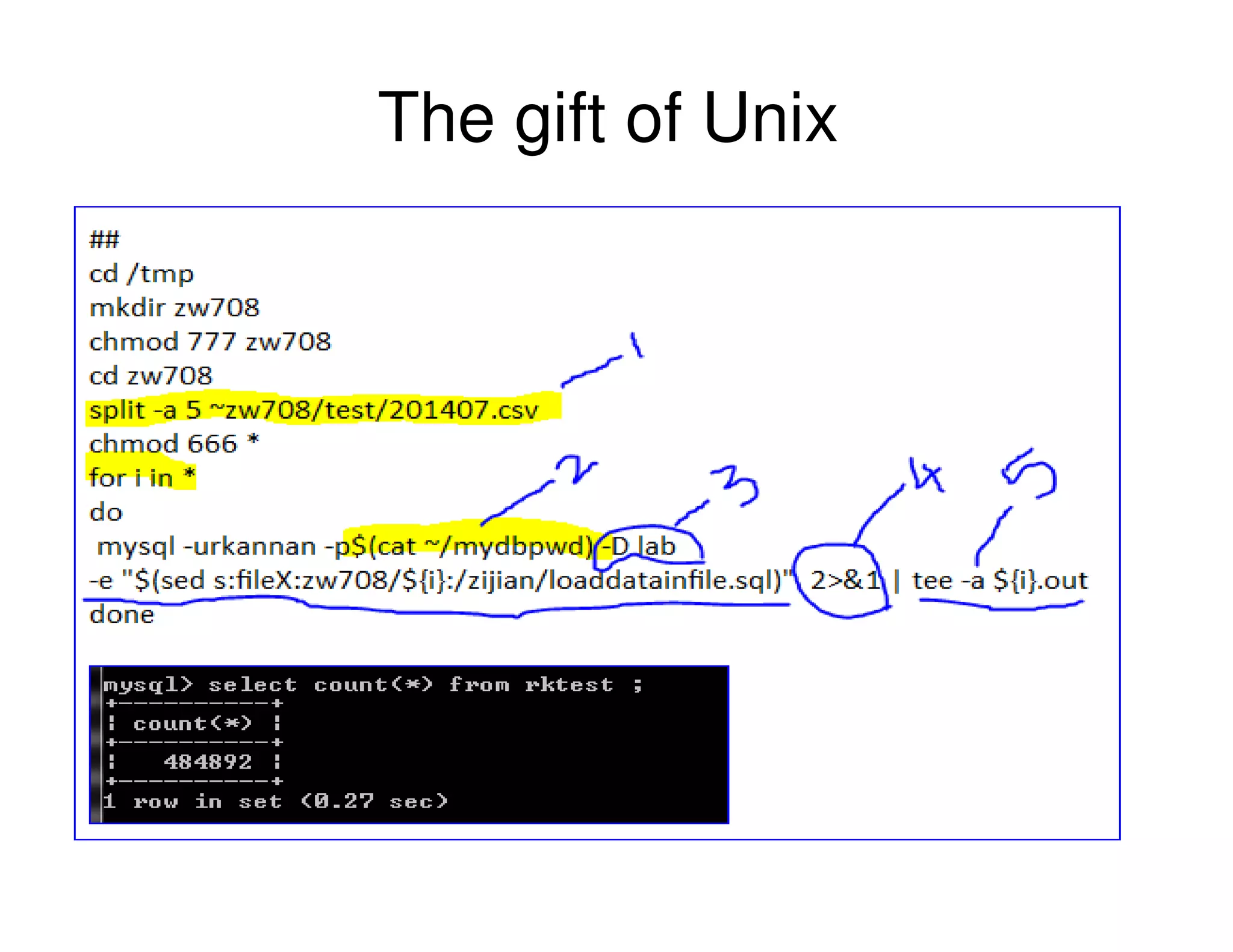

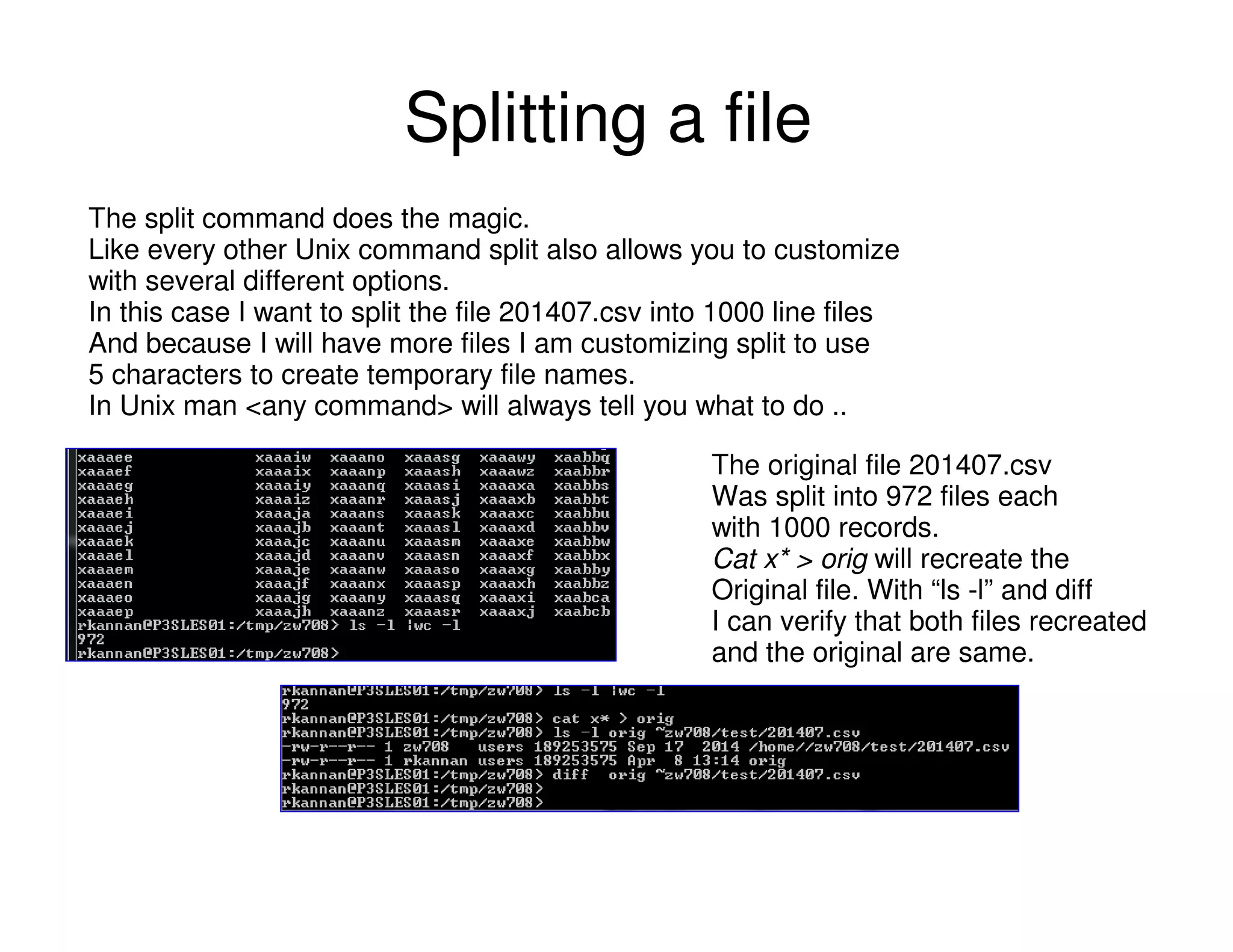

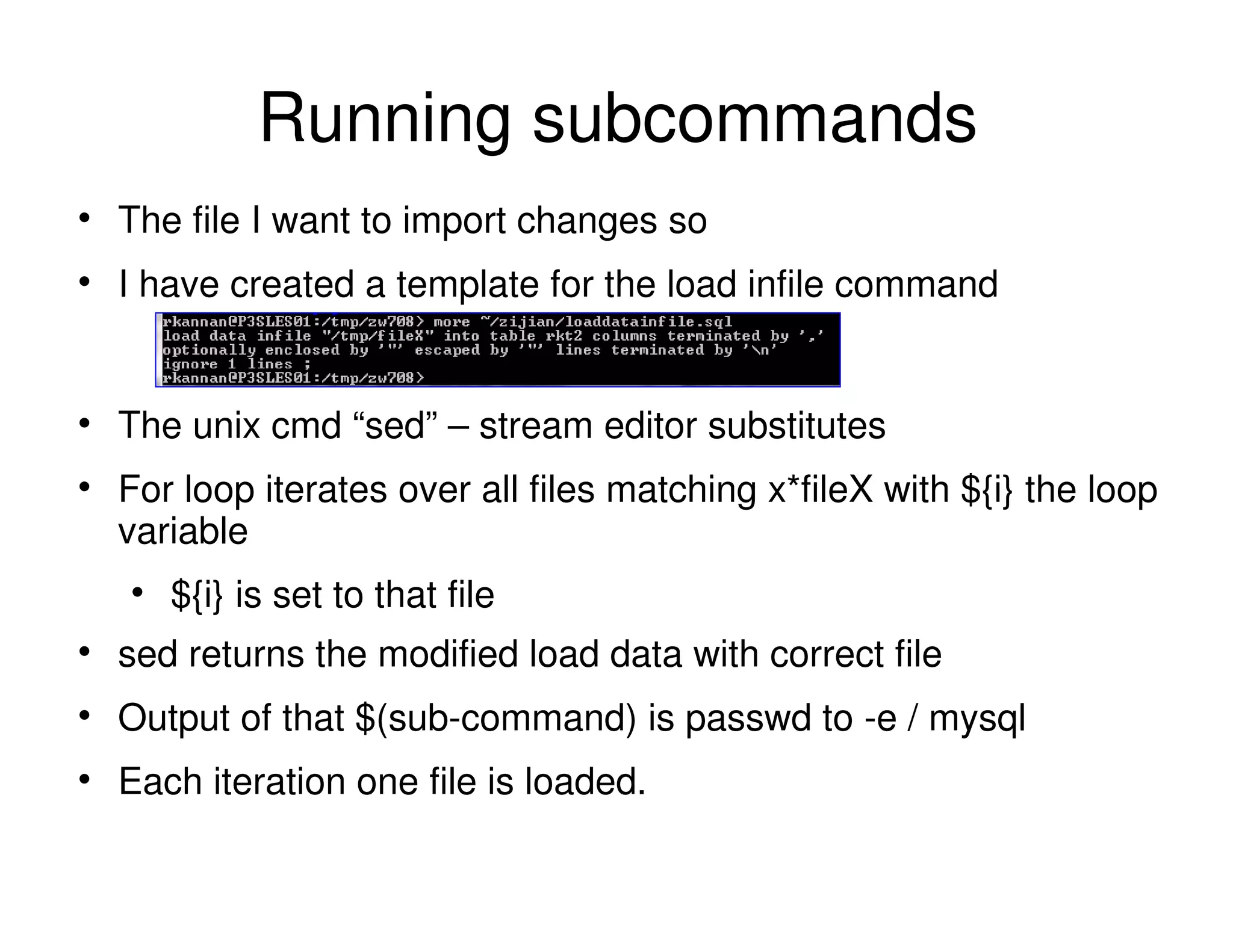

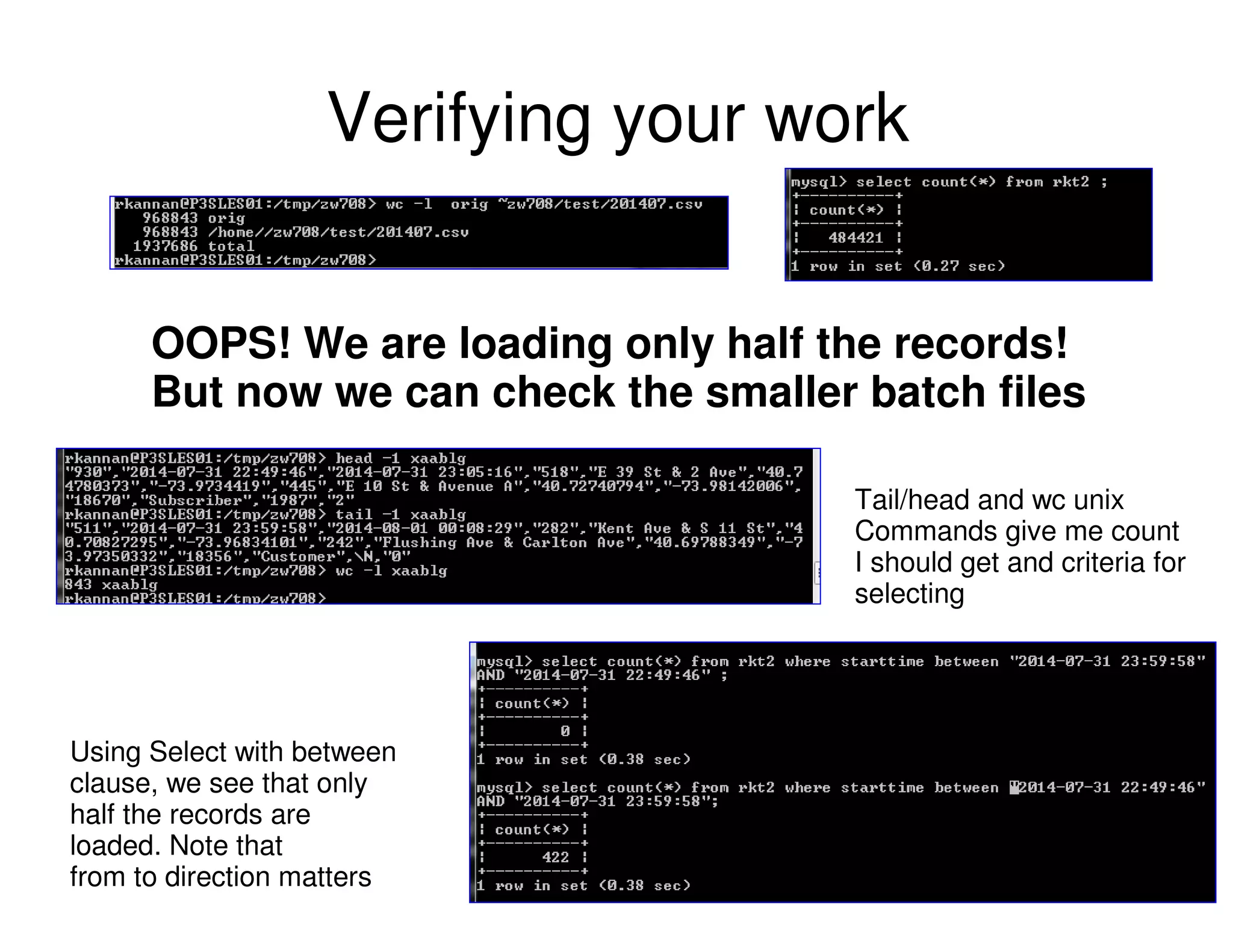

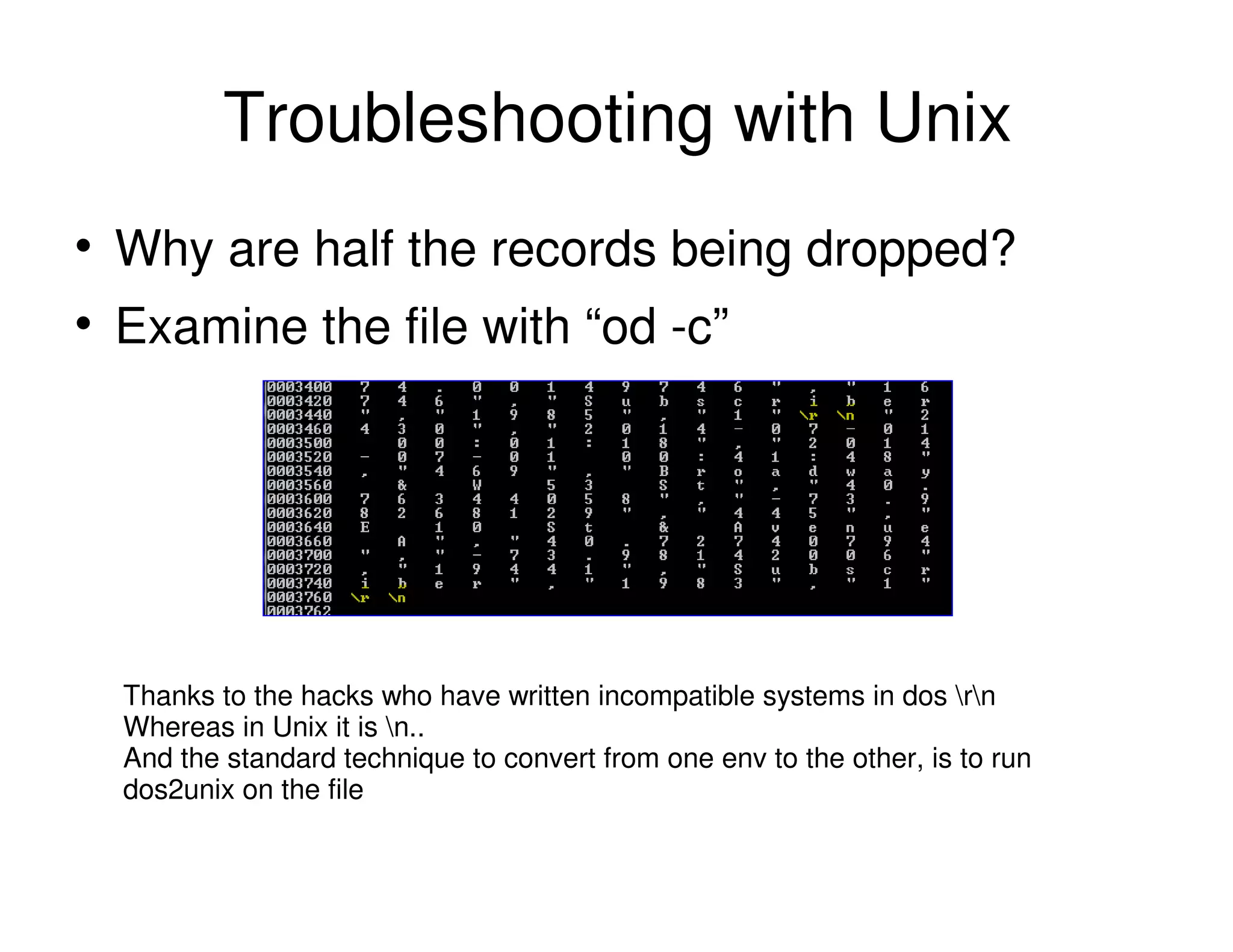

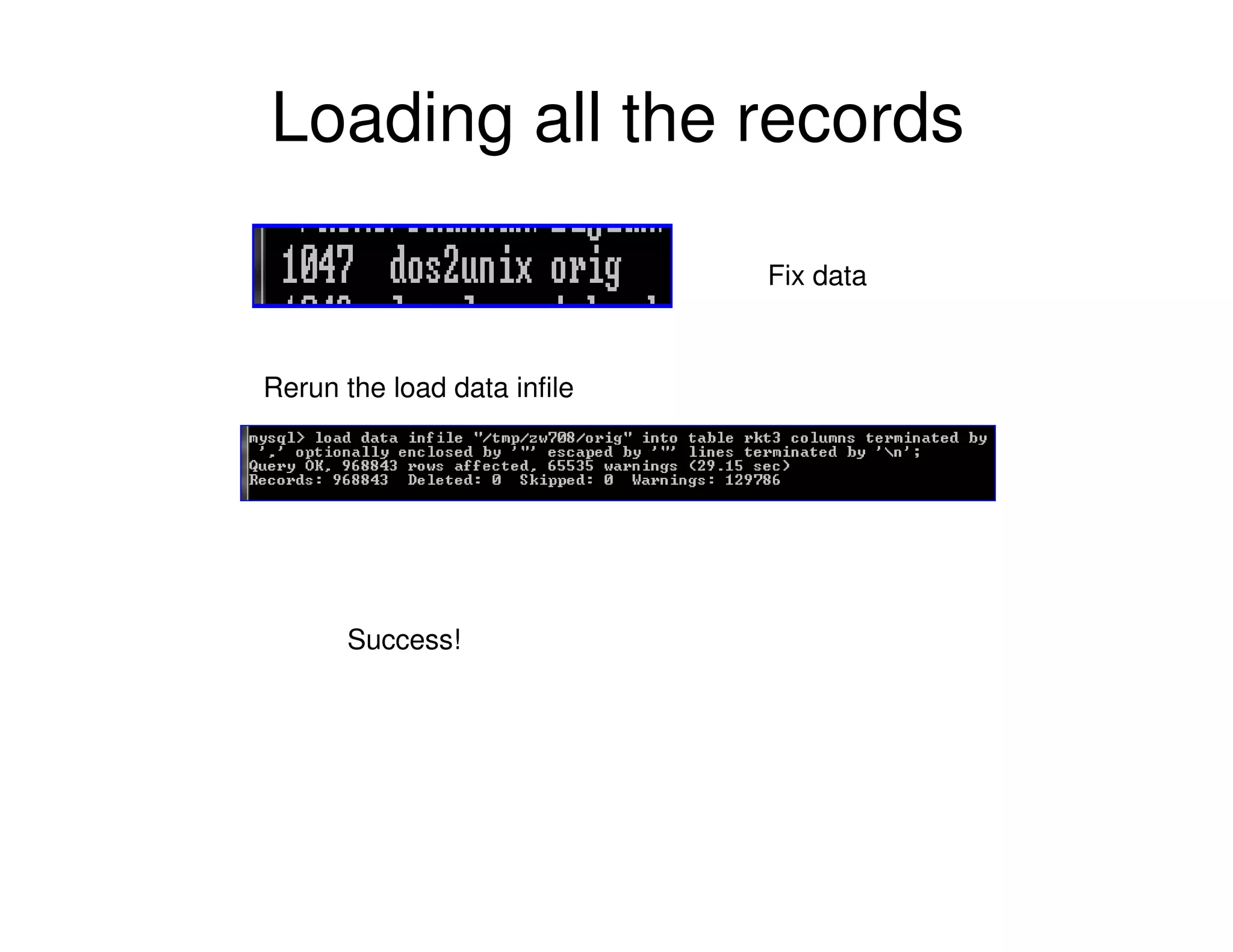

The document discusses strategies for efficiently importing a large file into a database while addressing challenges such as isolating errors and minimizing manual intervention. It details the use of Unix commands to split files, configure MySQL for file I/O, and automate the loading process through shell scripting. The author shares troubleshooting tips for handling data format issues and acknowledges contributors who have supported the project.
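The split-then-load workflow summarized above can be sketched roughly as follows. This is a minimal illustration, not the author's actual script: the function names, the chunk prefix, the `TABLE` and `DB` defaults, and the comma-separated field format are all assumptions made for the example. It also assumes the MySQL server and client both permit `LOAD DATA LOCAL INFILE` (the `local_infile` setting), which may or may not be the file I/O configuration the document refers to.

```shell
#!/bin/sh
# Sketch only: every name below (functions, my_table, my_db) is a placeholder.

# Split INPUT into pieces of LINES lines each, named PREFIXaa, PREFIXab, ...
split_into_chunks() {
    split_input=$1
    split_lines=$2
    split_prefix=$3
    split -l "$split_lines" "$split_input" "$split_prefix"
}

# Print a LOAD DATA statement for one chunk.
# Assumes comma-separated fields and newline-terminated rows.
load_statement() {
    stmt_file=$1
    stmt_table=$2
    # The '\n' argument is printed literally, so MySQL sees the two characters \n.
    printf "LOAD DATA LOCAL INFILE '%s' INTO TABLE %s FIELDS TERMINATED BY ',' LINES TERMINATED BY '%s';\n" \
        "$stmt_file" "$stmt_table" '\n'
}

# Run LOADER on every chunk matching PREFIX*. A chunk whose load fails is
# recorded in FAILED_LIST and the loop continues, so one bad chunk does not
# stop the whole import and can be inspected on its own afterwards.
load_all_chunks() {
    all_prefix=$1
    all_loader=$2
    all_failed=$3
    : > "$all_failed"
    for all_chunk in "$all_prefix"*; do
        [ -f "$all_chunk" ] || continue
        if ! "$all_loader" "$all_chunk"; then
            echo "$all_chunk" >> "$all_failed"
        fi
    done
}

# Example loader: pipes the statement for one chunk into the mysql client.
# TABLE and DB are hypothetical environment variables with placeholder defaults.
mysql_loader() {
    load_statement "$1" "${TABLE:-my_table}" | mysql --local-infile=1 "${DB:-my_db}"
}
```

A run might look like `split_into_chunks big.csv 100000 chunk_` followed by `load_all_chunks chunk_ mysql_loader failed.txt`, where `big.csv` and the chunk size are illustrative. Keeping the failed-chunk list in a file that does not share the chunk prefix stops the loop from treating it as a chunk, and leaves only a short list of small files to inspect for data format problems instead of the original large file.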