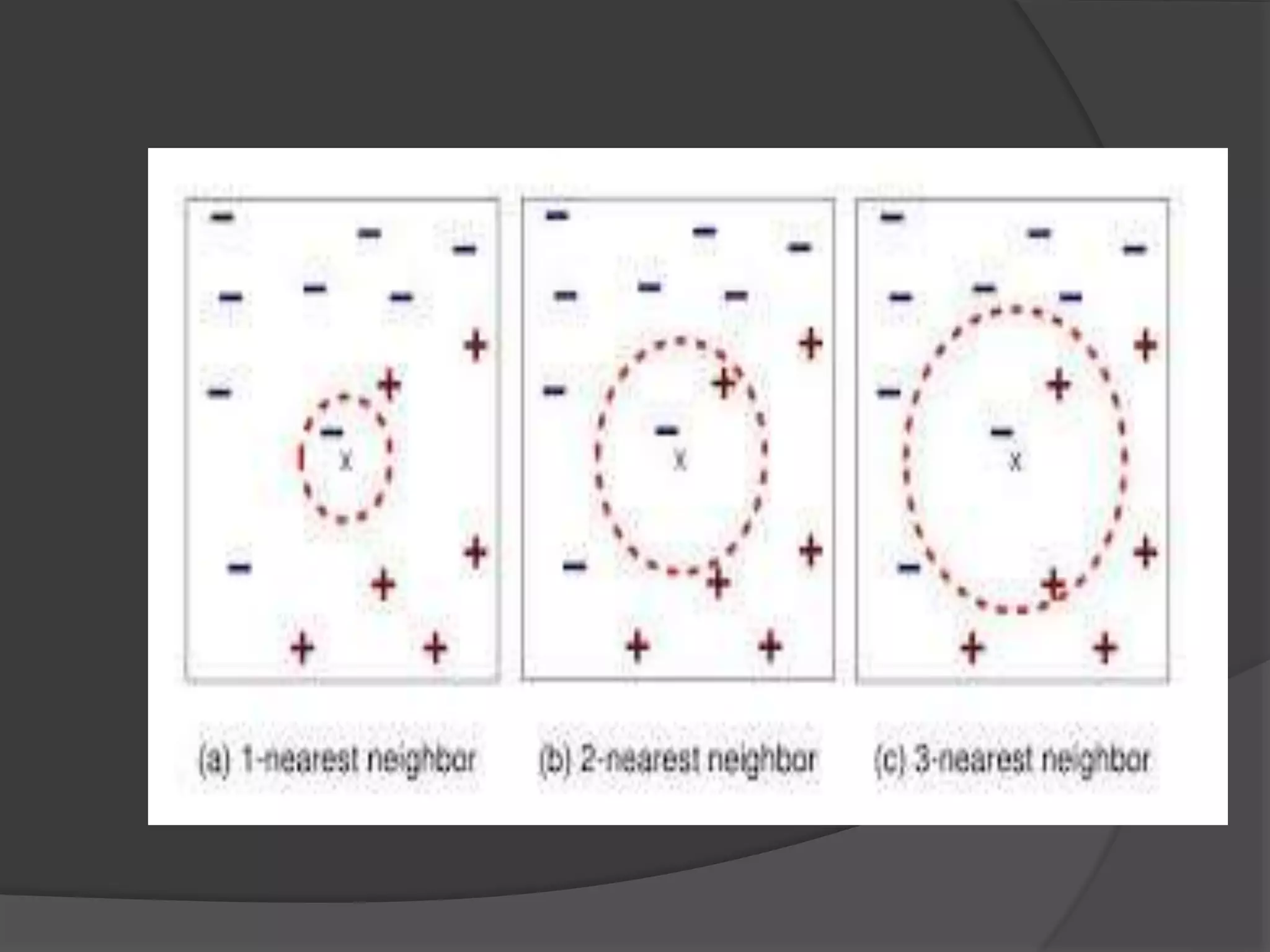

Lazy learning is a machine learning approach in which generalization from the training data is deferred until a query is made, in contrast to eager learning, where the system generalizes from the training data before any query arrives. K-nearest neighbors and case-based reasoning are examples of lazy learners: they store the training data and classify each new instance based on its similarity to the stored examples. Case-based reasoning, specifically, stores the solutions to prior problems and solves a new problem by retrieving similar past cases and combining their solutions.
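
The contrast with eager learning is easiest to see in code. The following is a minimal sketch of a k-nearest-neighbors classifier, not a reference implementation: the class name `LazyKNN`, the toy data, the Euclidean distance, and the majority-vote rule are all assumptions chosen for illustration. Note that `fit` only memorizes the data, while all distance computation and voting happen inside `predict`, at query time.

```python
from collections import Counter
import math


class LazyKNN:
    """Illustrative lazy learner (hypothetical name): stores data, defers all work to queries."""

    def __init__(self, k=3):
        self.k = k
        self.points = []
        self.labels = []

    def fit(self, points, labels):
        # "Training" only memorizes the examples; no model is built here.
        self.points = list(points)
        self.labels = list(labels)
        return self

    def predict(self, query):
        # Generalization happens now: rank stored examples by distance to the query.
        ranked = sorted(
            (math.dist(point, query), label)
            for point, label in zip(self.points, self.labels)
        )
        # Majority vote among the k closest stored examples.
        nearest_labels = [label for _, label in ranked[: self.k]]
        return Counter(nearest_labels).most_common(1)[0][0]


# Toy data (made up for this sketch): two well-separated clusters.
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.5), (8.5, 9.5)]
train_labels = ["a", "a", "a", "b", "b", "b"]

model = LazyKNN(k=3).fit(train_points, train_labels)
prediction_near_a = model.predict((1.2, 1.5))
prediction_near_b = model.predict((8.7, 9.0))
```

In this sketch, storing the data is cheap but every query scans all stored examples, which is the usual trade-off of a lazy learner: little or no training cost in exchange for more work per query.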