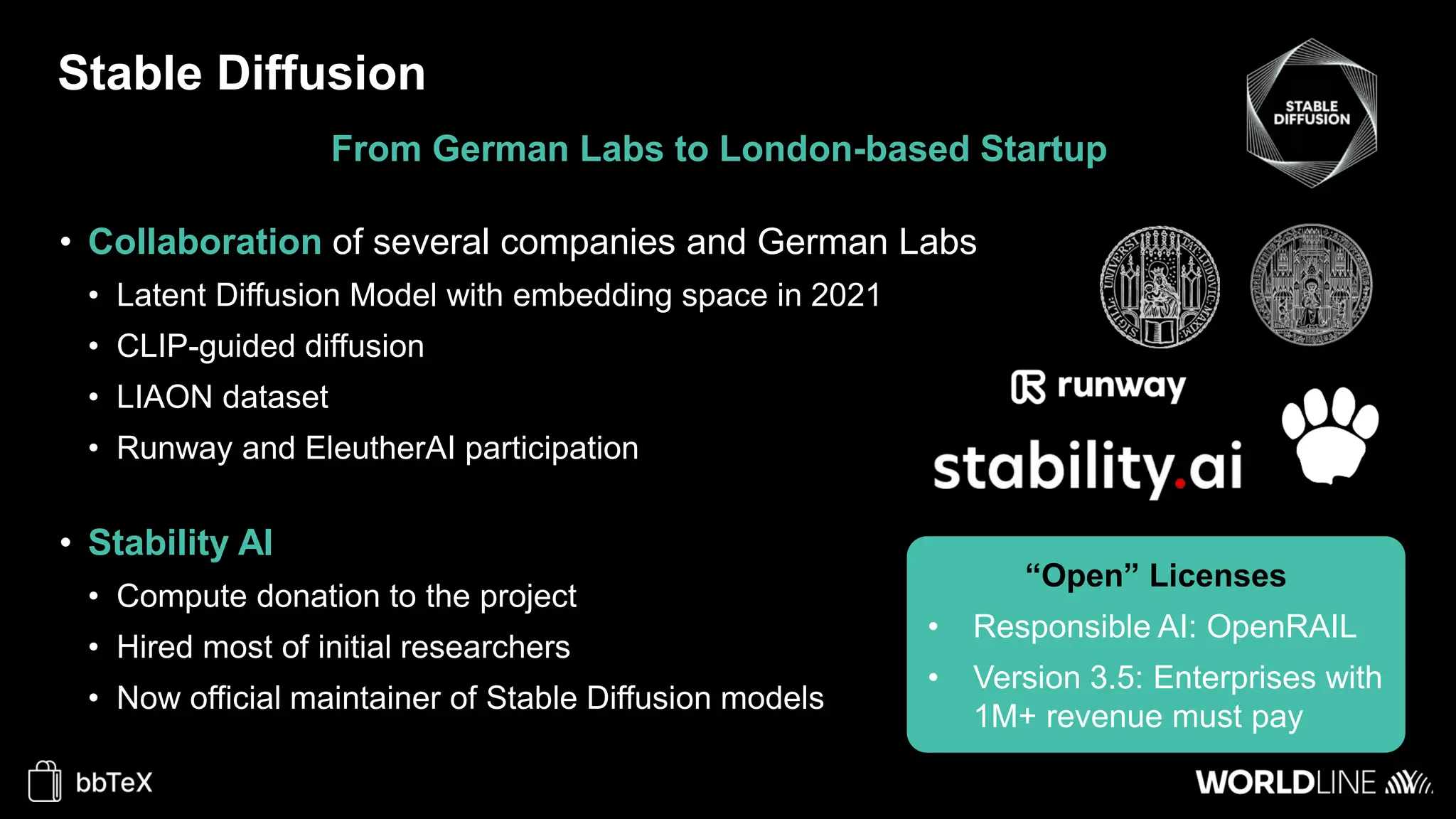

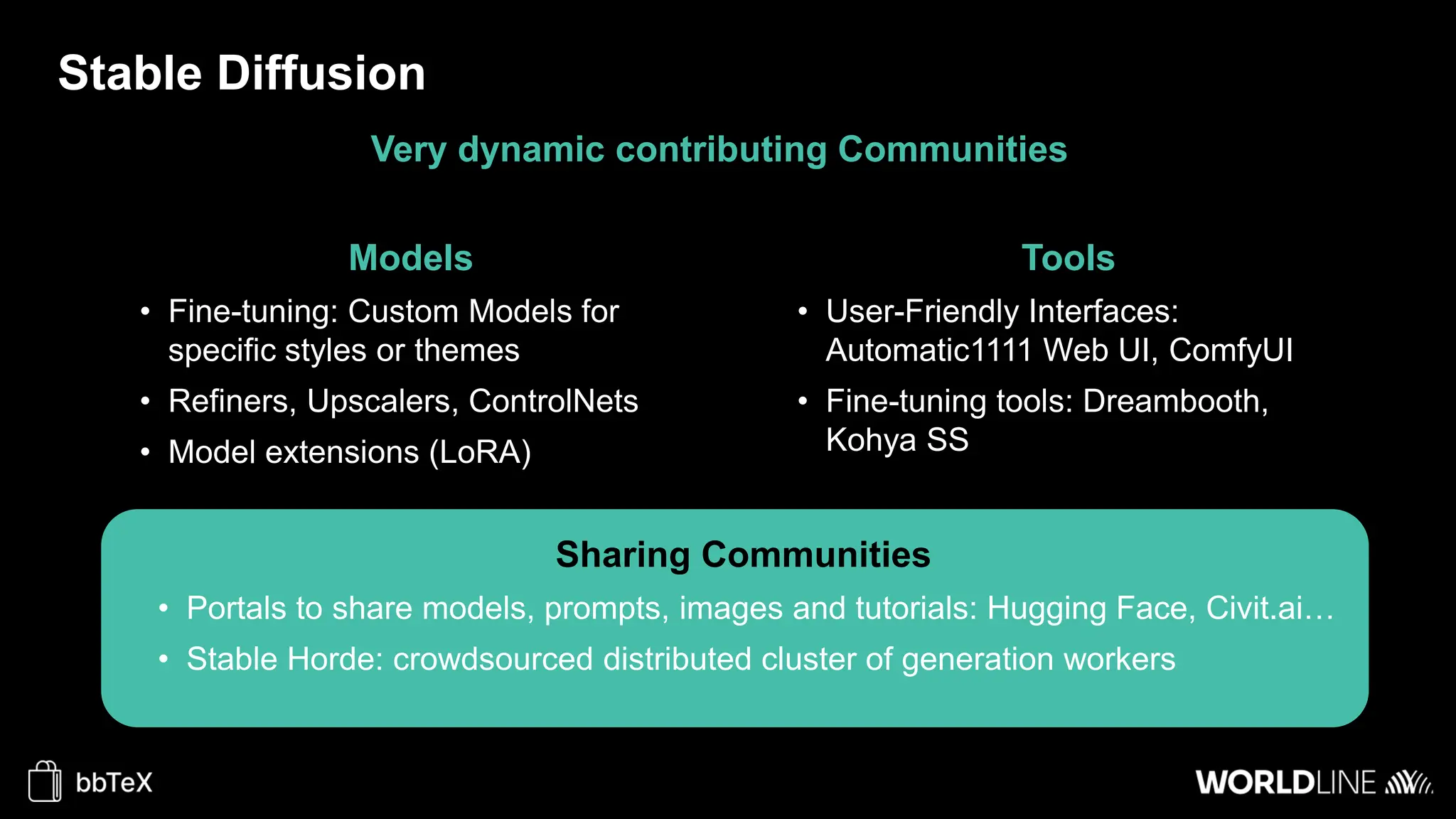

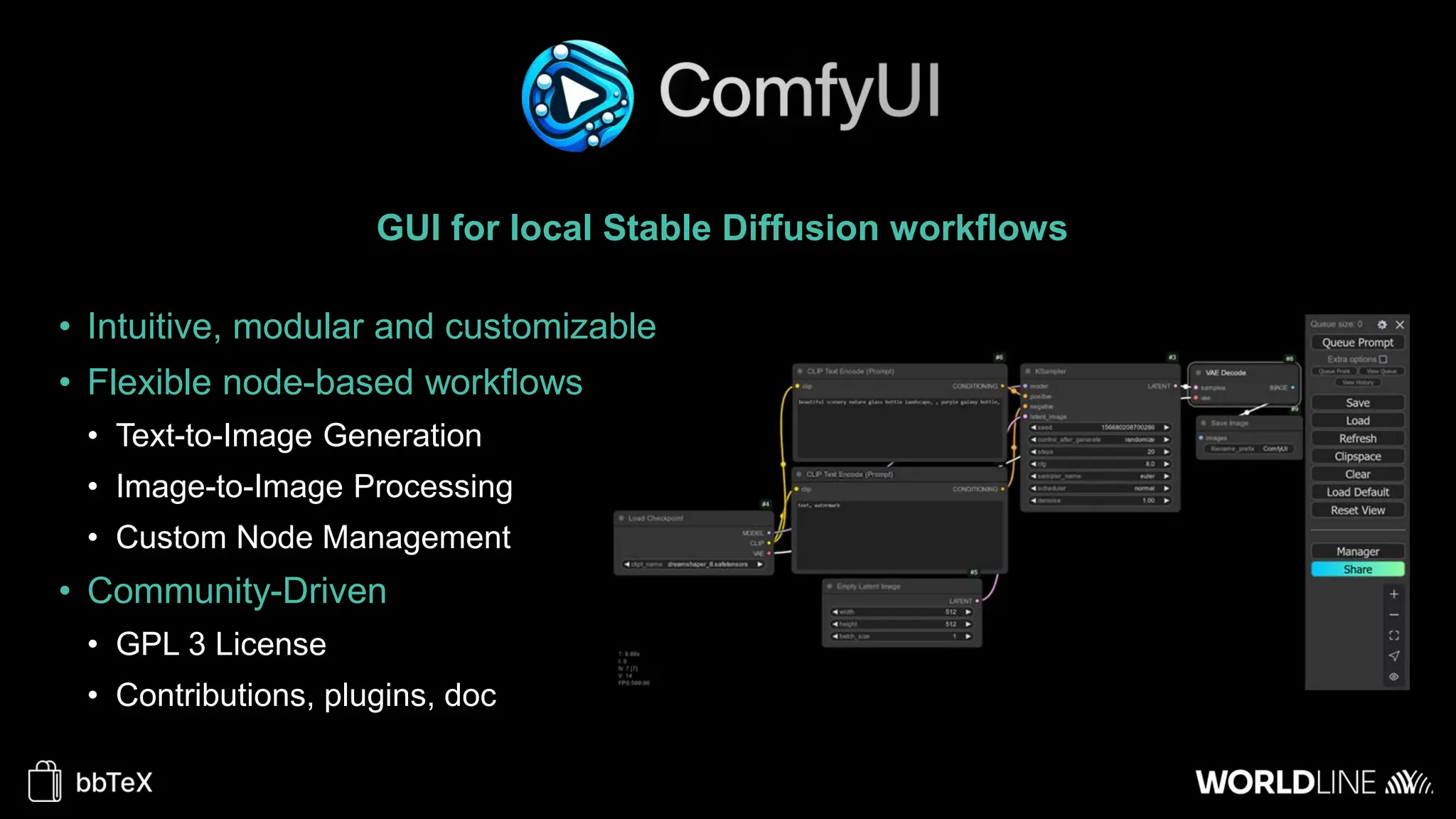

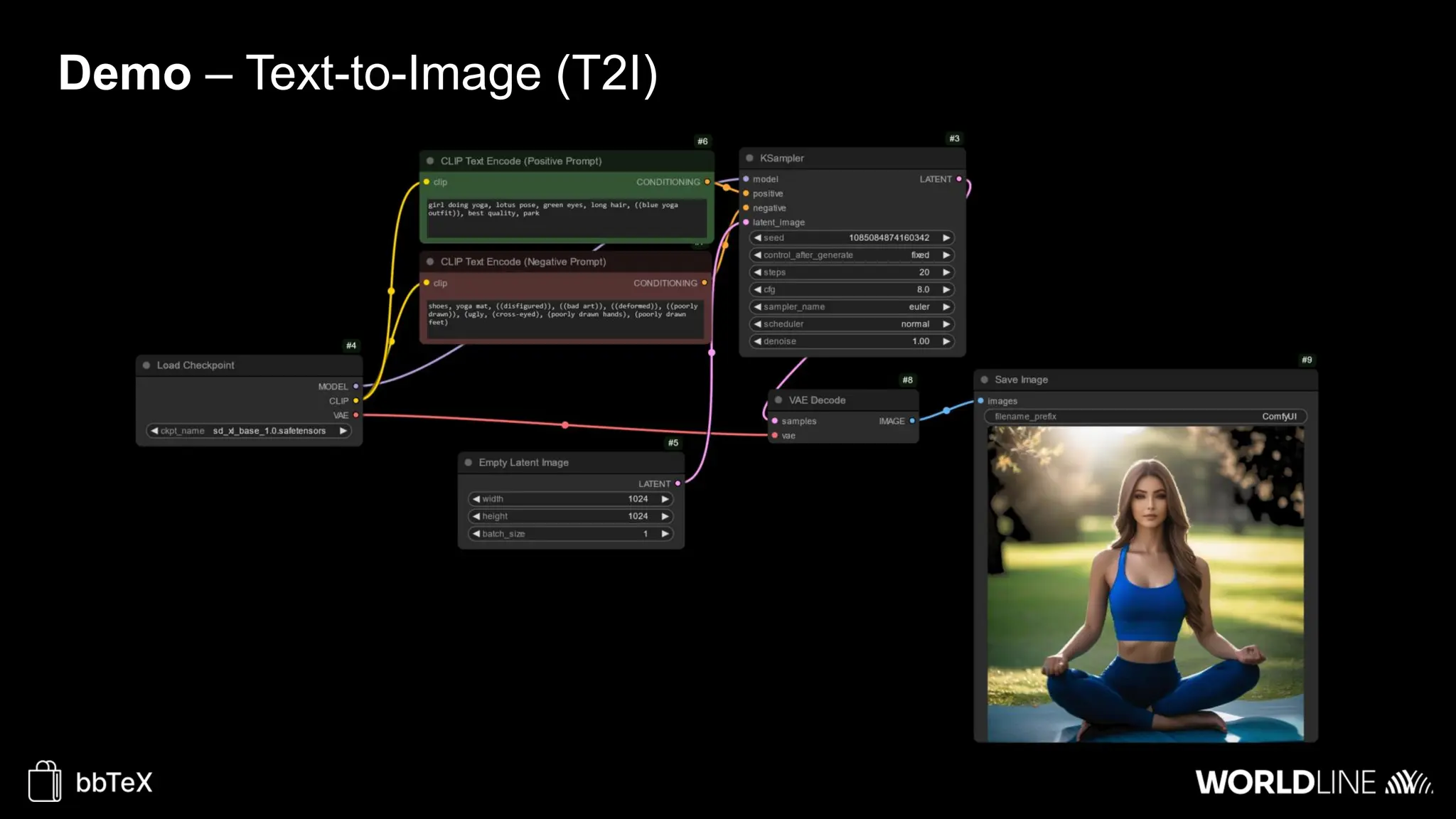

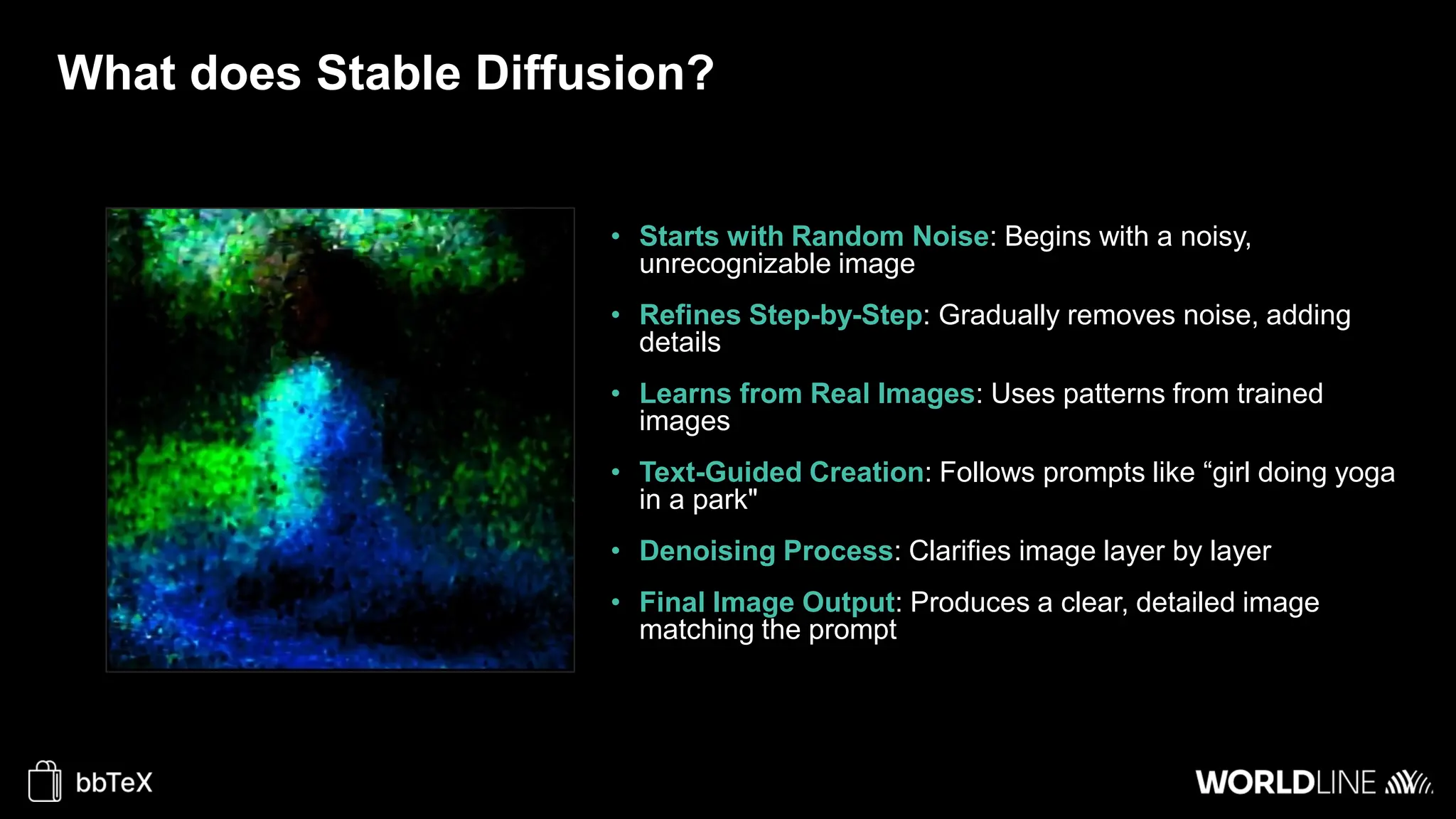

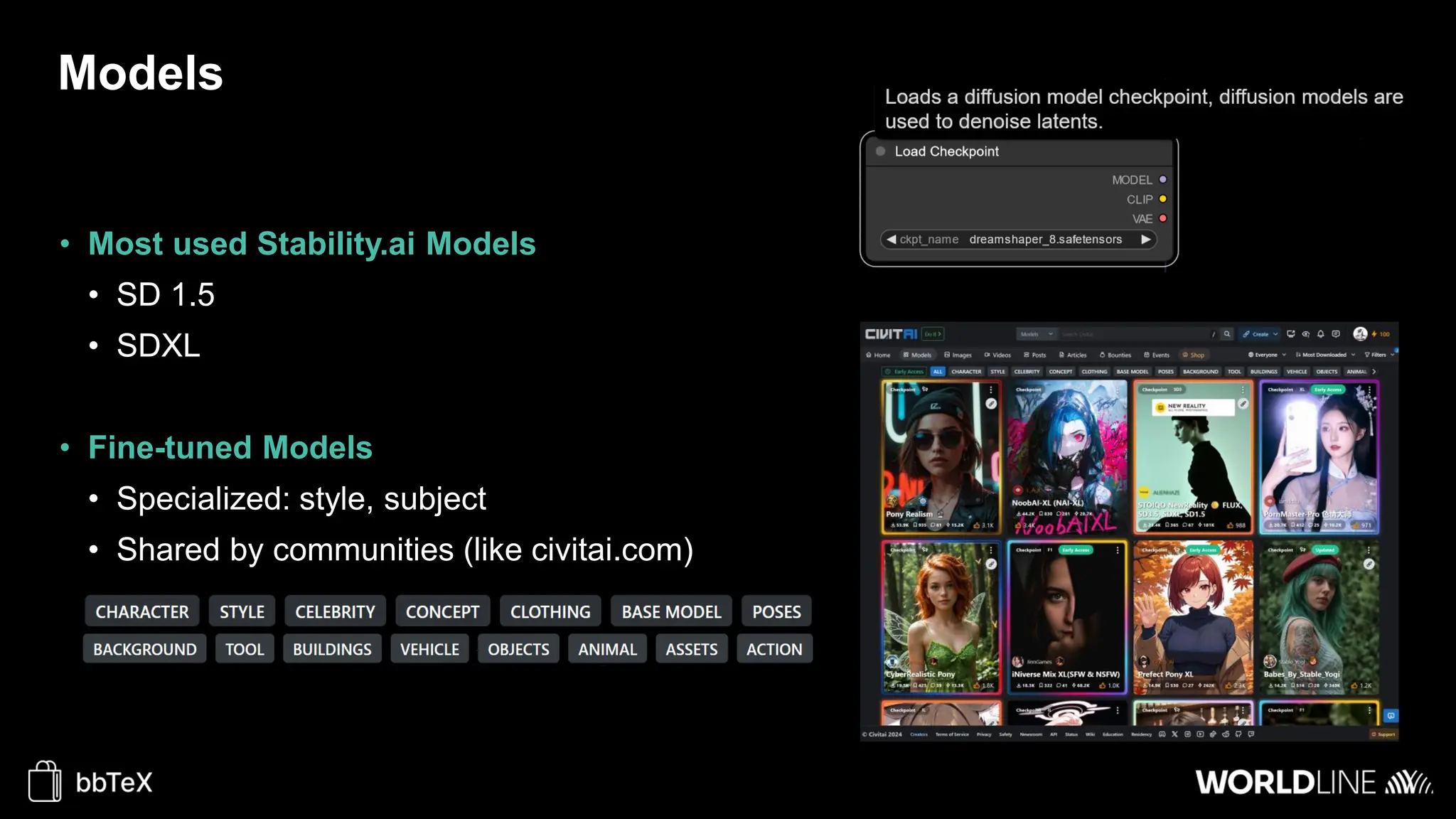

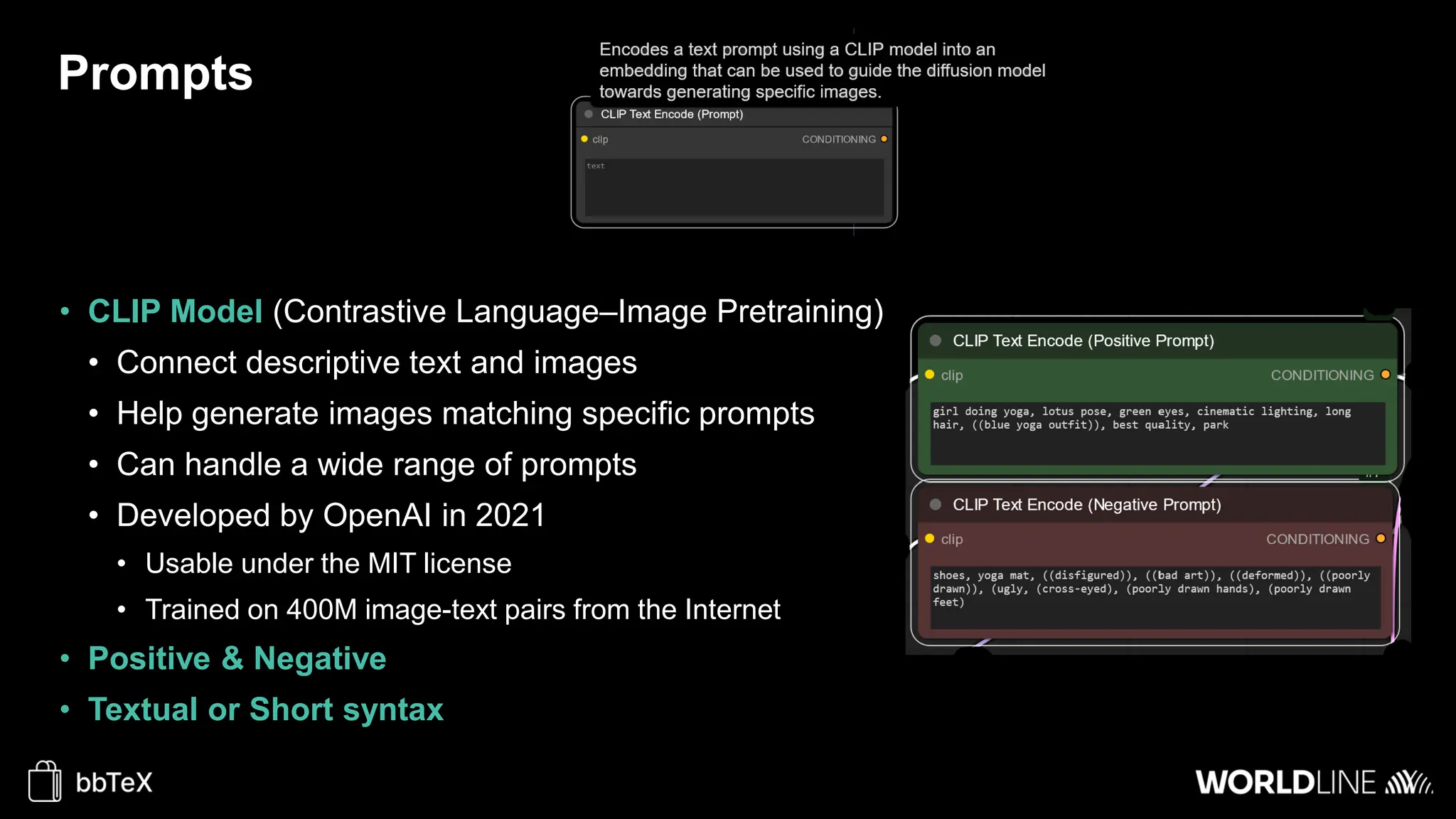

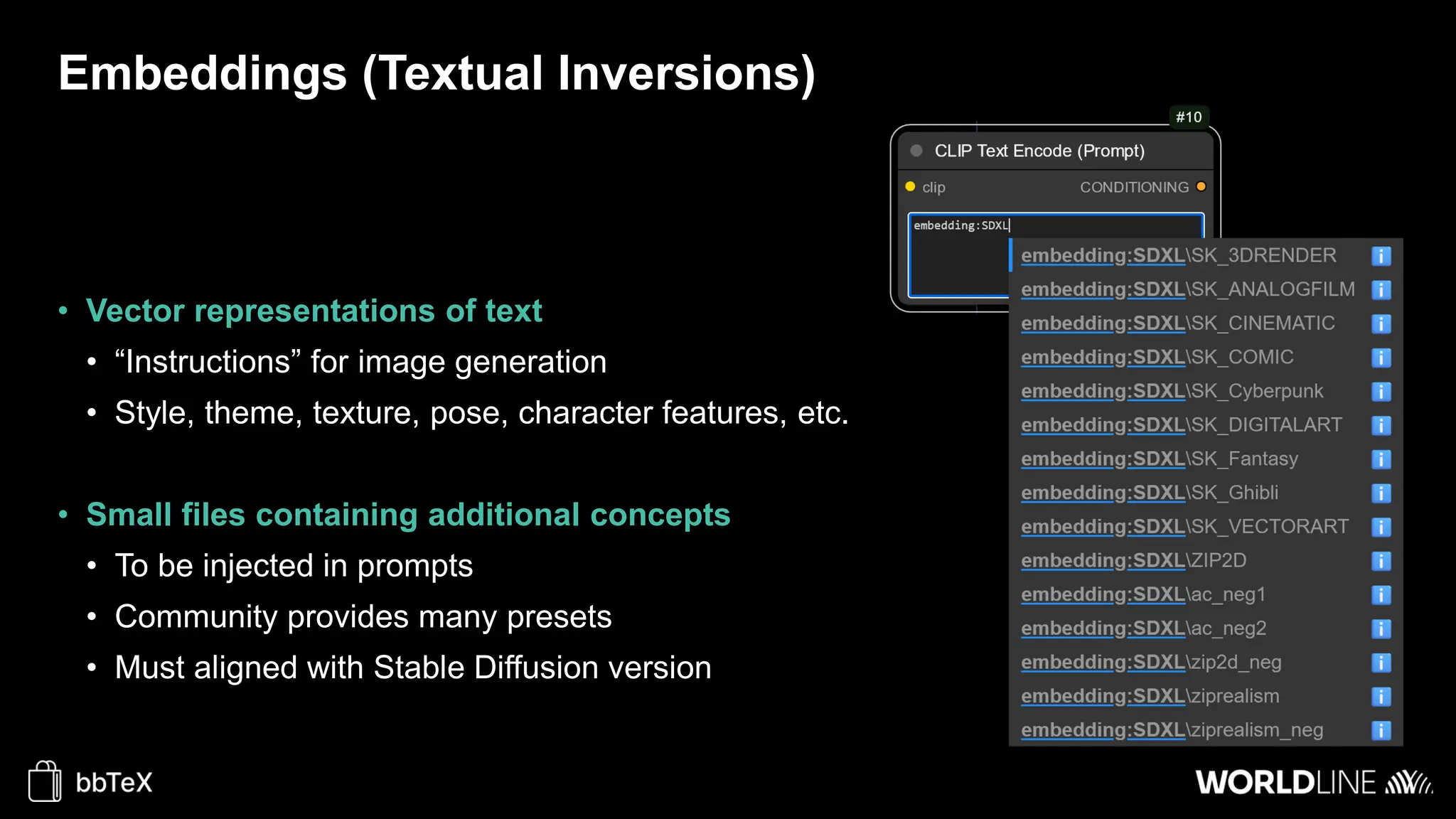

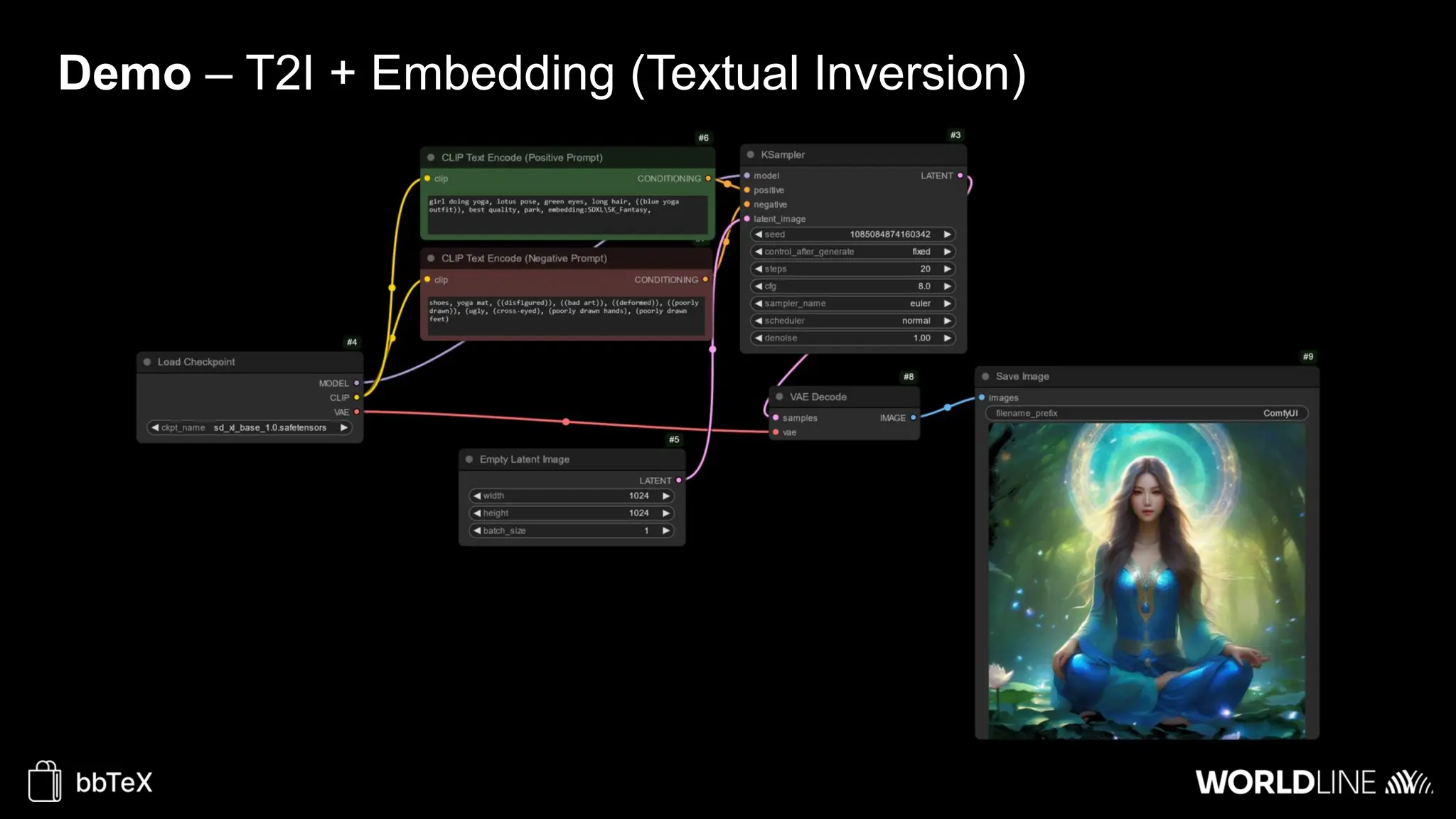

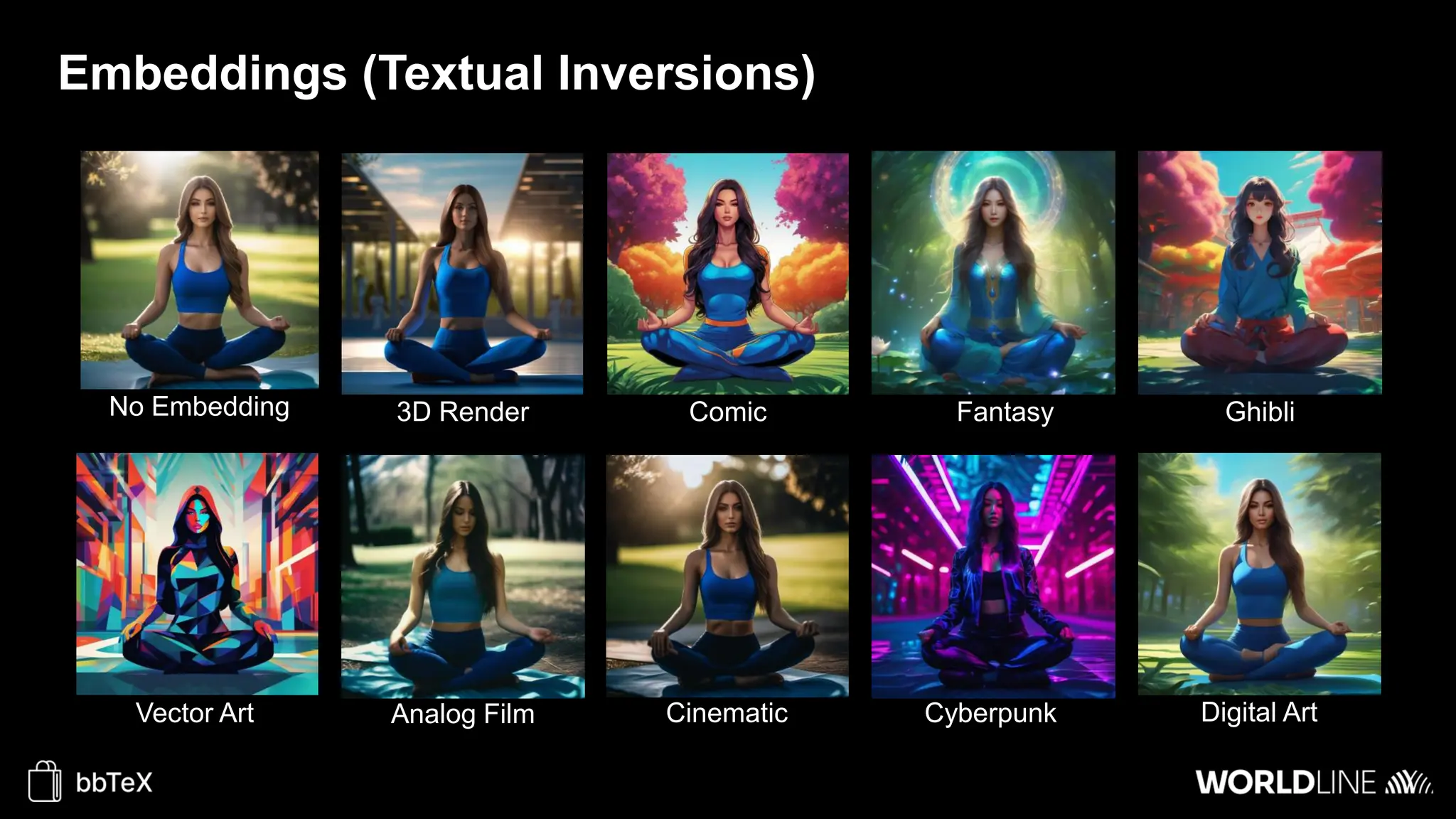

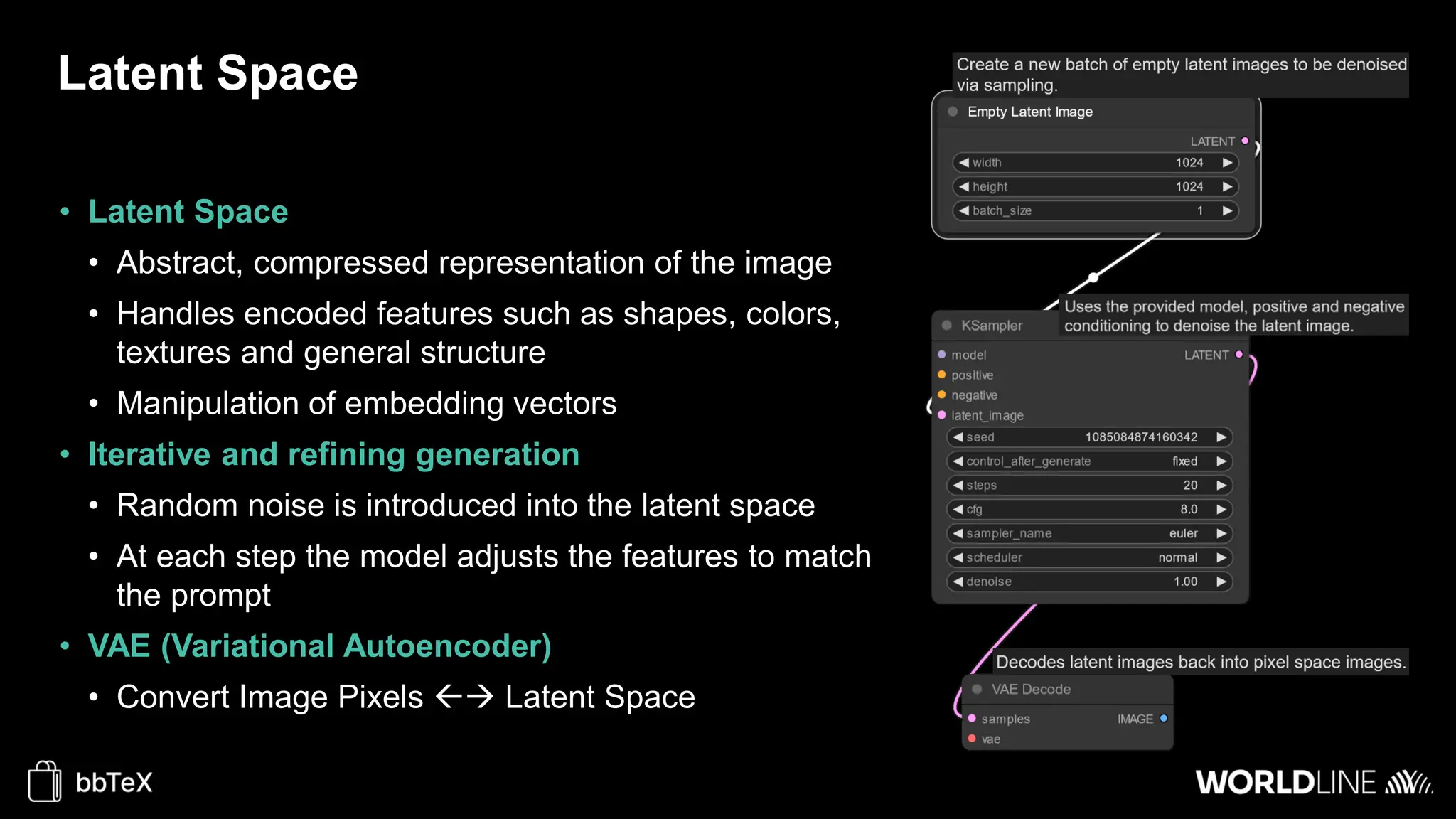

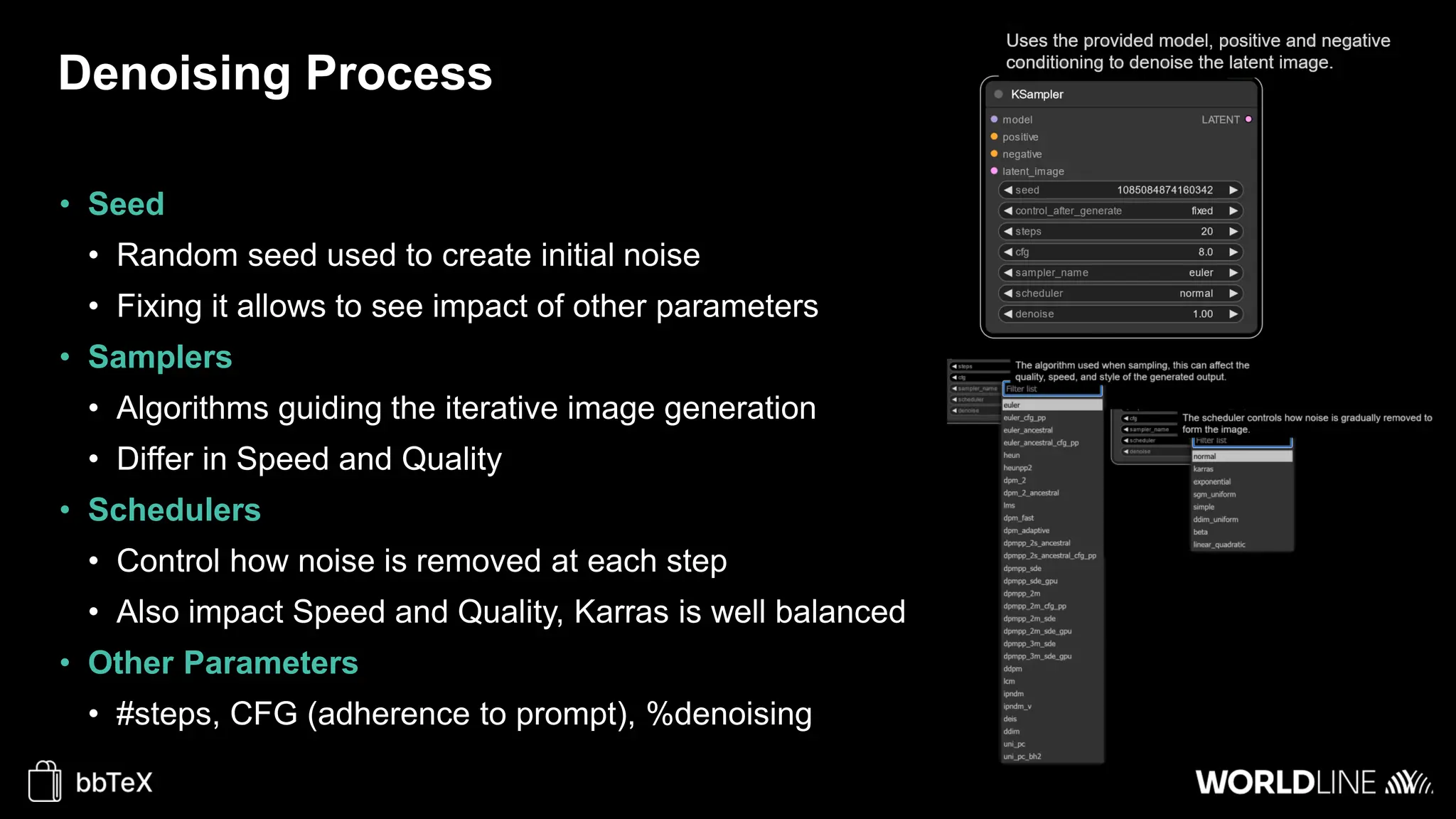

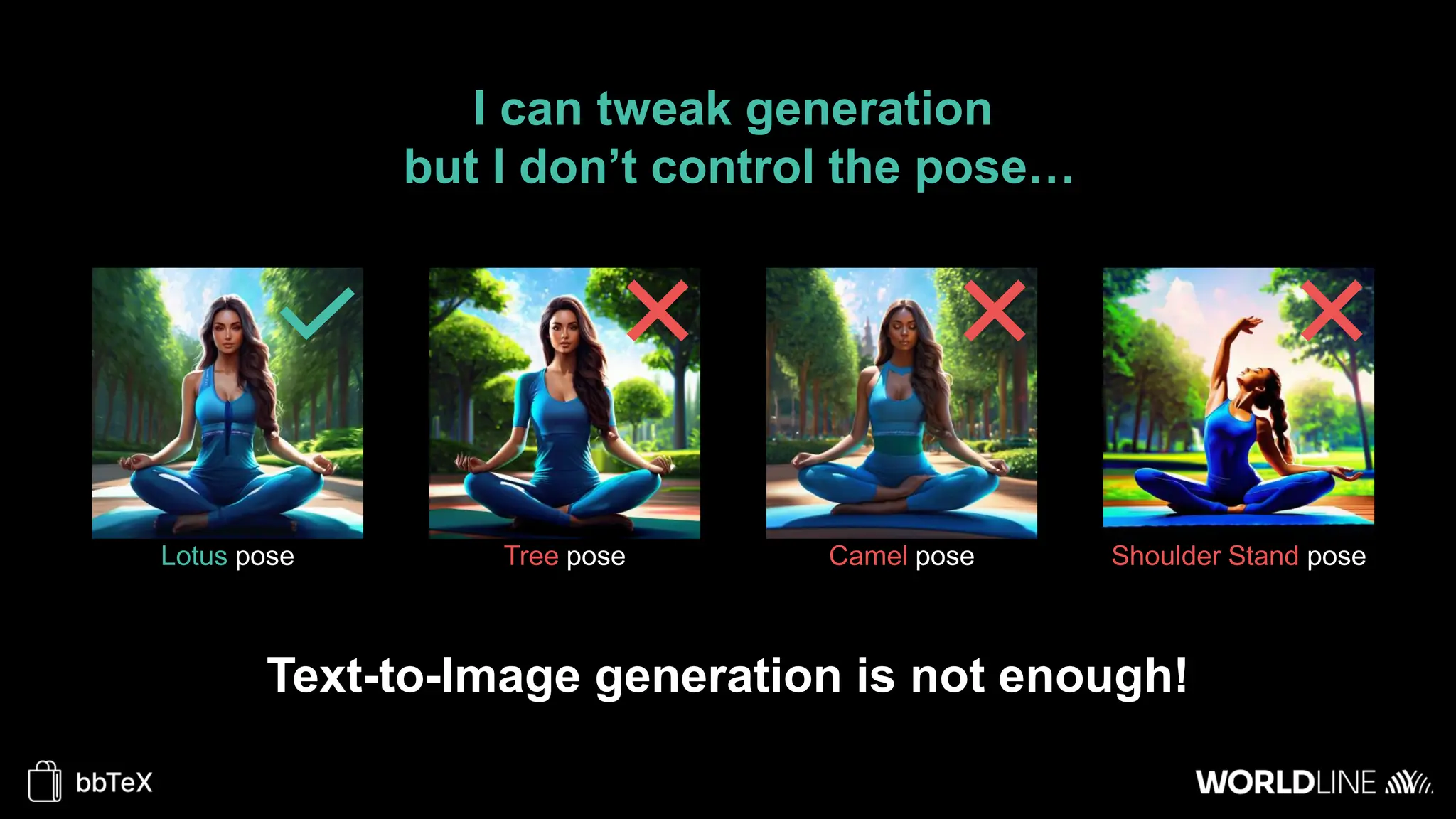

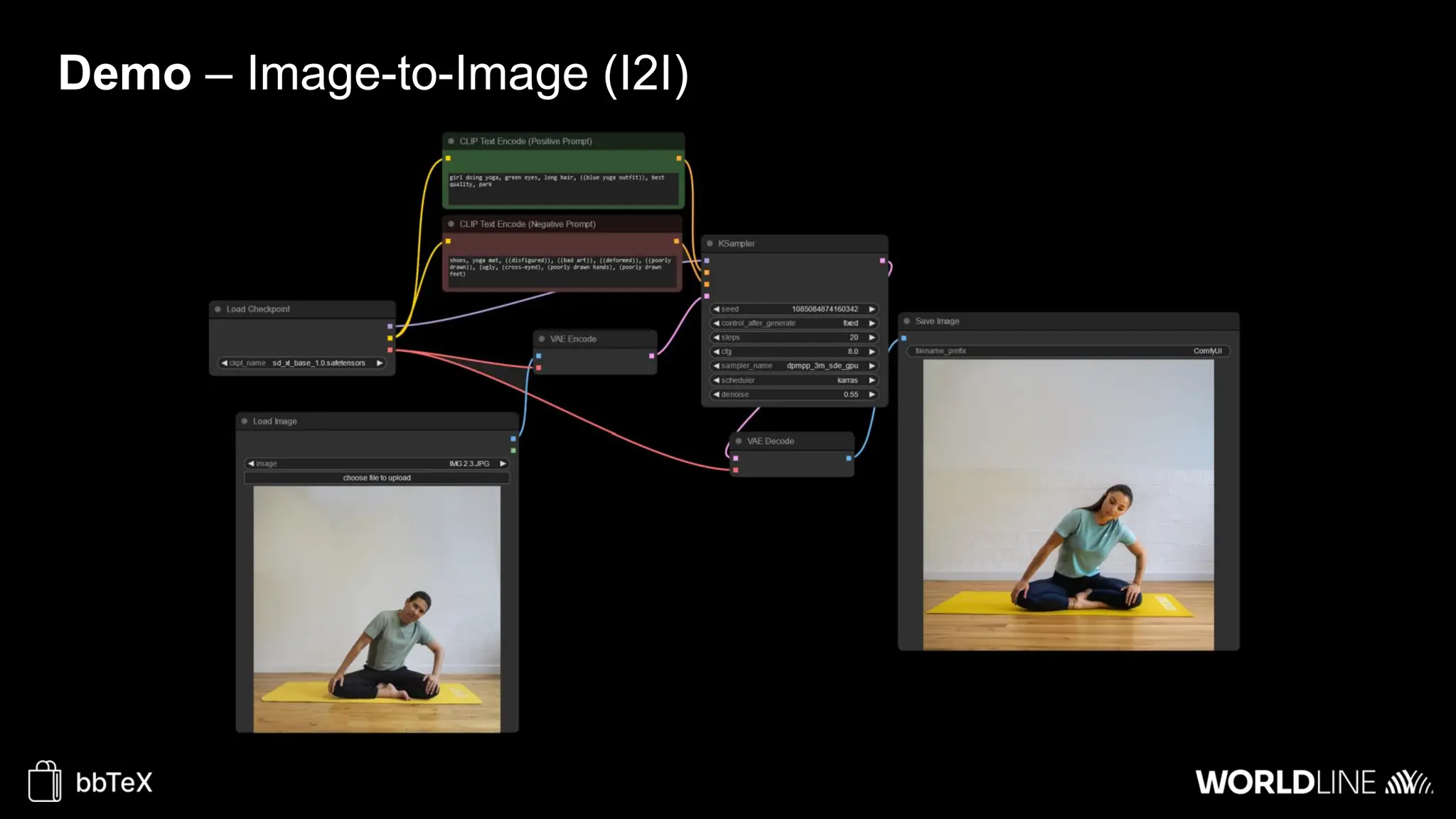

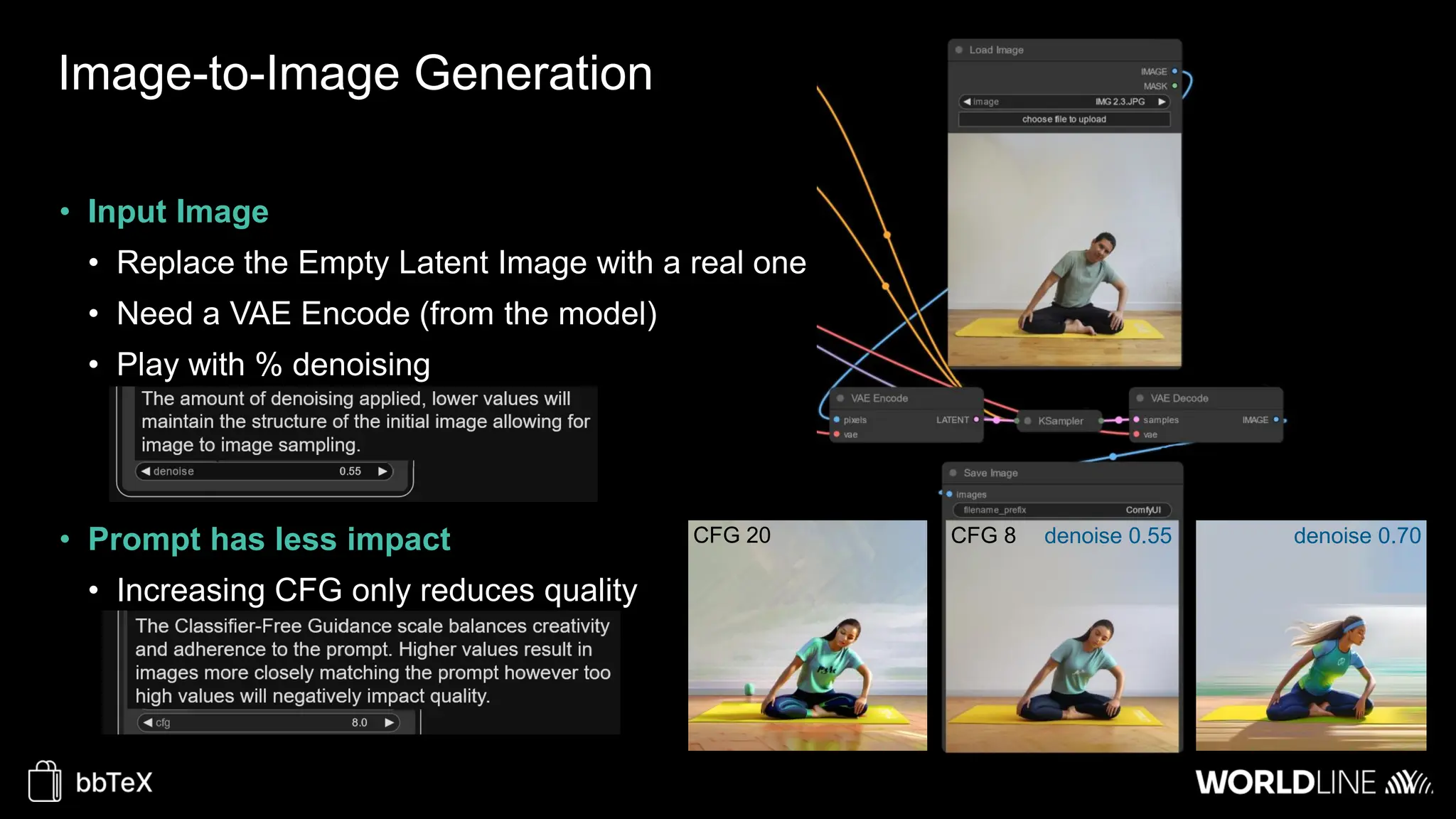

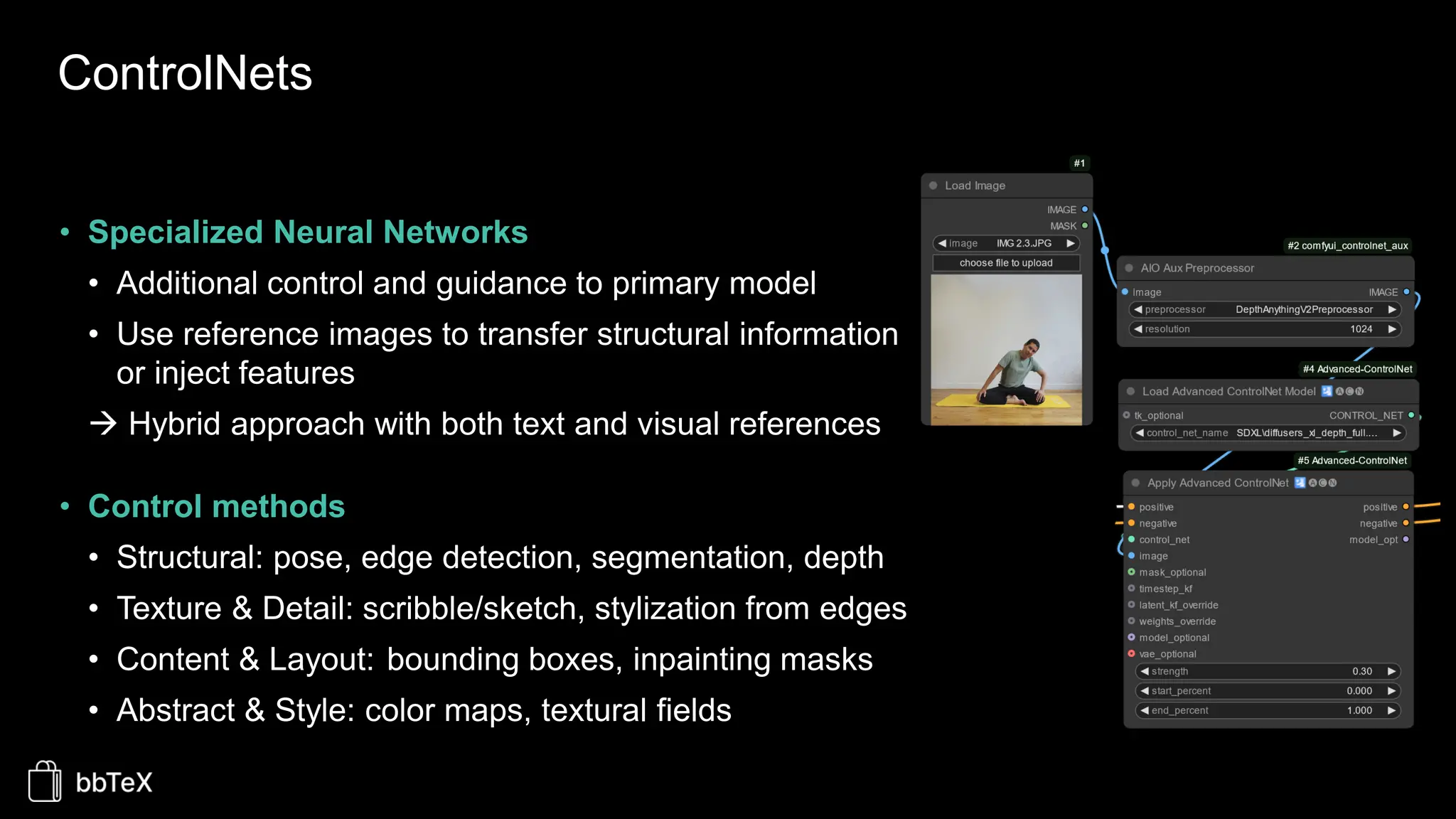

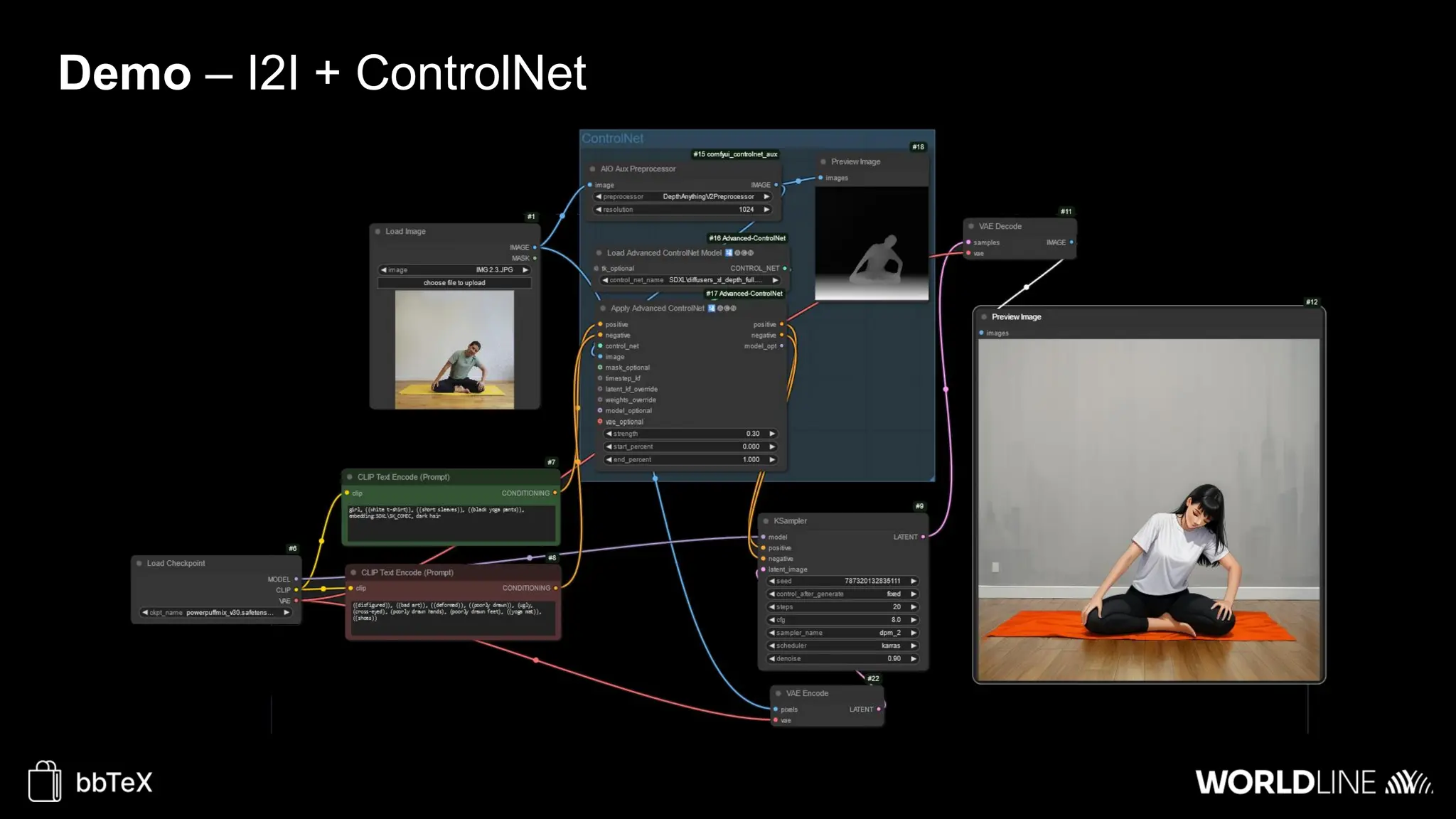

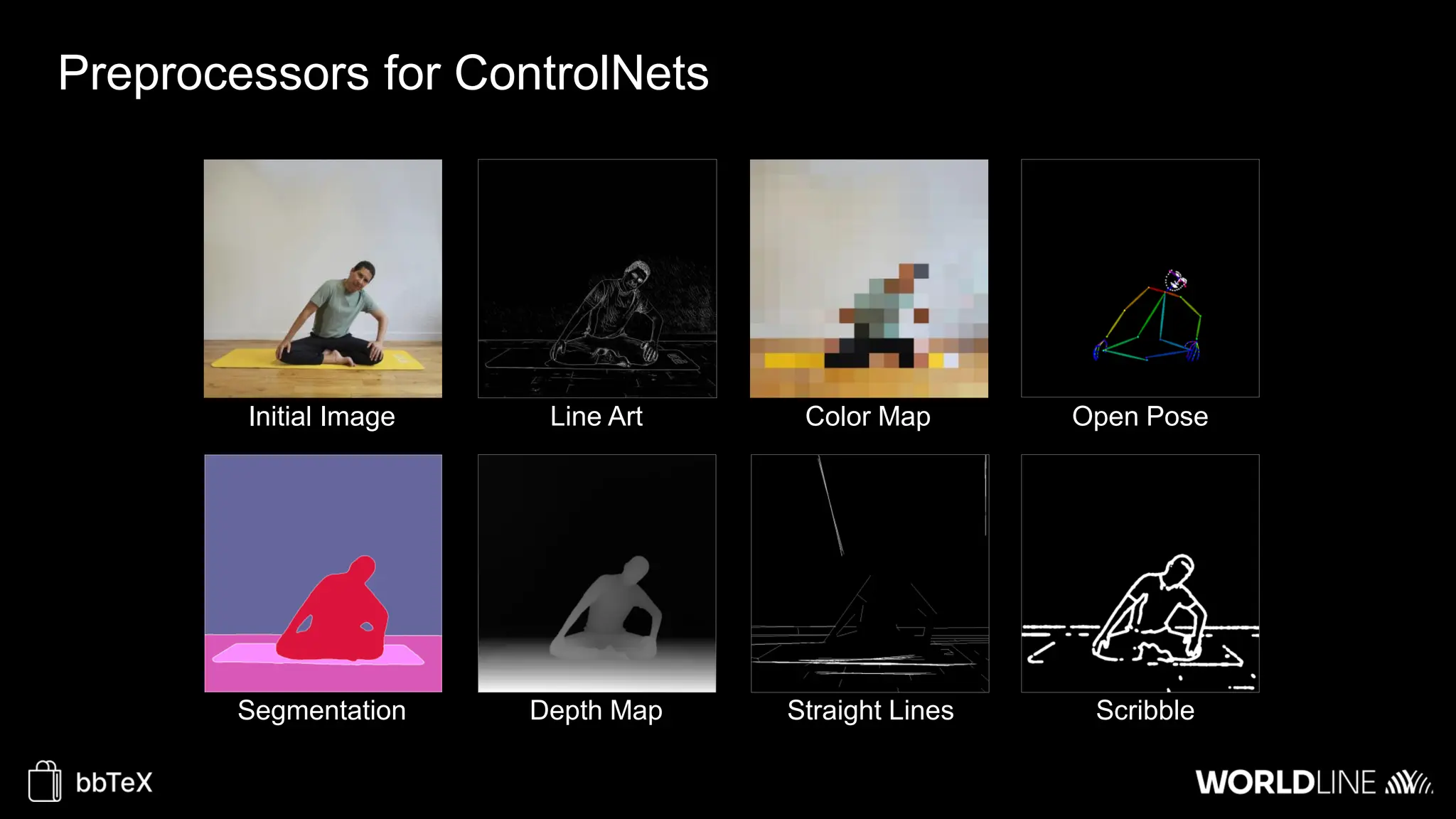

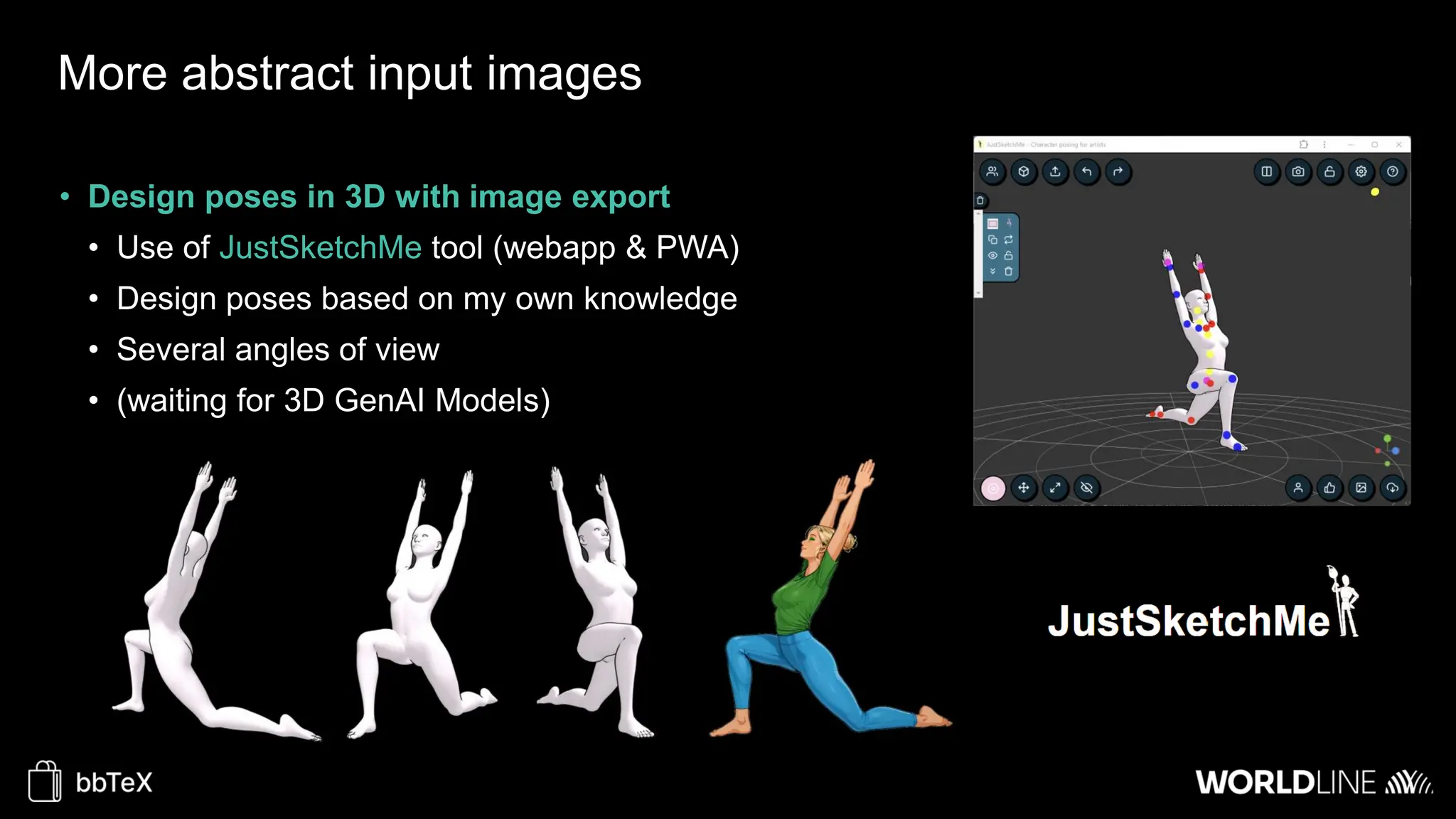

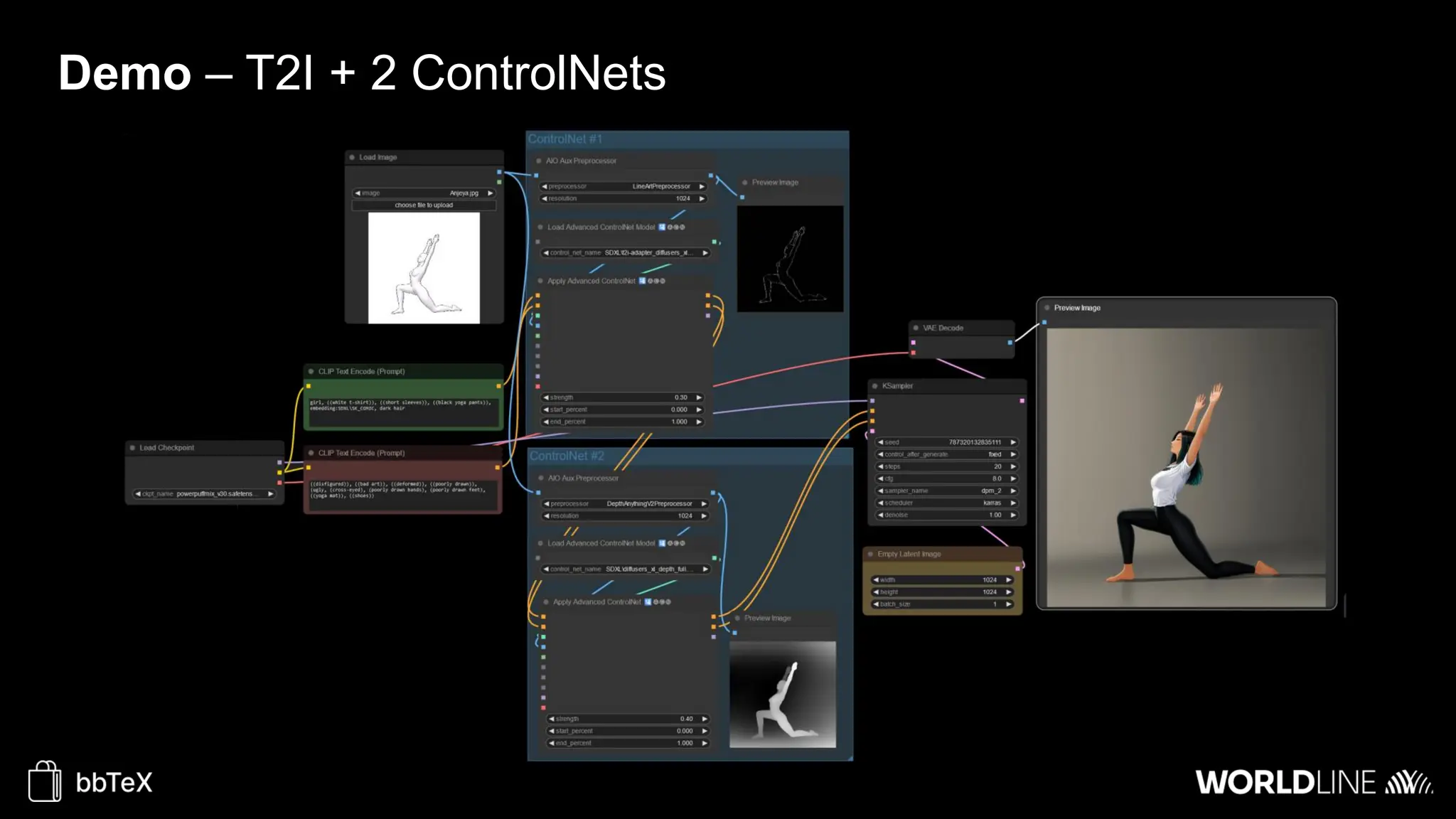

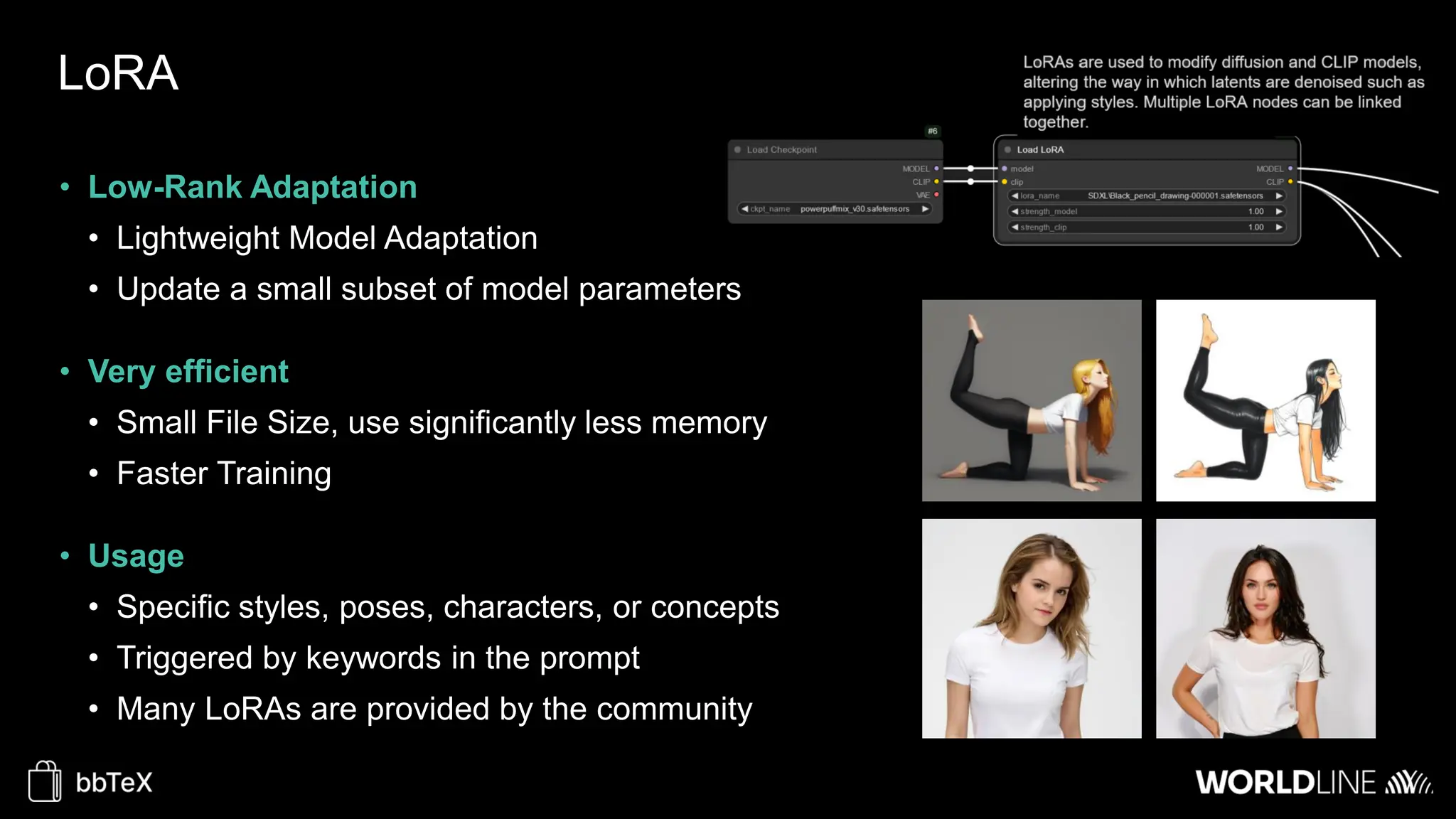

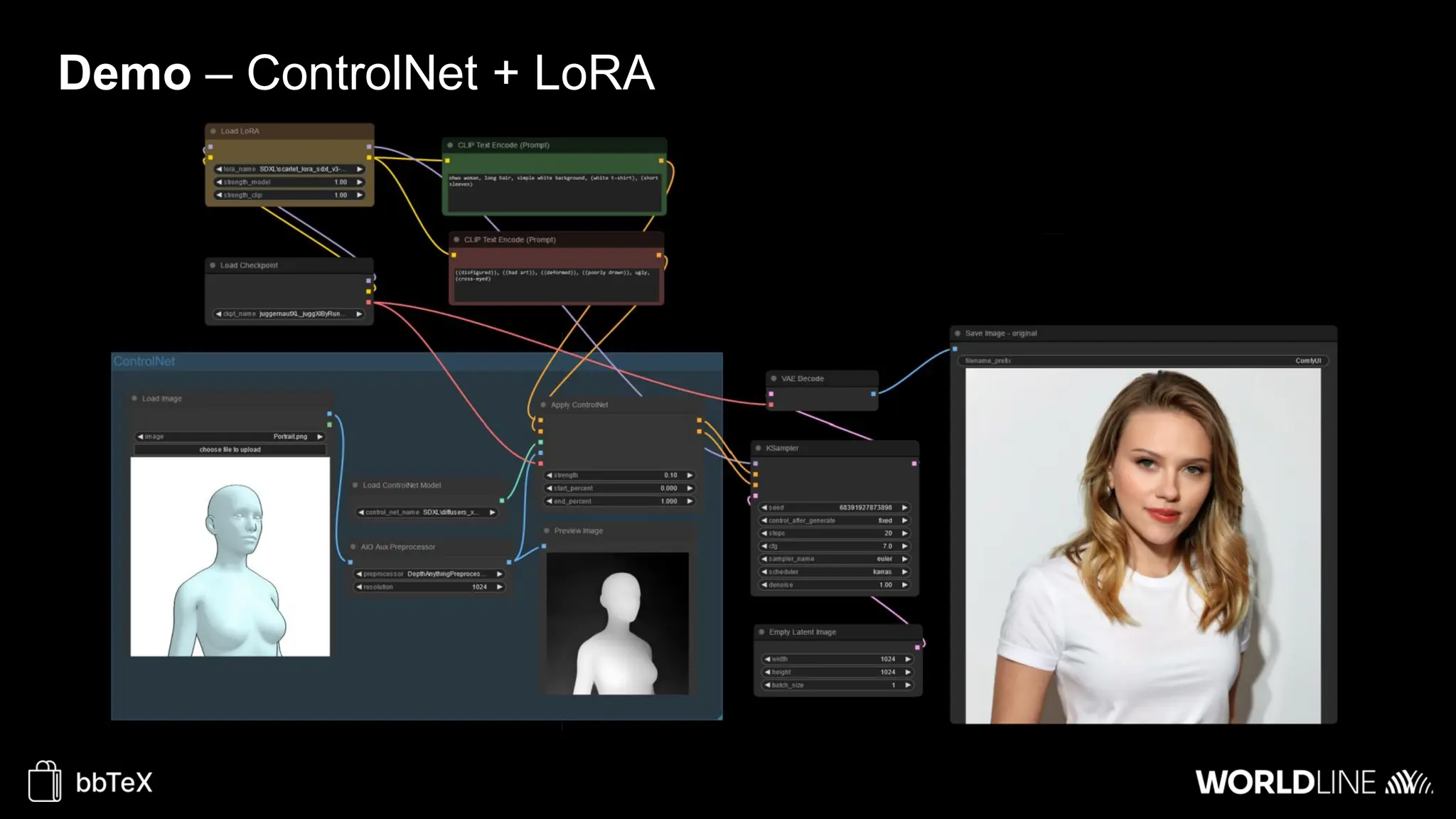

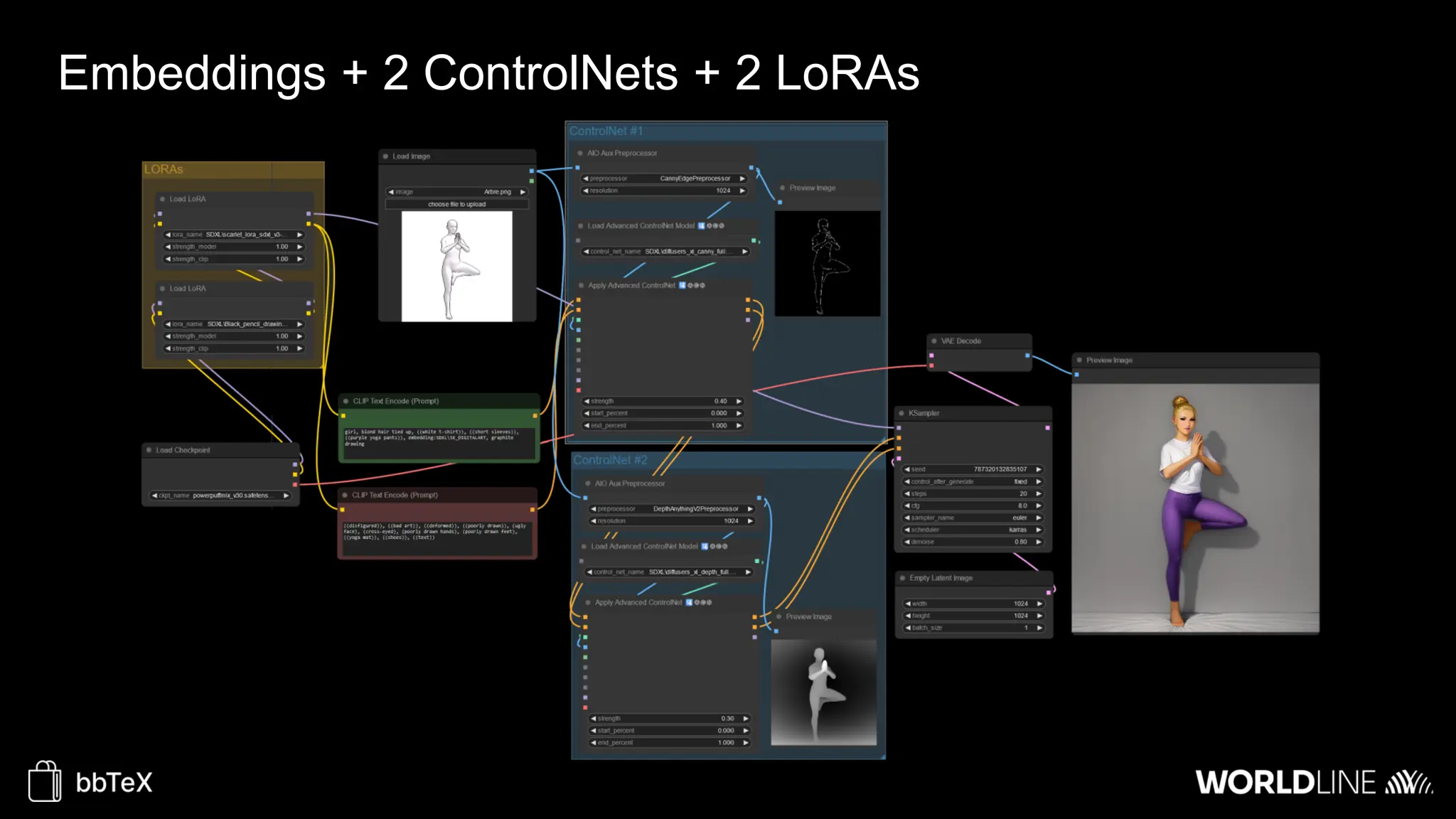

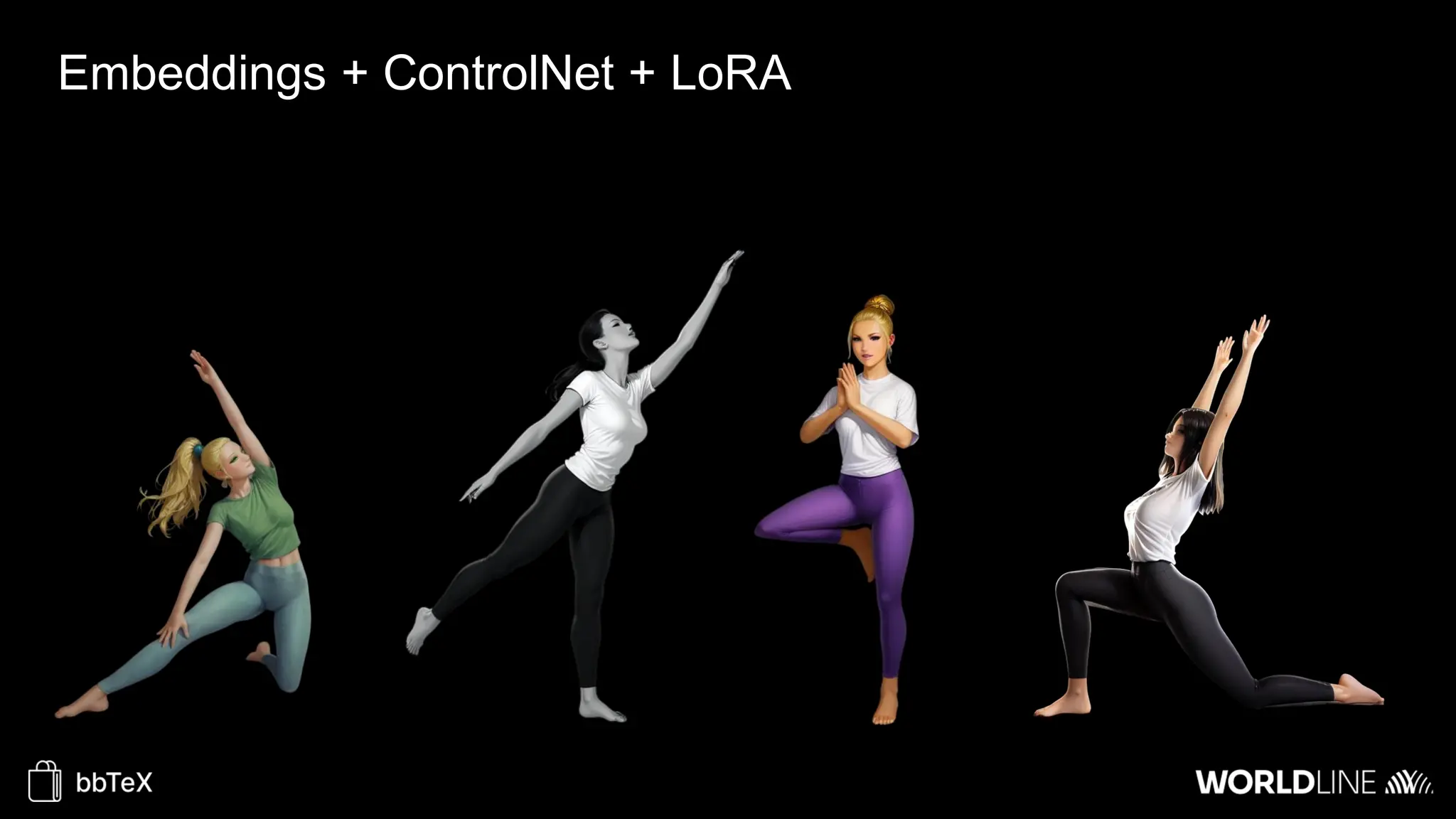

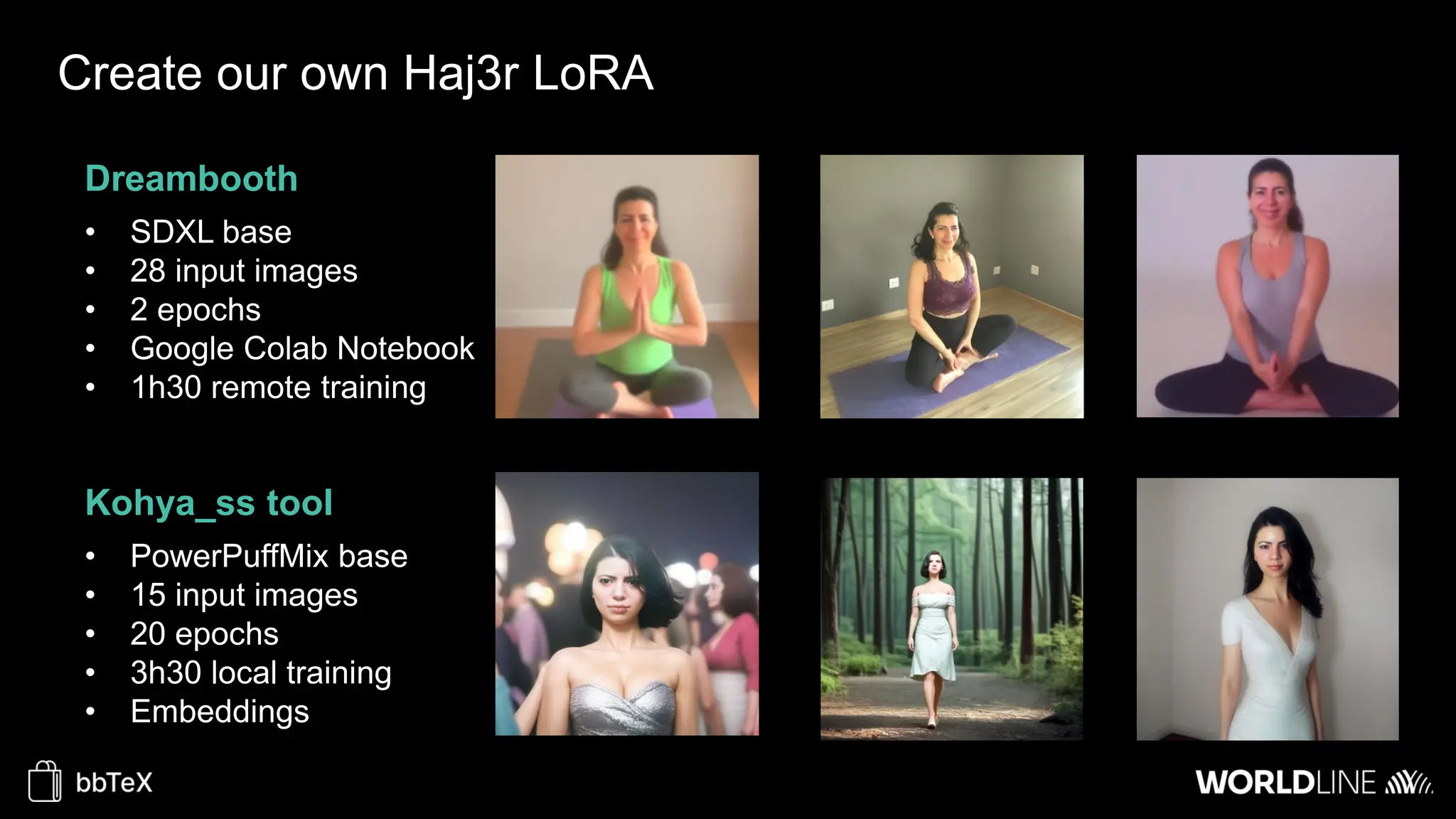

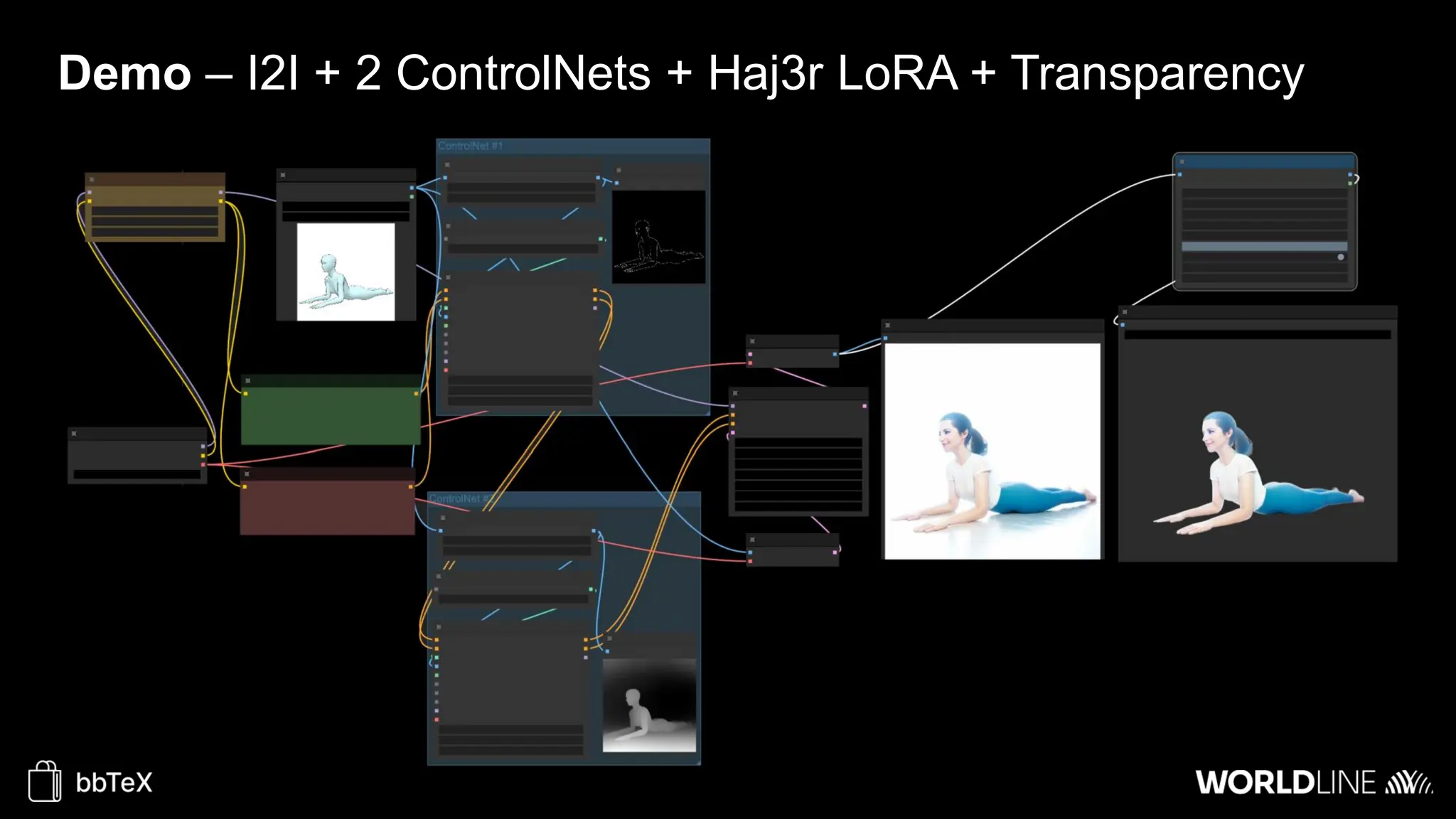

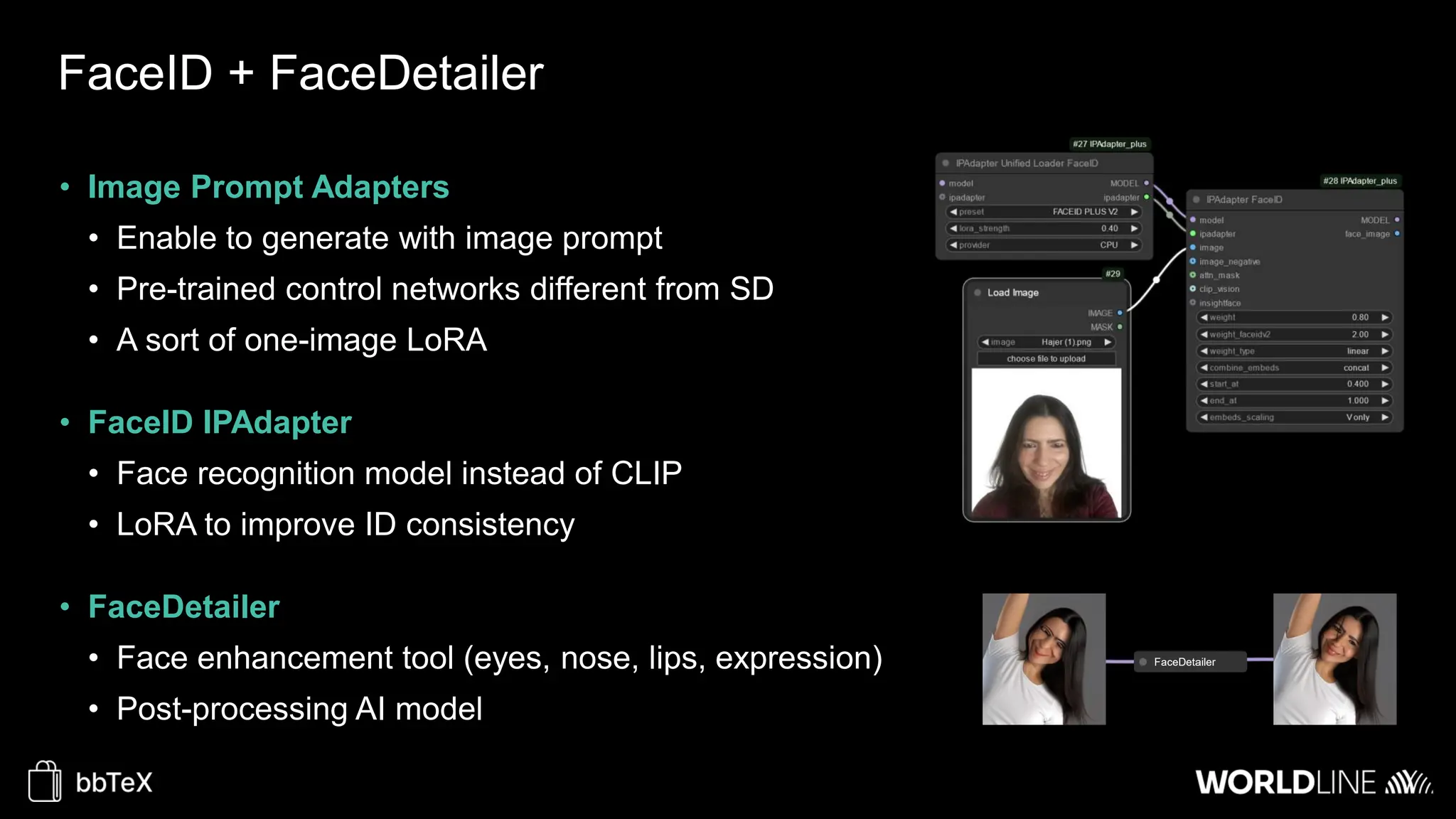

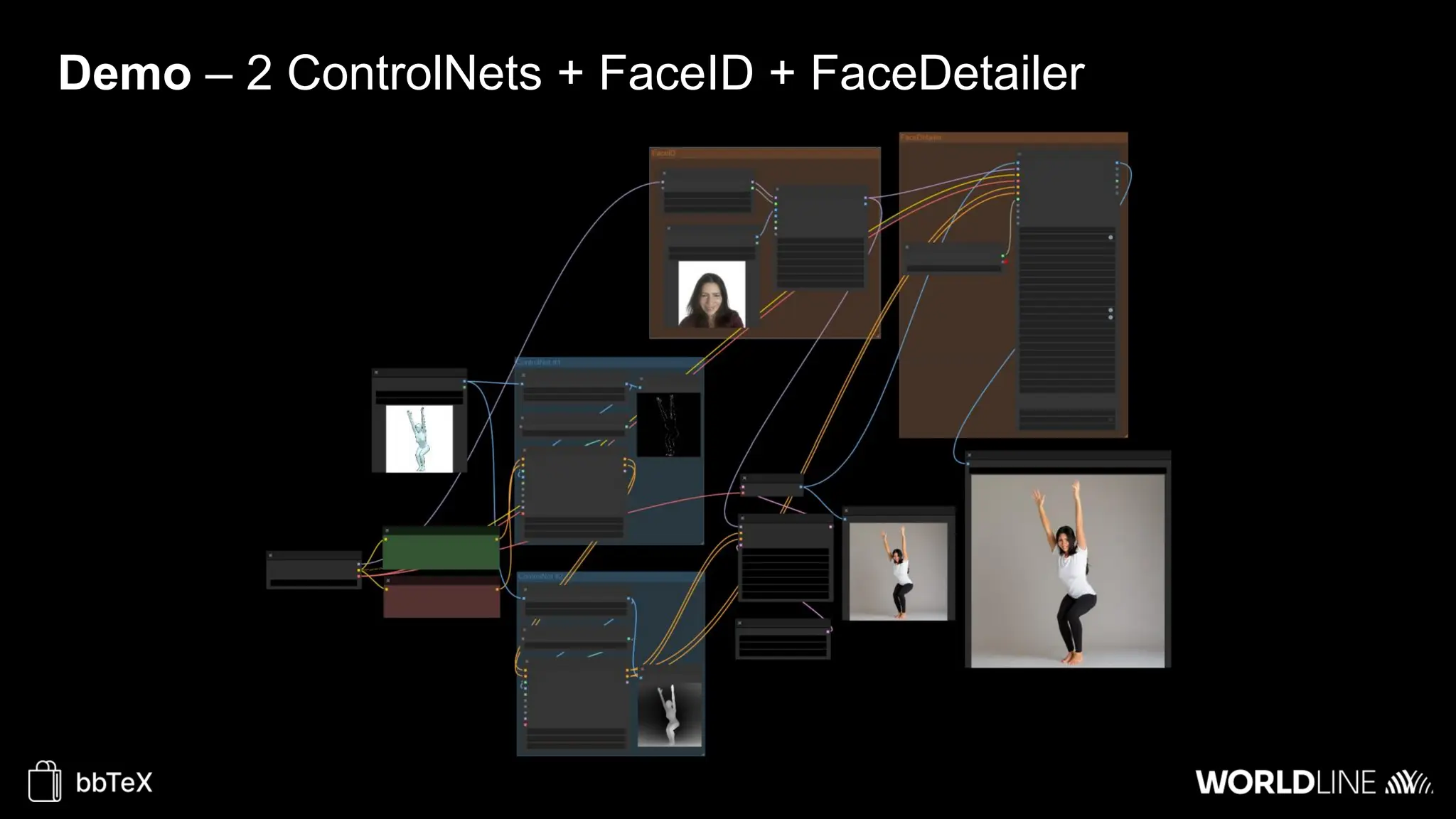

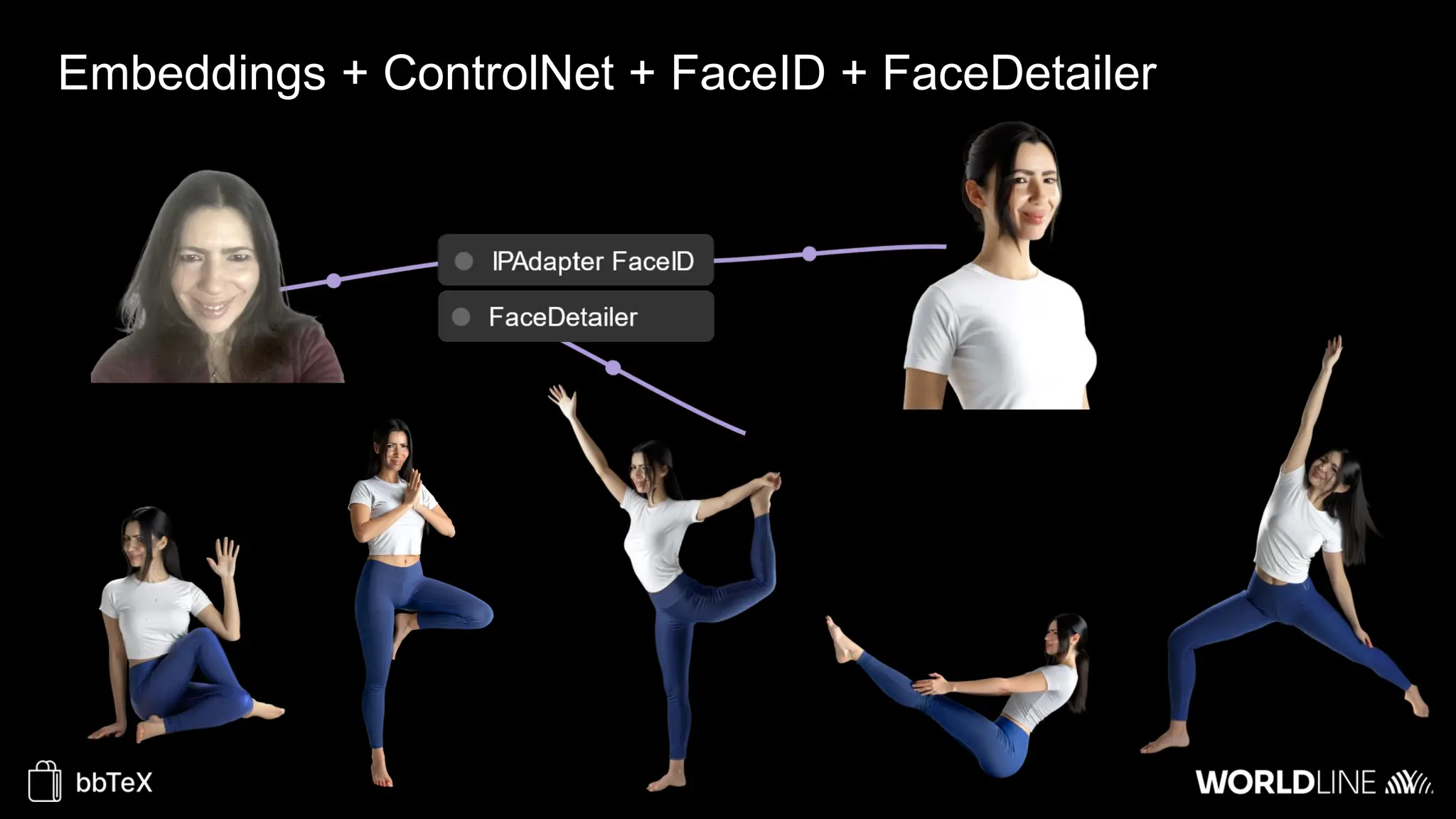

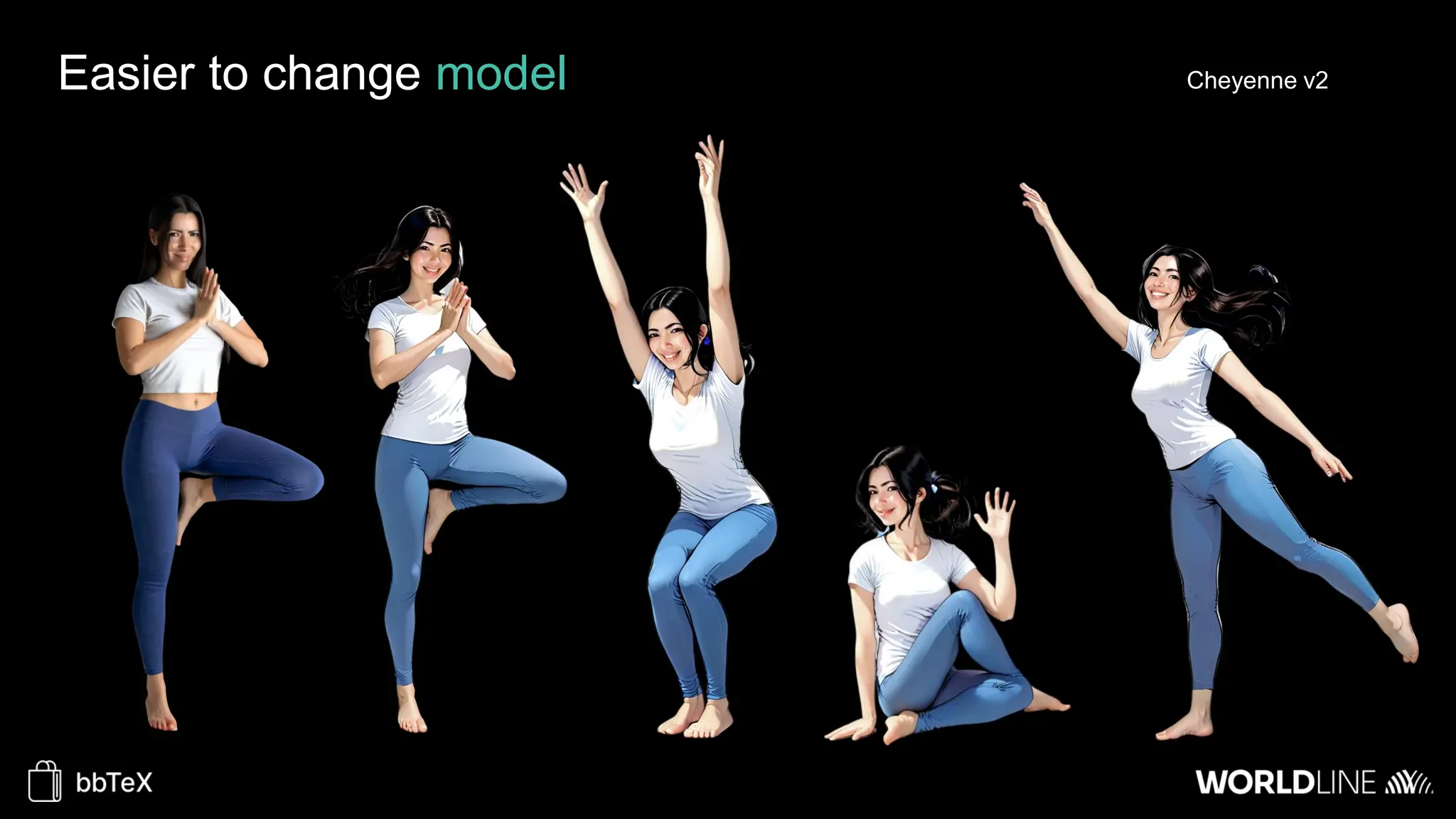

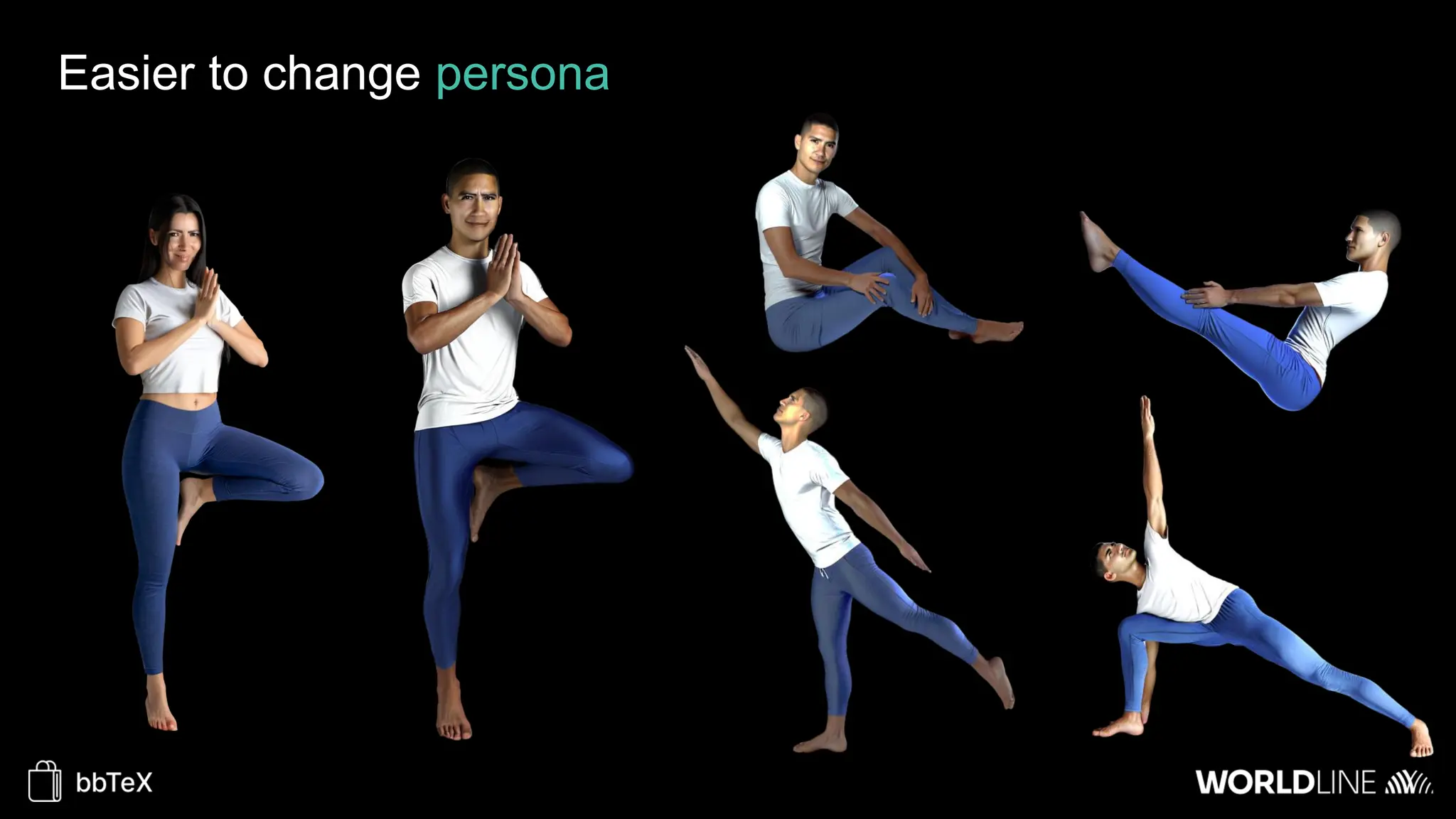

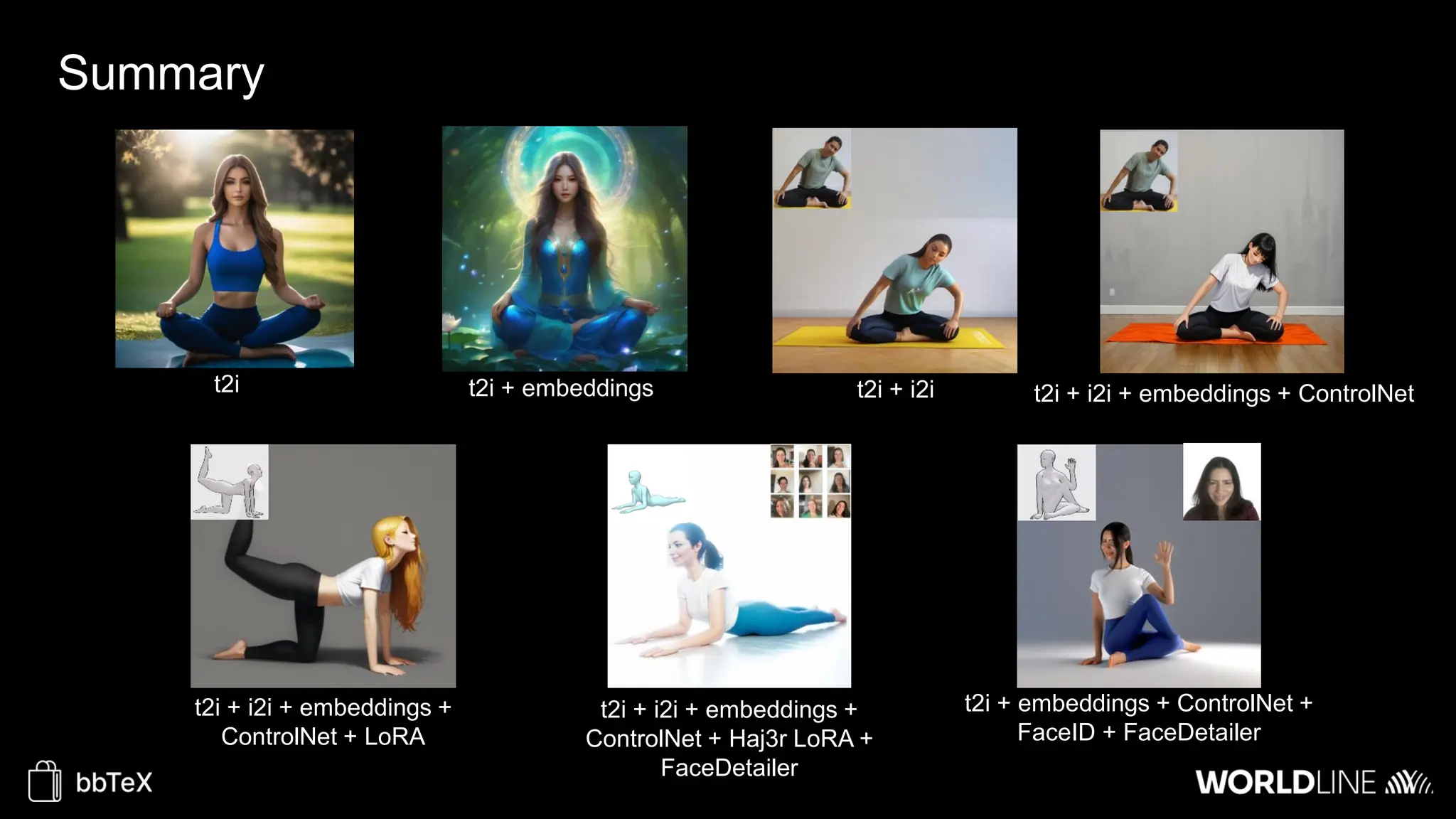

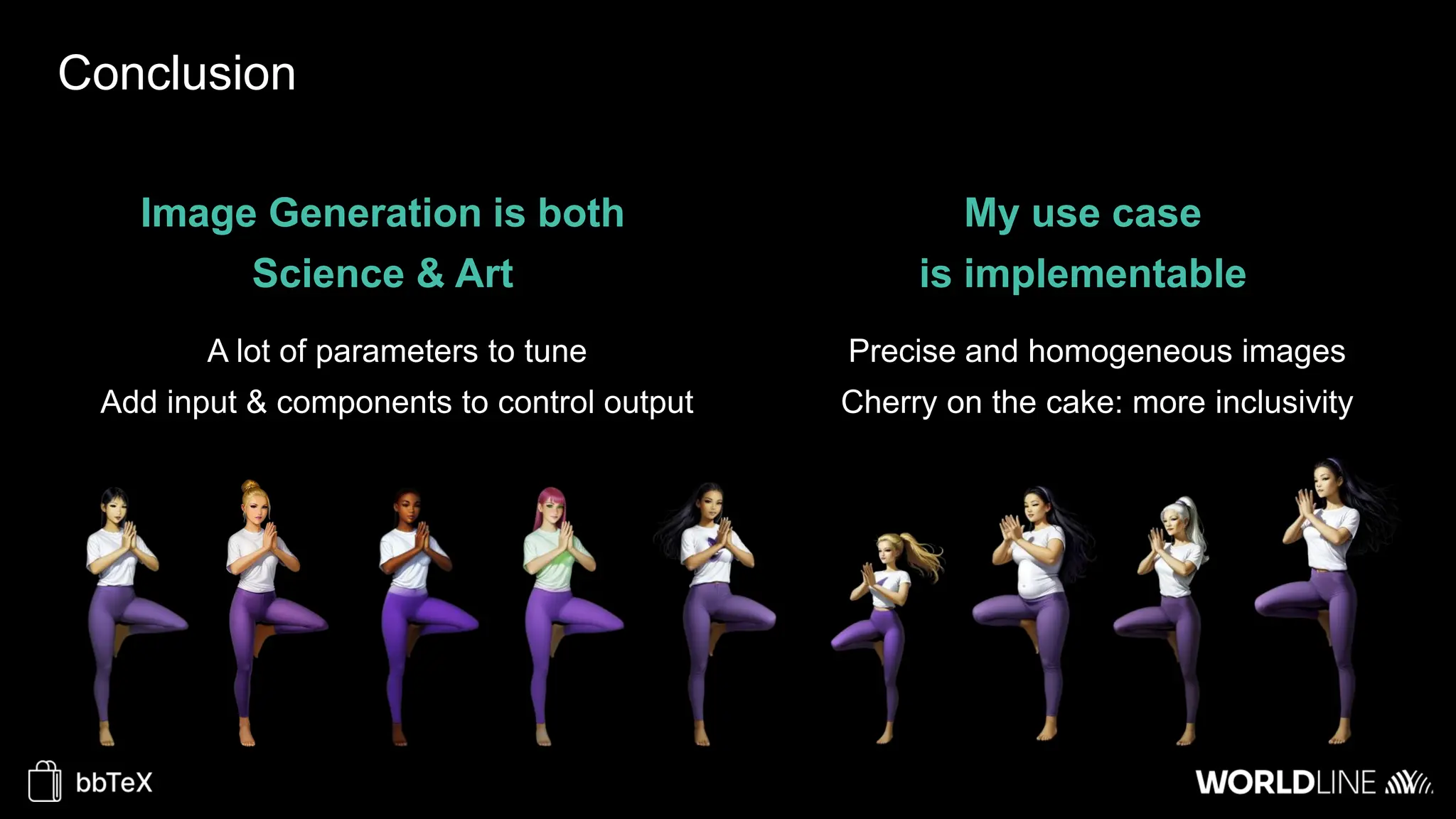

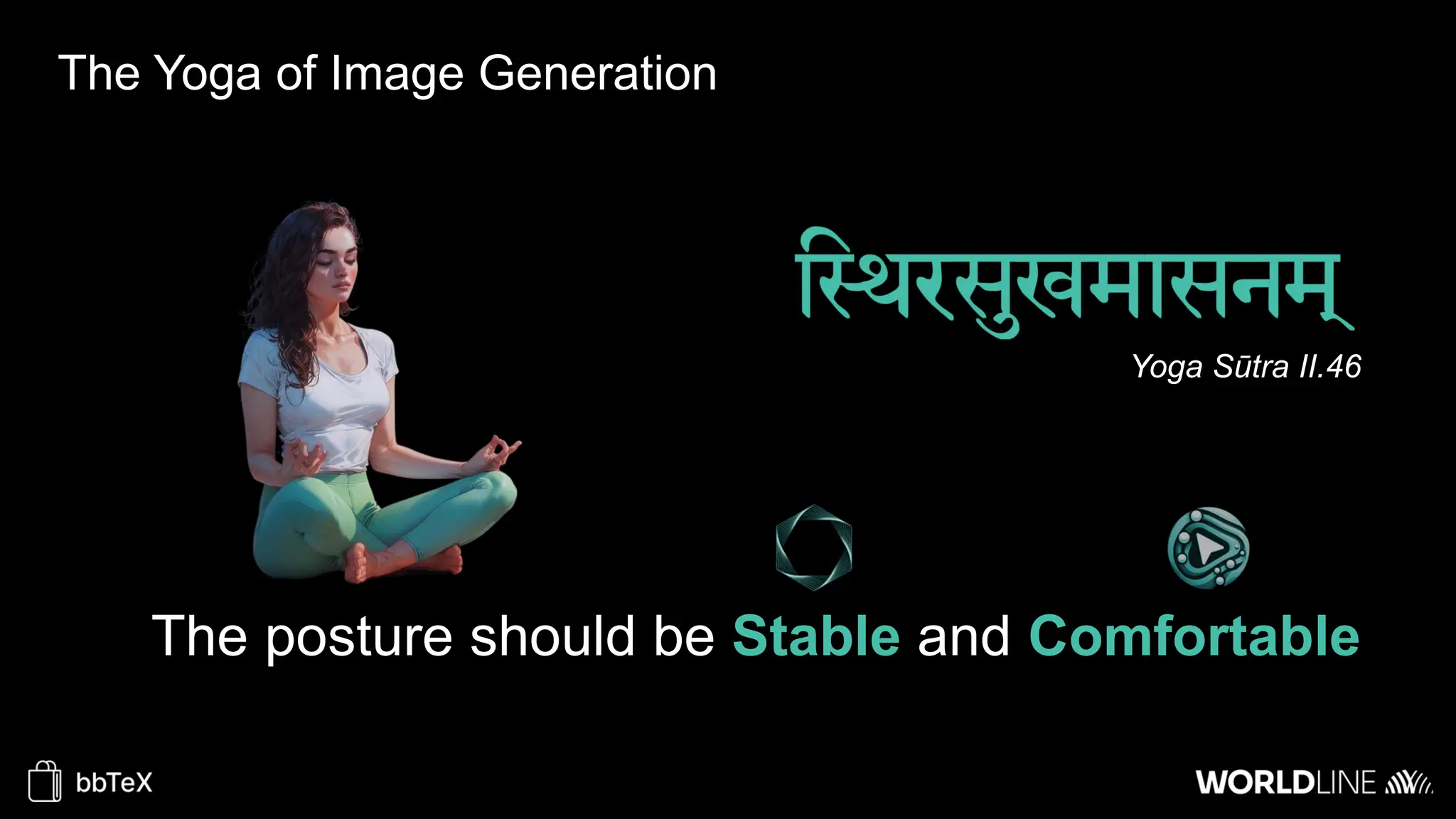

The document discusses using Stable Diffusion, a generative AI model, to produce precise images of yoga poses. It highlights tools, models, and community-driven resources that enable text-to-image and image-to-image generation, including finer customization with ControlNet and embeddings. The conclusion emphasizes that image generation merges science and art, aiming for inclusivity and precision in the representation of yoga postures.