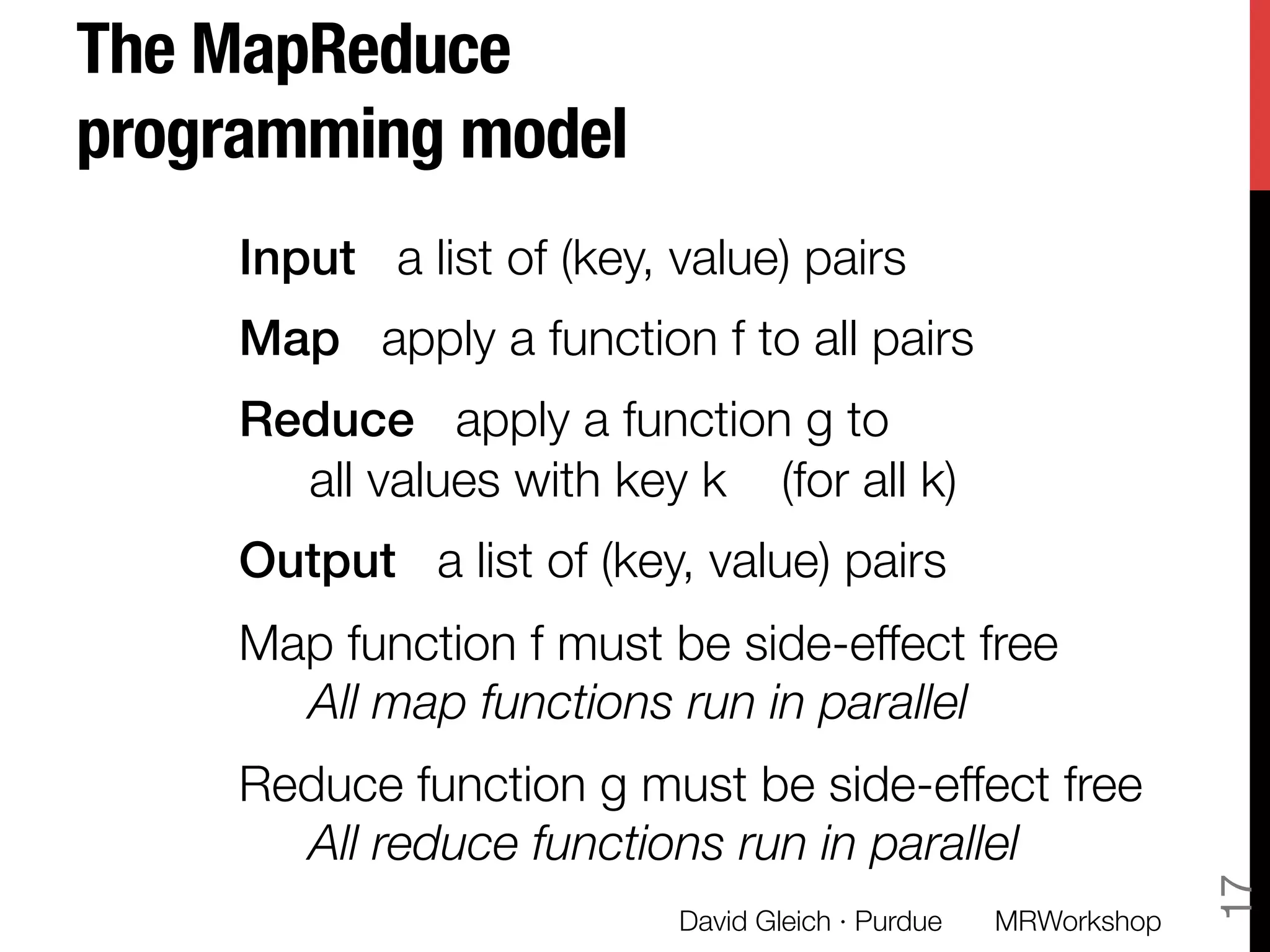

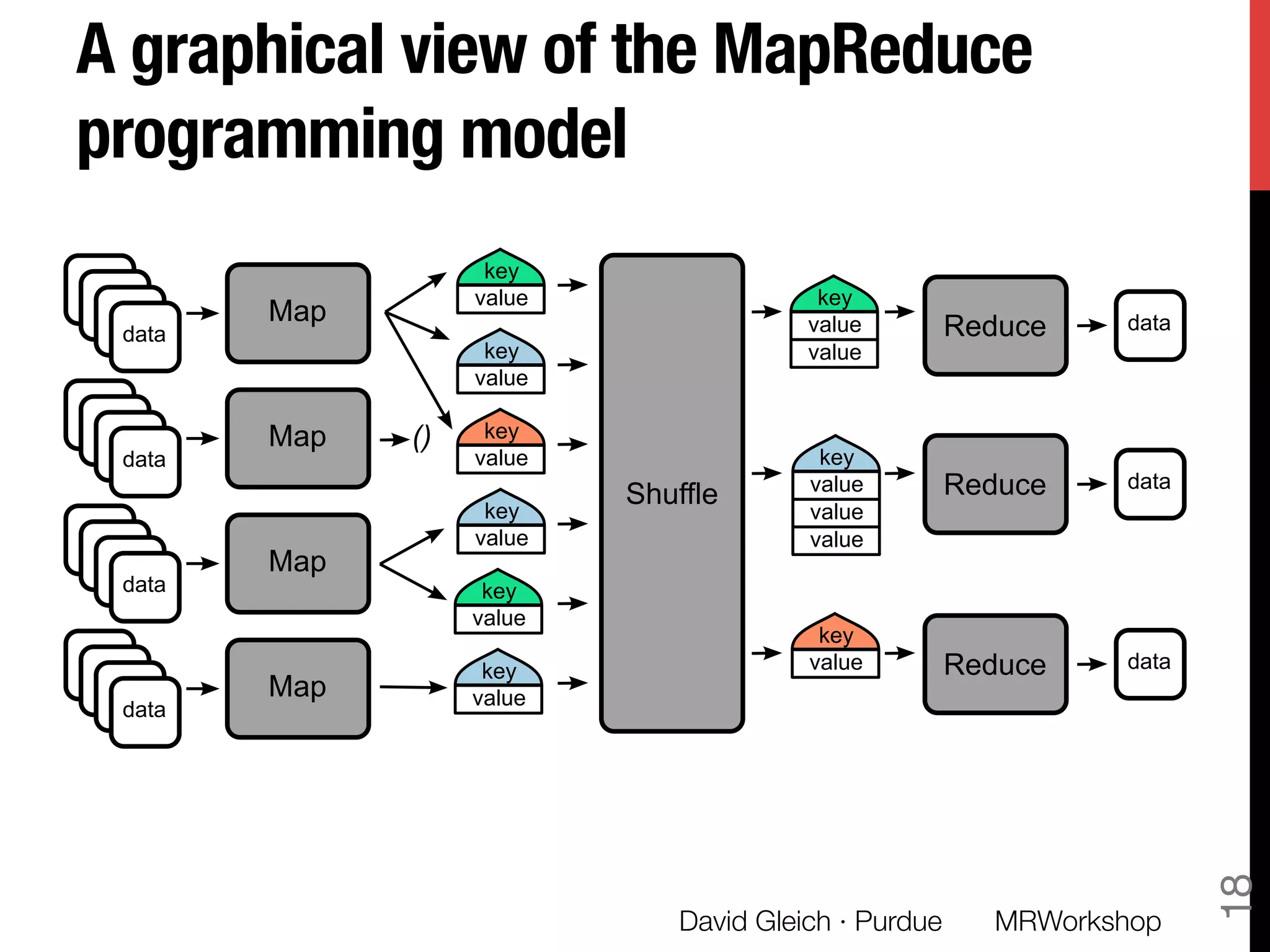

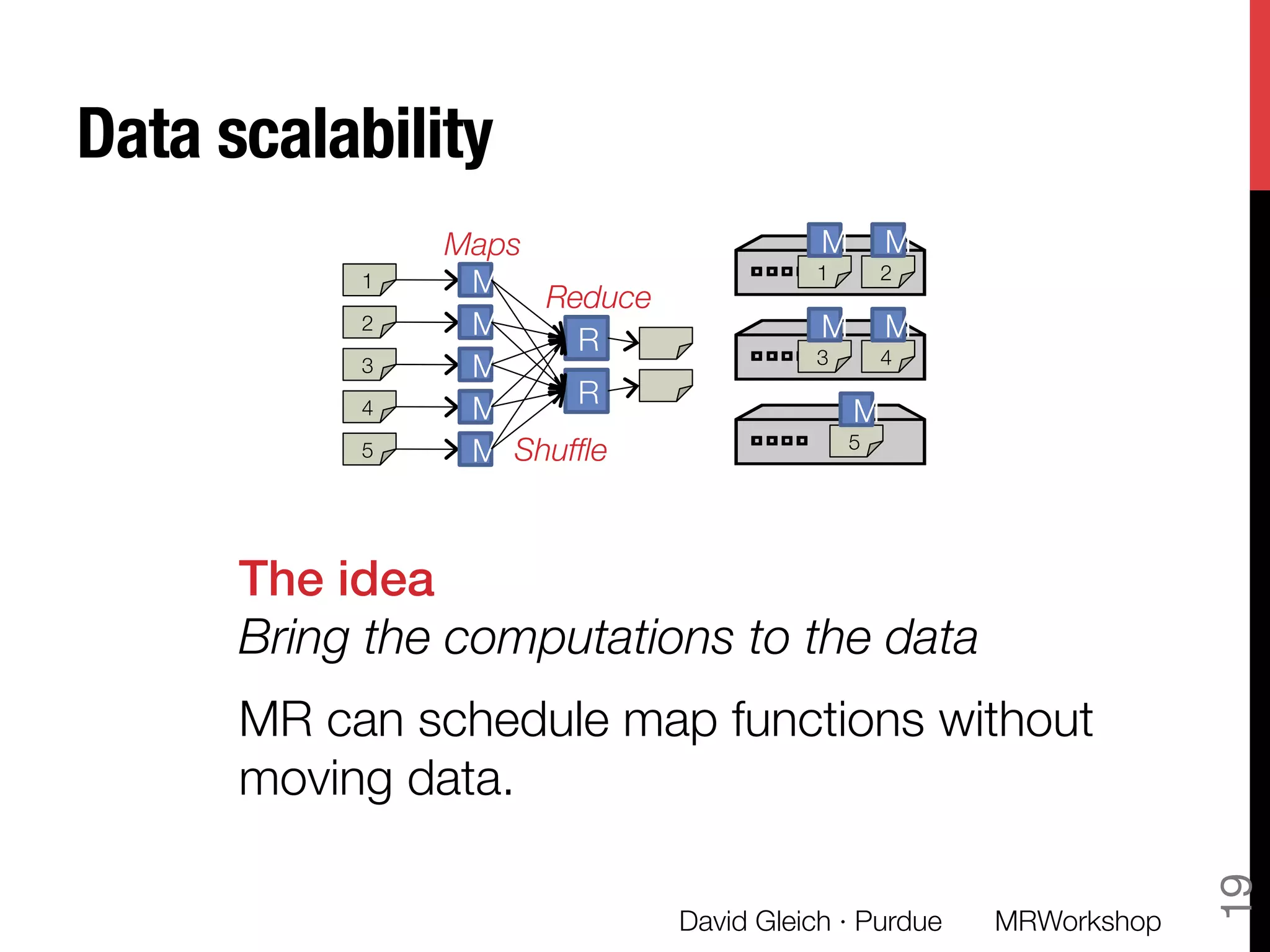

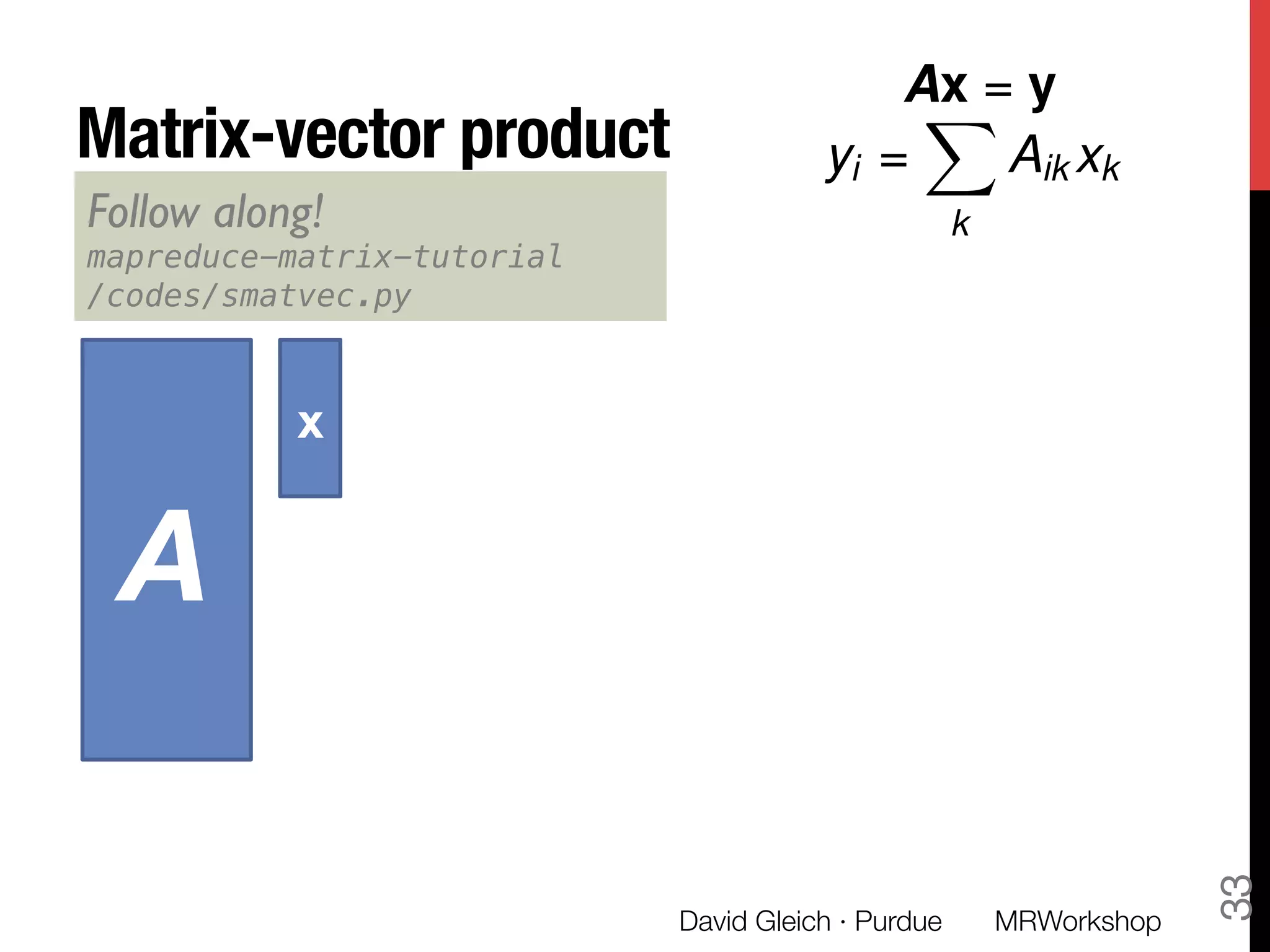

The document announces an ICME MapReduce workshop held from April 29 to May 1, 2013. The workshop's goals are to teach the basics of MapReduce and Hadoop and to enable attendees to process large volumes of scientific data. The workshop overview lists presentations on sparse matrix computations in MapReduce, extending MapReduce for scientific computing, and evaluating MapReduce for science. The slides also discuss how to program data computers using MapReduce and Hadoop, and give examples of matrix operations in MapReduce such as matrix-vector and matrix-matrix multiplication.

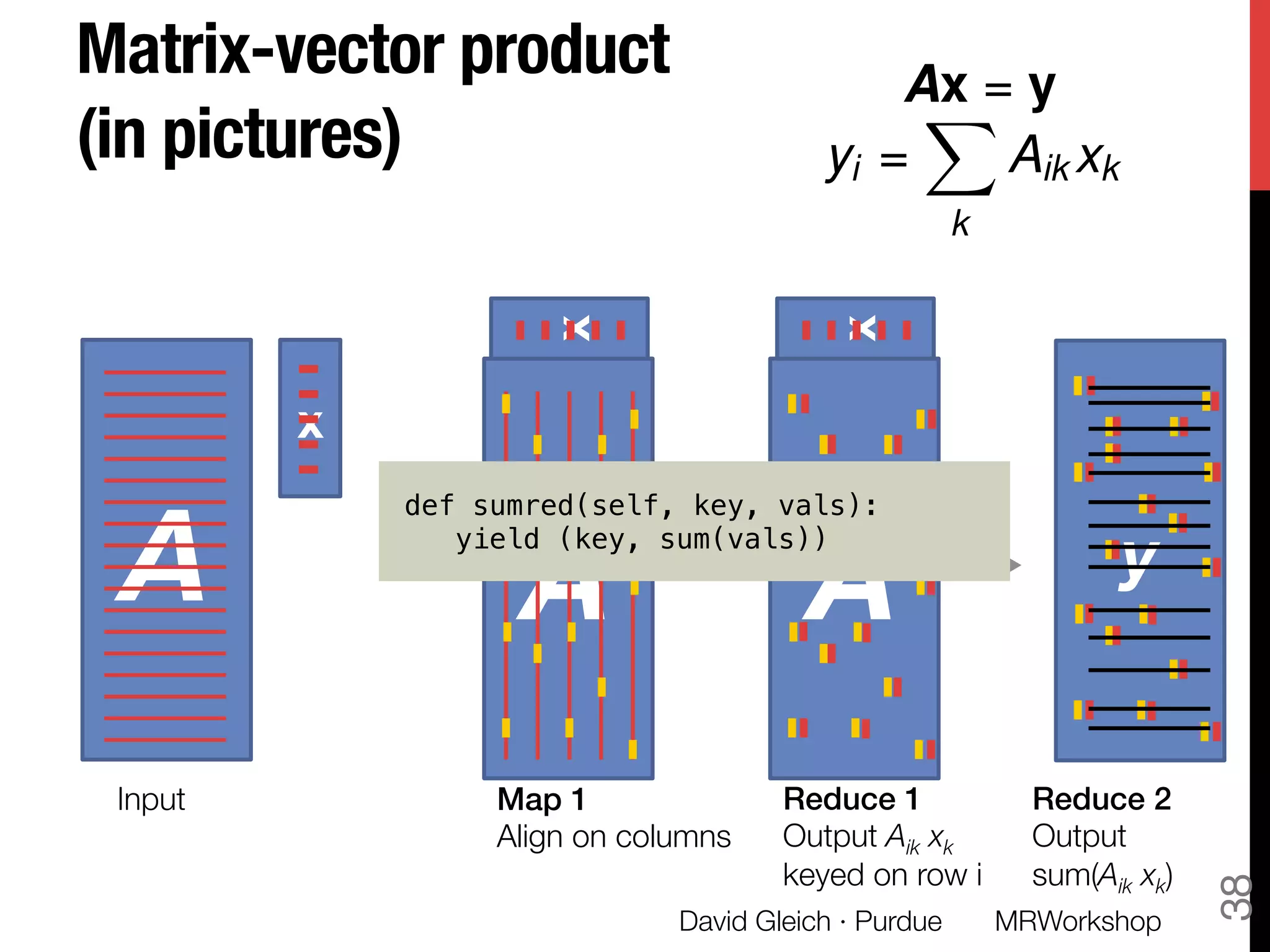

![Slide 36: Matrix-vector product (in pictures)](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-36-2048.jpg)

Matrix-vector product (in pictures)

David Gleich · Purdue, MRWorkshop

$$Ax = y, \qquad y_i = \sum_k A_{ik} x_k$$

Input: the matrix A and the vector x.

Map 1: align on columns.

    def joinmap(self, key, line):
        vals = line.split()
        if len(vals) == 2:
            # the vector
            yield (vals[0],  # row
                   (float(vals[1]),))  # xi
        else:
            # the matrix
            row = vals[0]
            for i in xrange(1, len(vals), 2):
                yield (vals[i],  # column
                       (row,  # i, Aij
                        float(vals[i+1])))

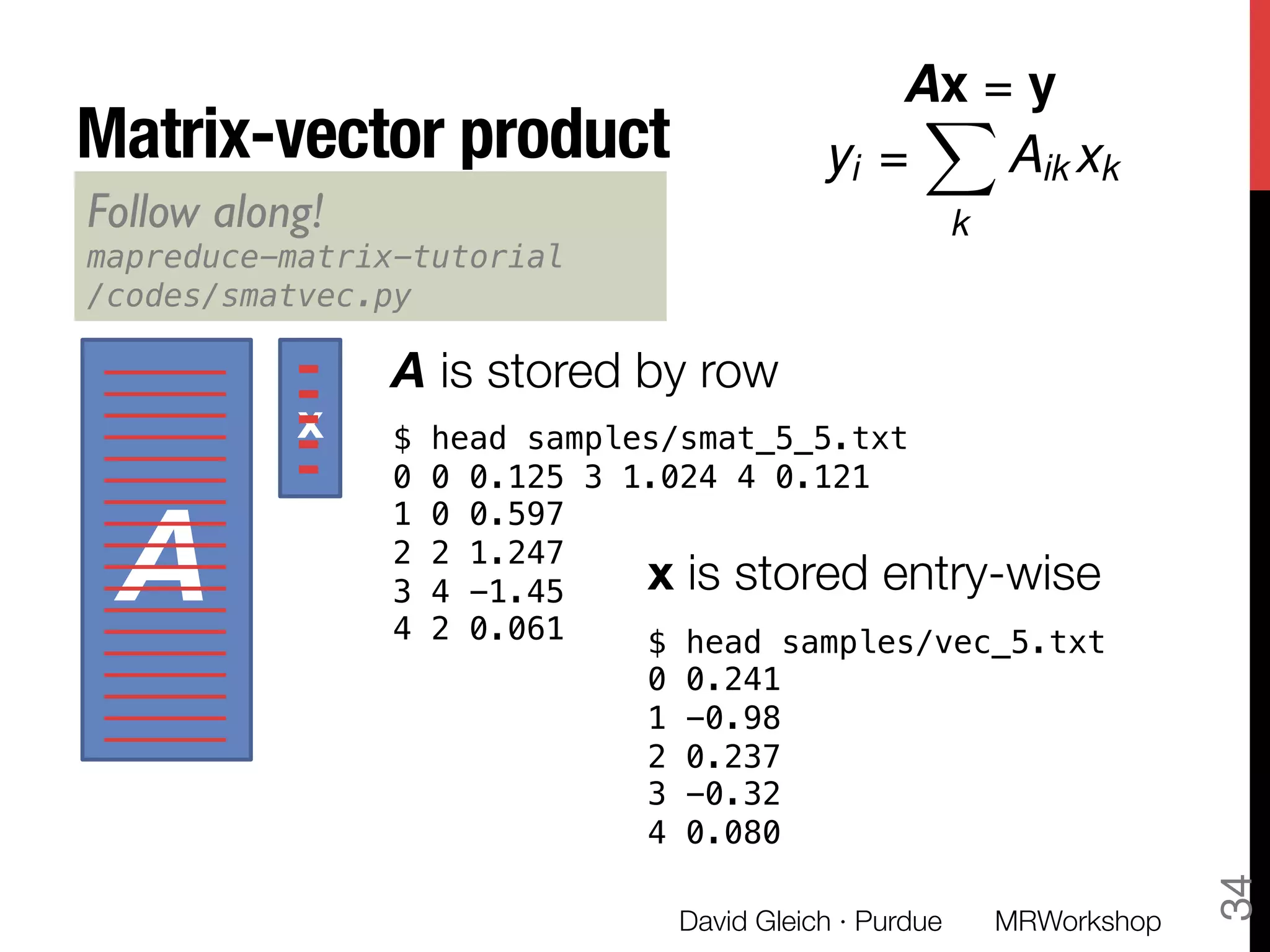

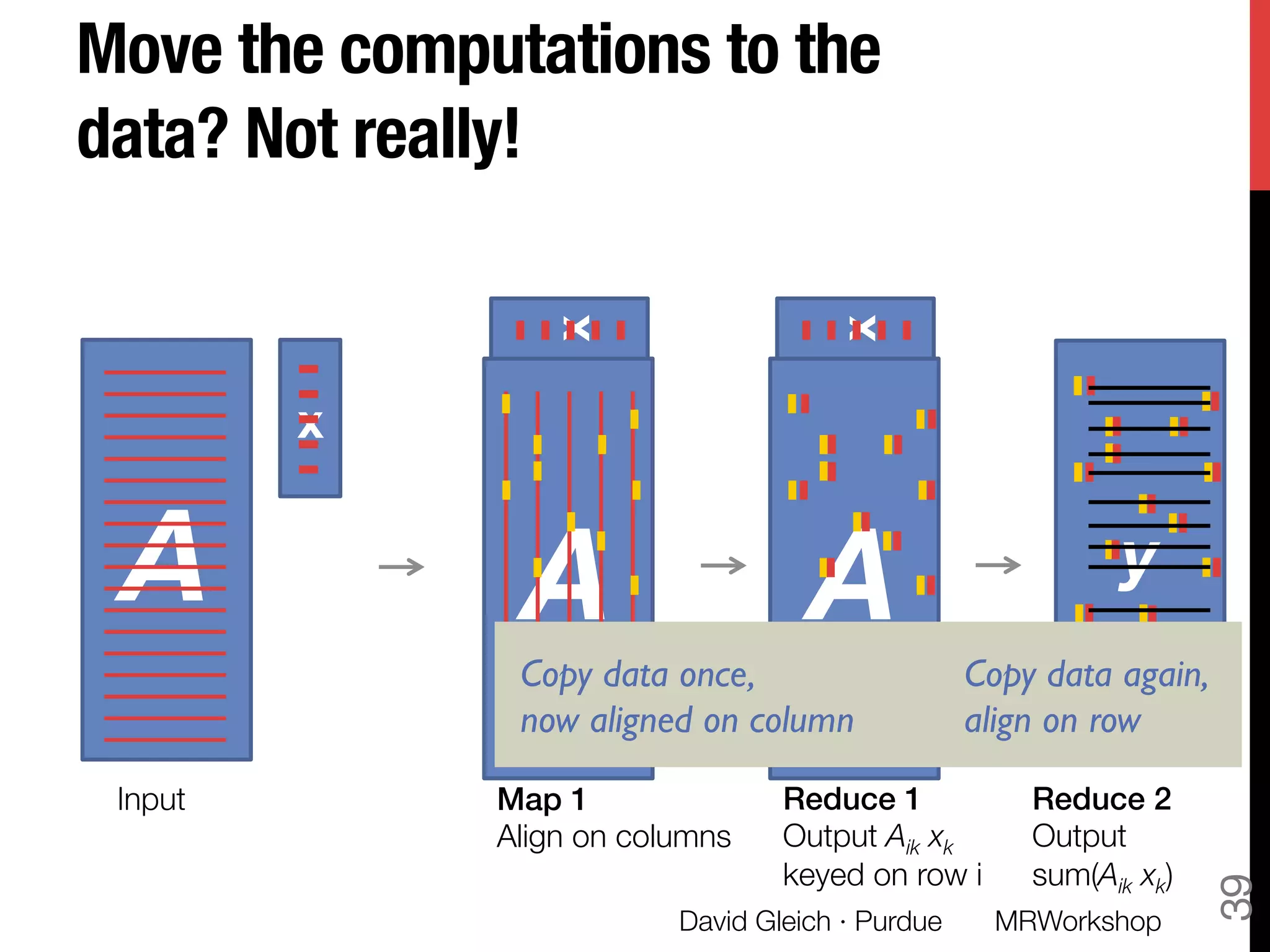

![Slide 37: Matrix-vector product (in pictures)](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-37-2048.jpg)

Matrix-vector product (in pictures), continued

For $Ax = y$ with $y_i = \sum_k A_{ik} x_k$: after Map 1 aligns A and x on columns, Reduce 1 outputs $A_{ik} x_k$ keyed on row $i$.

    def joinred(self, key, vals):
        vecval = 0.
        matvals = []
        for val in vals:
            if len(val) == 1:
                vecval += val[0]
            else:
                matvals.append(val)
        for val in matvals:
            yield (val[0], val[1]*vecval)

Note that you should use a secondary sort to avoid reading both in memory.
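The two slides above stop at Reduce 1, whose output is the set of products $A_{ik} x_k$ keyed on row $i$. A minimal sketch of the whole computation, simulated in memory with plain Python 3 functions, might look like the following. It assumes a second pass that sums the products per row; the helpers `run_stage`, `sum_reduce`, and `matvec` are hypothetical names introduced for illustration, and the input format (a row index followed by column/value pairs for the matrix, and "index value" for the vector) is inferred from the mapper code.

```python
from collections import defaultdict

def join_map(line):
    """Map 1: key vector entries by index and matrix entries by column."""
    vals = line.split()
    if len(vals) == 2:
        # vector line: "k x_k"
        yield vals[0], (float(vals[1]),)
    else:
        # matrix line: "i j1 A_ij1 j2 A_ij2 ..."
        row = vals[0]
        for i in range(1, len(vals), 2):
            yield vals[i], (row, float(vals[i + 1]))

def join_reduce(key, vals):
    """Reduce 1: for column k, emit A_ik * x_k keyed on row i."""
    vecval = 0.0
    matvals = []
    for val in vals:
        if len(val) == 1:
            vecval += val[0]
        else:
            matvals.append(val)
    for row, aval in matvals:
        yield row, aval * vecval

def sum_reduce(key, vals):
    """Assumed second pass: y_i is the sum of all products keyed on i."""
    yield key, sum(vals)

def run_stage(records, reducer):
    """Hypothetical stand-in for the shuffle: group by key, then reduce."""
    groups = defaultdict(list)
    for key, val in records:
        groups[key].append(val)
    out = []
    for key in sorted(groups):
        out.extend(reducer(key, groups[key]))
    return out

def matvec(lines):
    mapped = [kv for line in lines for kv in join_map(line)]
    partial = run_stage(mapped, join_reduce)
    return dict(run_stage(partial, sum_reduce))

# A = [[1, 2], [0, 3]] stored by rows, followed by x = [10, 100]
lines = ["0 0 1.0 1 2.0", "1 1 3.0", "0 10.0", "1 100.0"]
y = matvec(lines)
```

Like the slide code, `join_reduce` buffers every matrix entry of a column in memory before it multiplies, which is what the secondary-sort note is meant to avoid: if the vector entry were guaranteed to arrive first, each product could be emitted as soon as its matrix entry is read.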

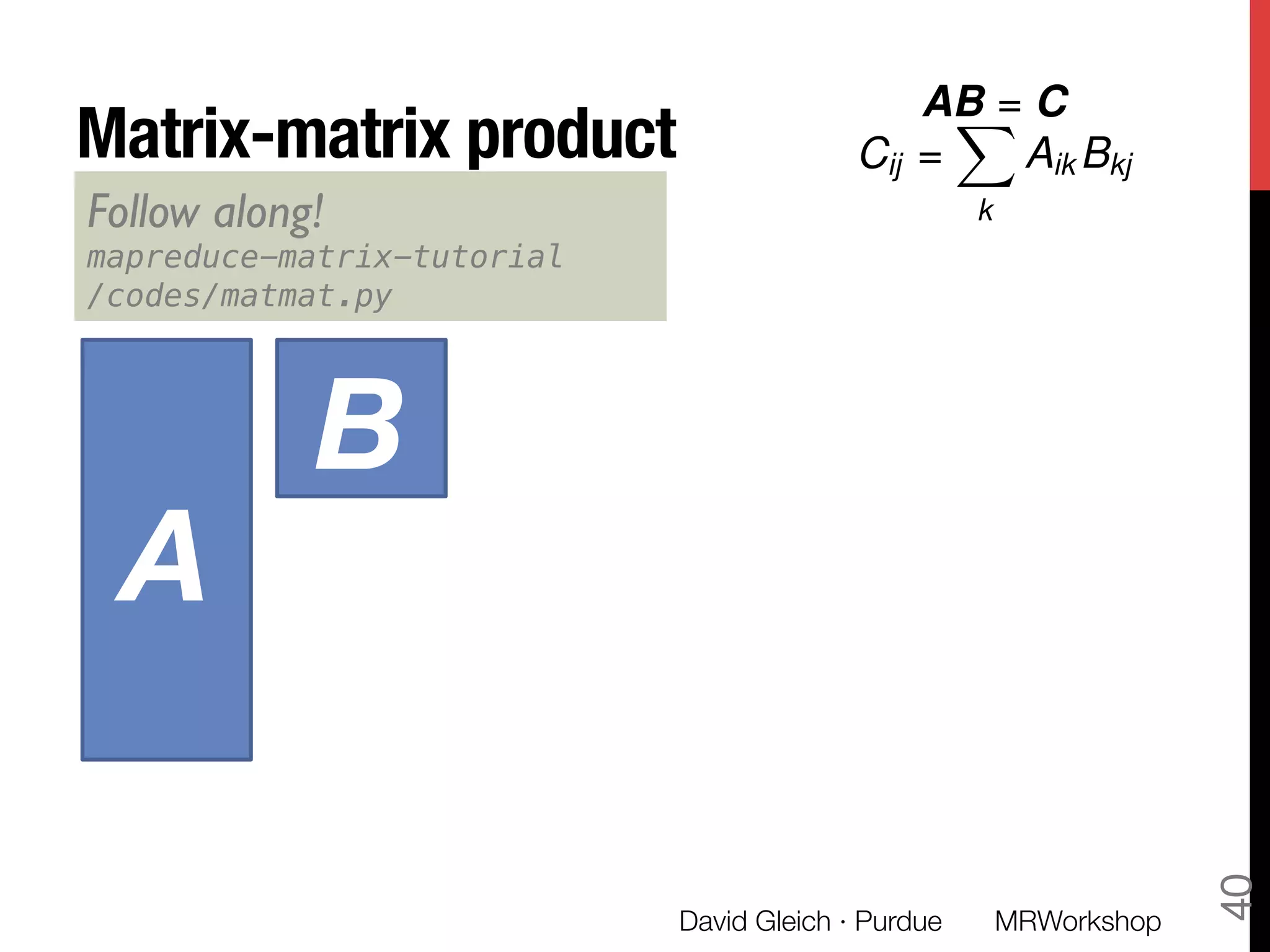

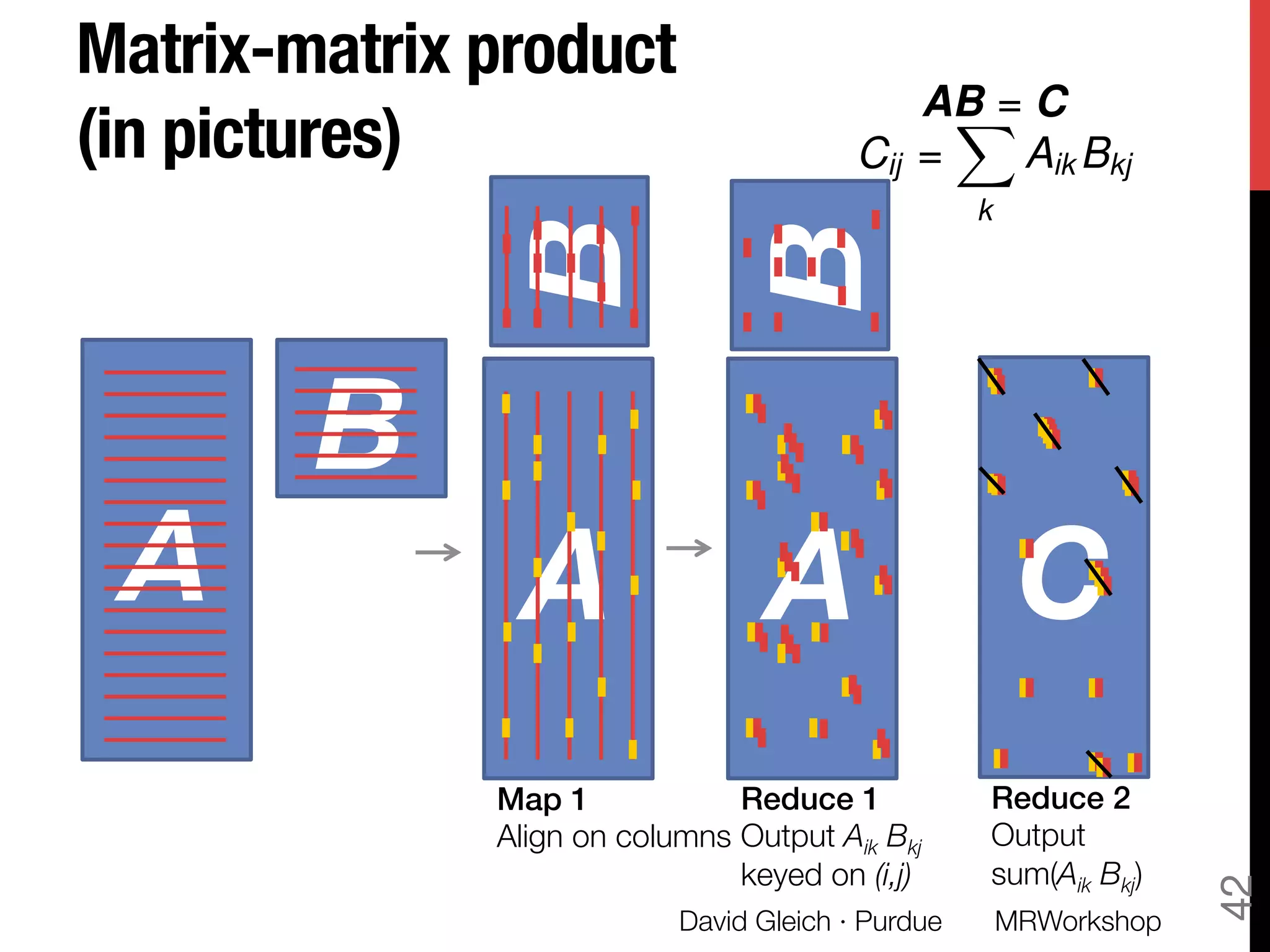

![Slide 43: Matrix-matrix product (in code)](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-43-2048.jpg)

Matrix-matrix product (in code)

$$AB = C, \qquad C_{ij} = \sum_k A_{ik} B_{kj}$$

Input: the matrices A and B.

Map 1: align on columns.

    def joinmap(self, key, line):
        mtype = self.parsemat()
        vals = line.split()
        row = vals[0]
        rowvals = [(vals[i], float(vals[i+1]))
                   for i in xrange(1, len(vals), 2)]
        if mtype == 1:
            # matrix A, output by col
            for val in rowvals:
                yield (val[0], (row, val[1]))
        else:
            yield (row, (rowvals,))

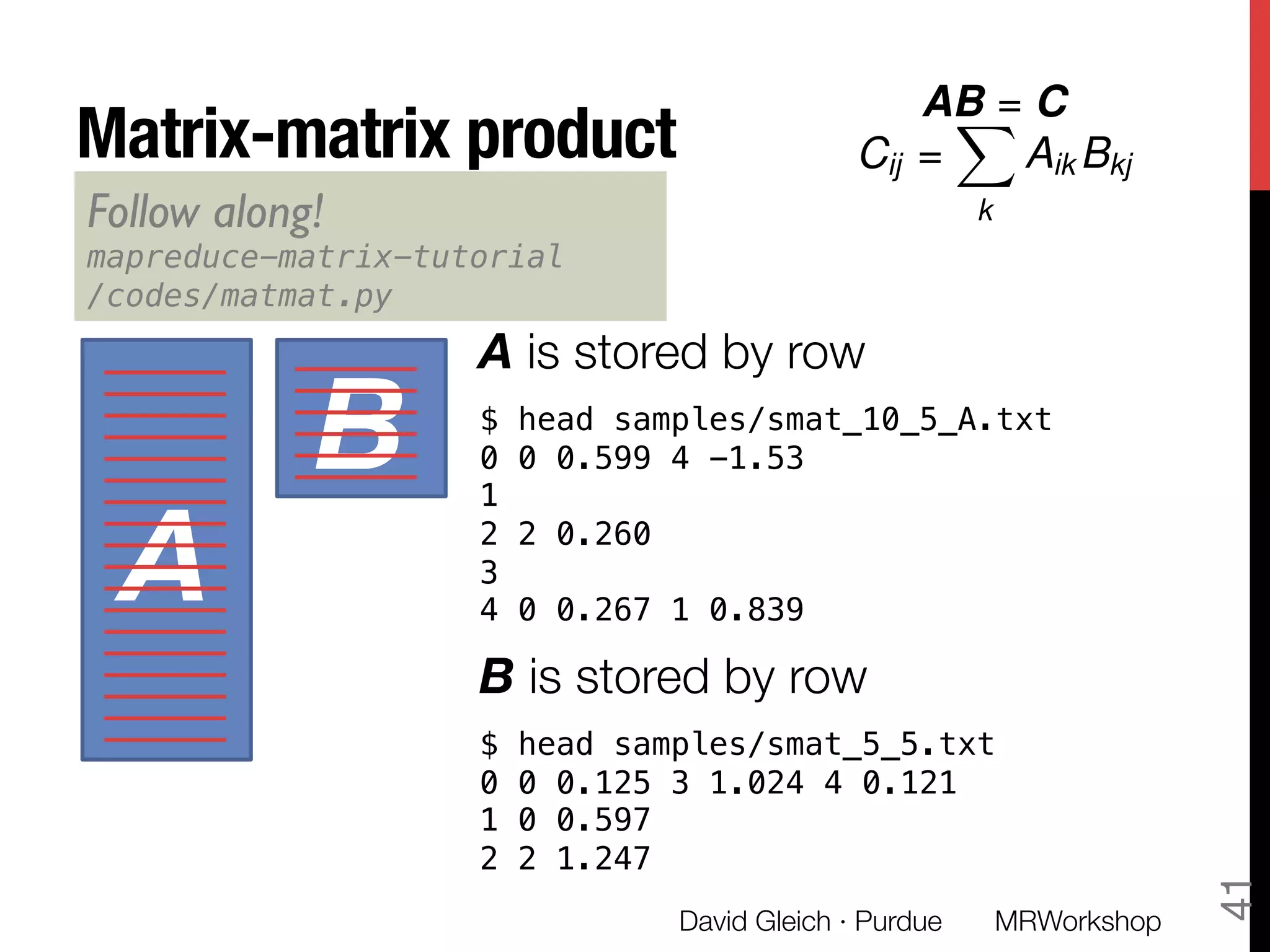

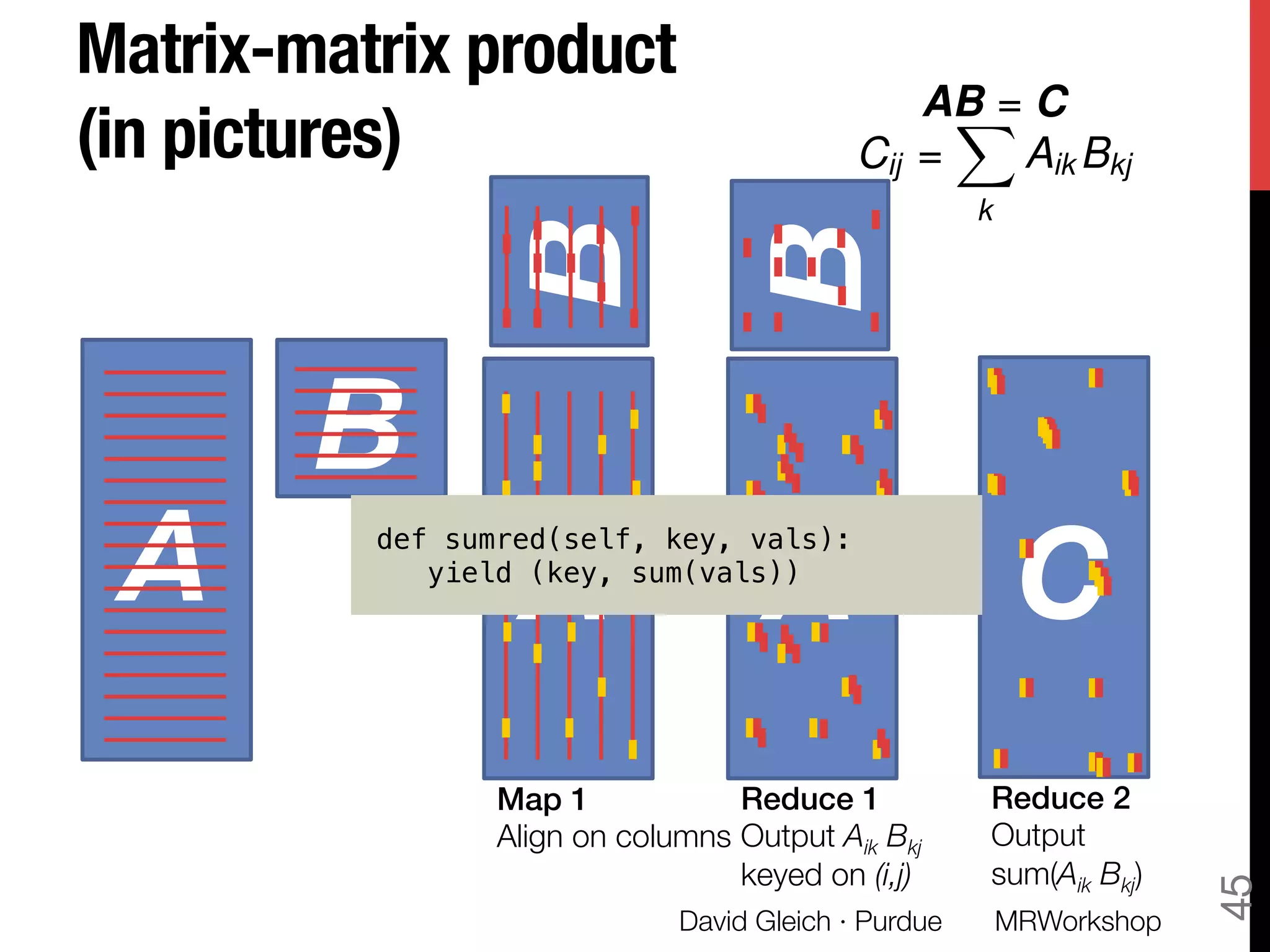

![Slide 44: Matrix-matrix product (in code)](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-44-2048.jpg)

Matrix-matrix product (in code), continued

For $AB = C$ with $C_{ij} = \sum_k A_{ik} B_{kj}$: after Map 1 aligns A on columns and B on rows, Reduce 1 outputs $A_{ik} B_{kj}$ keyed on $(i, j)$.

    def joinred(self, key, vals):
        # load the data into memory
        brow = []
        acol = []
        for val in vals:
            if len(val) == 1:
                # a row of B, wrapped in a 1-tuple by the mapper
                brow.extend(val[0])
            else:
                # an entry (row, value) from a column of A
                acol.append(val)

        for (bcol, bval) in brow:
            for (arow, aval) in acol:
                yield ((arow, bcol), aval*bval)
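As with the matrix-vector case, Reduce 1 emits individual products $A_{ik} B_{kj}$ rather than finished entries of C, so a further pass that sums per $(i, j)$ key is assumed below. This is a minimal in-memory sketch in Python 3, not the author's job script. The slide's `self.parsemat()` is replaced by an explicit `mtype` argument, and `run_stage`, `sum_reduce`, and `matmul` are hypothetical helpers introduced for illustration.

```python
from collections import defaultdict

def join_map(line, mtype):
    """Map 1: key entries of A by column and whole rows of B by row."""
    vals = line.split()
    row = vals[0]
    rowvals = [(vals[i], float(vals[i + 1])) for i in range(1, len(vals), 2)]
    if mtype == 1:
        # matrix A: one record per nonzero, keyed on its column k
        for col, aval in rowvals:
            yield col, (row, aval)
    else:
        # matrix B: the whole row k, wrapped in a 1-tuple to mark its origin
        yield row, (rowvals,)

def join_reduce(key, vals):
    """Reduce 1: for index k, emit A_ik * B_kj keyed on (i, j)."""
    brow, acol = [], []
    for val in vals:
        if len(val) == 1:
            brow.extend(val[0])
        else:
            acol.append(val)
    for bcol, bval in brow:
        for arow, aval in acol:
            yield (arow, bcol), aval * bval

def sum_reduce(key, vals):
    """Assumed second pass: C_ij is the sum of all products keyed on (i, j)."""
    yield key, sum(vals)

def run_stage(records, reducer):
    """Hypothetical stand-in for the shuffle: group by key, then reduce."""
    groups = defaultdict(list)
    for key, val in records:
        groups[key].append(val)
    out = []
    for key in sorted(groups):
        out.extend(reducer(key, groups[key]))
    return out

def matmul(a_lines, b_lines):
    mapped = [kv for line in a_lines for kv in join_map(line, 1)]
    mapped += [kv for line in b_lines for kv in join_map(line, 2)]
    partial = run_stage(mapped, join_reduce)
    return dict(run_stage(partial, sum_reduce))

# A = [[1, 2], [0, 3]] and B = [[4, 0], [5, 6]], both stored by rows
C = matmul(["0 0 1.0 1 2.0", "1 1 3.0"], ["0 0 4.0", "1 0 5.0 1 6.0"])
```

The 1-tuple around a row of B plays the same role as the 1-tuple around a vector entry in the matrix-vector mapper: the reducer uses the tuple length to tell the two inputs apart.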

![Slide 49: Error analysis of summation](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-49-2048.jpg)

Error analysis of summation

    s = 0; for i=1 to n: s = s + x[i]

A simple summation formula has error that is not always small if n is a billion.

$$\mathrm{fl}(x + y) = (x + y)(1 + \delta), \qquad |\delta| \le \mu$$

$$\left| \mathrm{fl}\Big(\sum_i x_i\Big) - \sum_i x_i \right| \le n\mu \sum_i |x_i|, \qquad \mu \approx 10^{-16}$$
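To make the bound concrete, the following sketch runs the simple summation loop on one million copies of 0.1 in IEEE double precision and compares the observed error with $n\mu \sum_i |x_i|$. The reference value comes from `math.fsum`, which returns a correctly rounded sum of the stored doubles. The test data and the choice $\mu = 2^{-53}$ (about $1.1 \times 10^{-16}$) are assumptions made for illustration, not values taken from the slides.

```python
import math

def naive_sum(xs):
    """The simple loop: s = 0; for each x: s = s + x."""
    s = 0.0
    for x in xs:
        s = s + x
    return s

n = 10**6
xs = [0.1] * n

approx = naive_sum(xs)
exact = math.fsum(xs)          # correctly rounded reference sum
err = abs(approx - exact)

mu = 2.0 ** -53                # assumed unit roundoff for IEEE doubles
bound = n * mu * math.fsum(abs(x) for x in xs)

print("observed error:", err)
print("bound n*mu*sum|x_i|:", bound)
```

The bound grows linearly with n, which is why the slide singles out sums with a billion terms.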

![Slide 51: Compensated Summation](https://image.slidesharecdn.com/sparse-matrices-mapreduce-gleich-slides-only-130429112802-phpapp01/75/Sparse-matrix-computations-in-MapReduce-51-2048.jpg)

Compensated Summation

See "Kahan summation algorithm" on Wikipedia.

    s = 0.; c = 0.;
    for i=1 to n:
        y = x[i] - c
        t = s + y
        c = (t - s) - y
        s = t

Mathematically, c is always zero. On a computer, c can be non-zero. The parentheses matter!

$$\left| \mathrm{fl}(\mathrm{csum}(x)) - \sum_i x_i \right| \le (\mu + n\mu^2) \sum_i |x_i|, \qquad \mu \approx 10^{-16}$$
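The pseudocode above translates almost line for line into Python. The sketch below is one such translation, compared against the simple loop on an assumed test input of one million copies of 0.1, with `math.fsum` as the reference. The function names and the test data are illustrative choices, not part of the slides.

```python
import math

def kahan_sum(xs):
    """Compensated summation: c carries the rounding error of each addition."""
    s = 0.0
    c = 0.0
    for x in xs:
        y = x - c          # apply the correction from the previous step
        t = s + y          # low-order bits of y may be lost here
        c = (t - s) - y    # recover what was lost; the parentheses matter
        s = t
    return s

def naive_sum(xs):
    s = 0.0
    for x in xs:
        s = s + x
    return s

xs = [0.1] * 10**6
exact = math.fsum(xs)      # correctly rounded reference sum

kahan_err = abs(kahan_sum(xs) - exact)
naive_err = abs(naive_sum(xs) - exact)

print("naive error:", naive_err)
print("compensated error:", kahan_err)
```

Rewriting `c = (t - s) - y` as the algebraically equal `c = t - (s + y)` would always give zero in floating point, because `t` is by definition the rounded value of `s + y`; this is the sense in which the parentheses matter.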