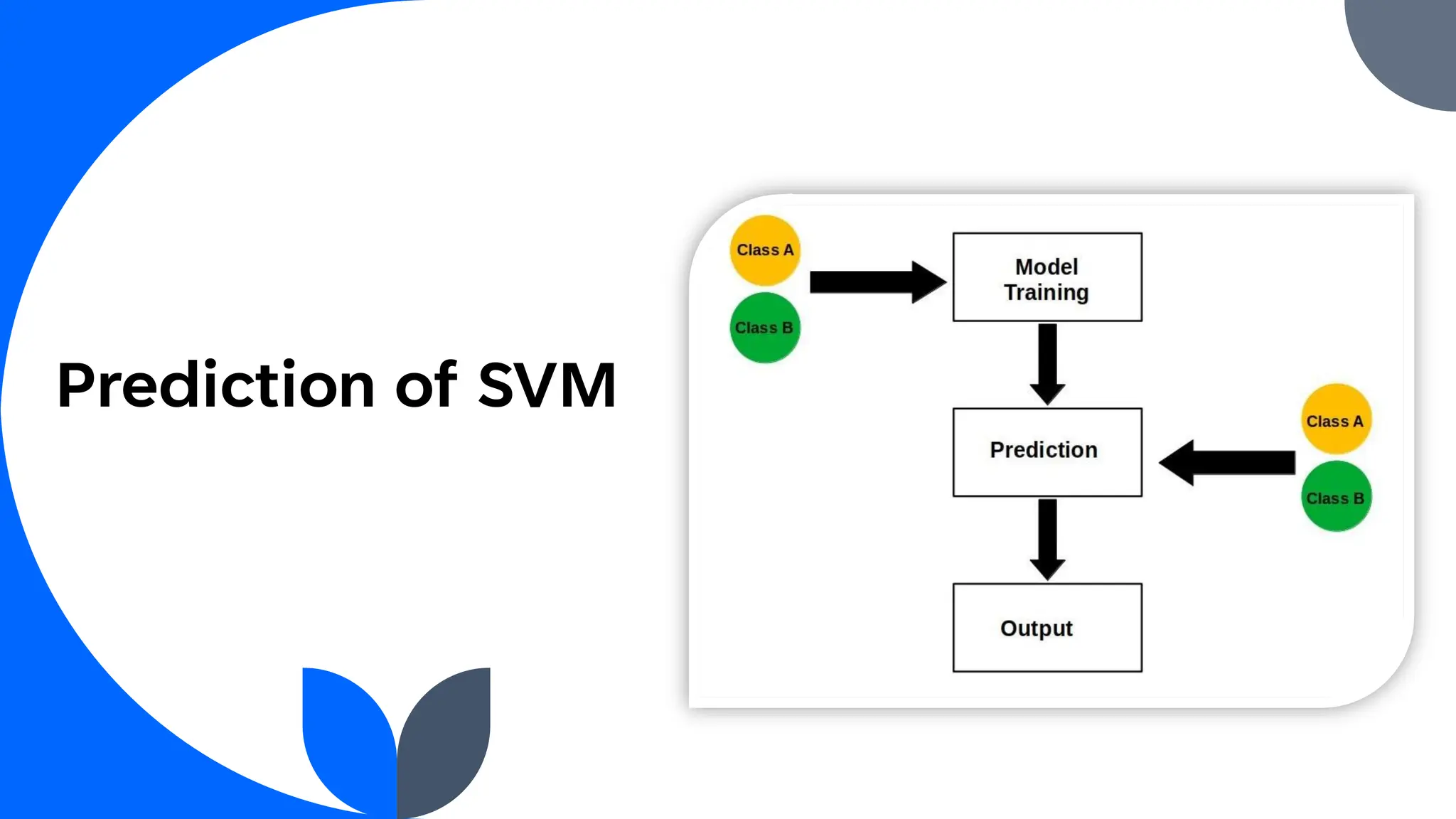

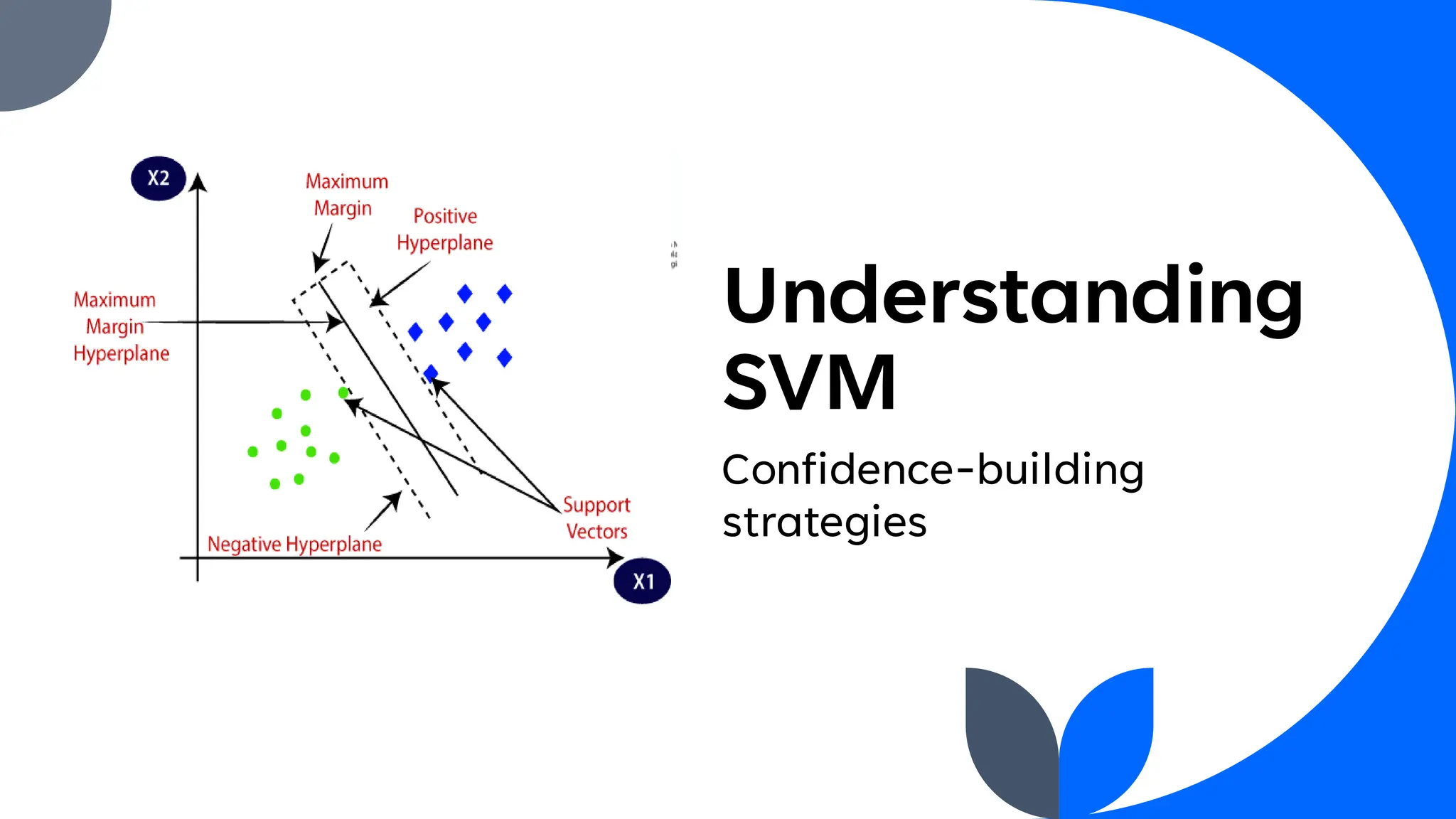

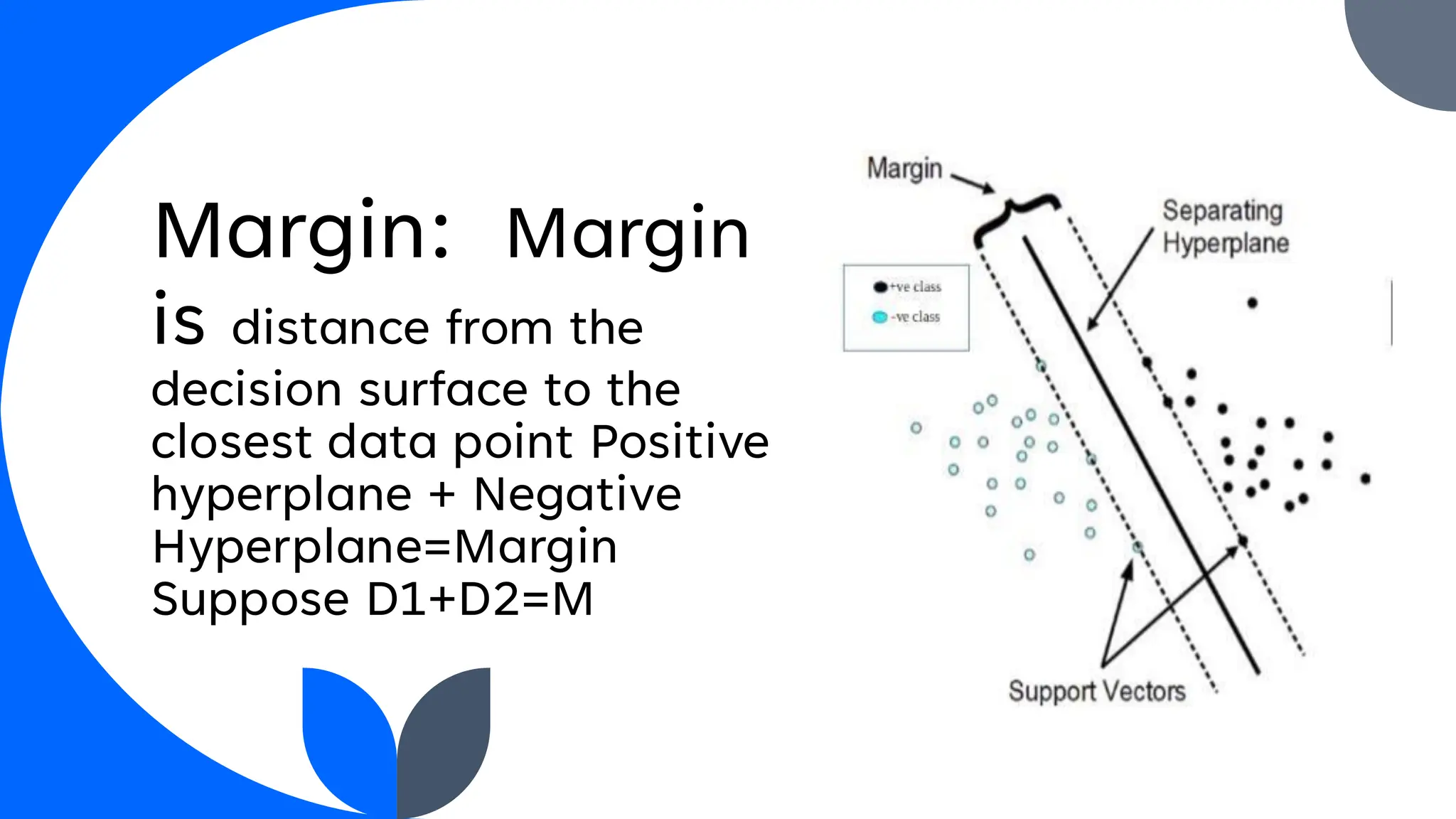

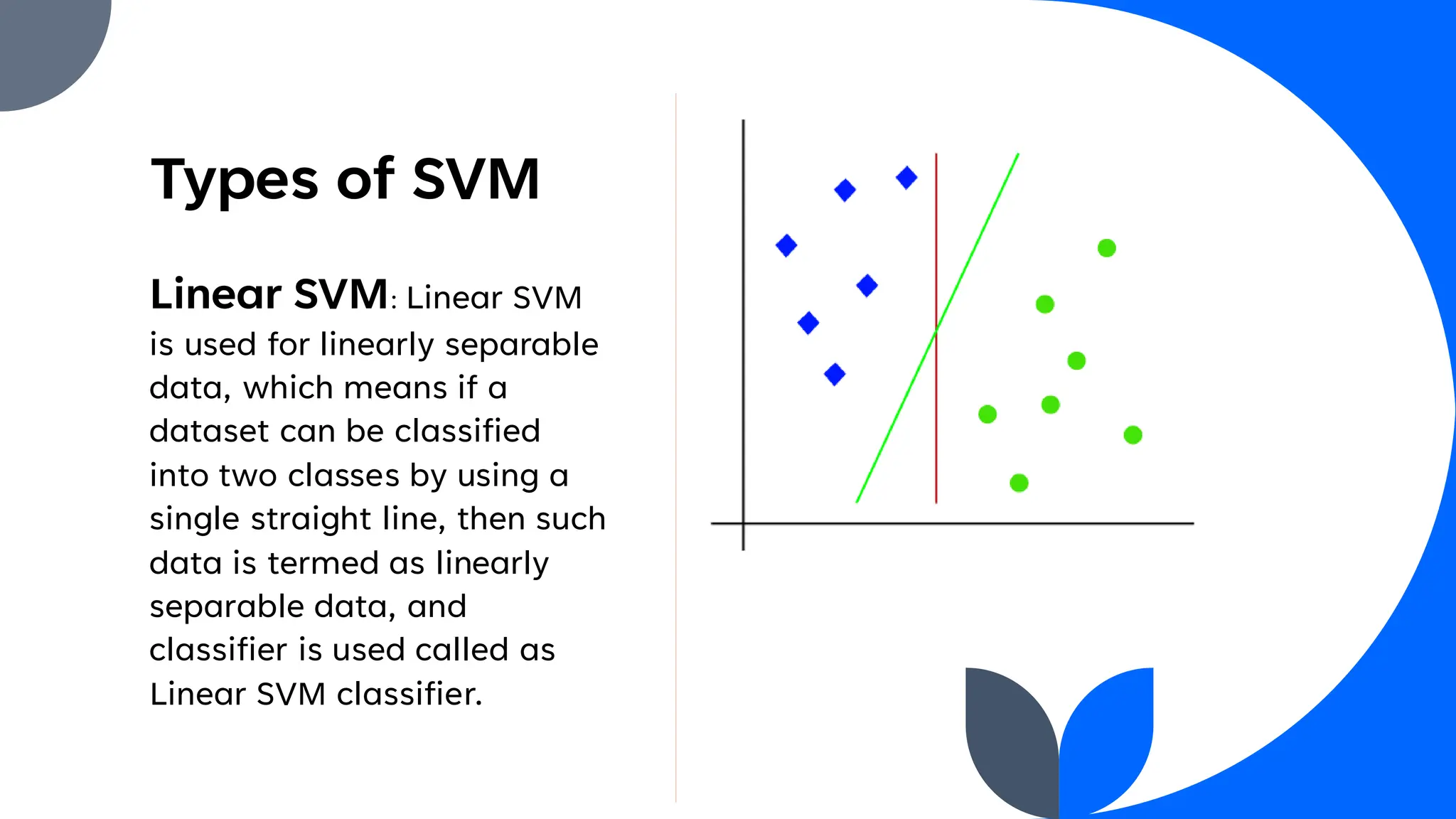

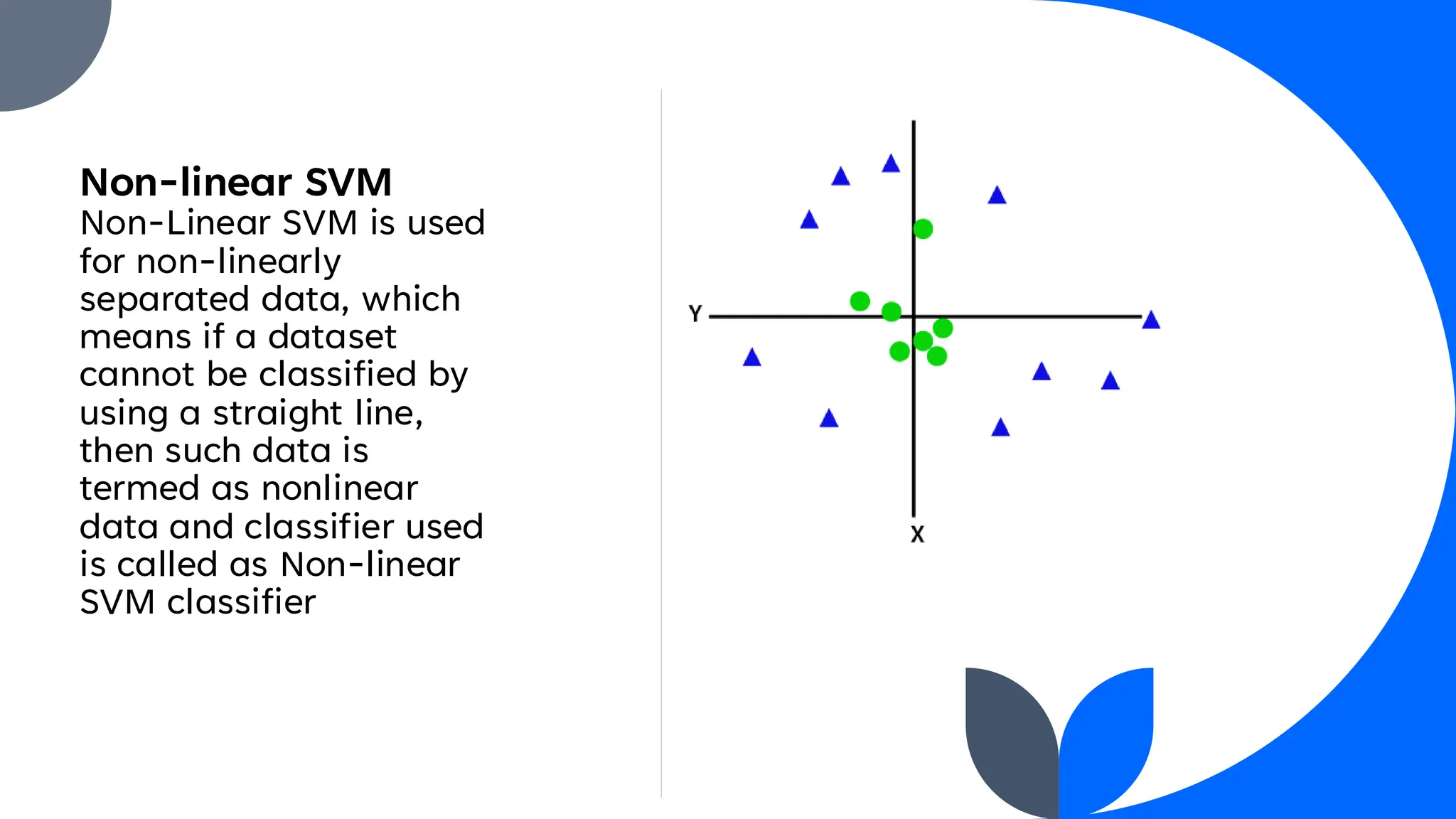

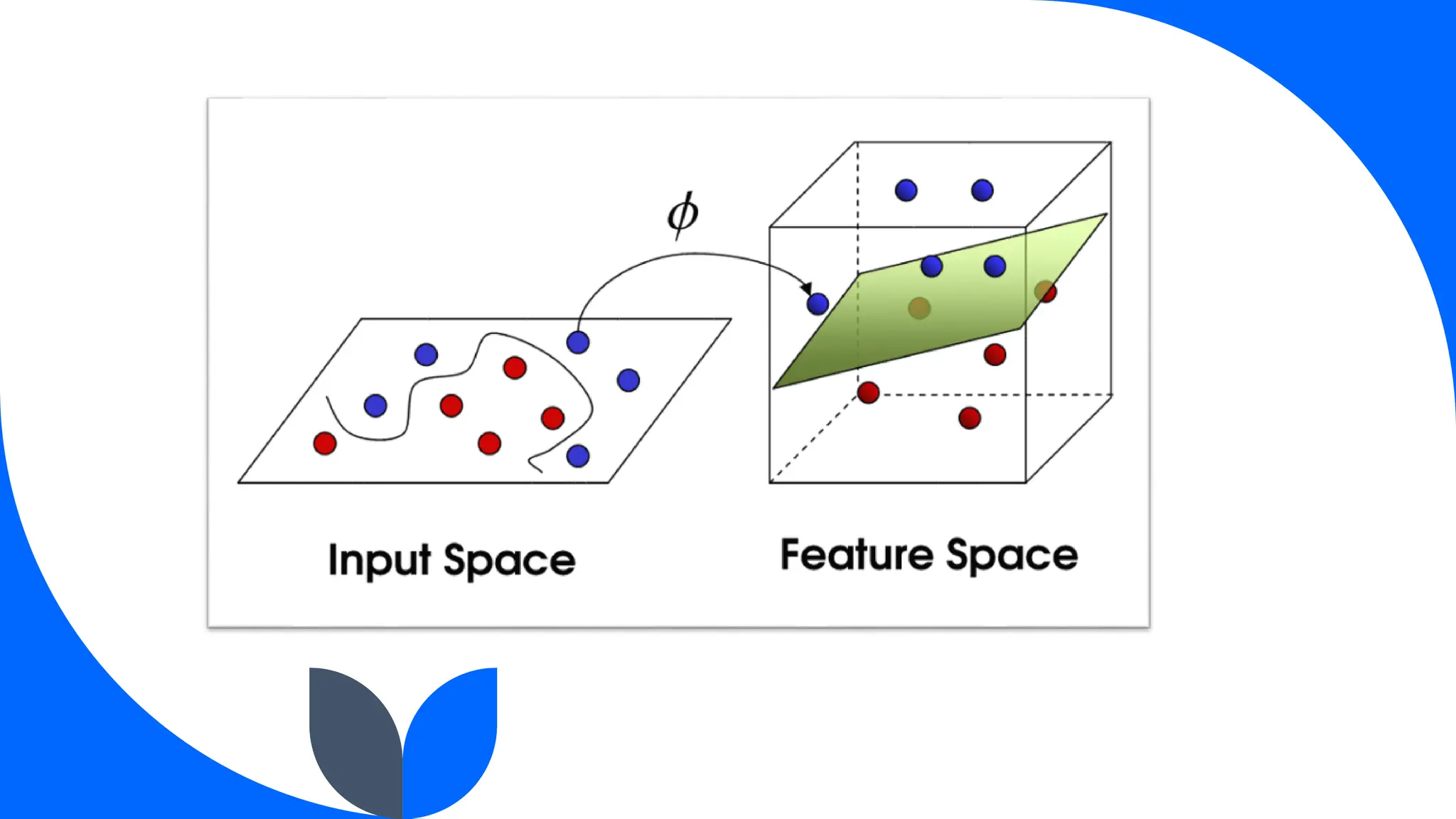

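A Support Vector Machine (SVM) is a widely used supervised learning algorithm. It can be applied to both classification and regression, but it is used mainly for classification. An SVM finds the optimal hyperplane, meaning the one that separates the classes with the largest possible margin. The support vectors are the training points closest to that hyperplane, and they alone determine where it lies. With the kernel trick, SVMs can handle non-linearly separable data as well as linearly separable data. However, they can be slow to train on large datasets, because the training time of kernel SVMs grows at least quadratically with the number of samples, and they may struggle when the data is very noisy and the classes overlap.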
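The ideas above (margin, hyperplane, support vectors, kernel trick) can be illustrated with a small, dependency-free sketch. It assumes labels in {-1, +1} and trains a soft-margin linear SVM by Pegasos-style stochastic subgradient descent on the hinge loss. This training method is a swapped-in choice for brevity; production libraries typically solve the dual problem instead. It also makes a common simplification by appending a constant 1 to every input, so the bias is regularized along with the weights. All function names (`train_linear_svm`, `decision`, `margins`, `poly2_kernel`, `phi`) and the toy data are hypothetical and for illustration only. The last two functions show the kernel trick in its simplest form: the degree-2 polynomial kernel returns the same value as an inner product in an explicit 3-D feature space, without ever building that space.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def train_linear_svm(X: List[Vector], y: List[int], lam: float = 0.01,
                     epochs: int = 200, seed: int = 0) -> Vector:
    """Soft-margin linear SVM via Pegasos-style stochastic subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * <w, x_i>) with labels
    in {-1, +1}. A constant 1 is appended to each x so the last weight acts as
    the bias (assumption: the bias is regularized too, for simplicity).
    """
    rng = random.Random(seed)
    Xa = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xa[0])
    order = list(range(len(Xa)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)          # Pegasos step-size schedule
            margin = y[i] * dot(w, Xa[i])  # functional margin before the update
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularizer shrinks w
            if margin < 1.0:
                # Hinge loss is active: point is inside the margin or misclassified
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xa[i])]
    return w


def decision(w: Vector, x: Vector) -> float:
    """Signed score; the separating hyperplane is where this equals 0."""
    return dot(w, list(x) + [1.0])


def margins(w: Vector, X: List[Vector], y: List[int]) -> List[float]:
    """y_i * f(x_i); values close to 1 mark points on the margin (support vectors)."""
    return [yi * decision(w, xi) for xi, yi in zip(X, y)]


def poly2_kernel(x: Vector, z: Vector) -> float:
    """Degree-2 homogeneous polynomial kernel k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2


def phi(x: Vector) -> Vector:
    """Explicit feature map for 2-D inputs whose inner product equals poly2_kernel."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]


if __name__ == "__main__":
    # Toy, linearly separable data (illustrative only)
    X = [[2, 2], [3, 3], [2, 3], [3, 2],
         [-2, -2], [-3, -3], [-2, -3], [-3, -2]]
    y = [1, 1, 1, 1, -1, -1, -1, -1]
    w = train_linear_svm(X, y)
    print("weights (w1, w2, bias):", w)
    for xi, mi in zip(X, margins(w, X, y)):
        print(xi, "margin:", round(mi, 3))
    # Kernel trick: the kernel matches the explicit feature-space inner product
    print(poly2_kernel([1, 2], [3, 4]), dot(phi([1, 2]), phi([3, 4])))
```

On this toy data, the points with the smallest margins, [2, 2] and [-2, -2], are the ones that play the role of support vectors. Because subgradient descent only approximates the optimum, their margins come out near 1 rather than exactly 1. A kernelized SVM would replace every `dot(x, z)` between inputs with a kernel such as `poly2_kernel`, which requires solving the dual problem and is beyond this sketch.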