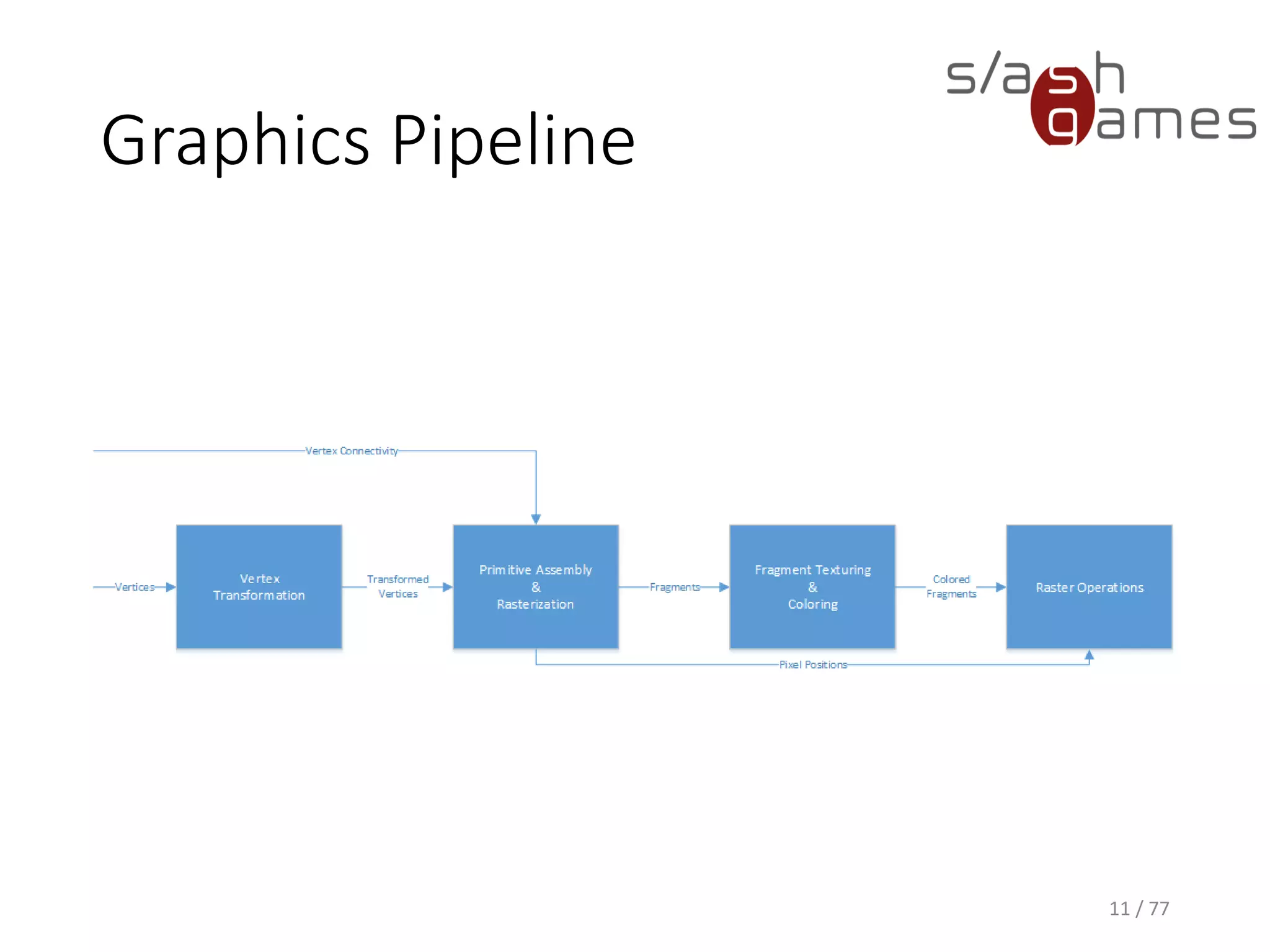

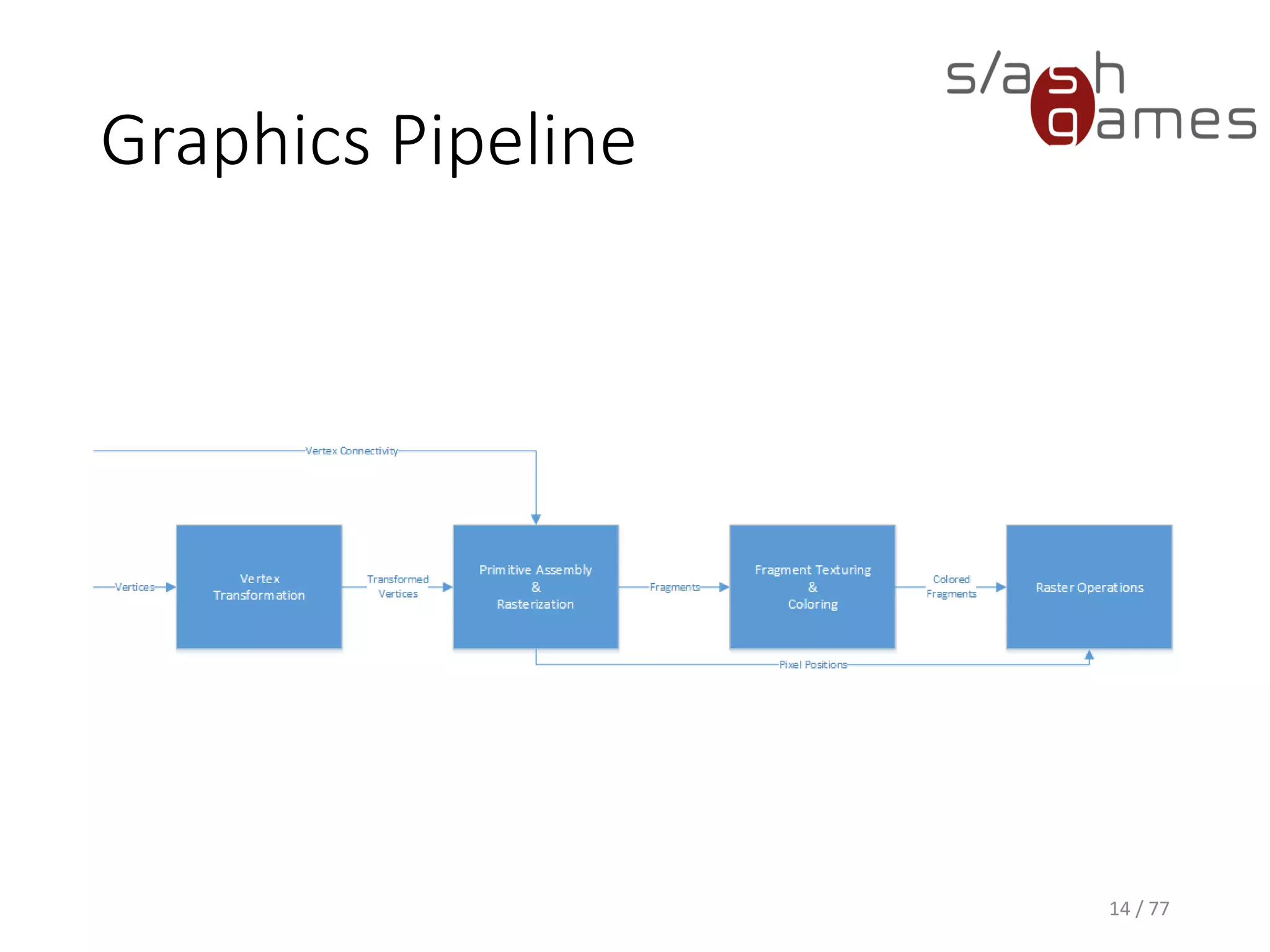

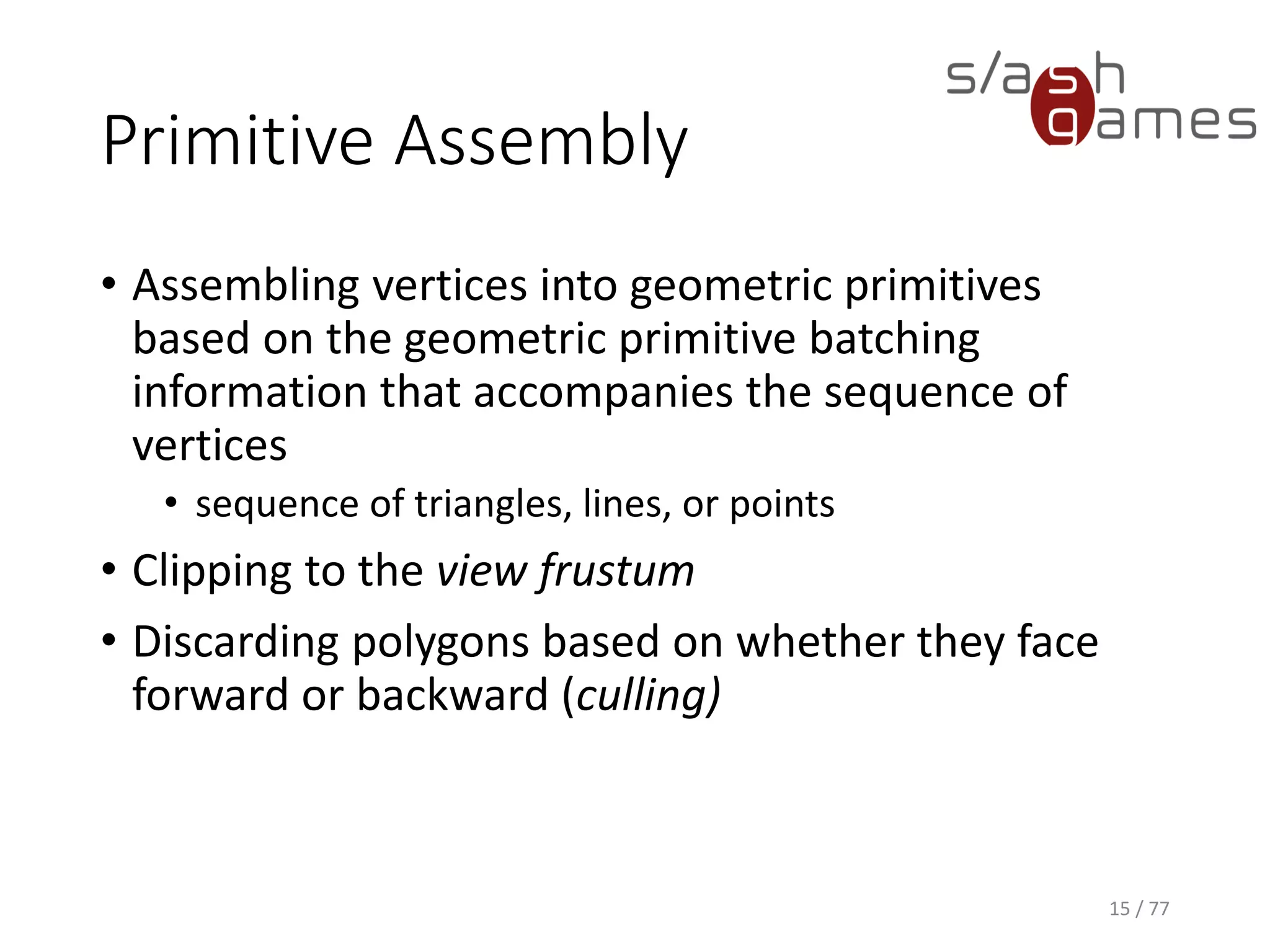

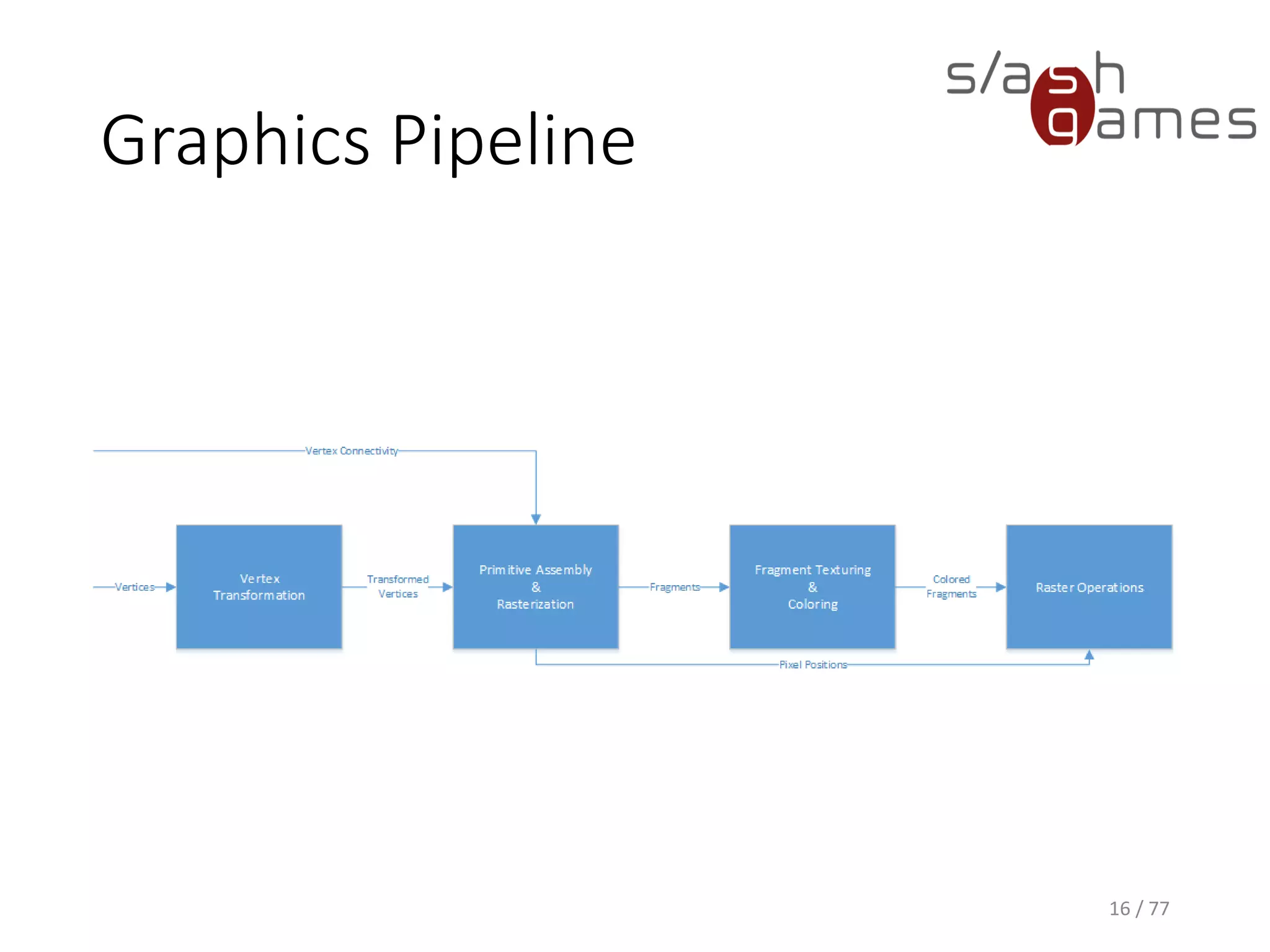

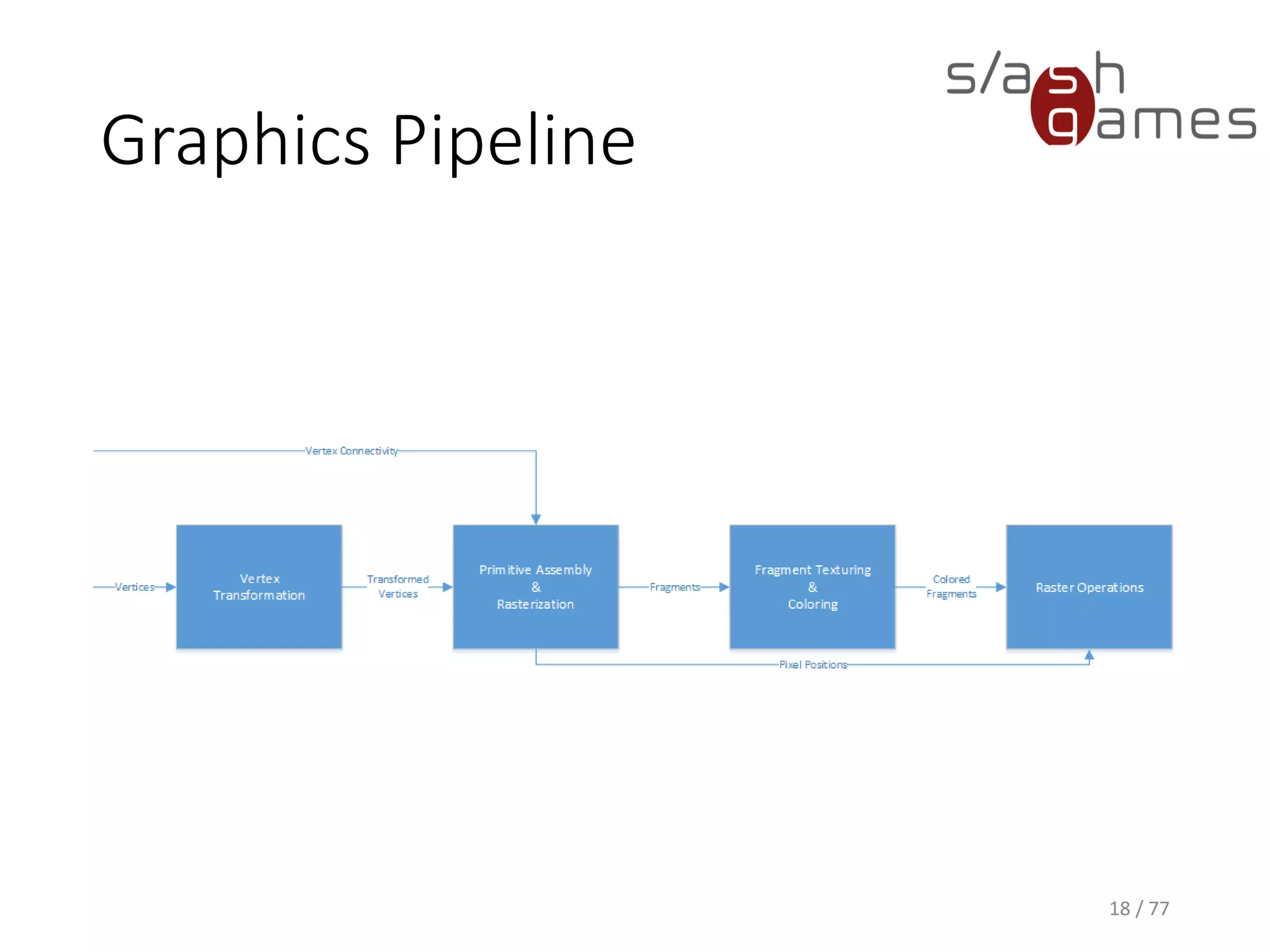

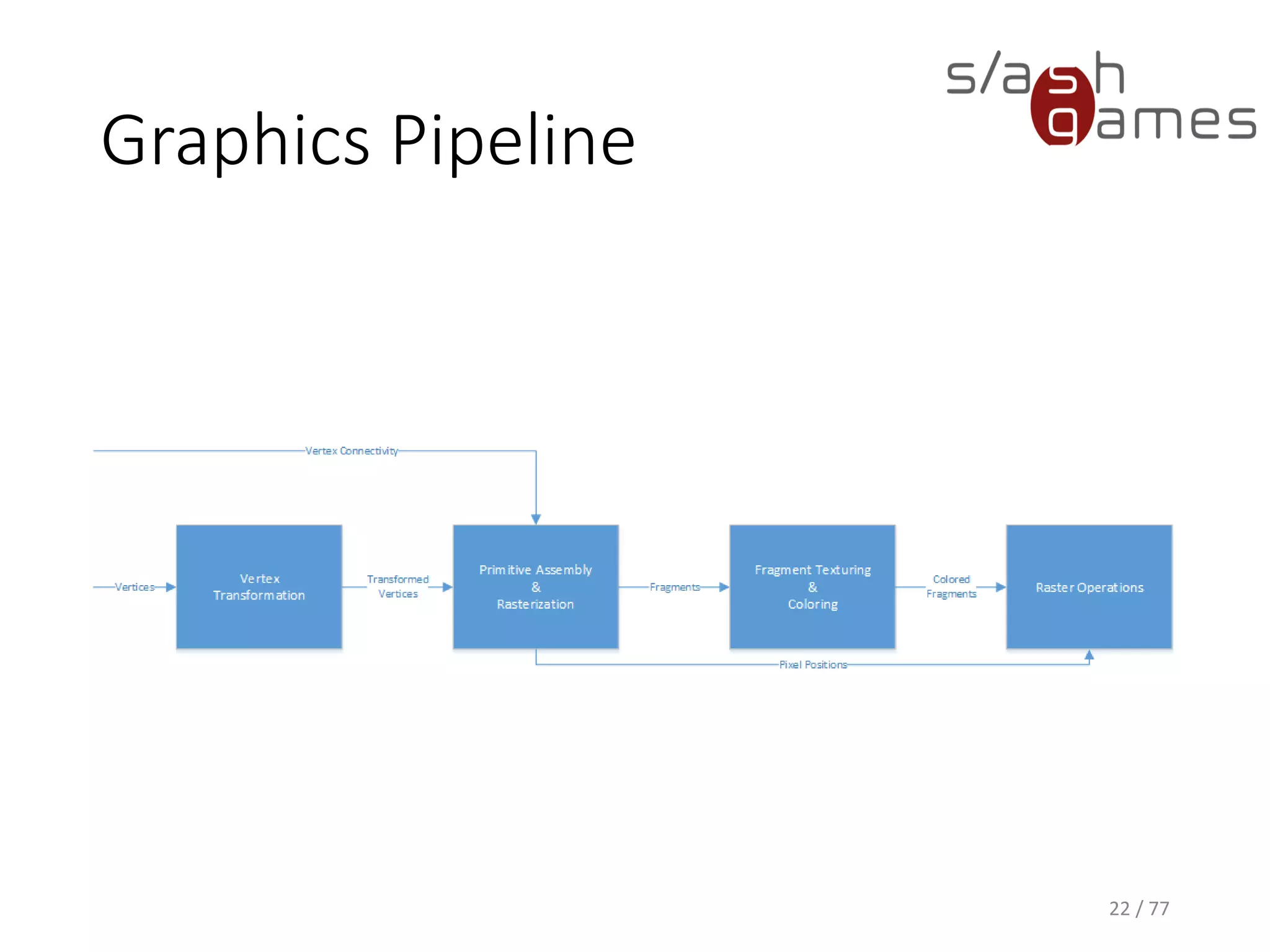

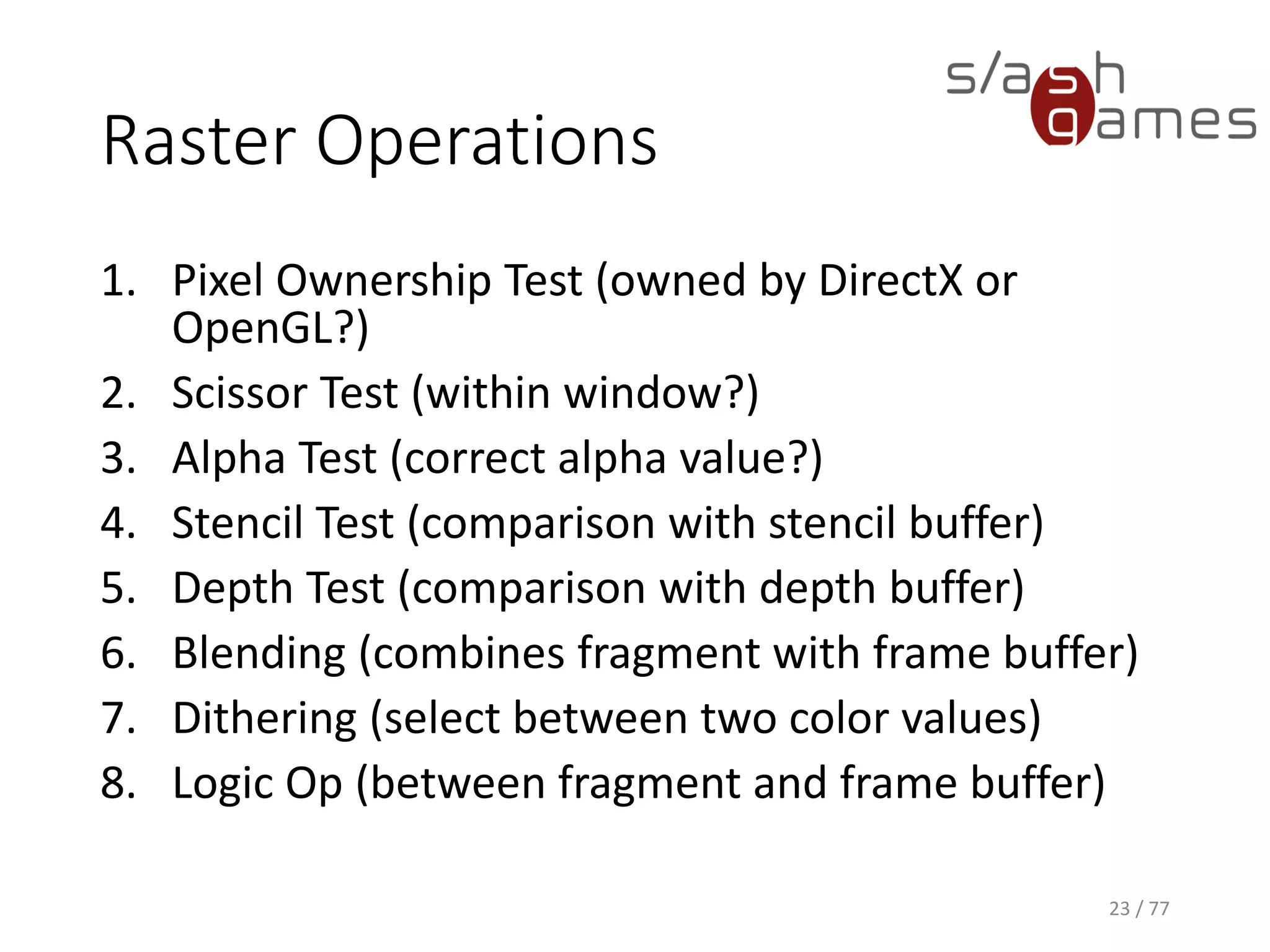

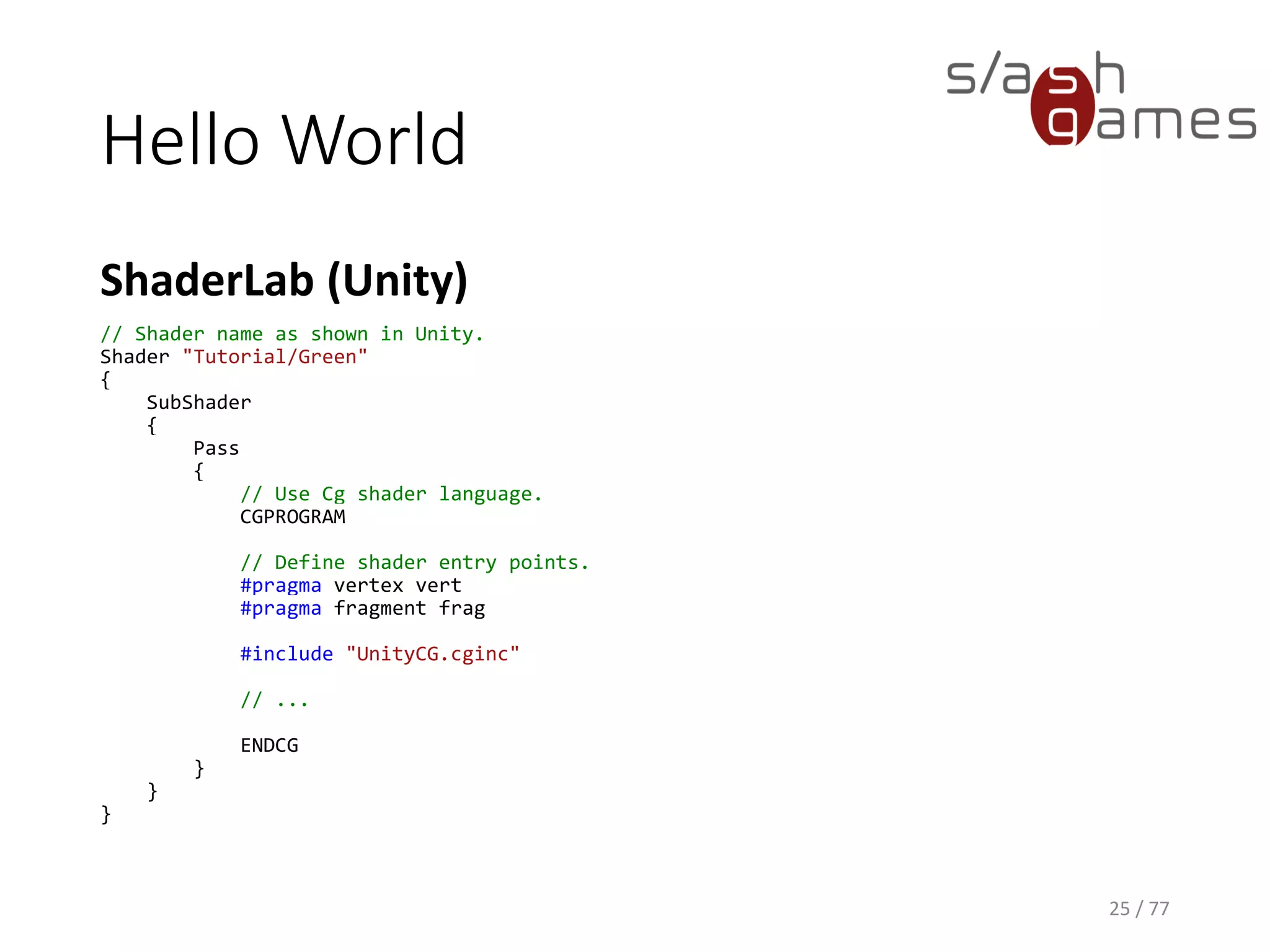

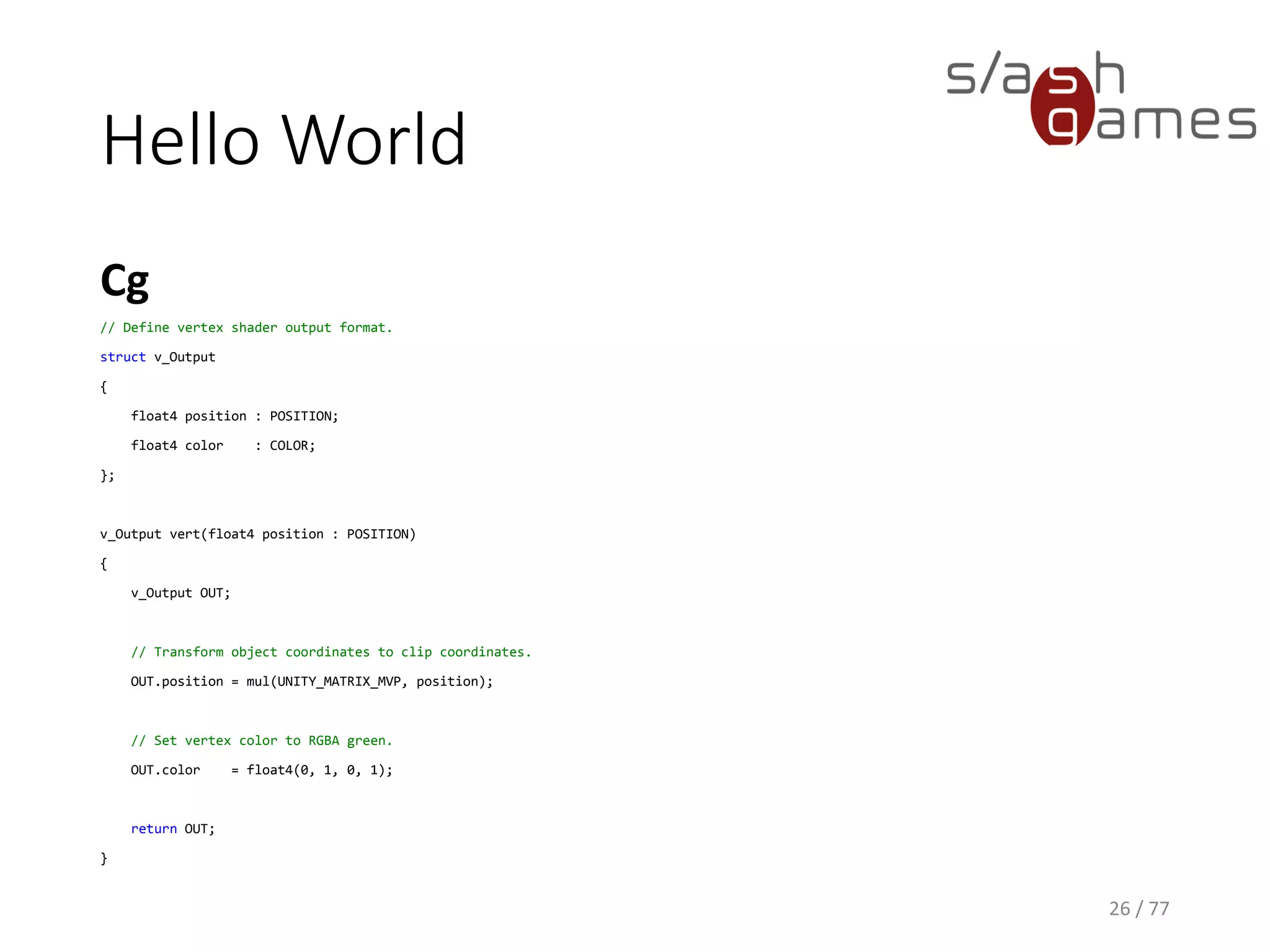

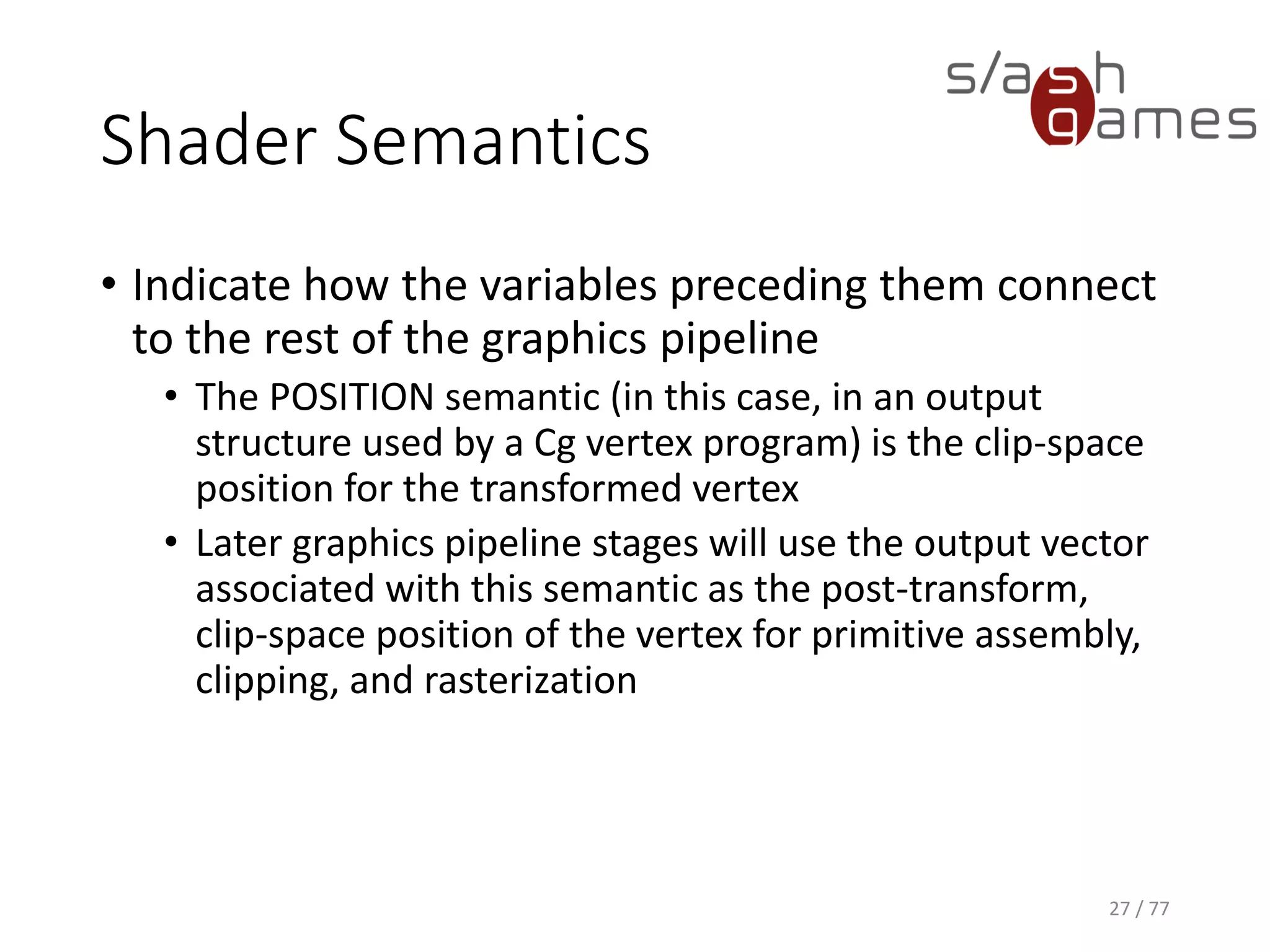

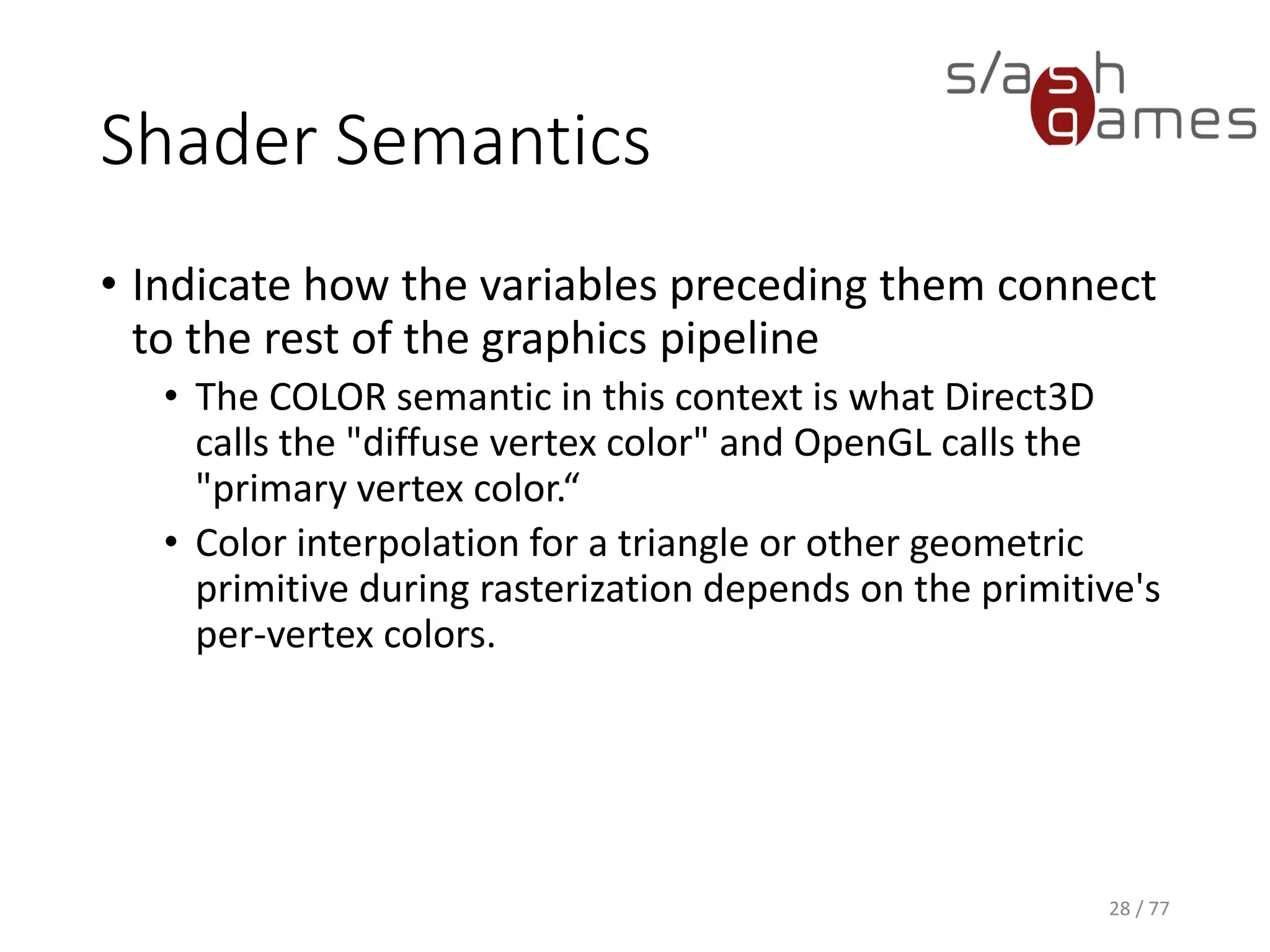

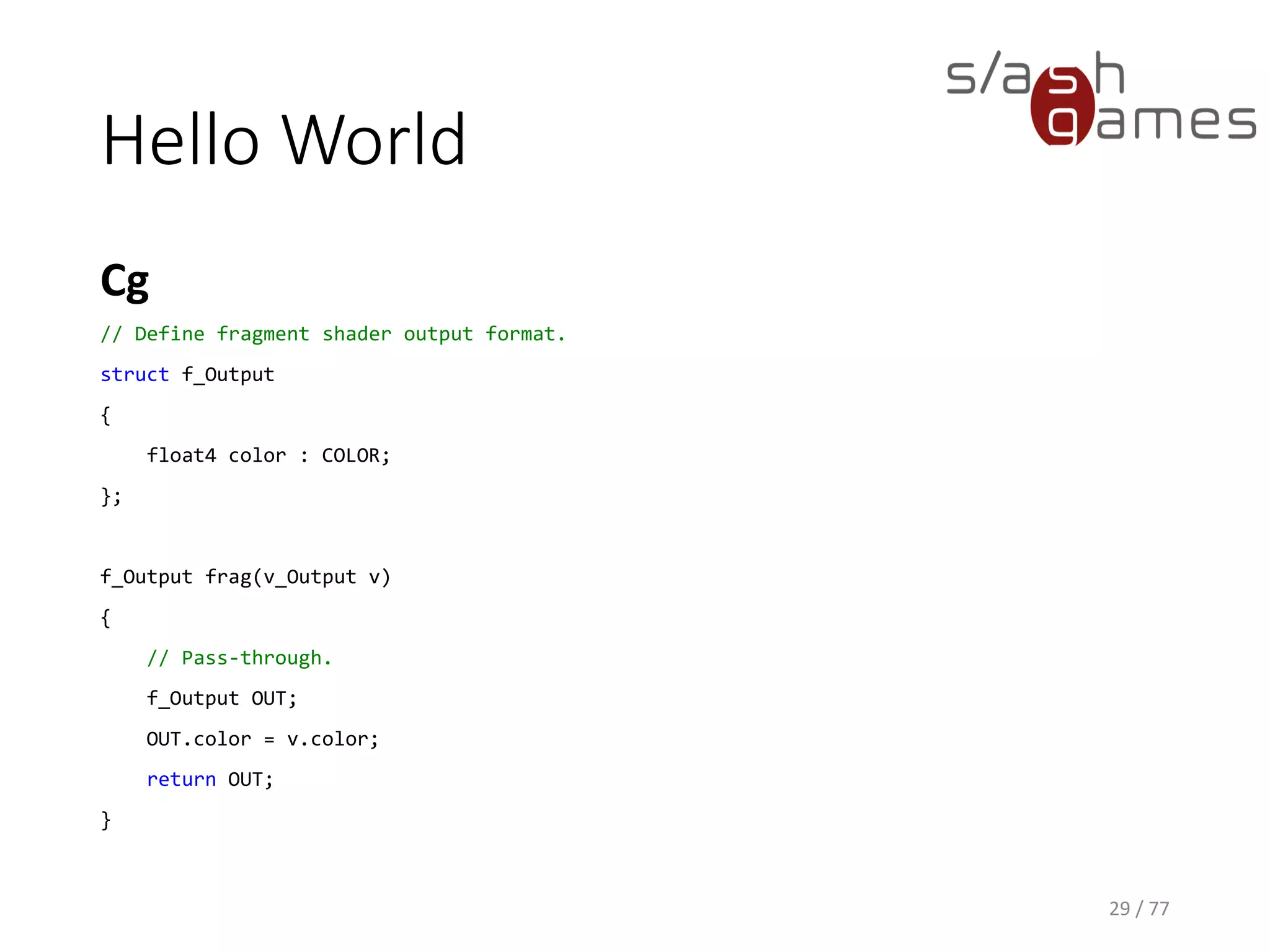

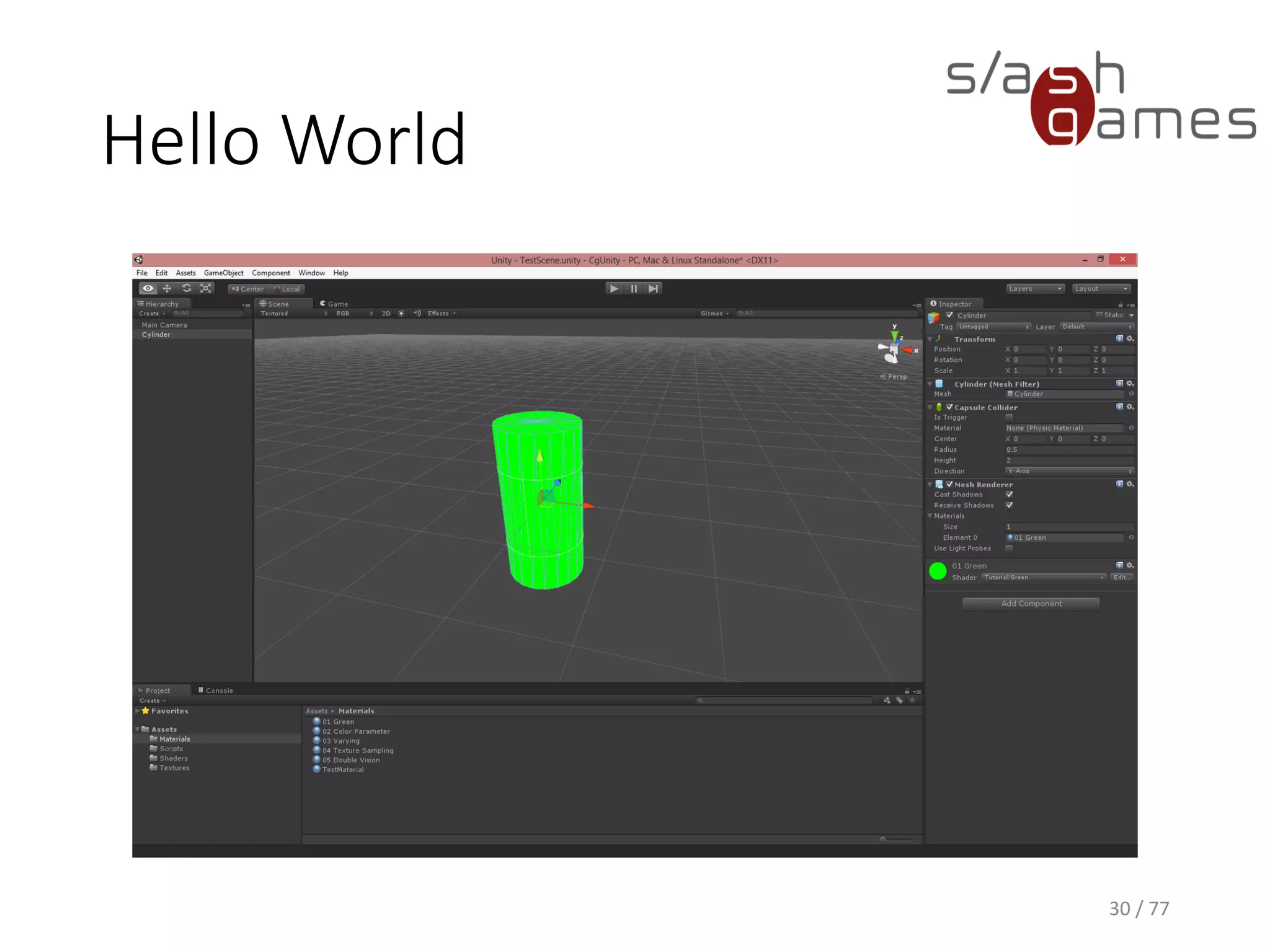

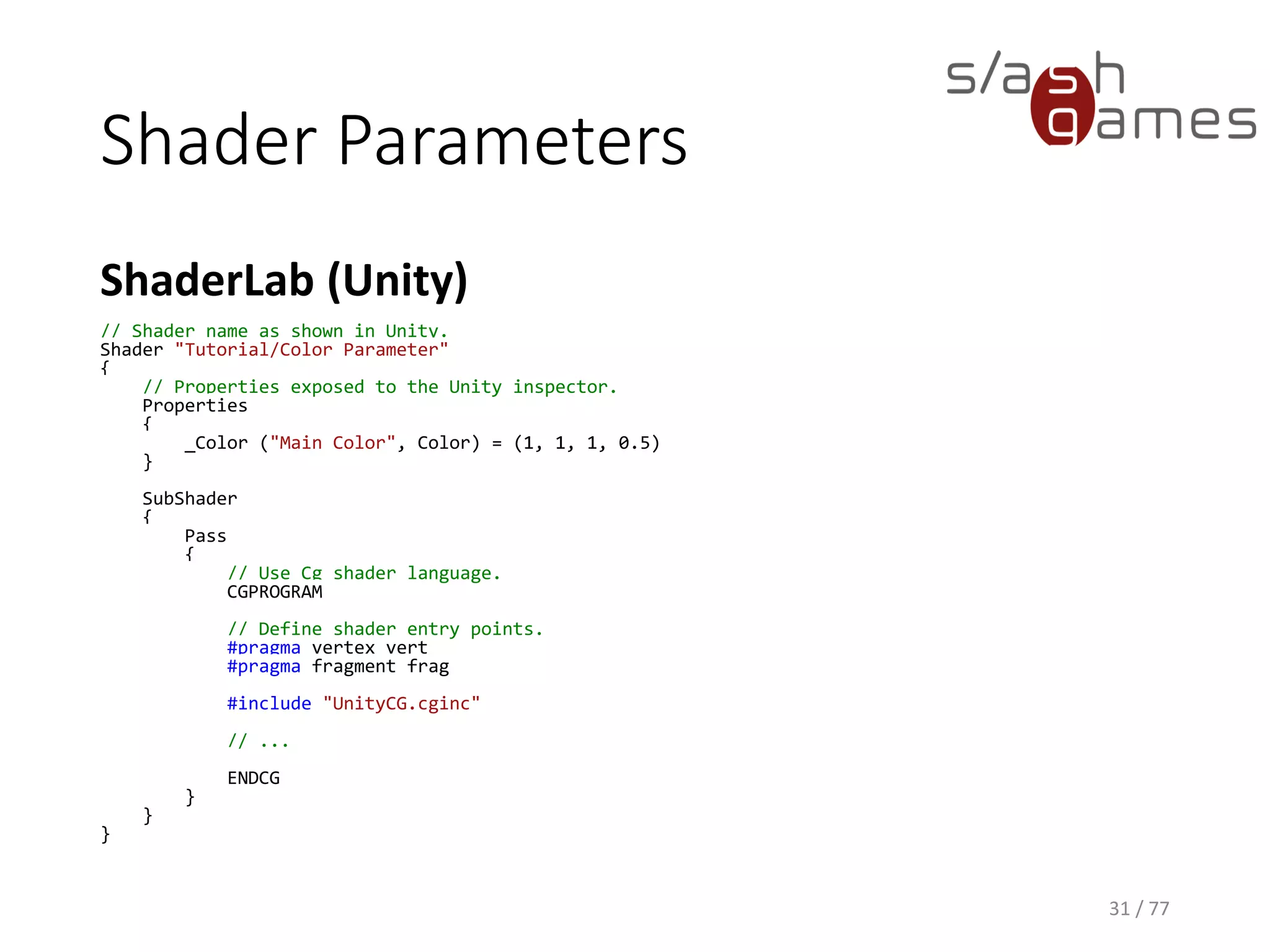

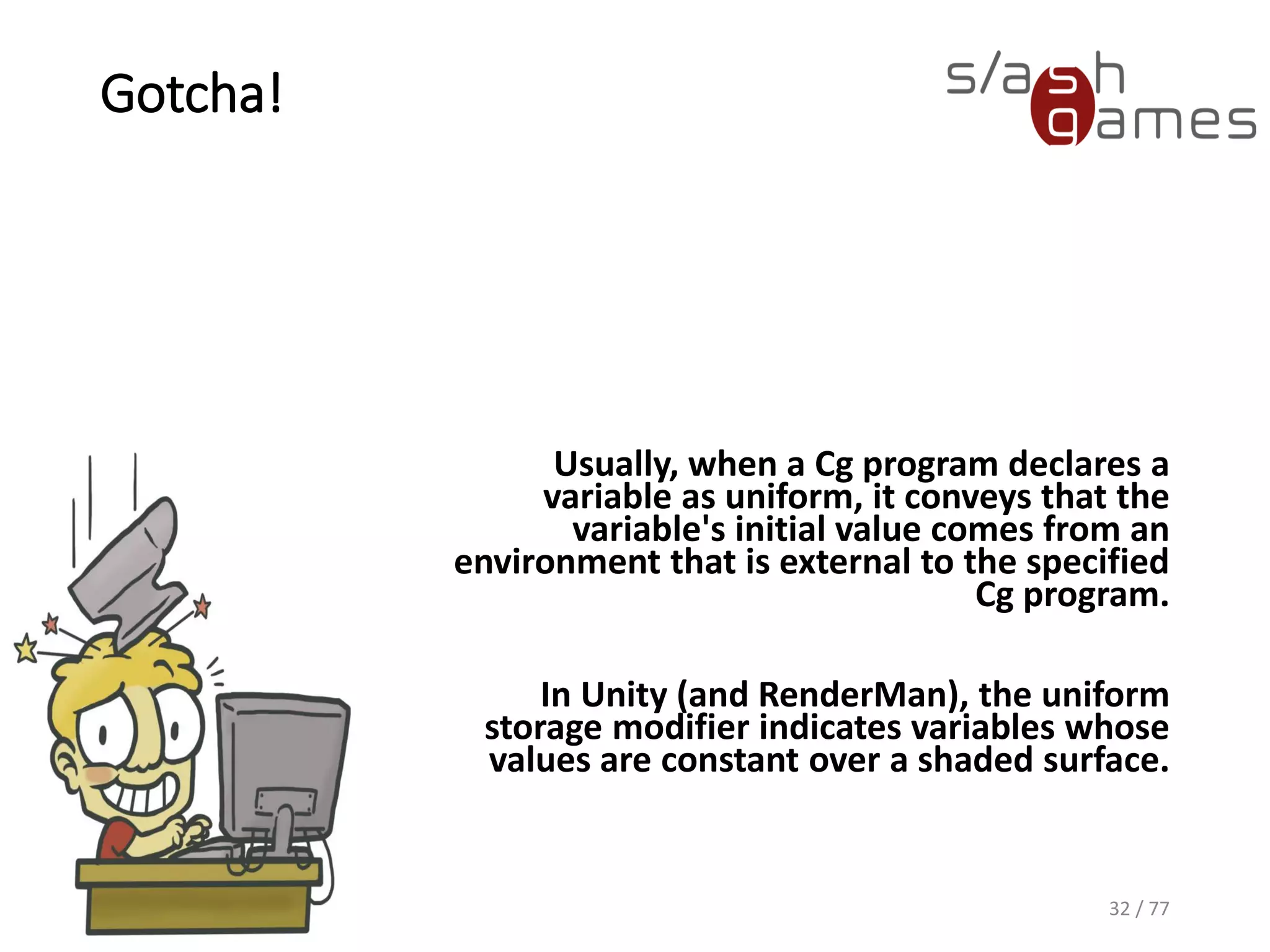

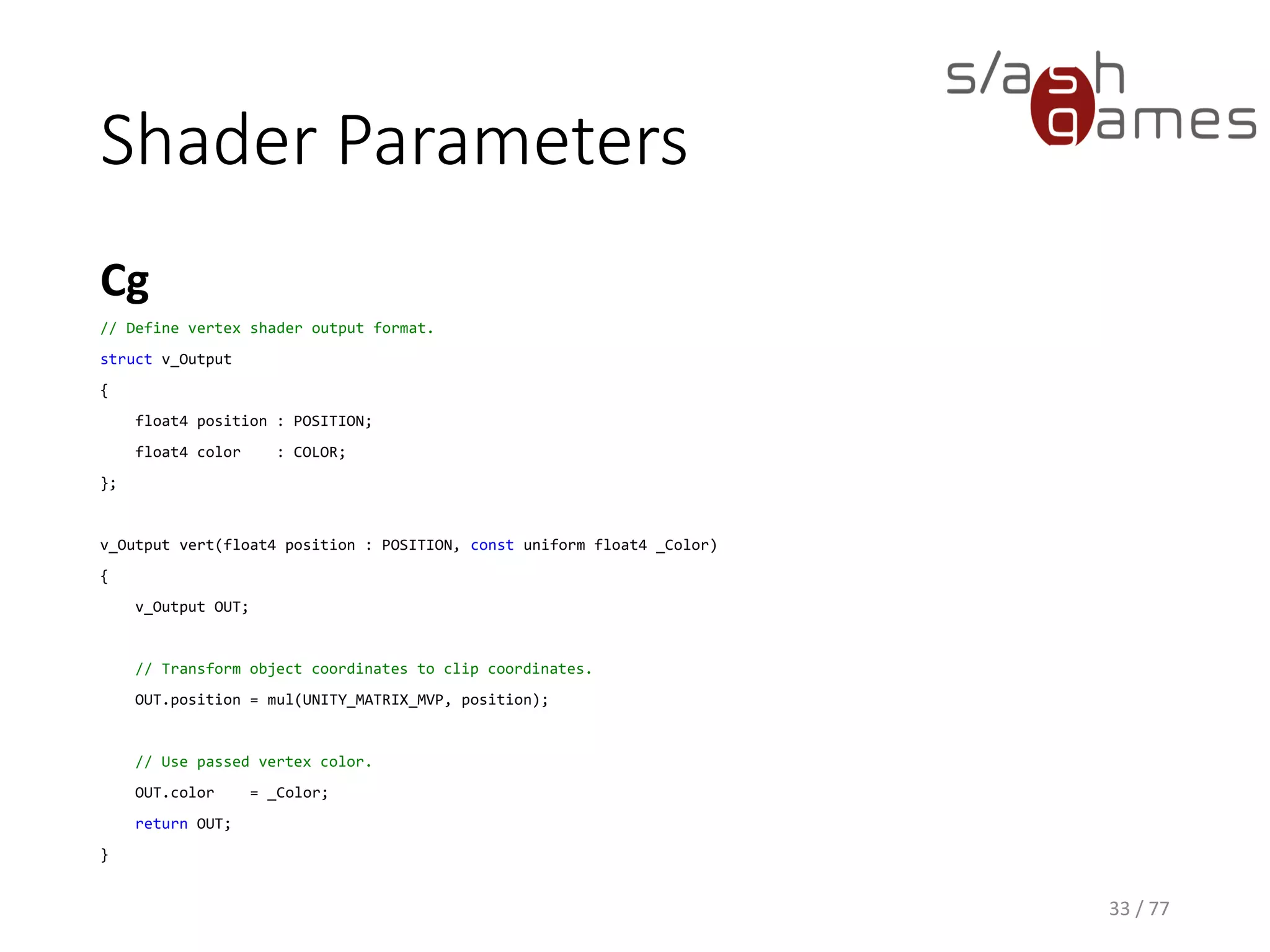

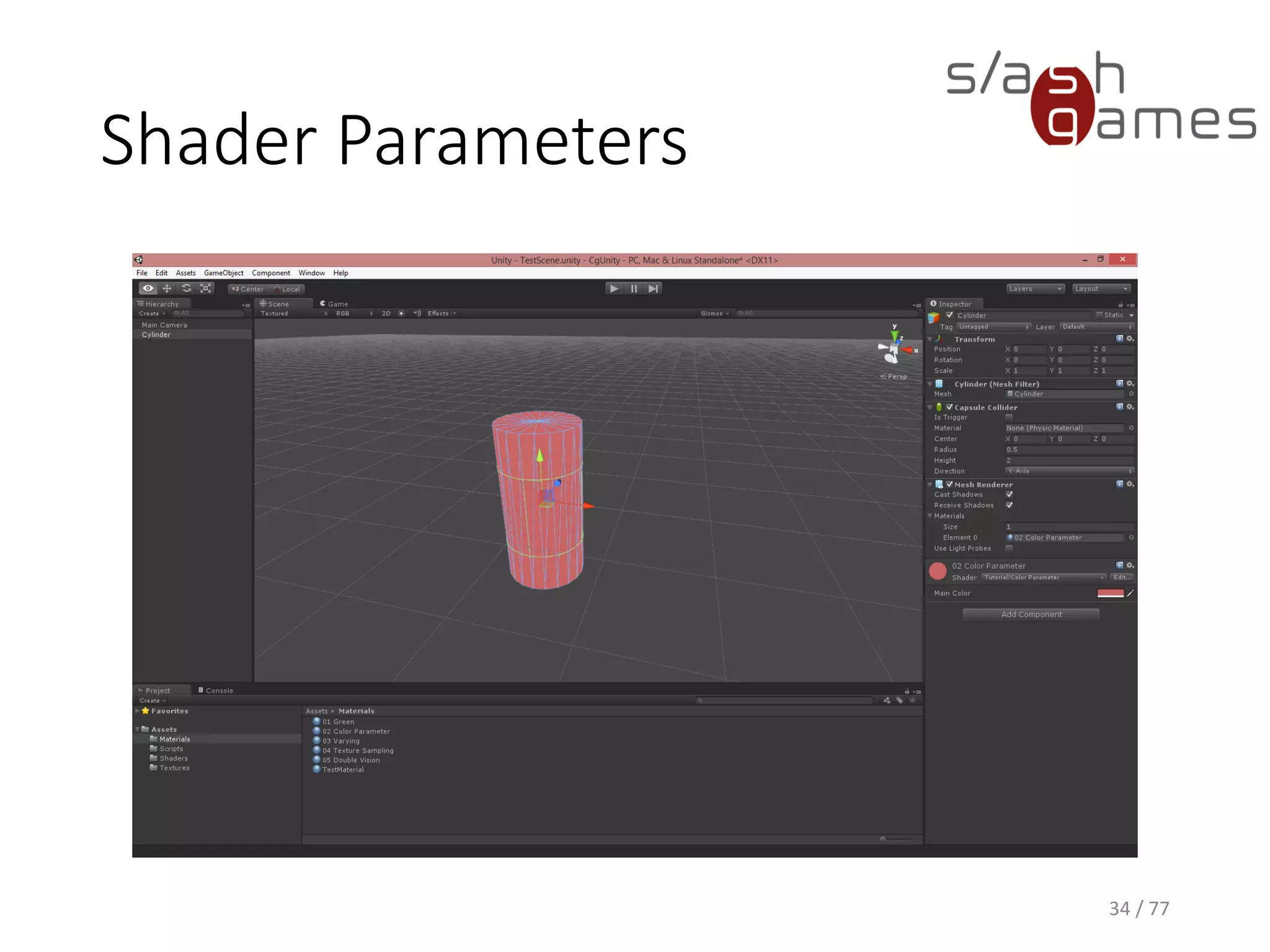

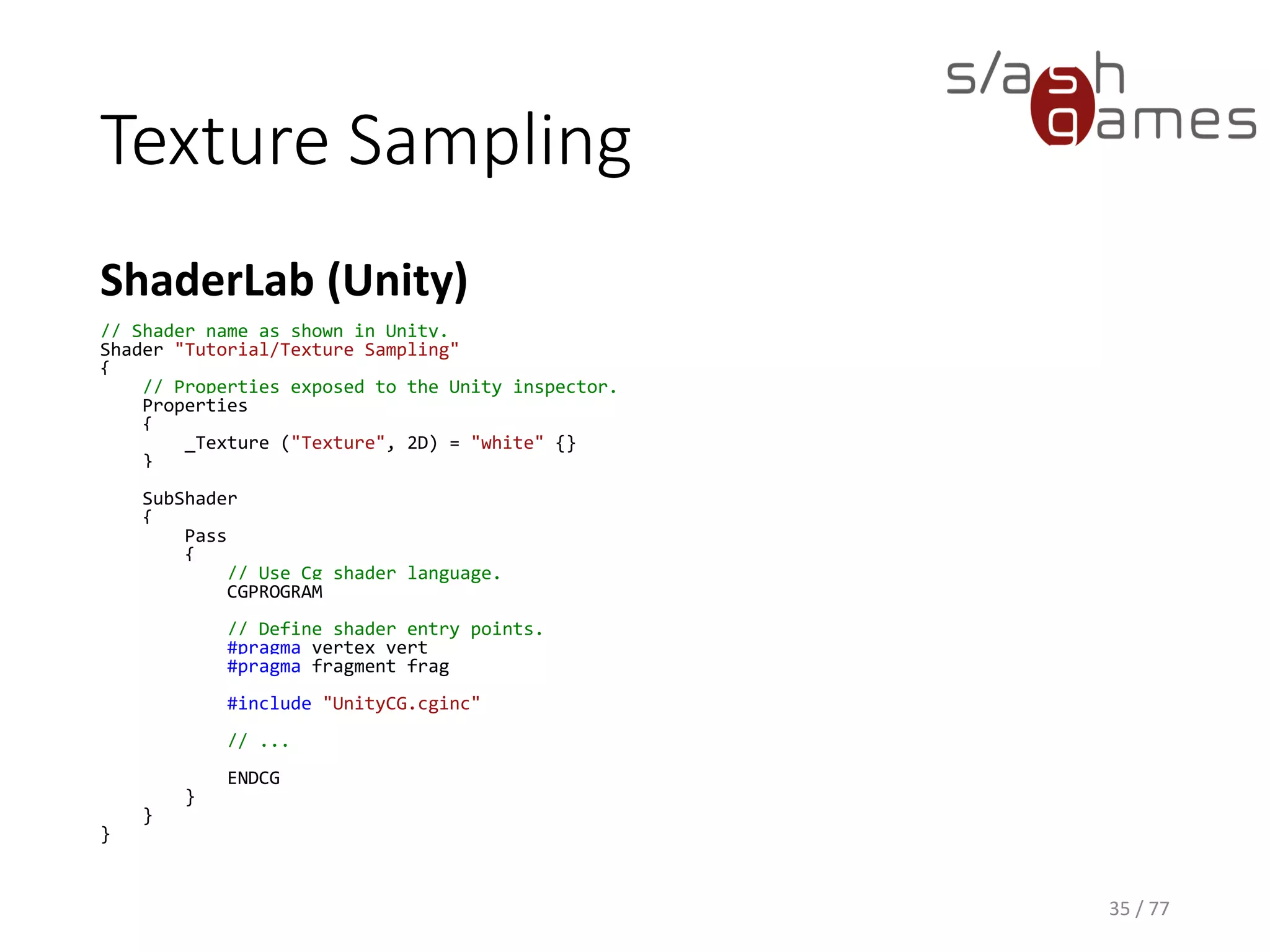

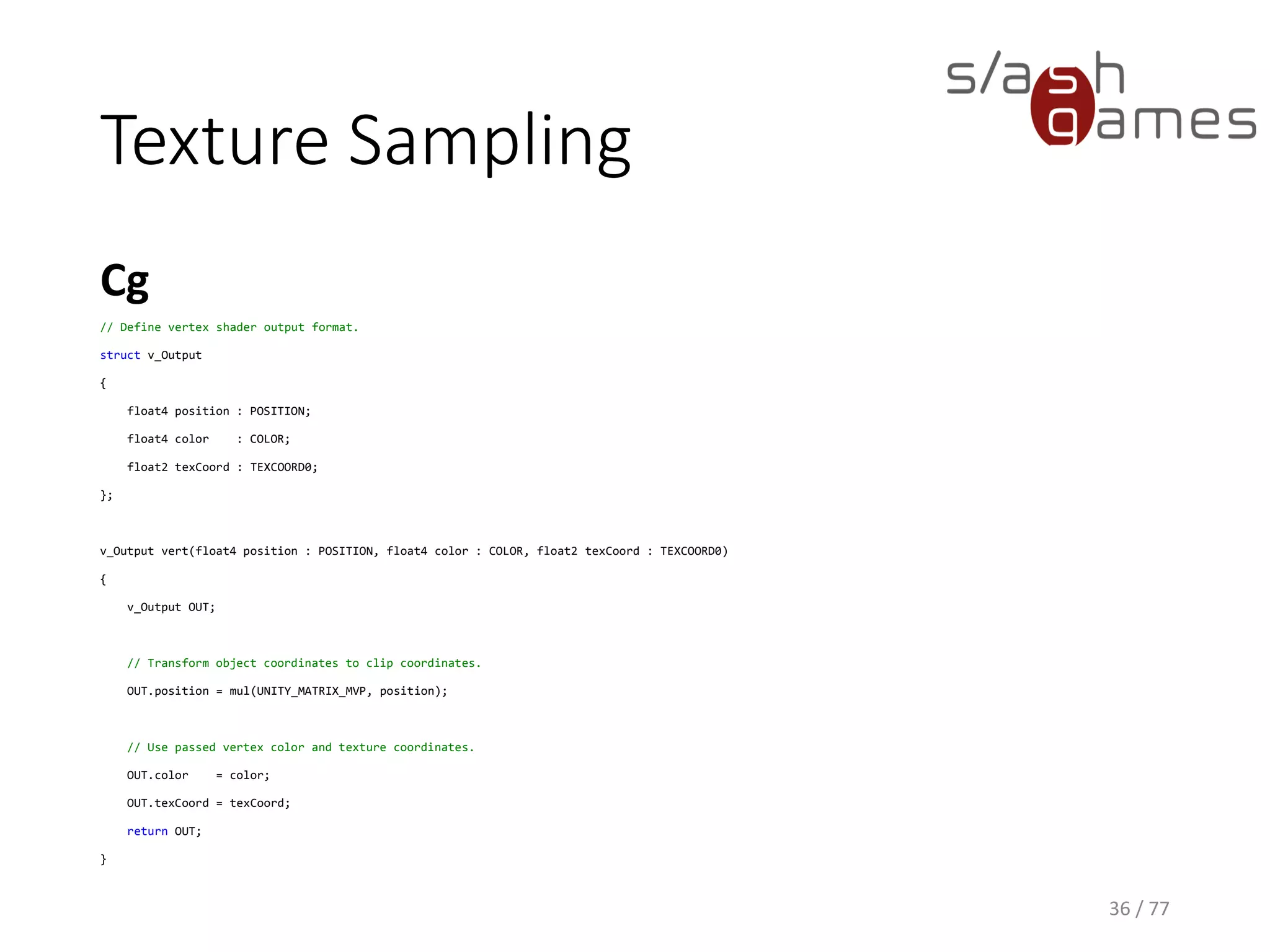

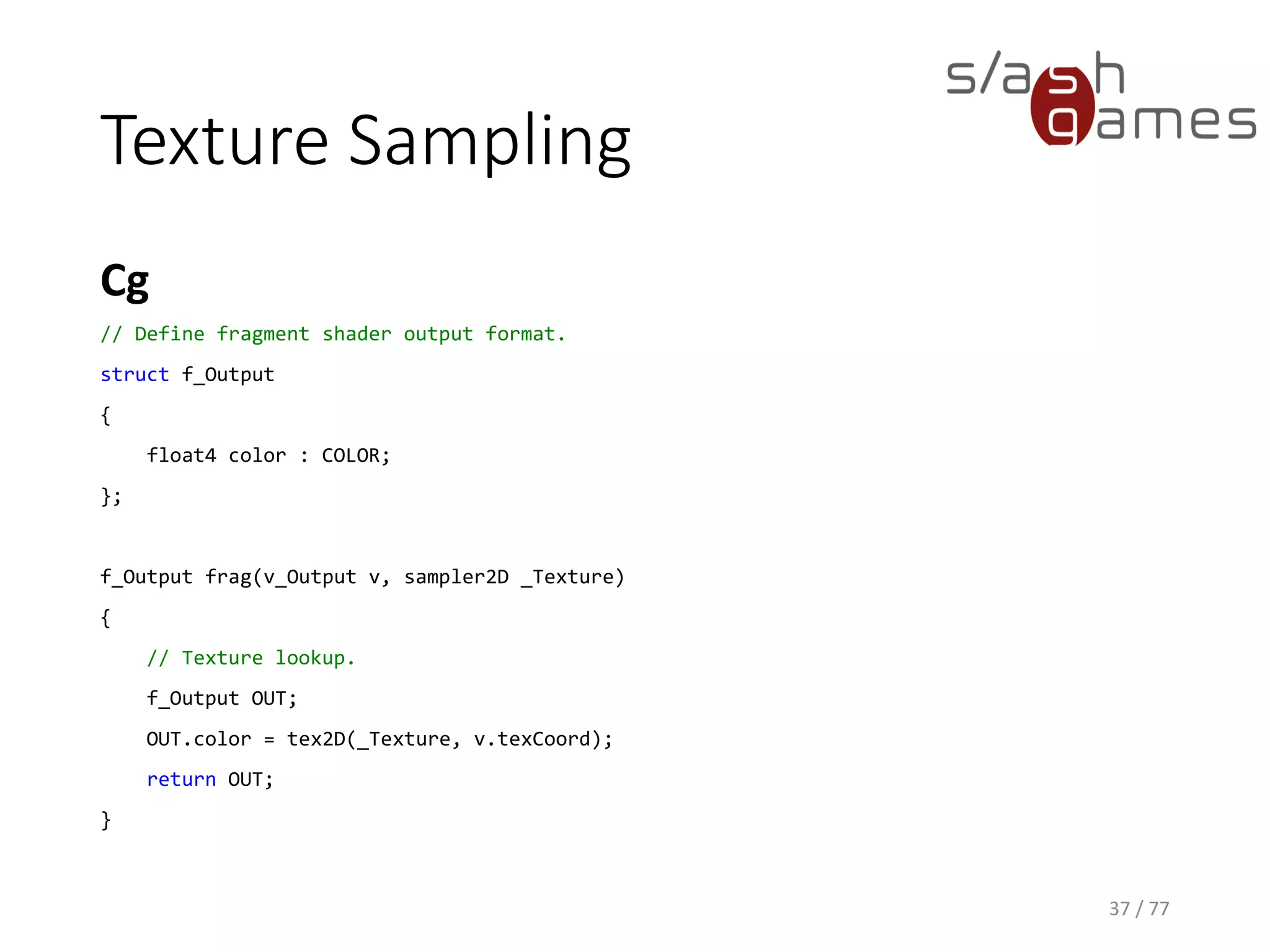

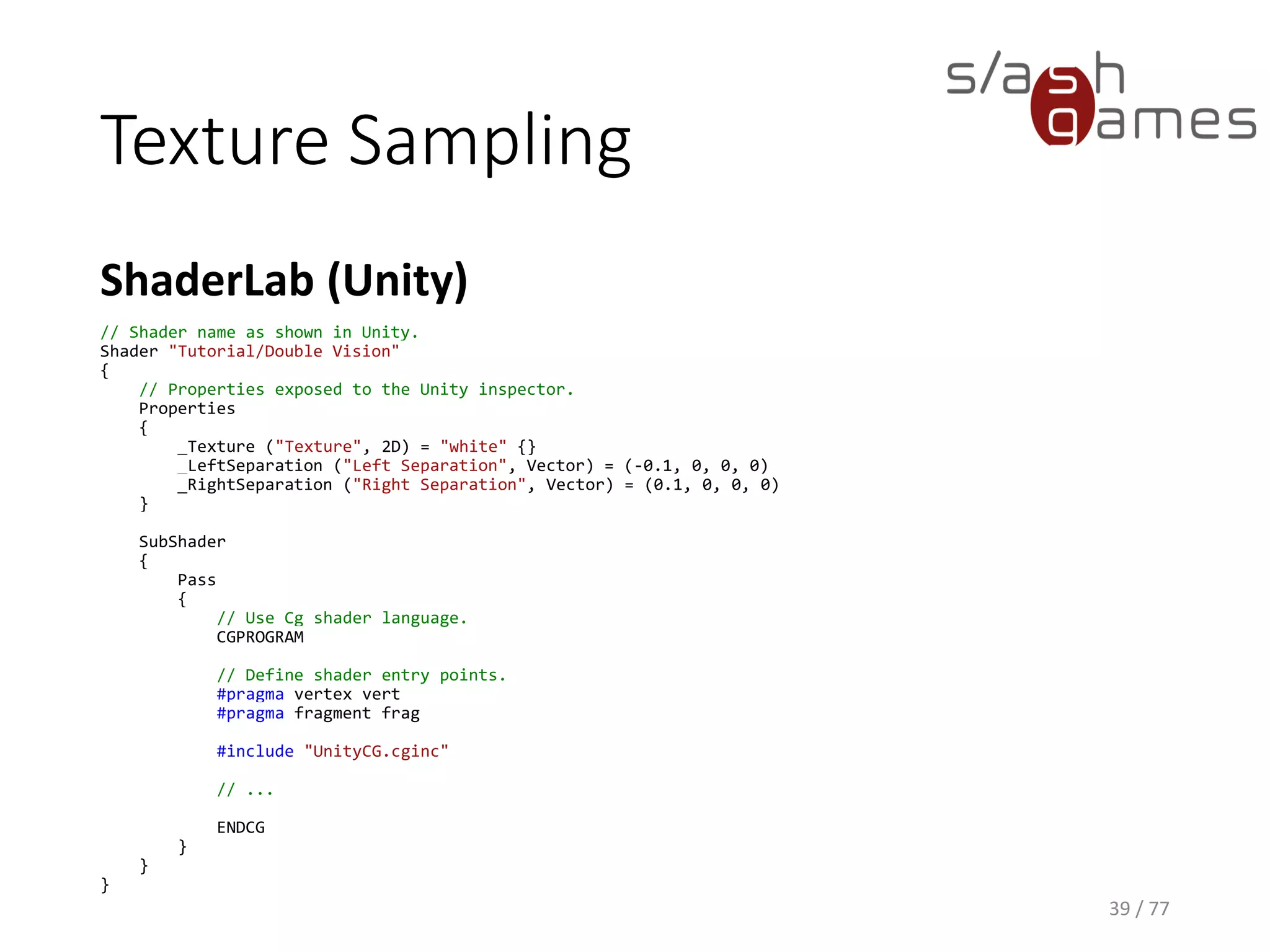

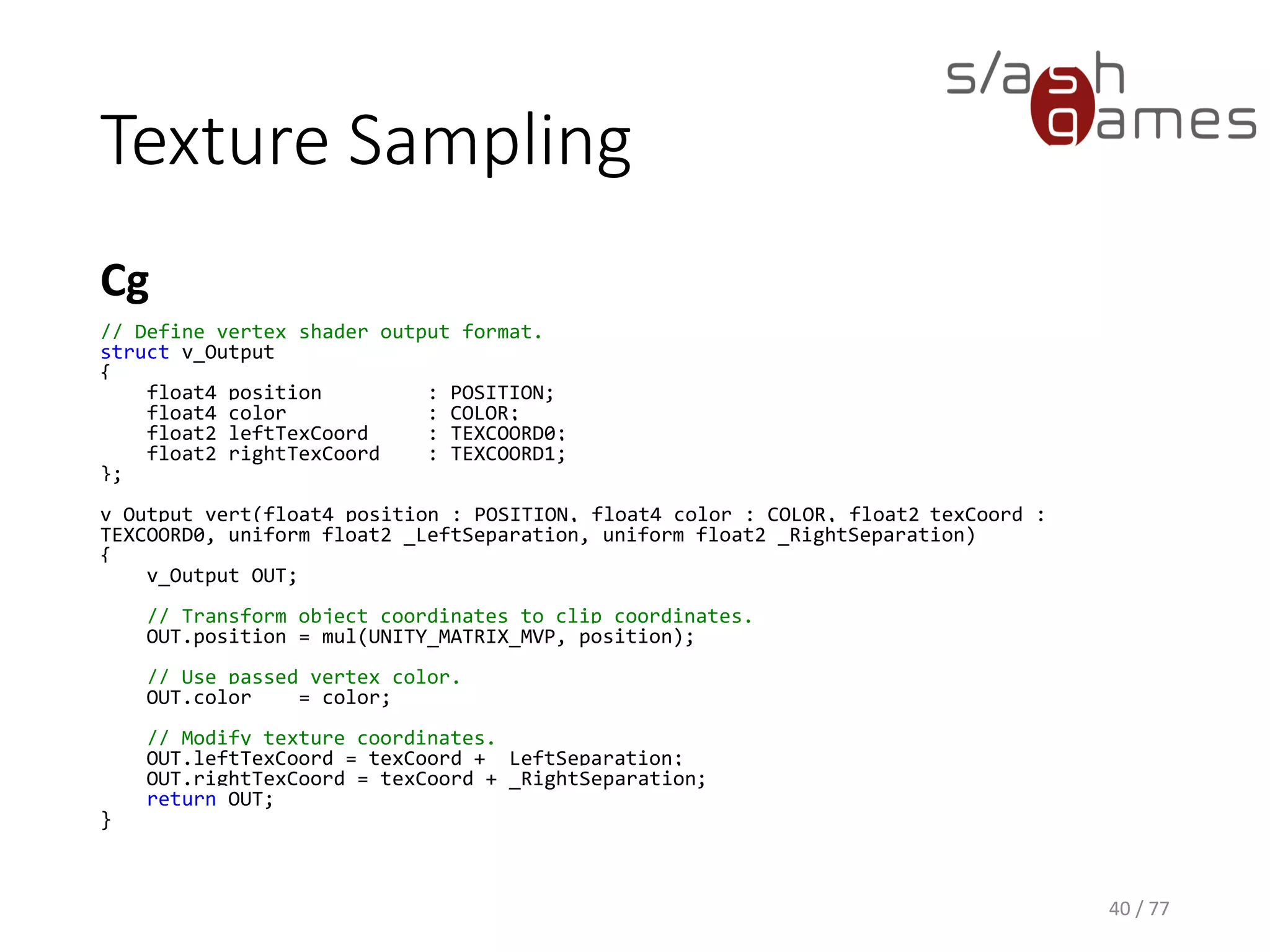

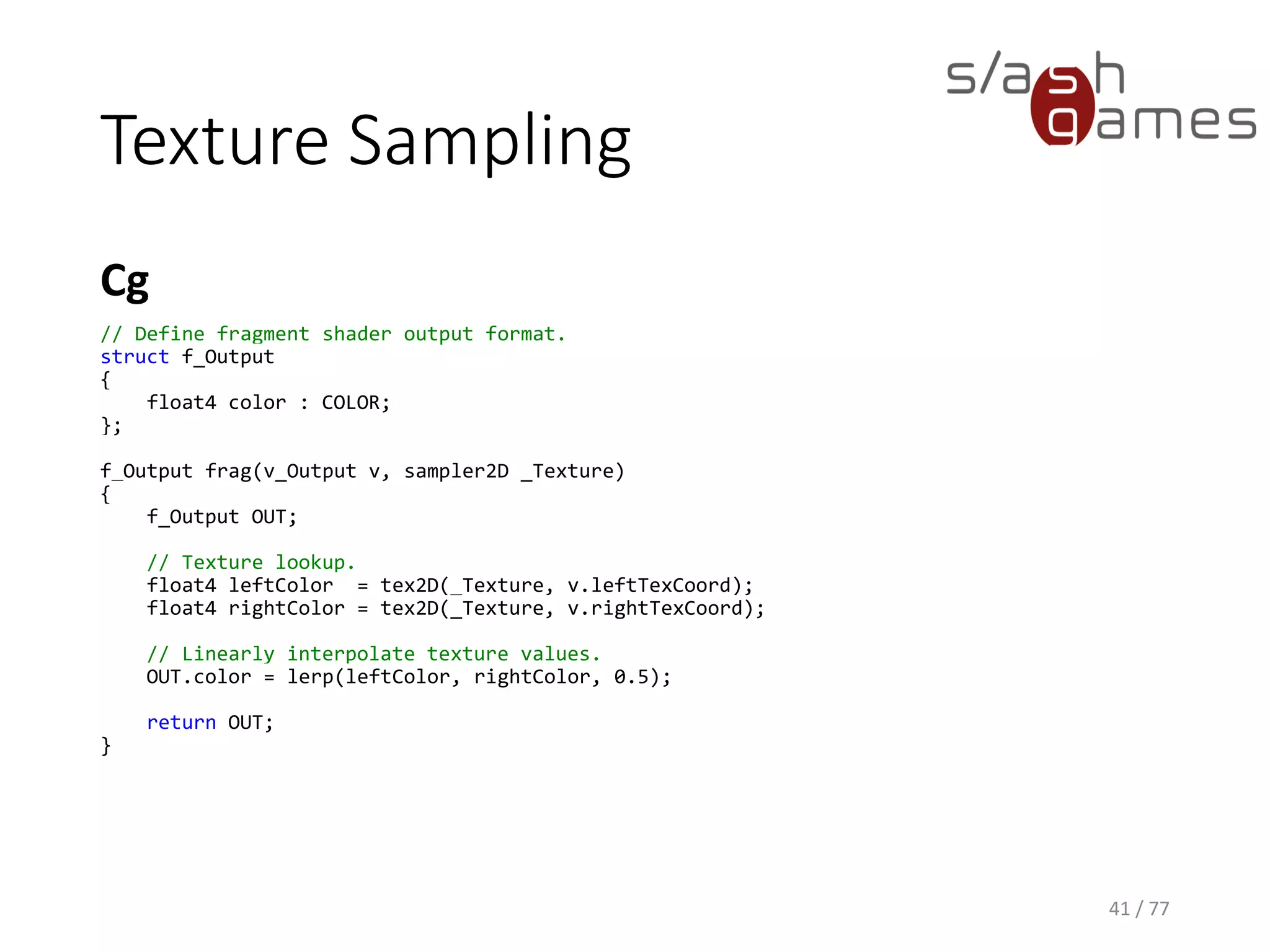

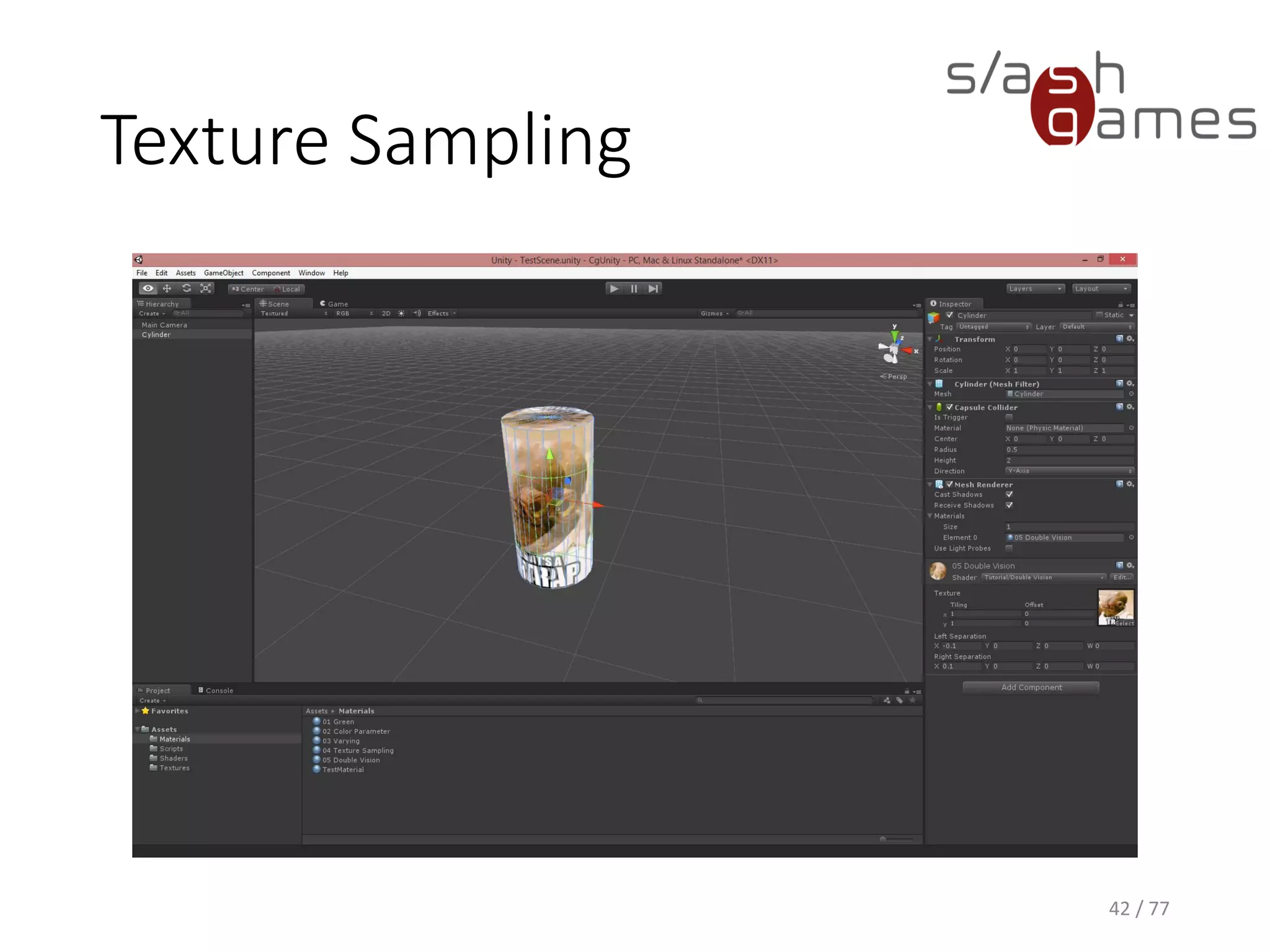

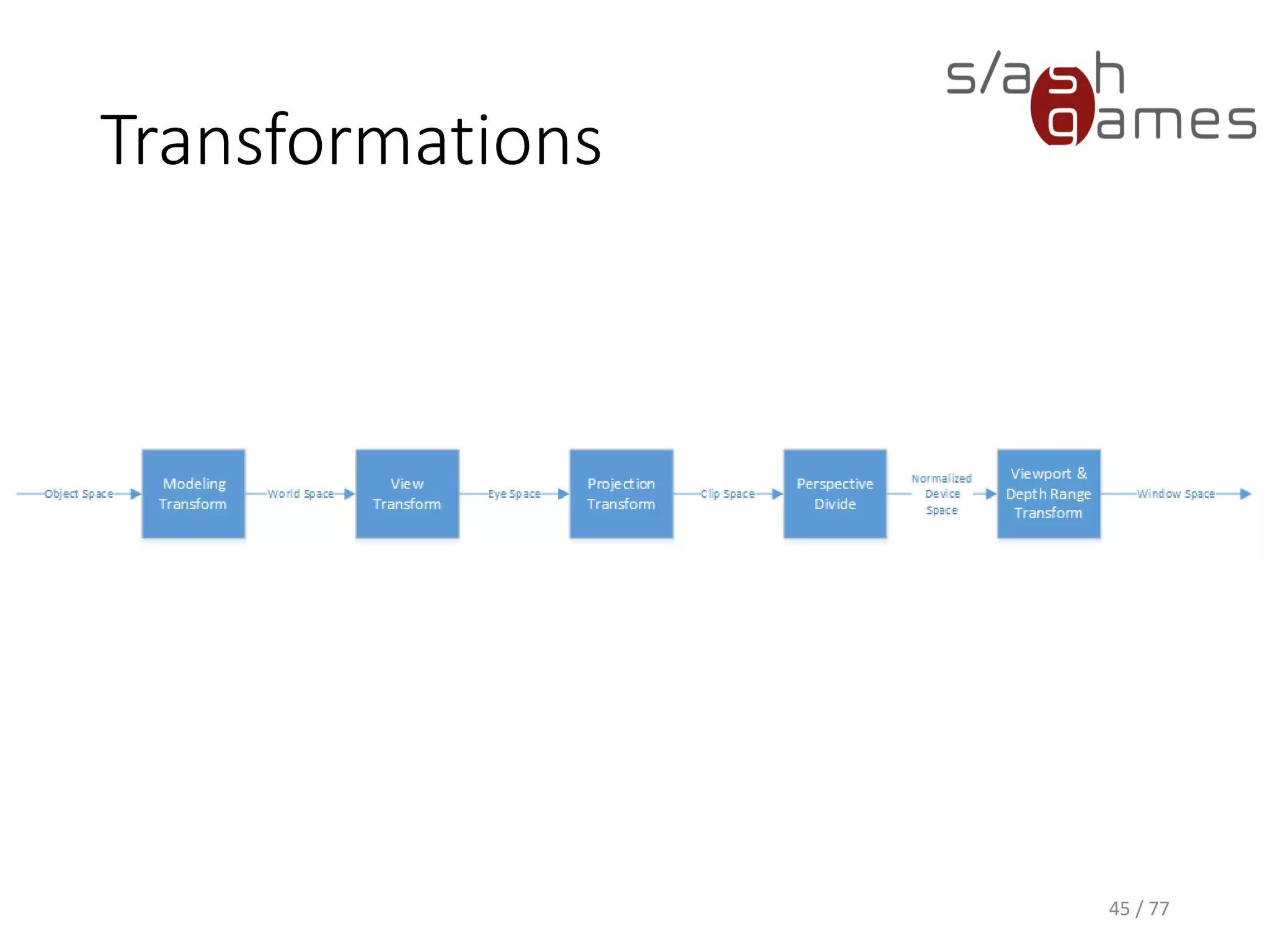

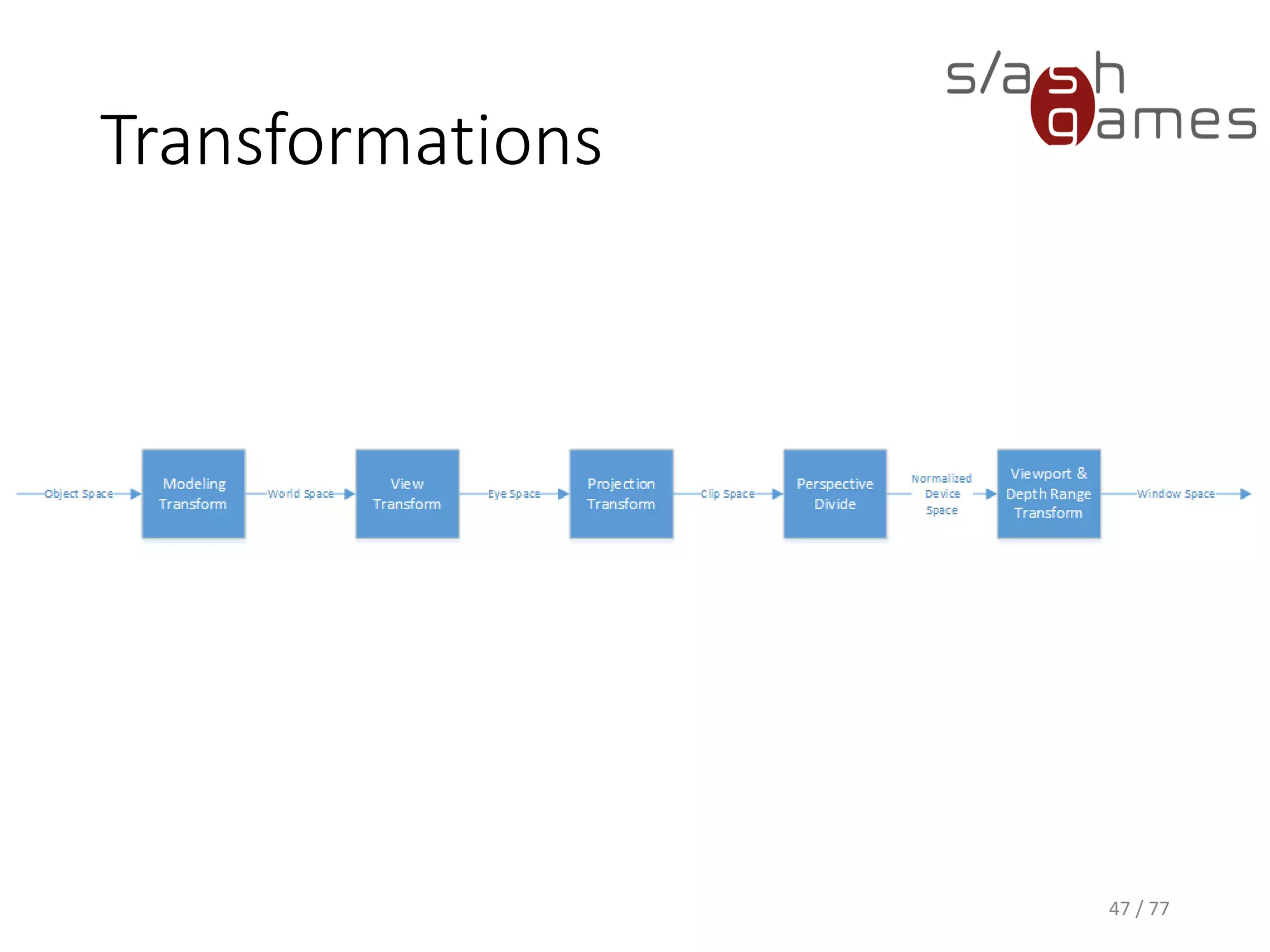

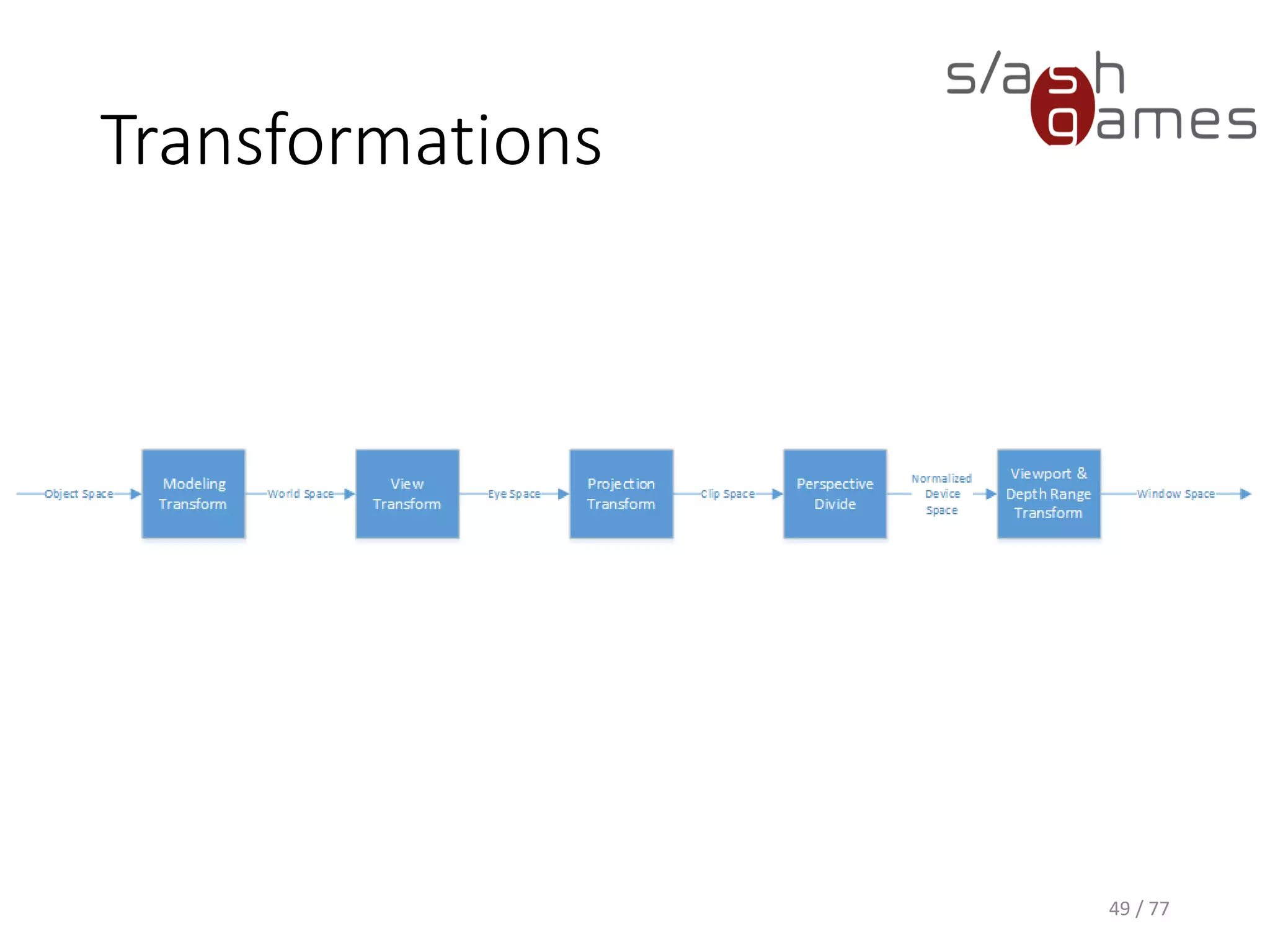

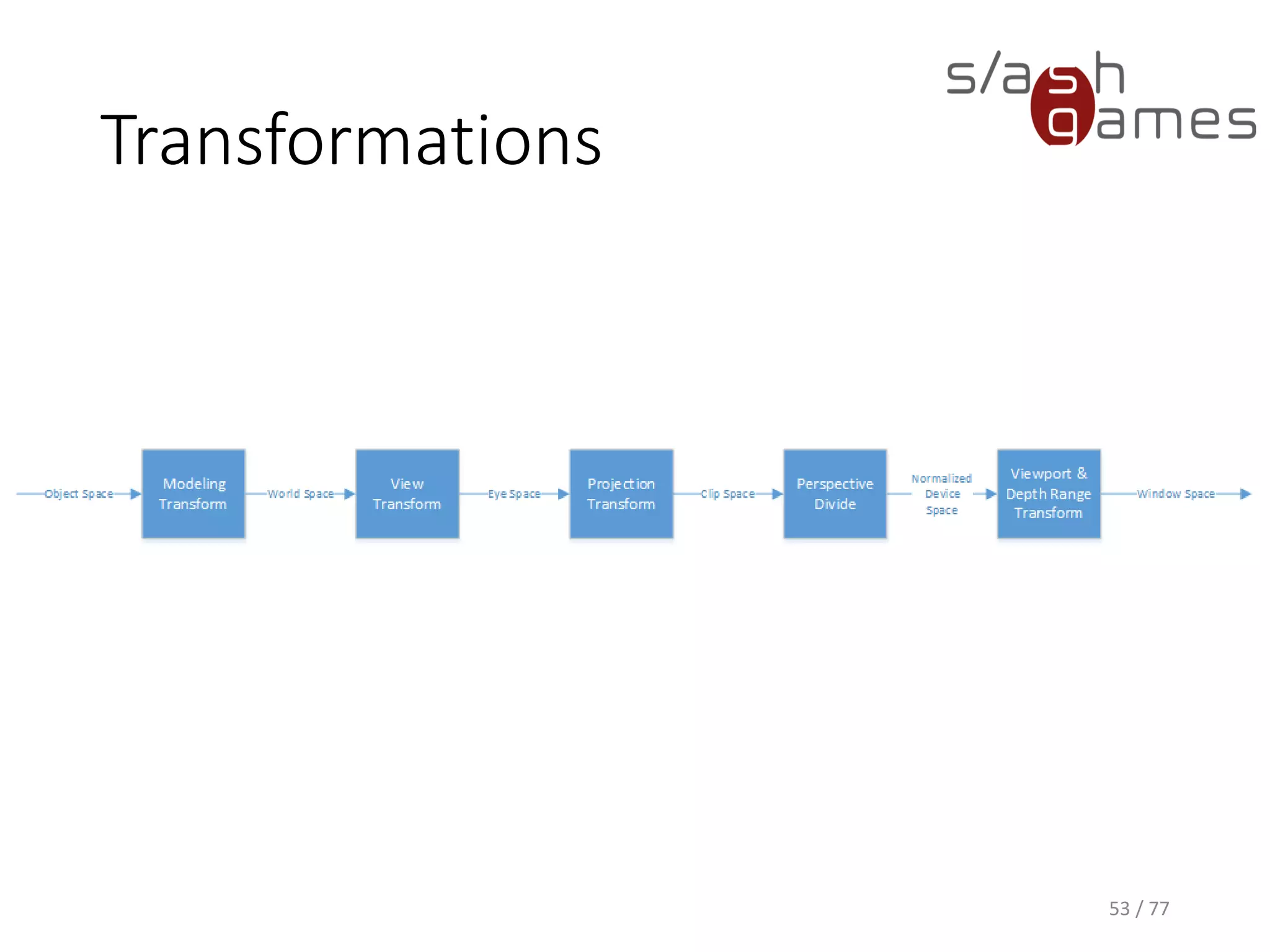

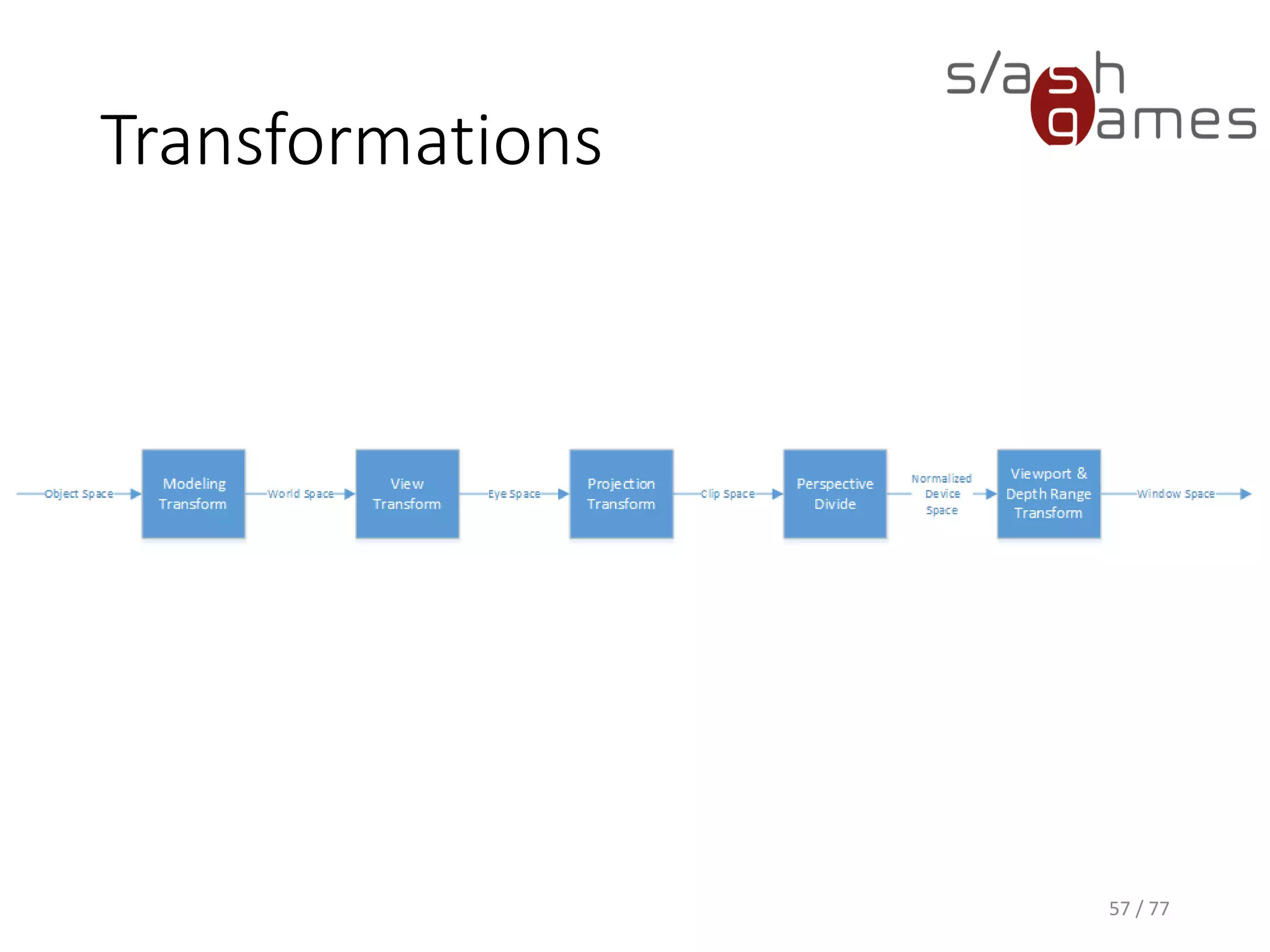

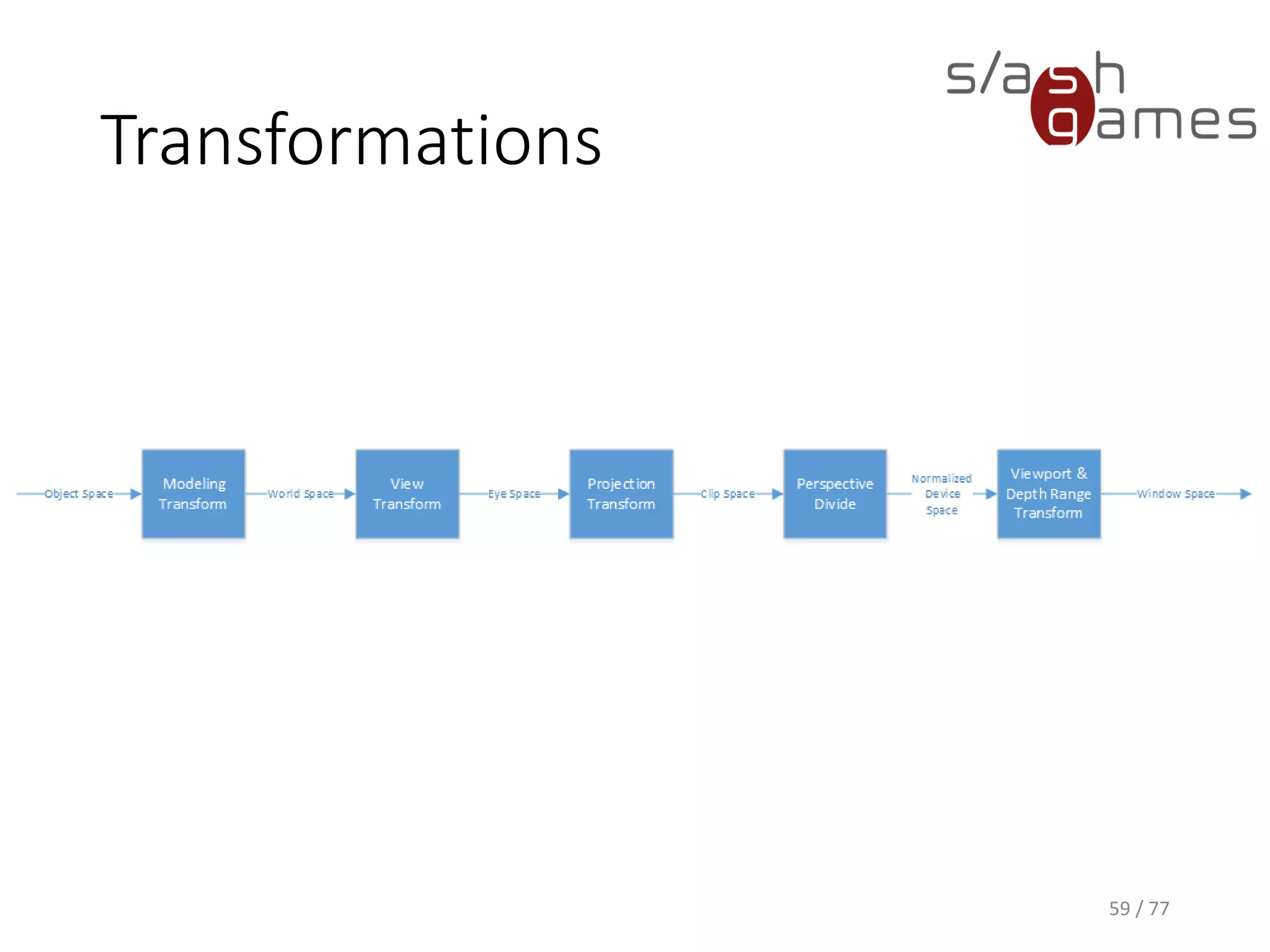

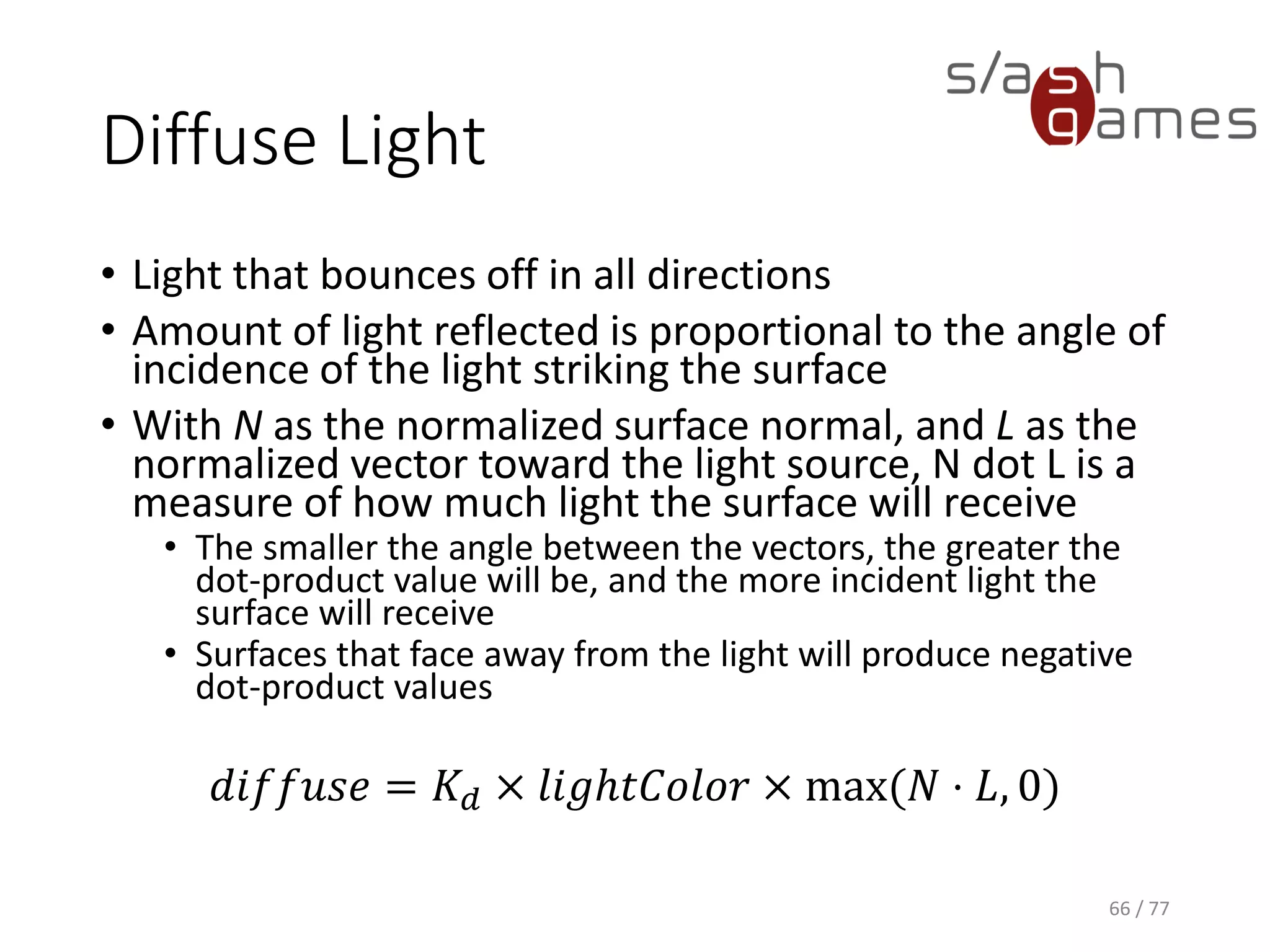

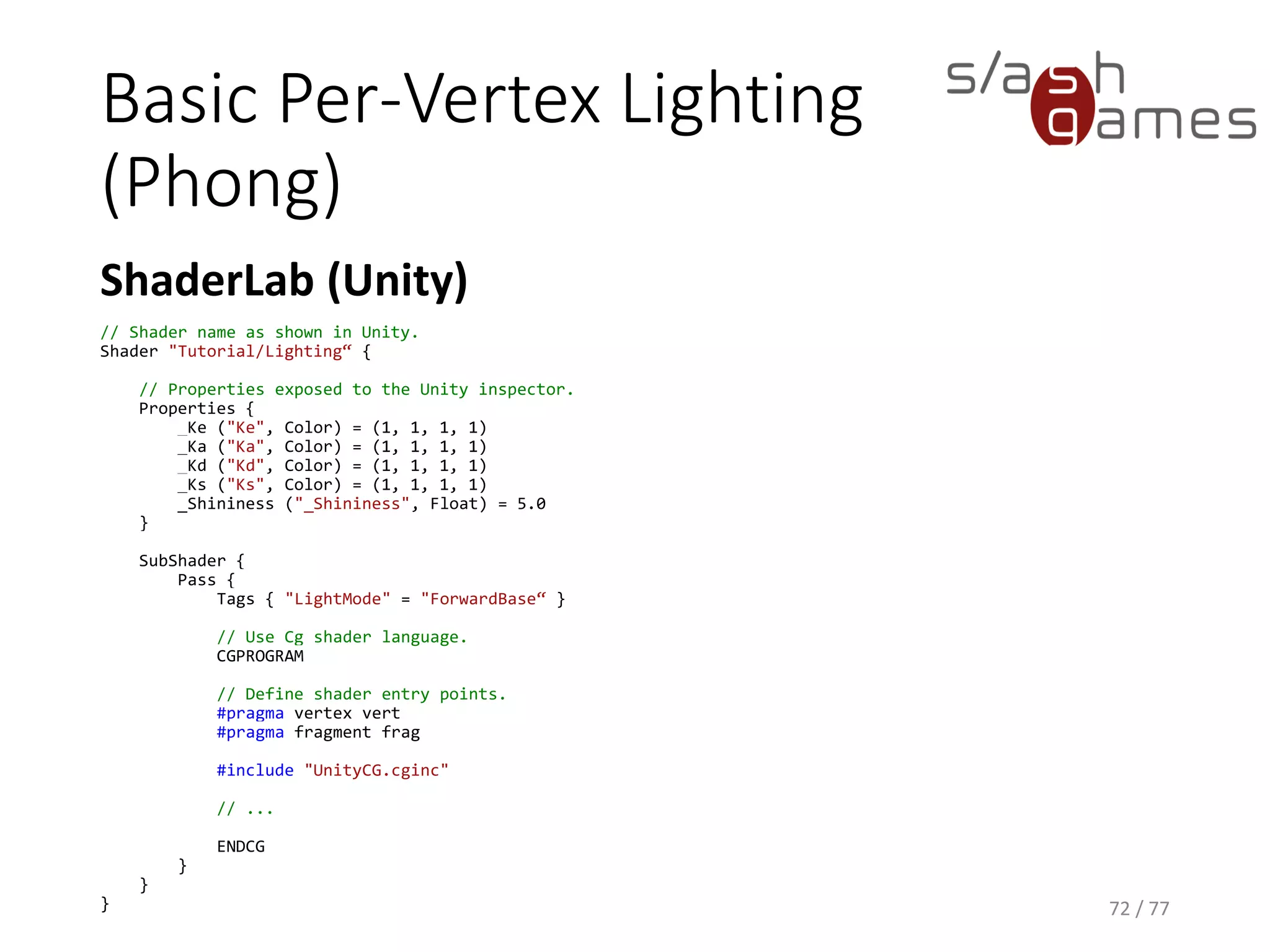

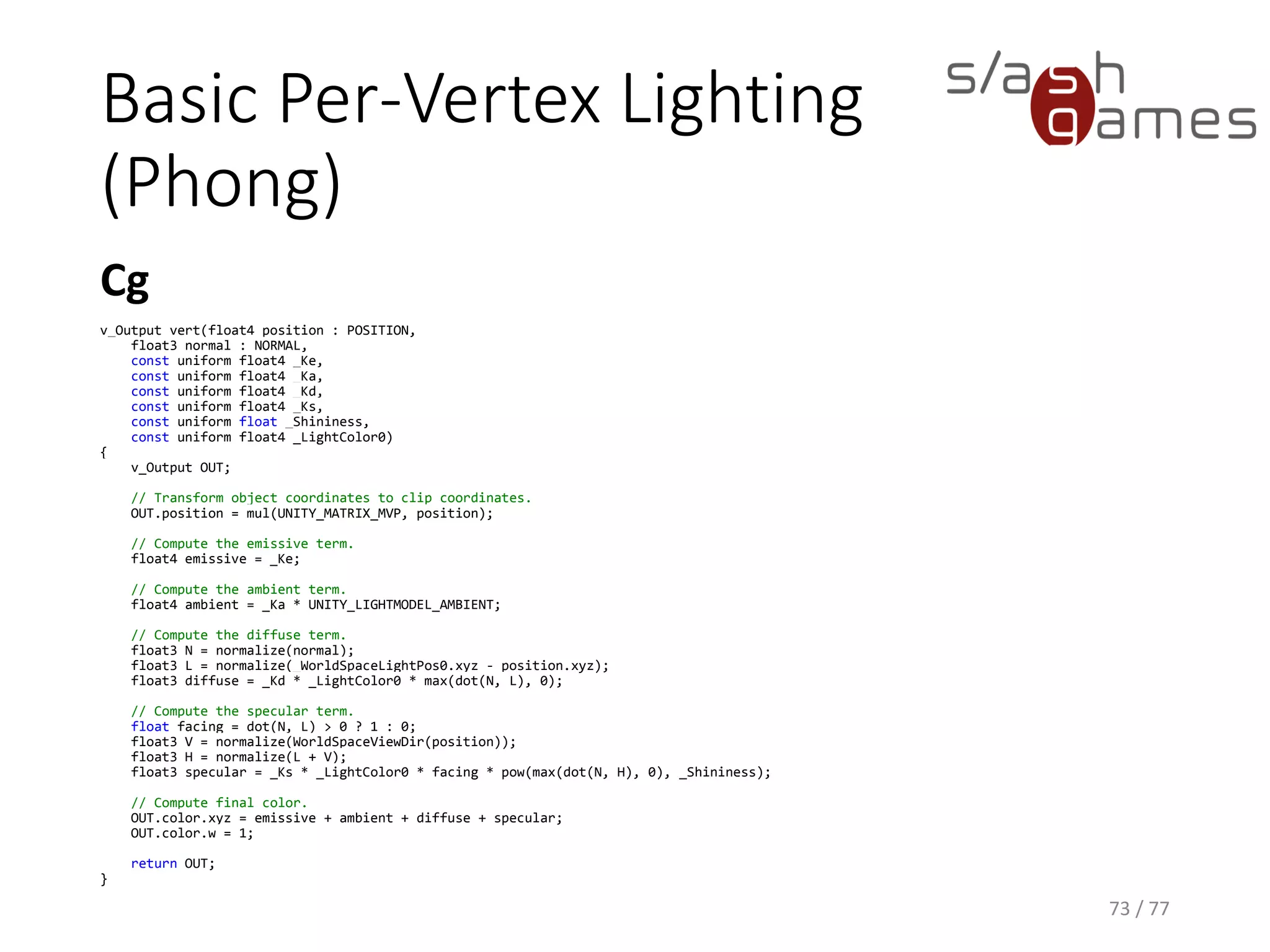

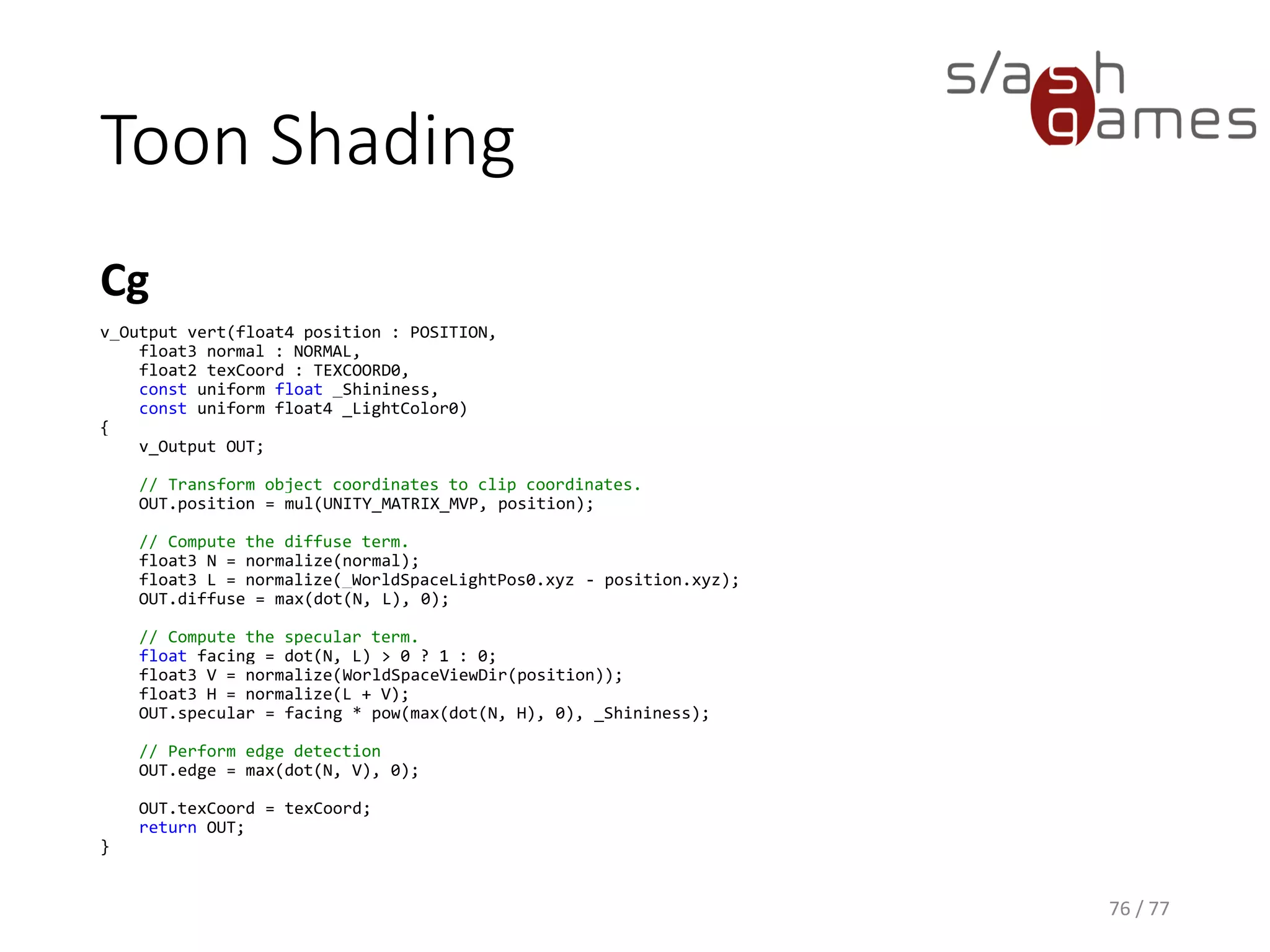

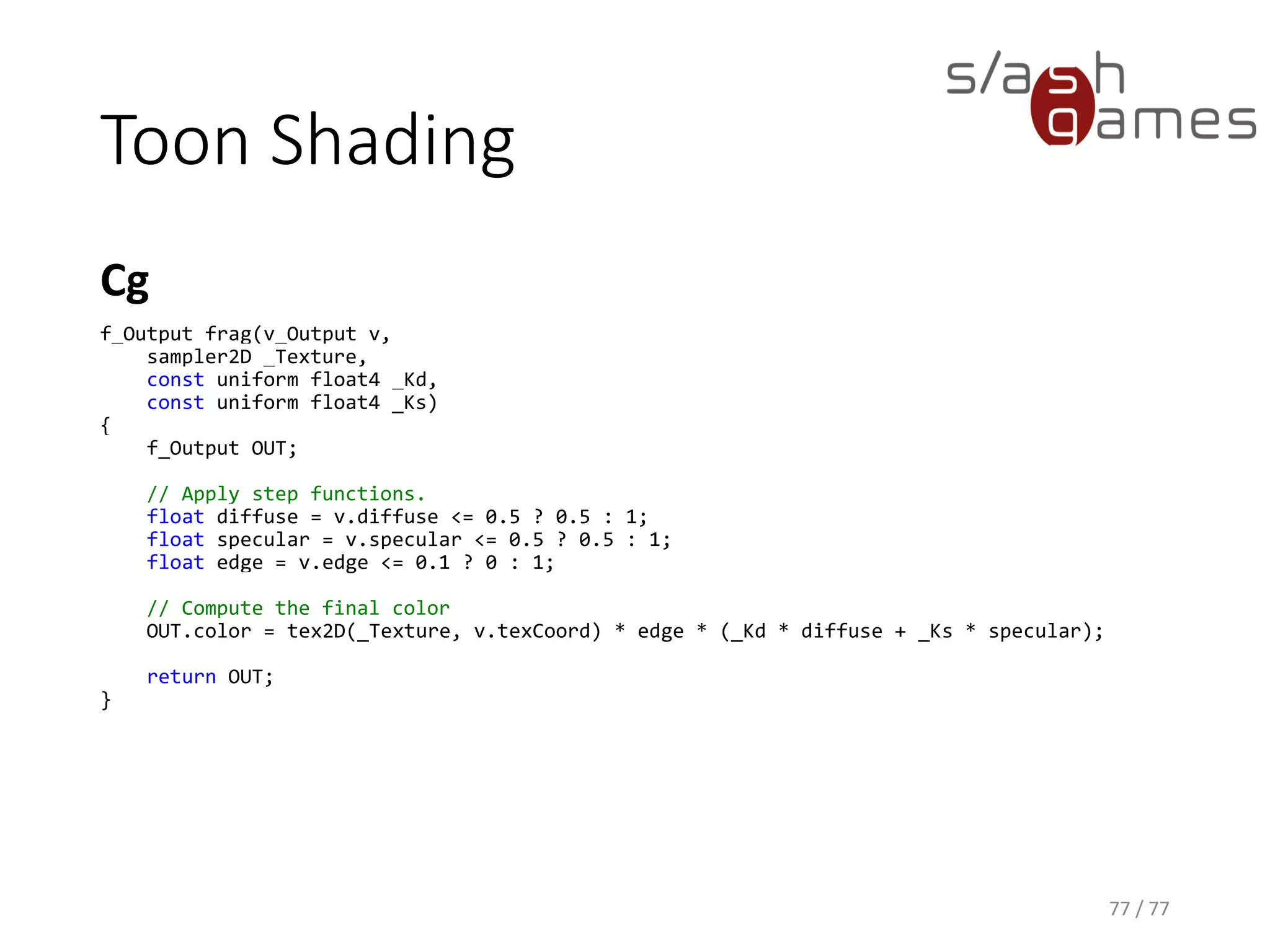

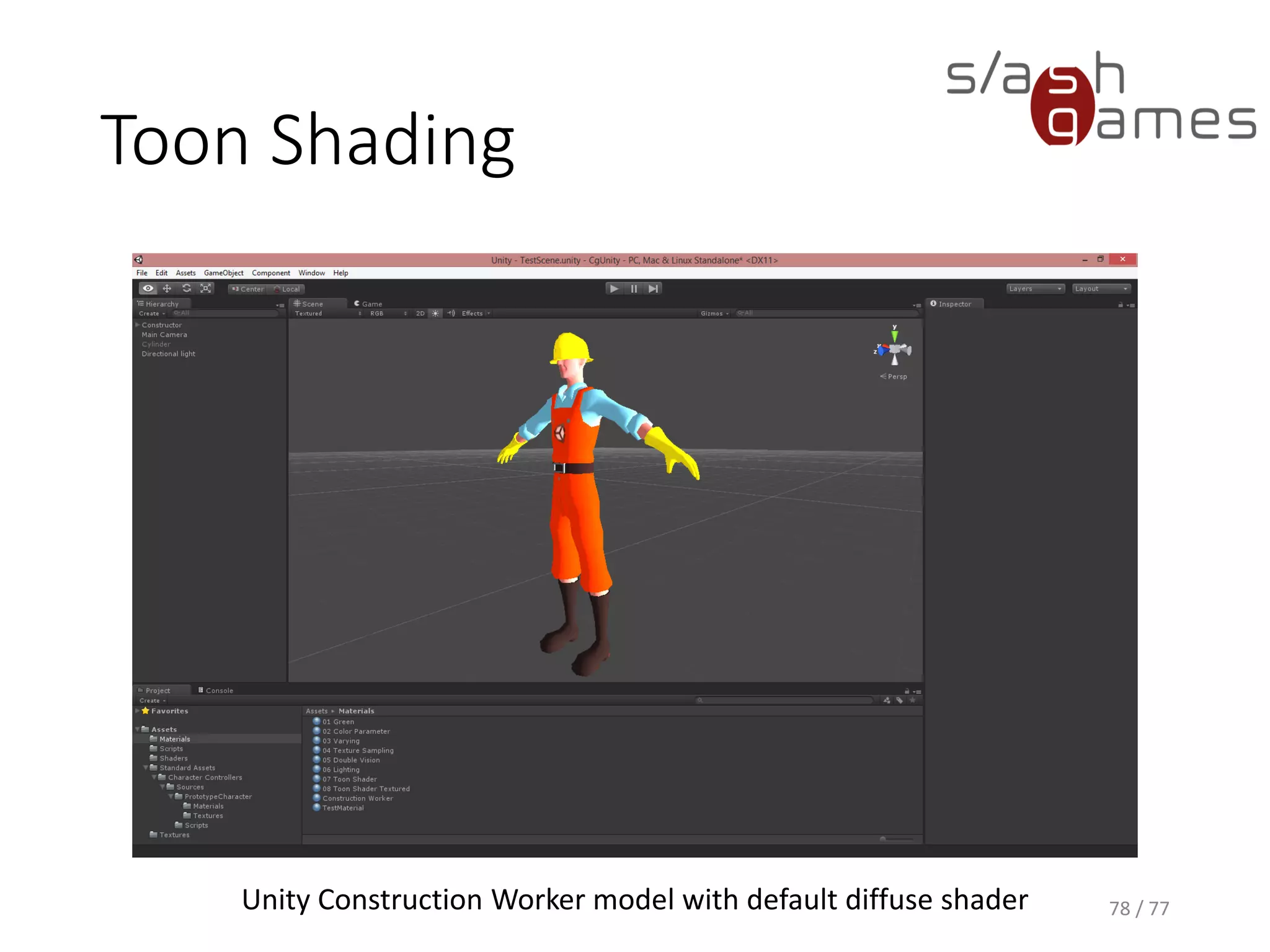

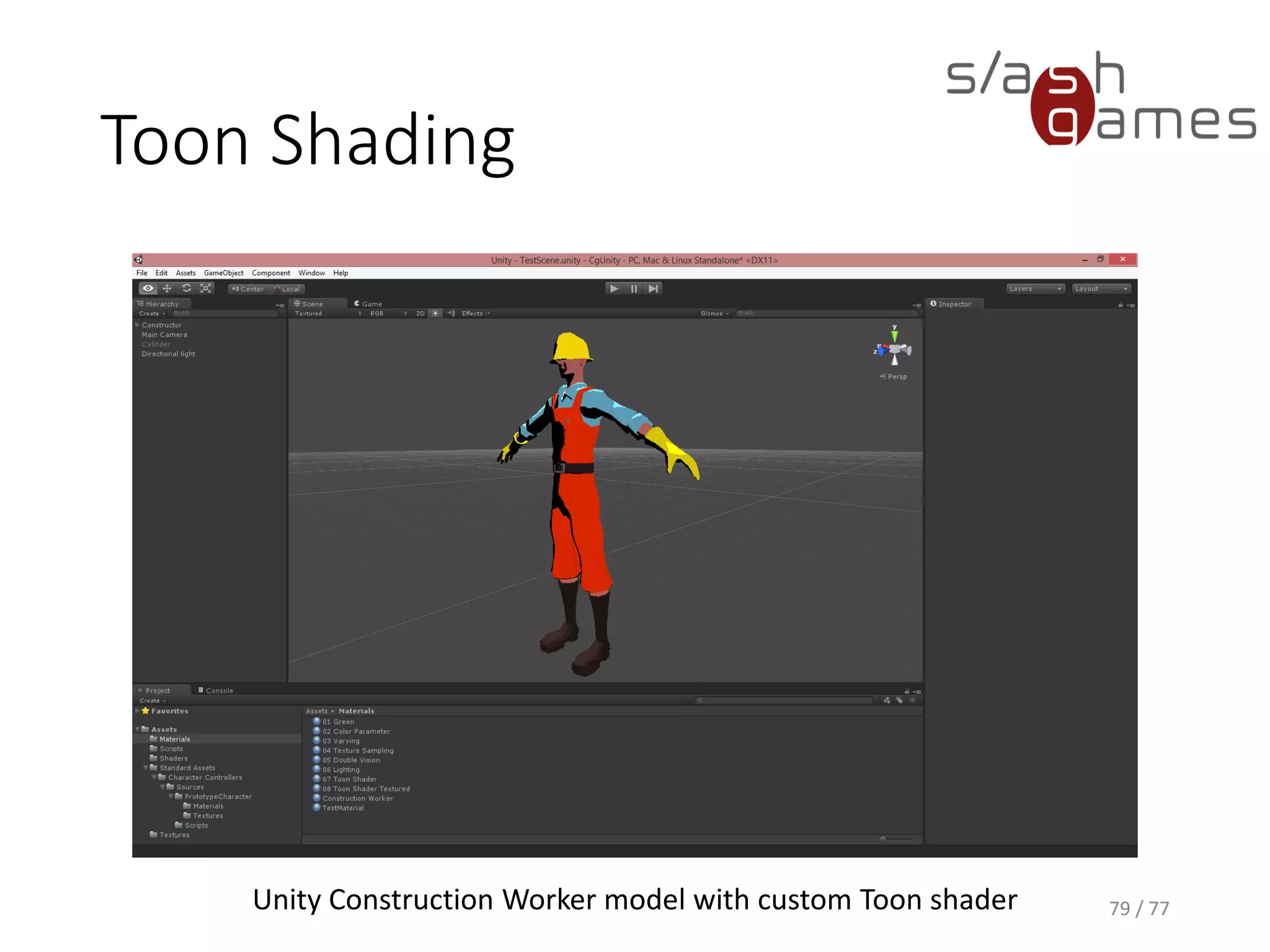

This document outlines the essentials of shader development and the graphics pipeline on modern GPUs. It covers CPU versus GPU performance, shader languages, and the main pipeline stages: vertex transformation, rasterization, and fragment processing. It also discusses lighting models and provides practical shader programming examples using Cg in Unity.