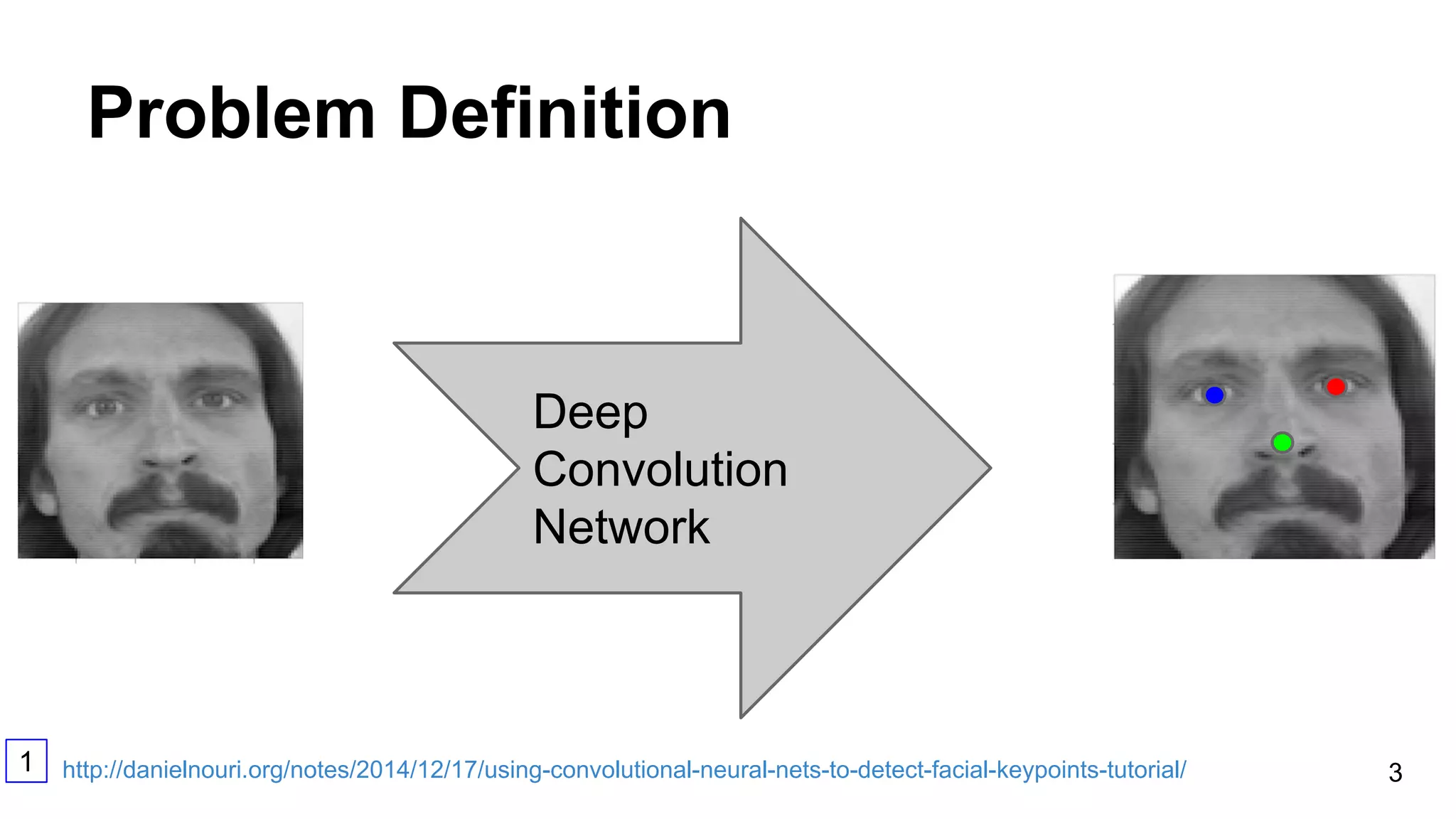

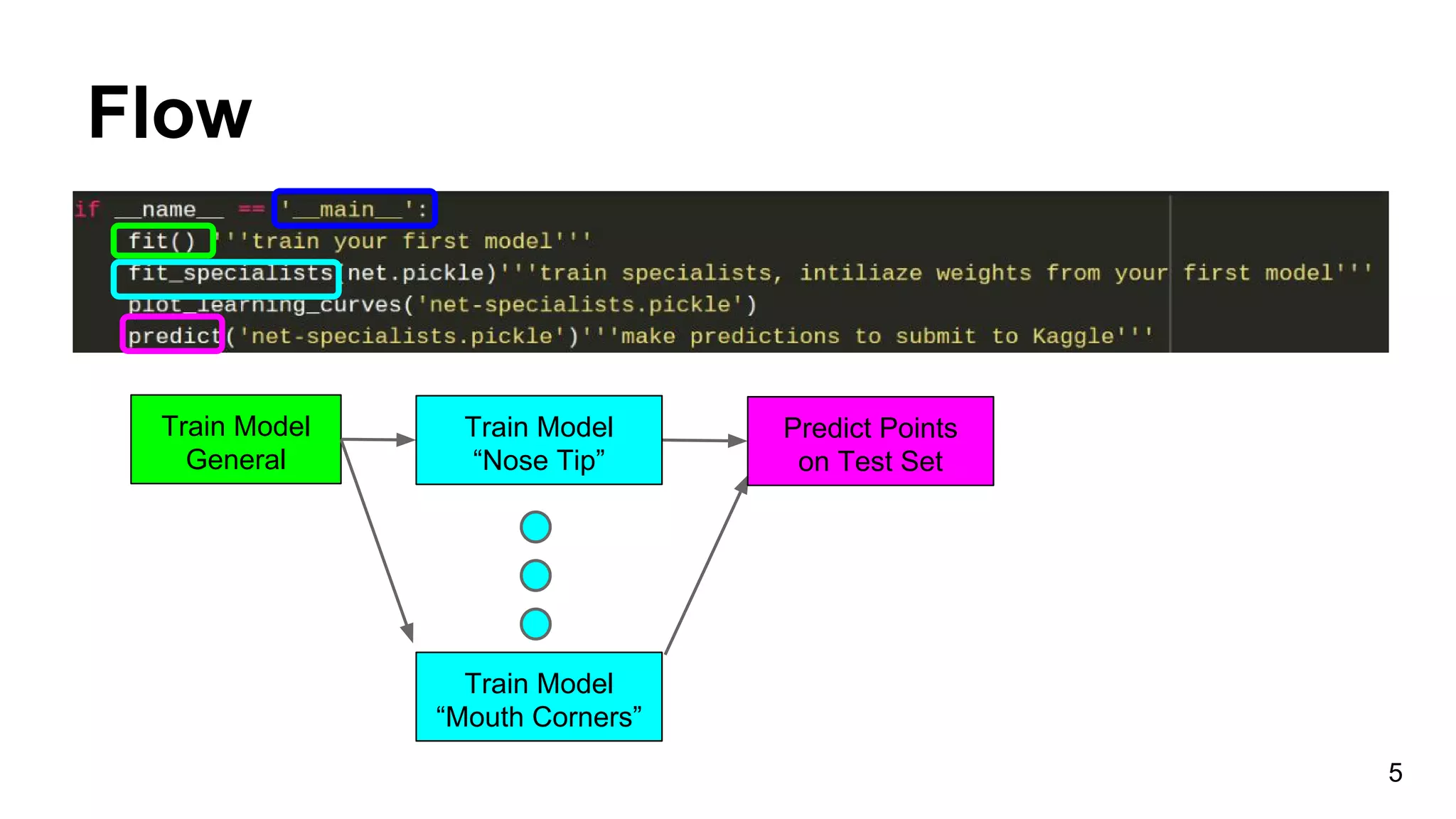

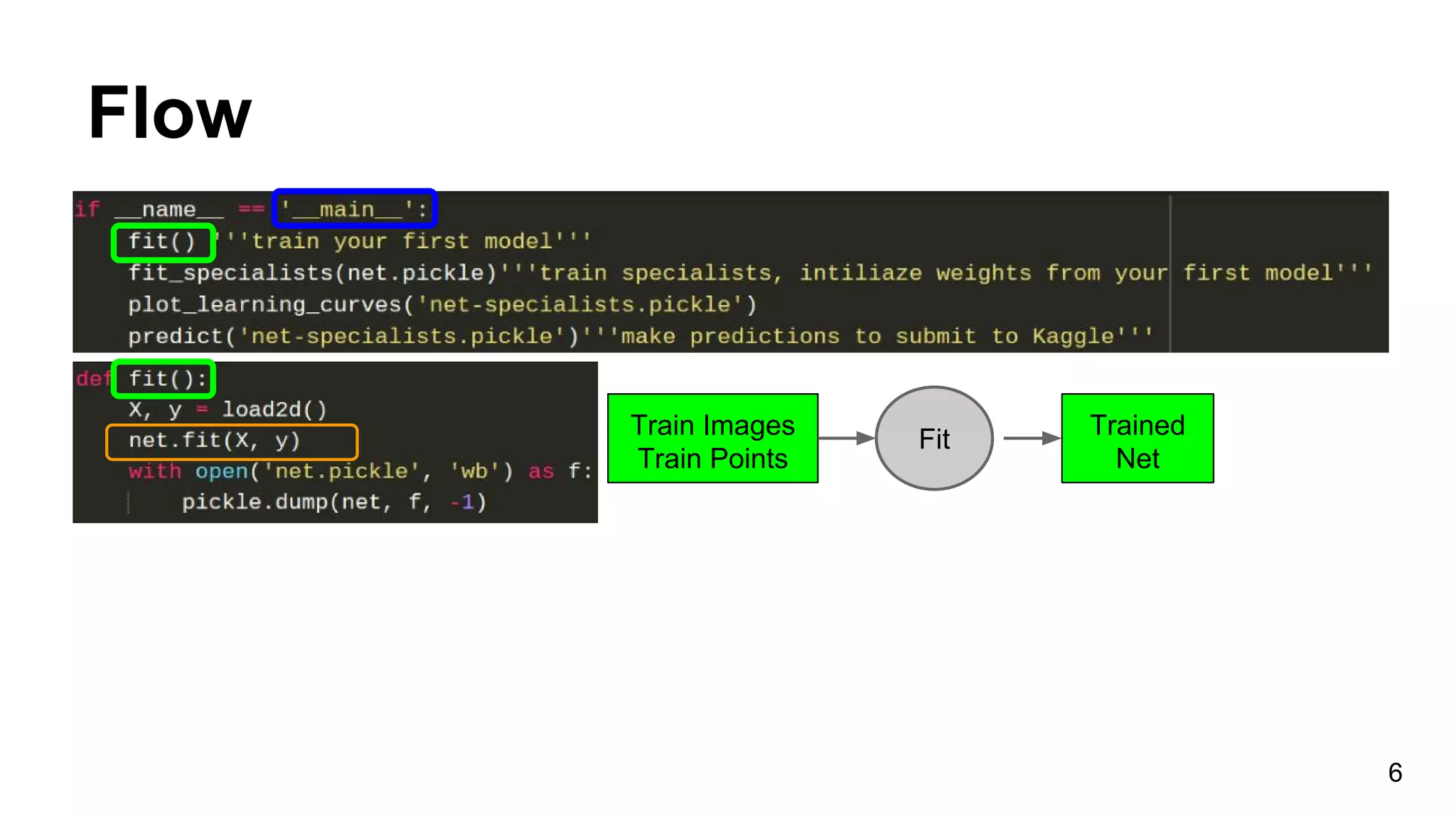

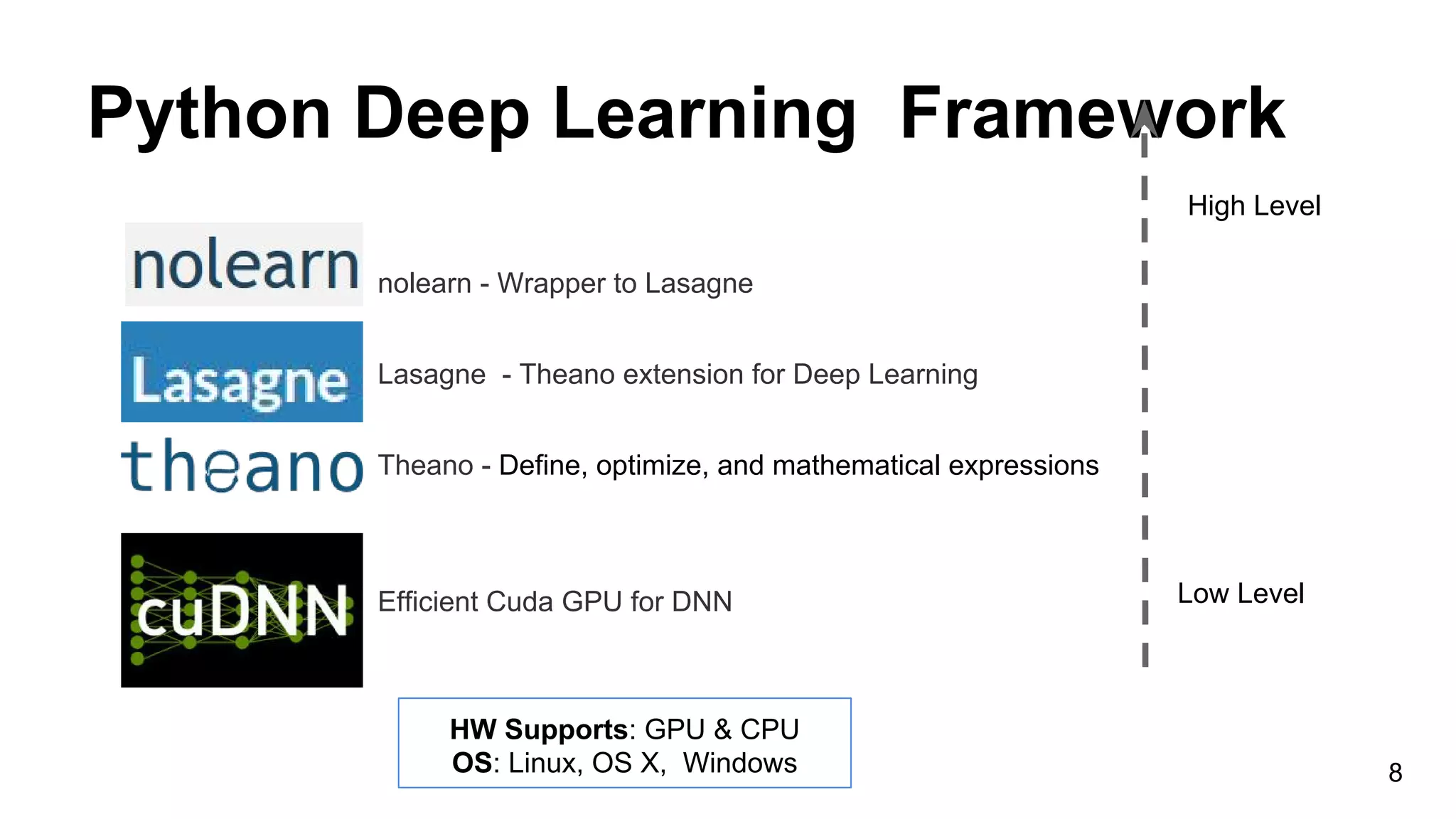

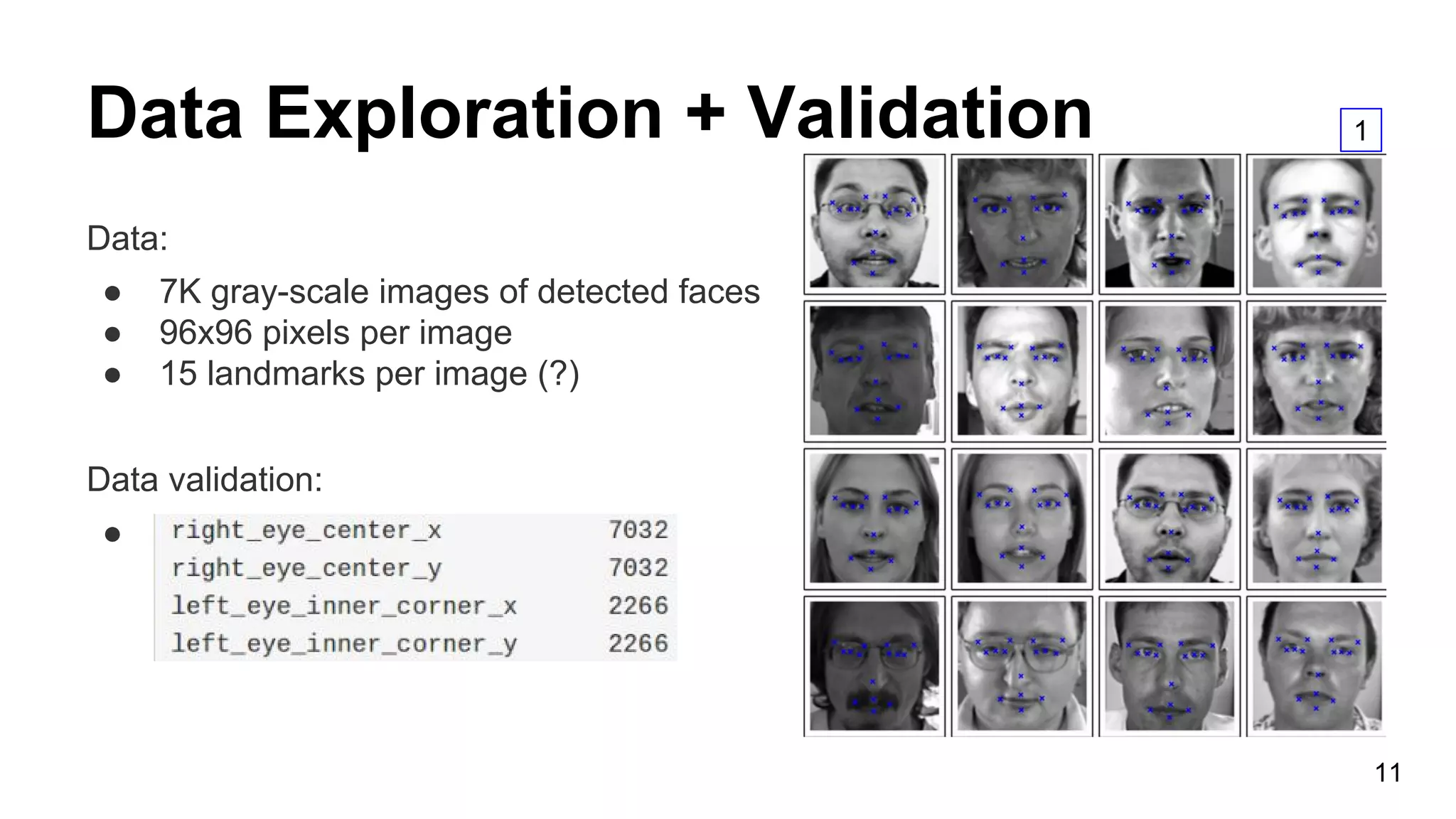

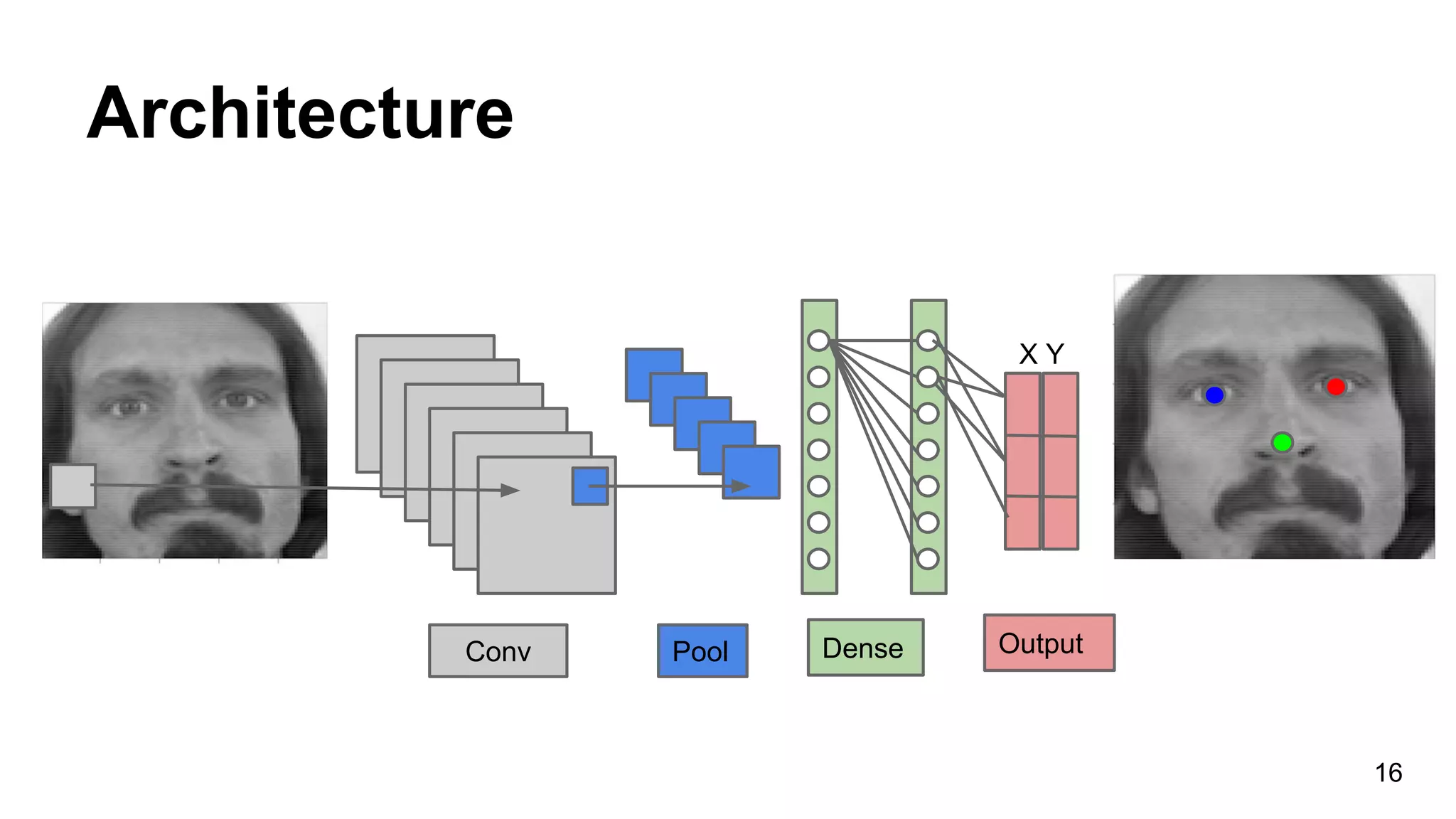

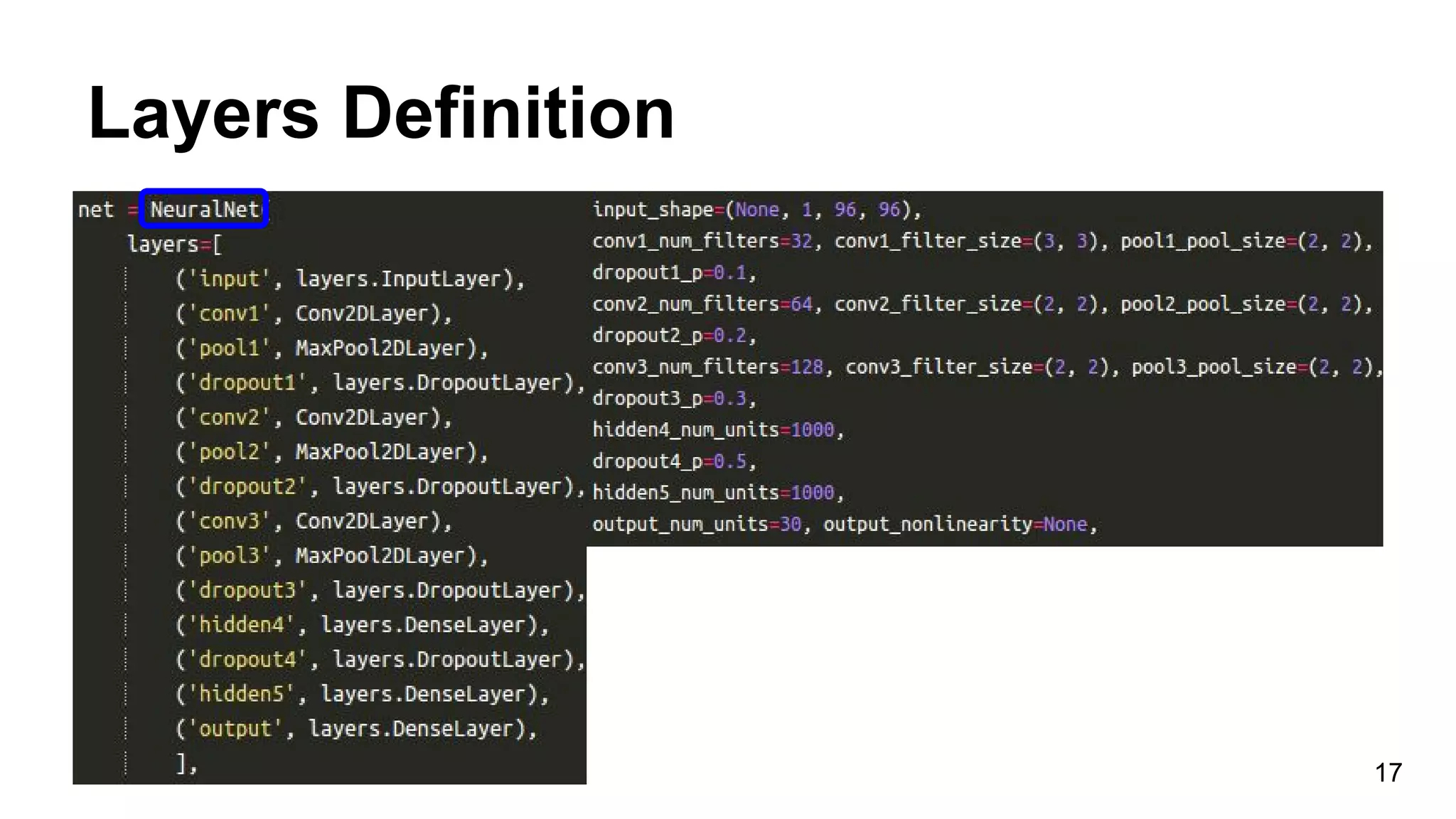

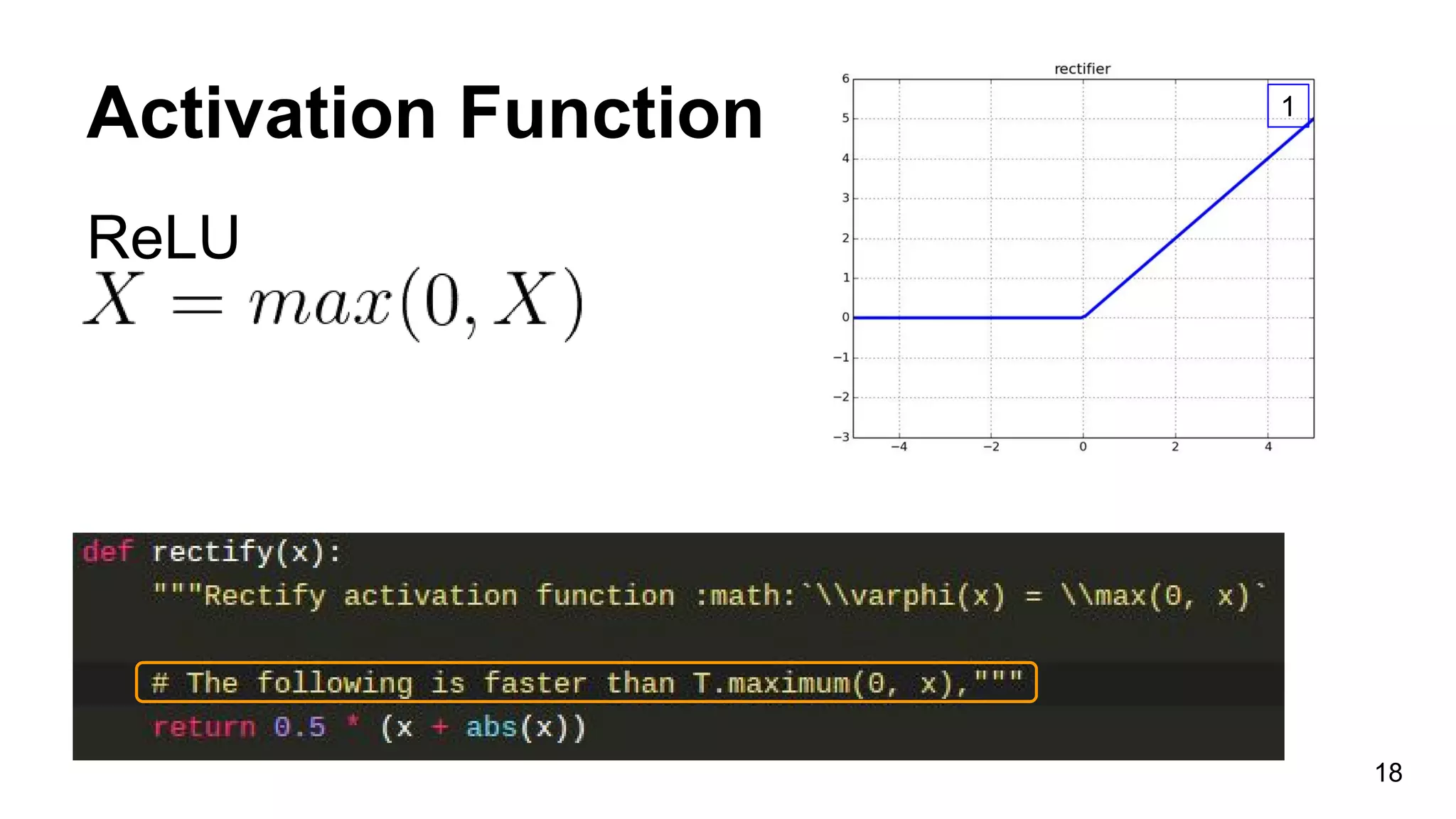

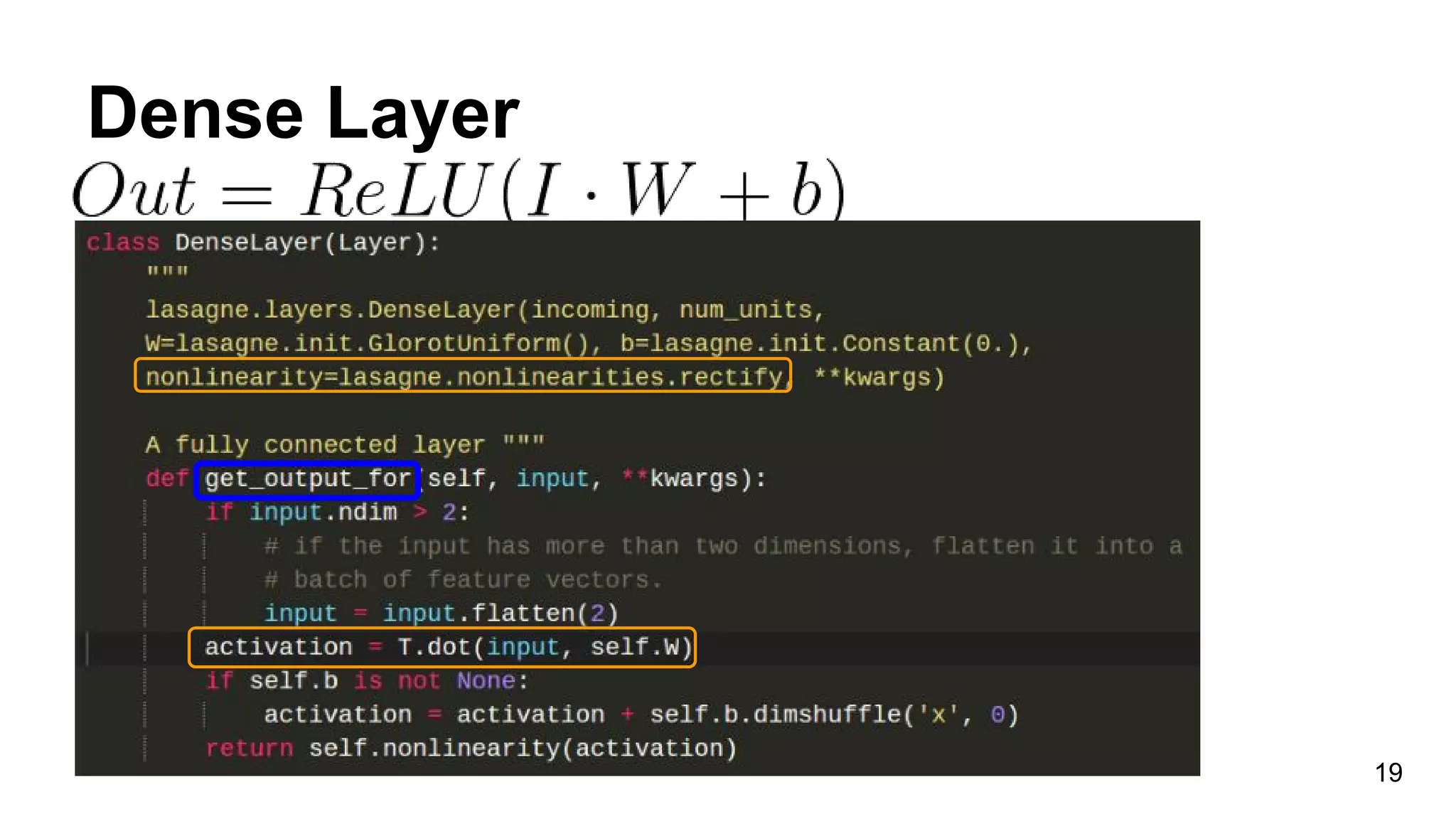

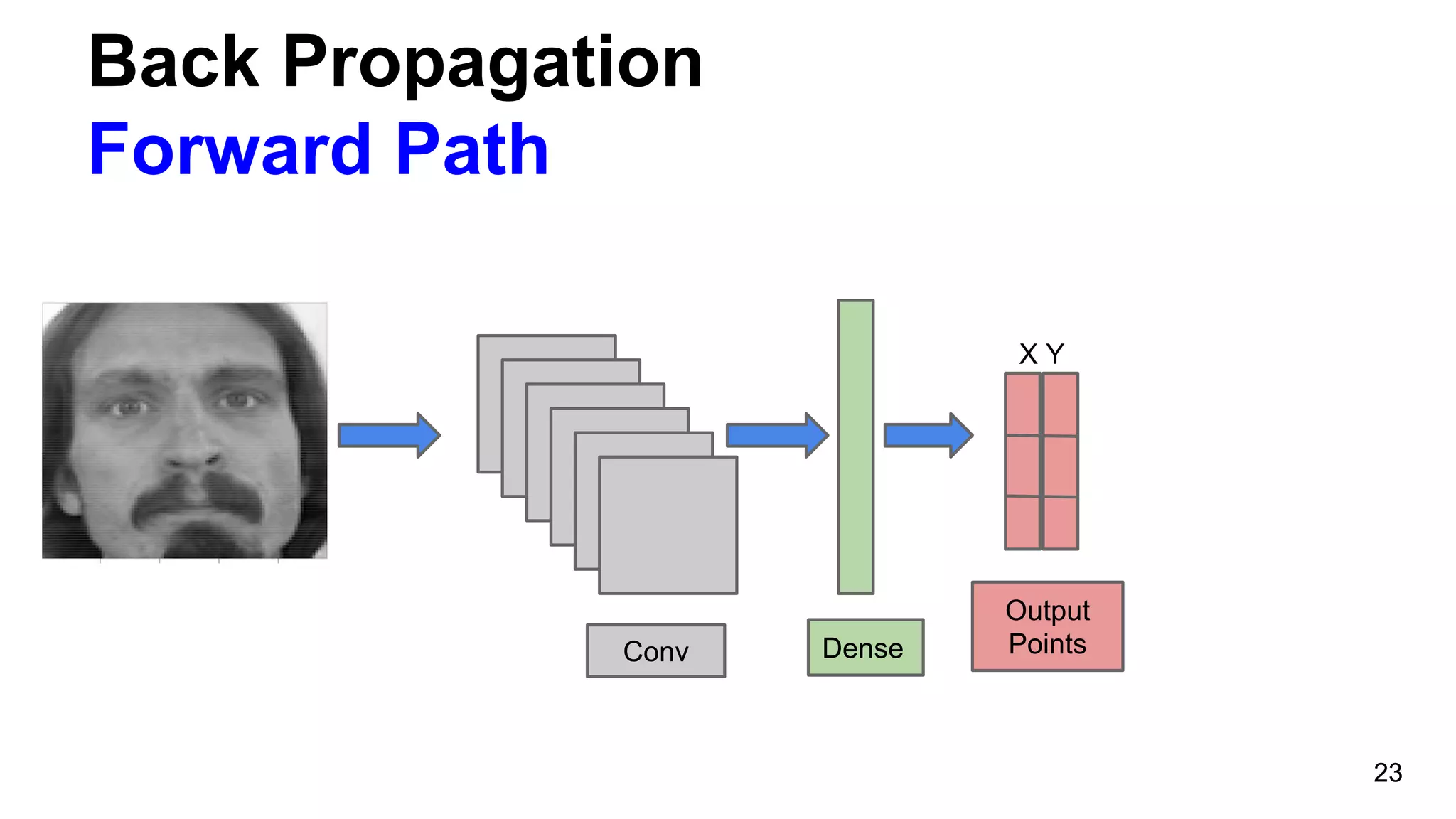

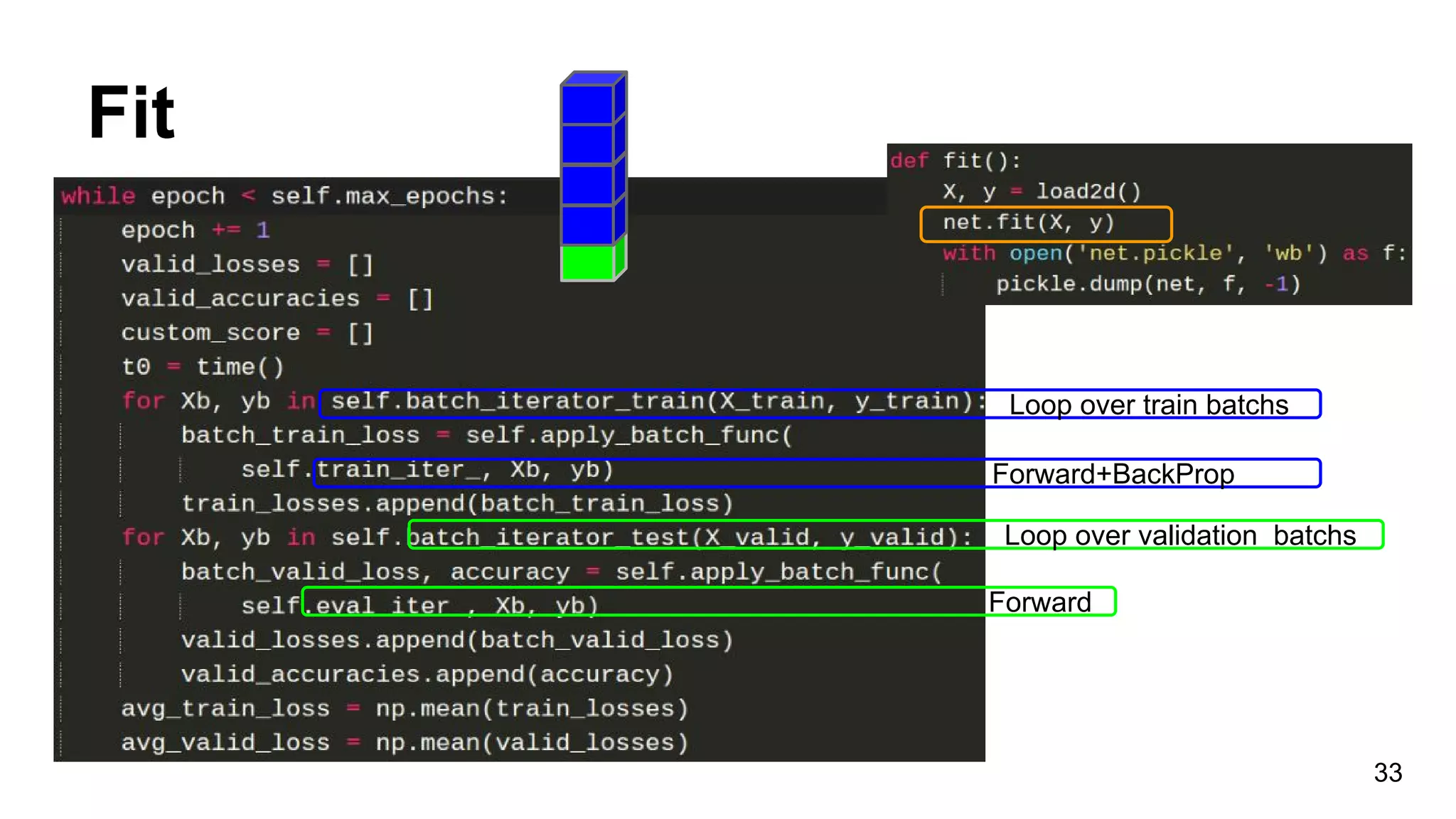

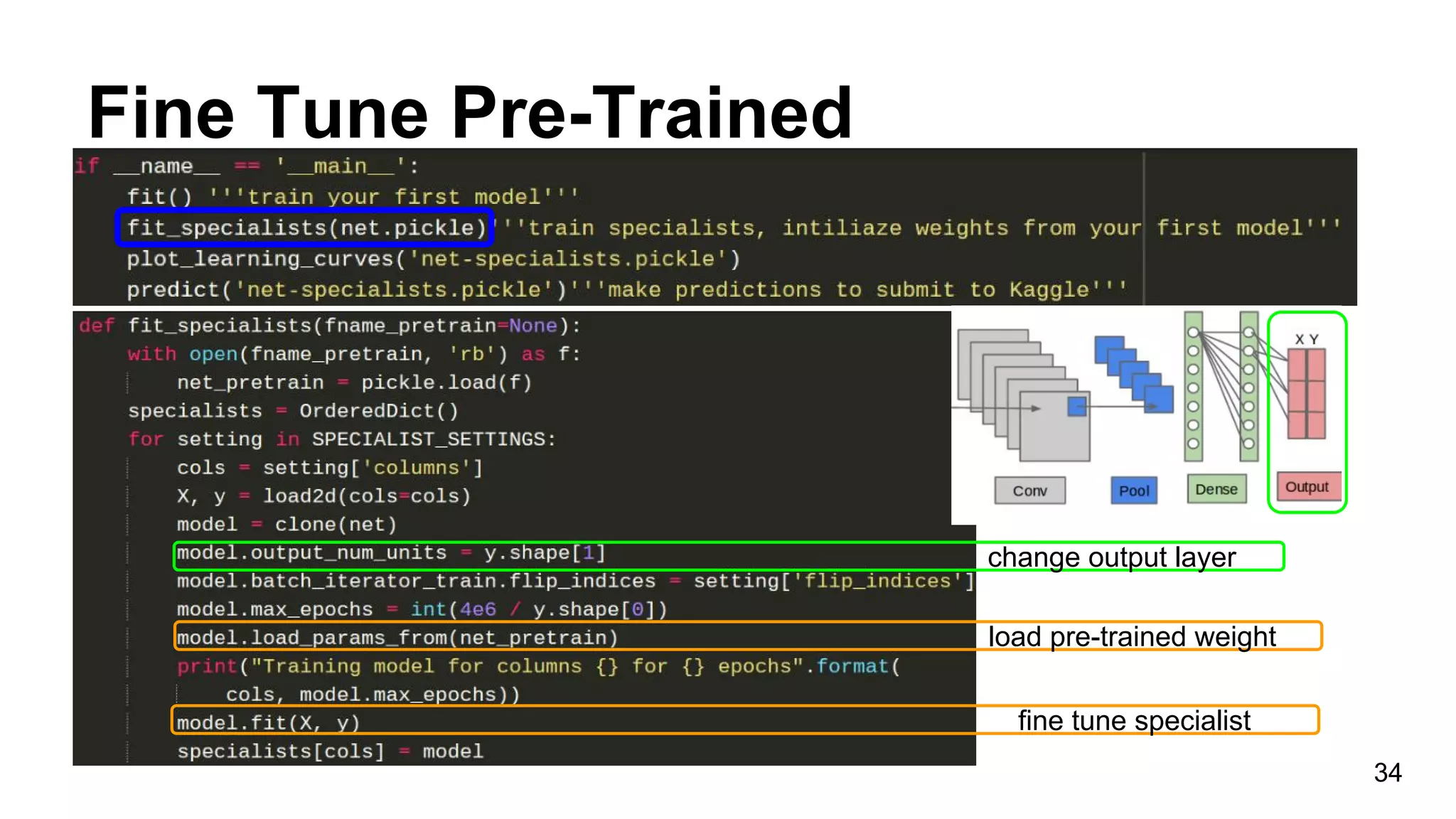

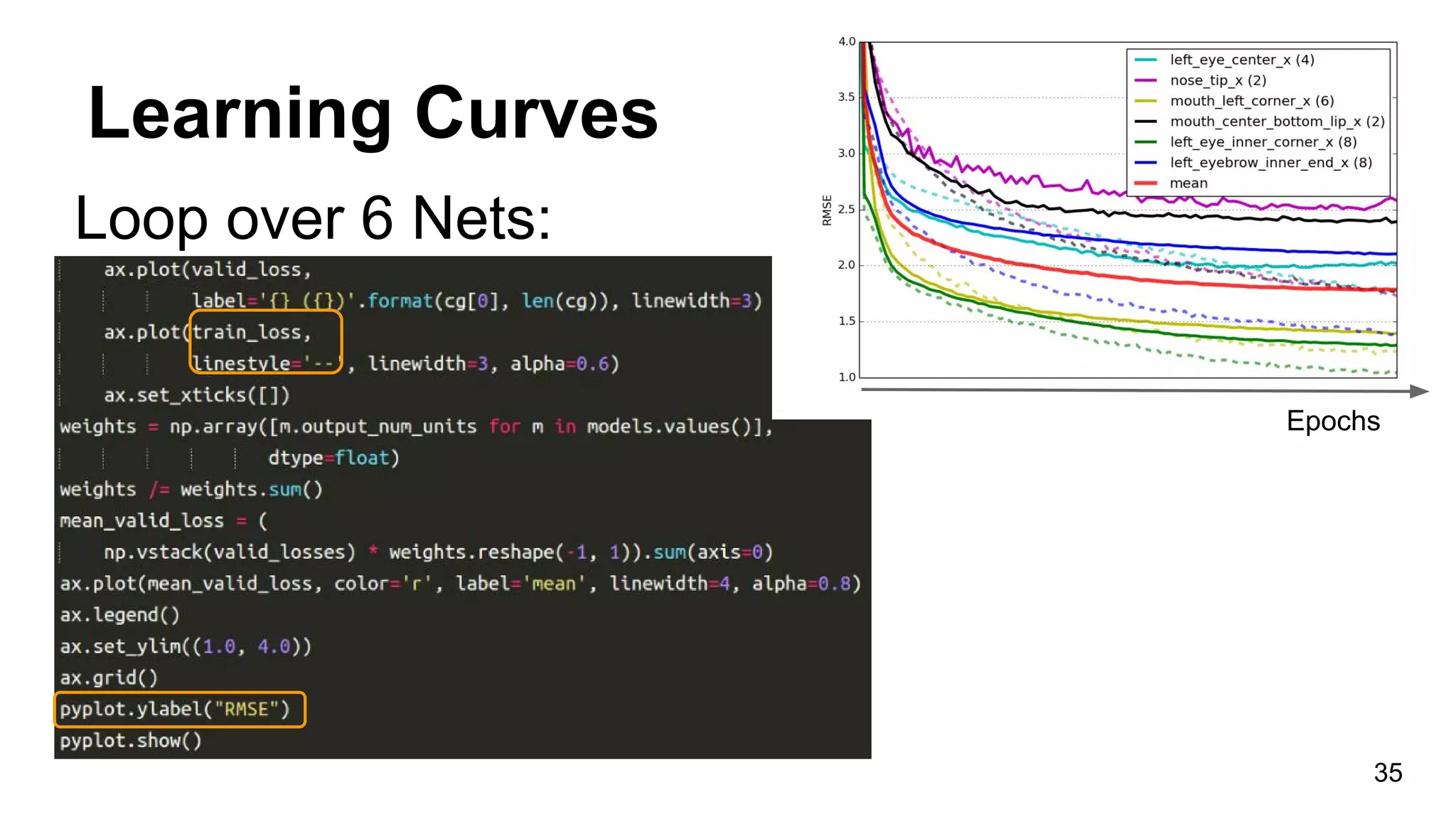

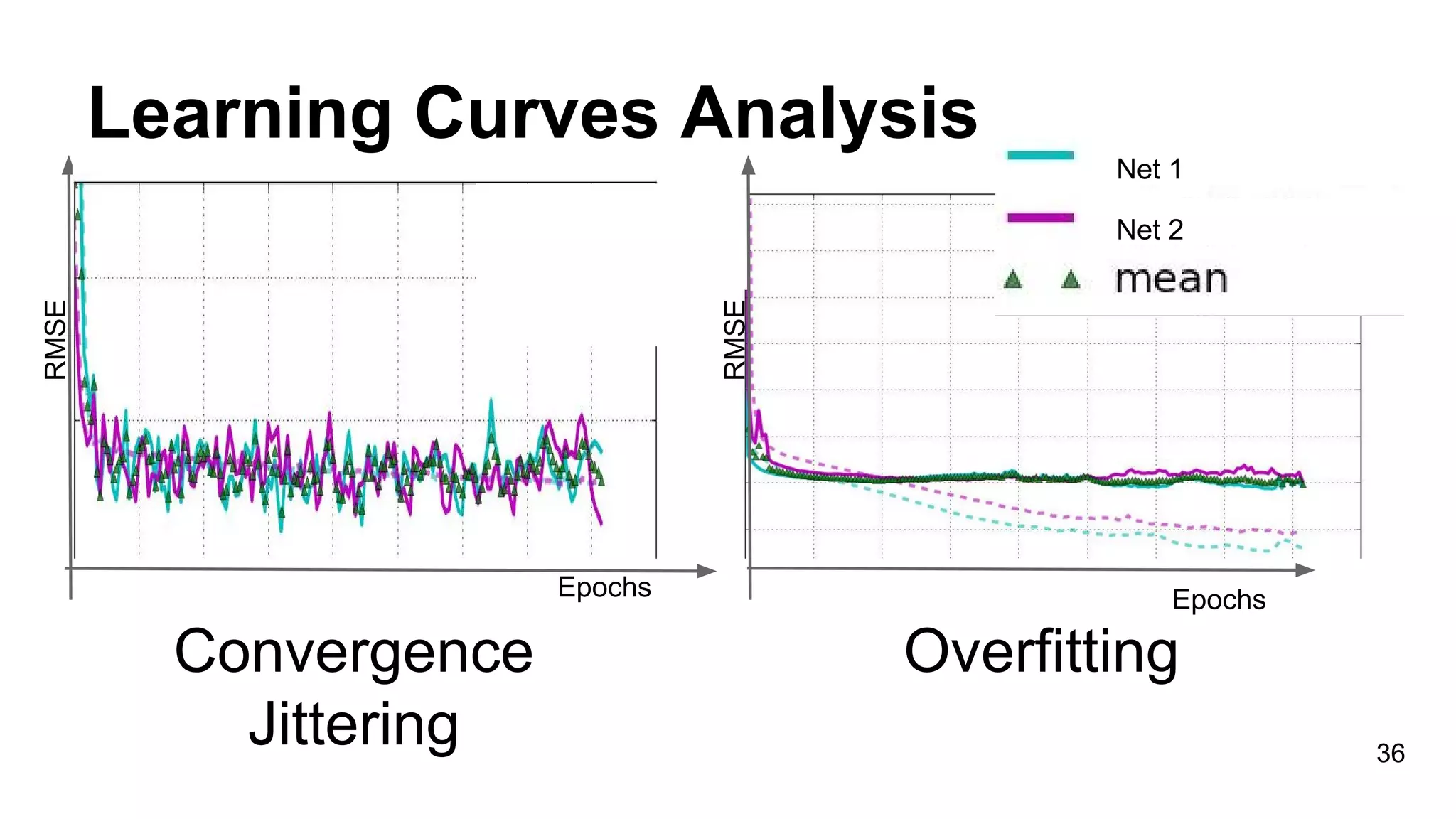

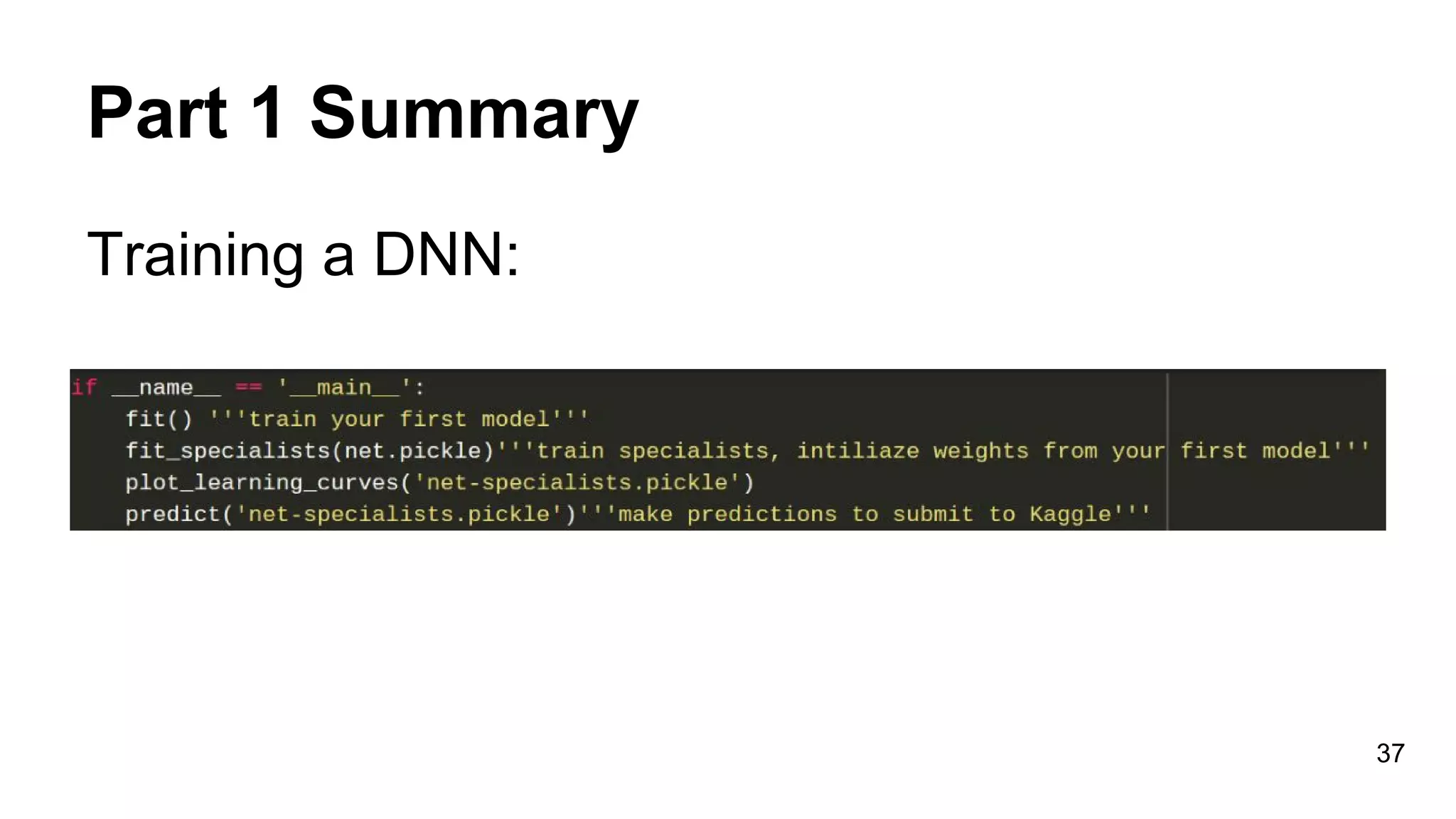

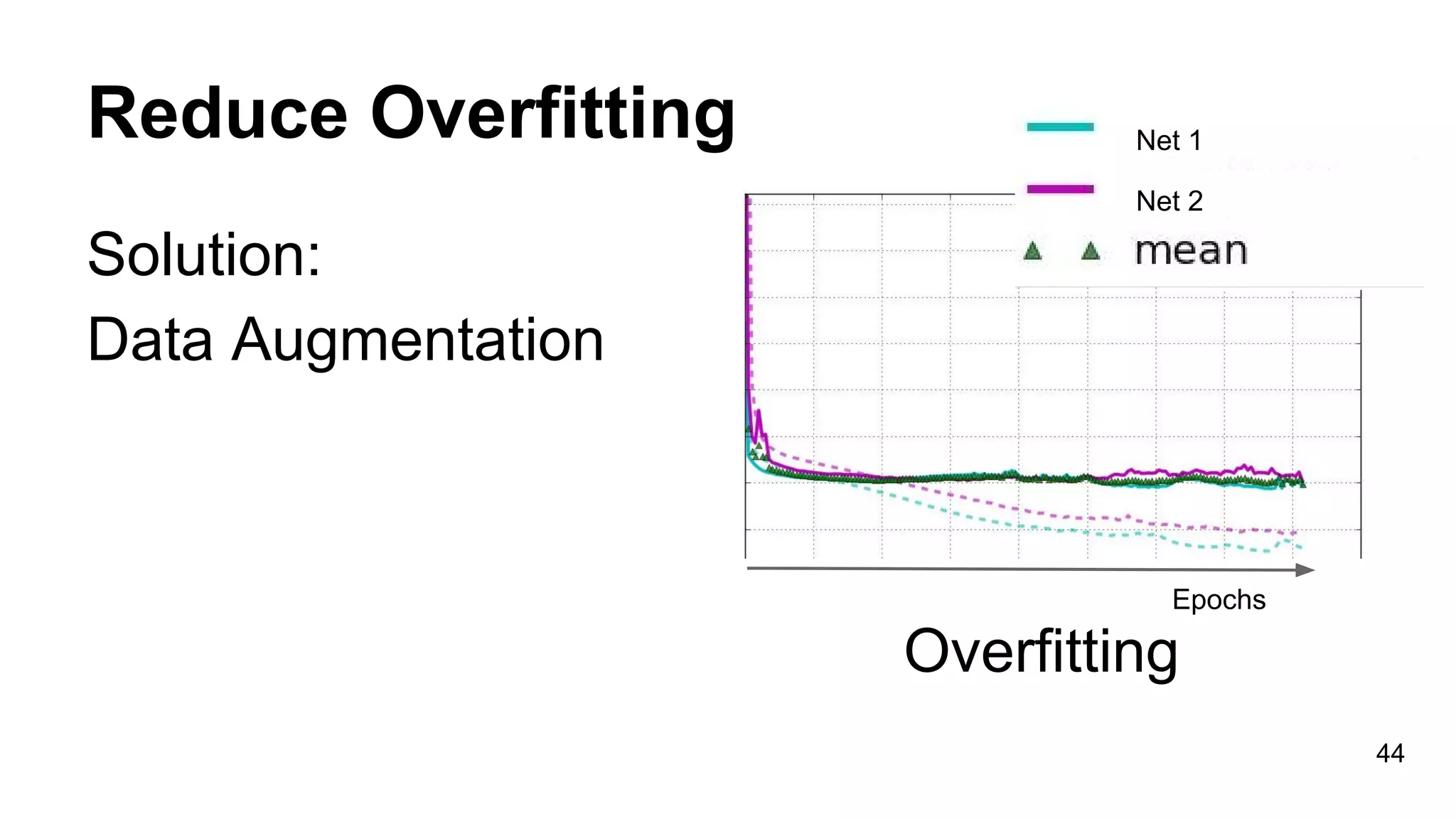

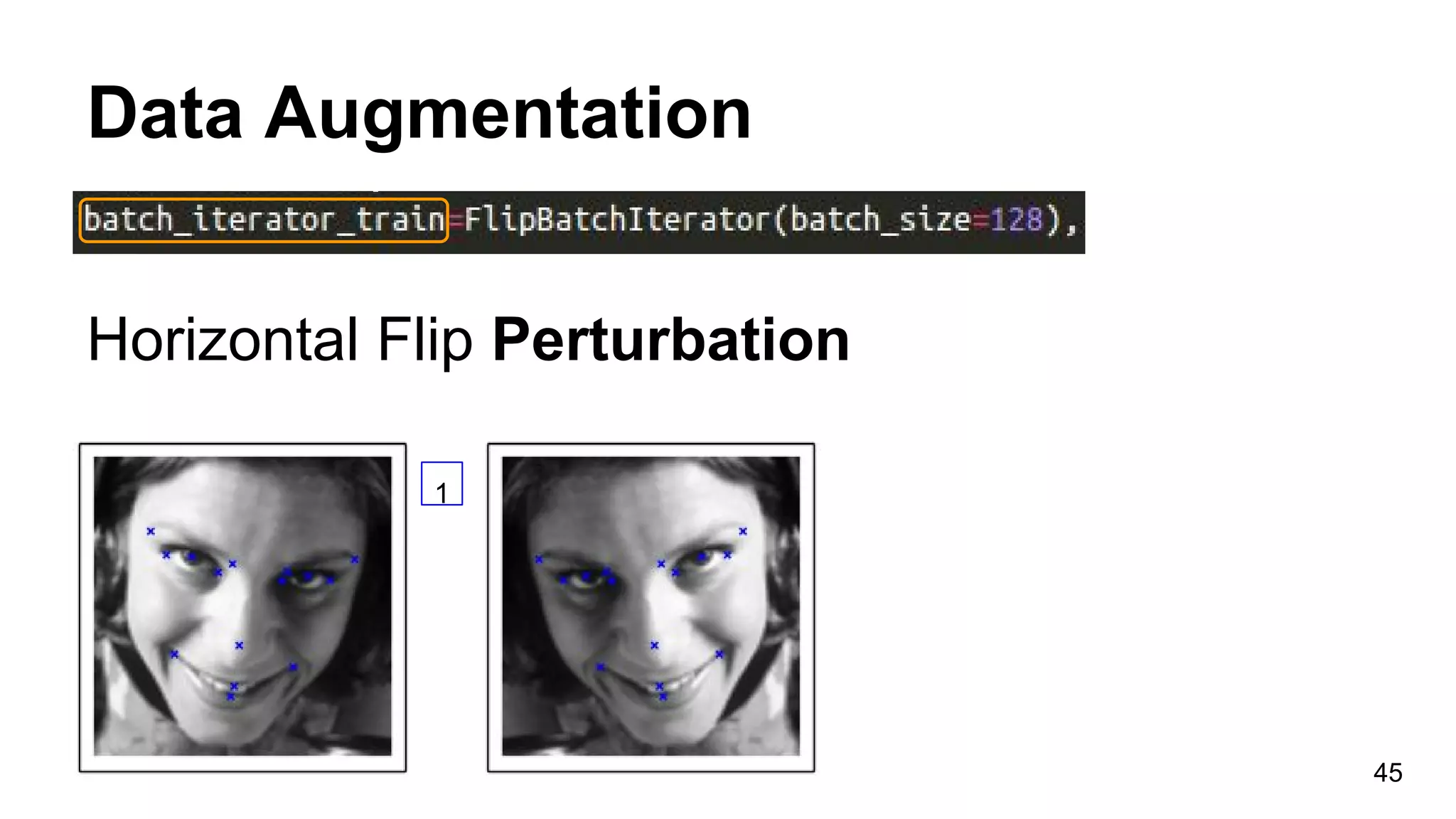

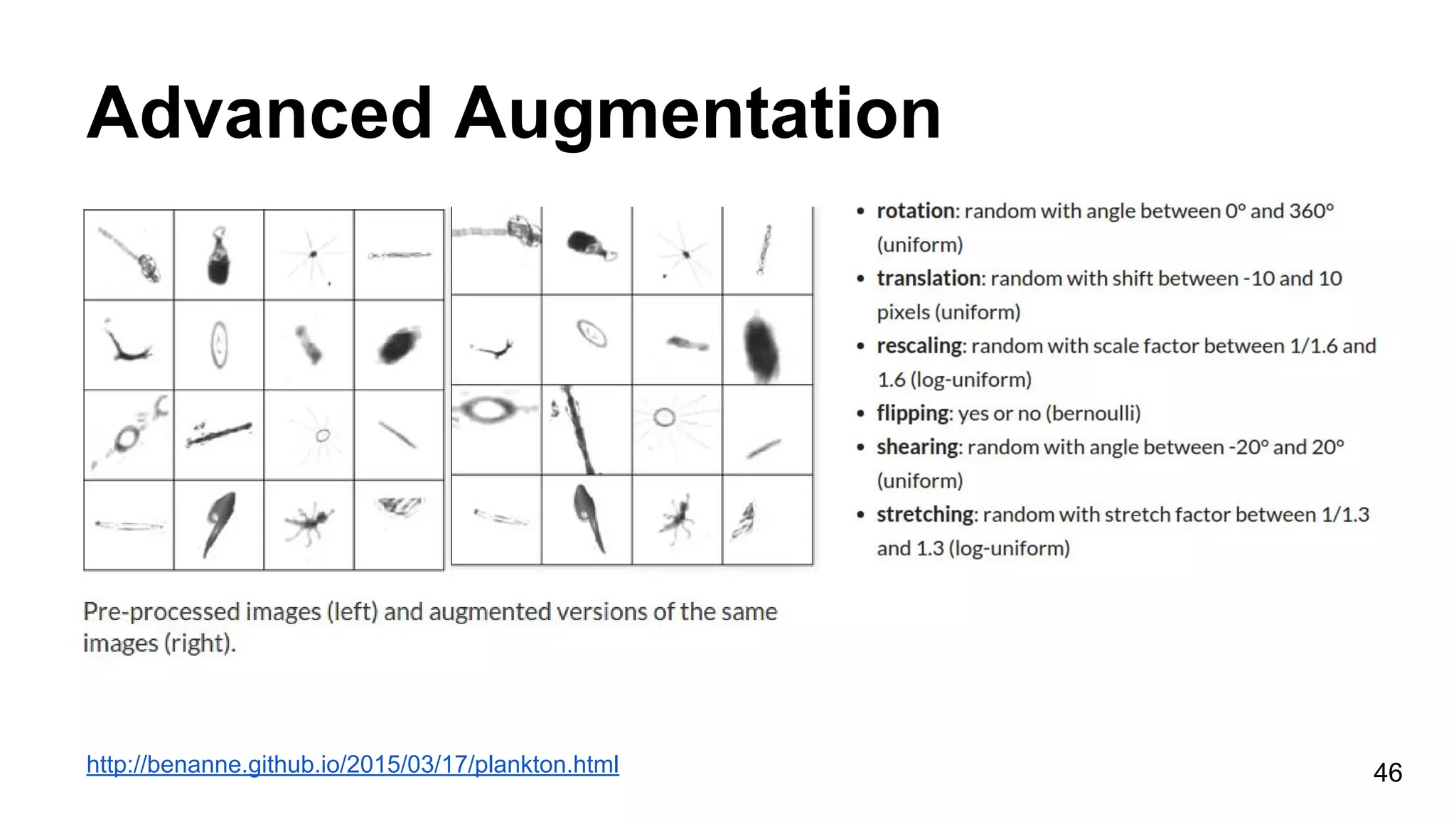

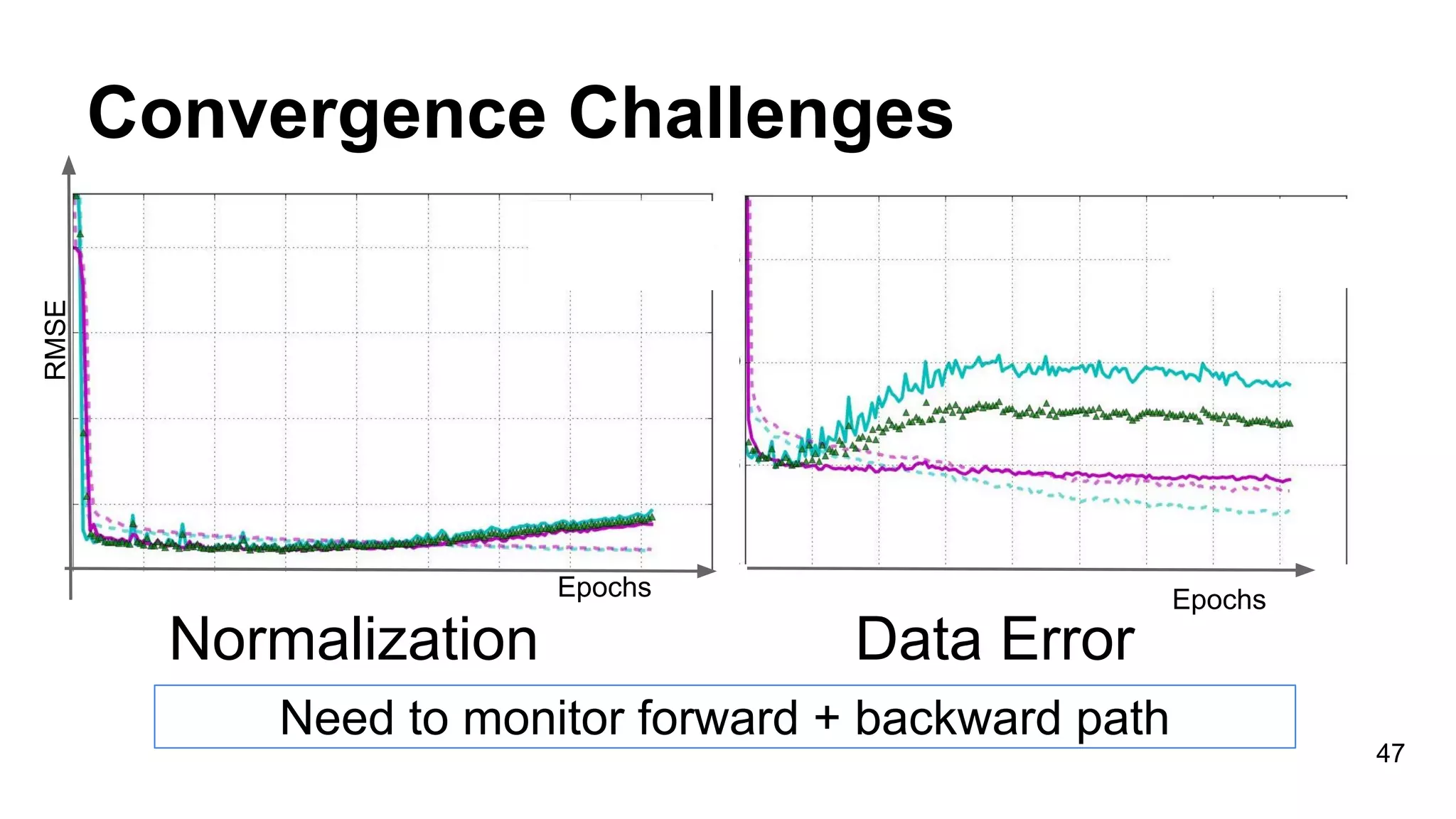

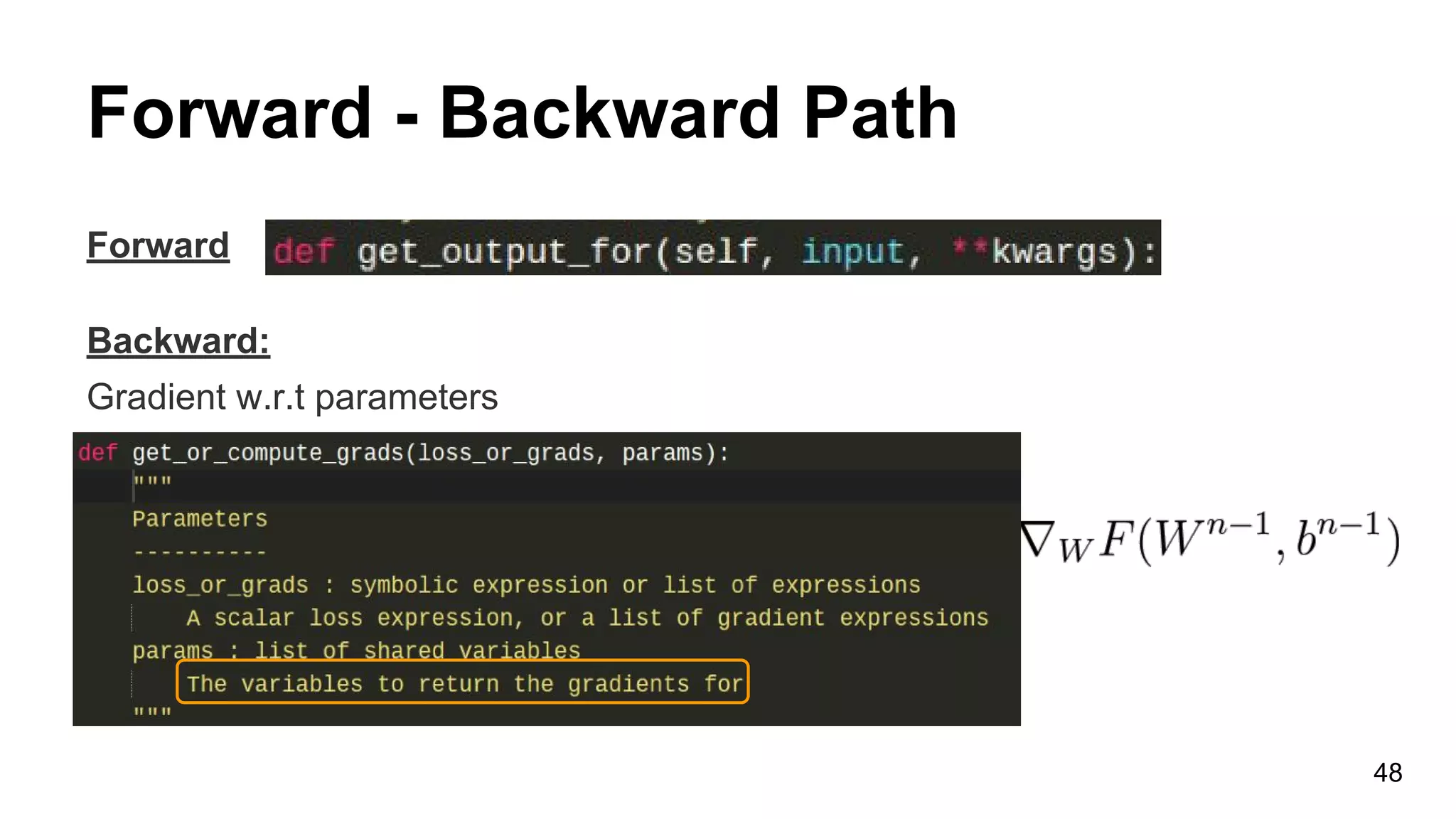

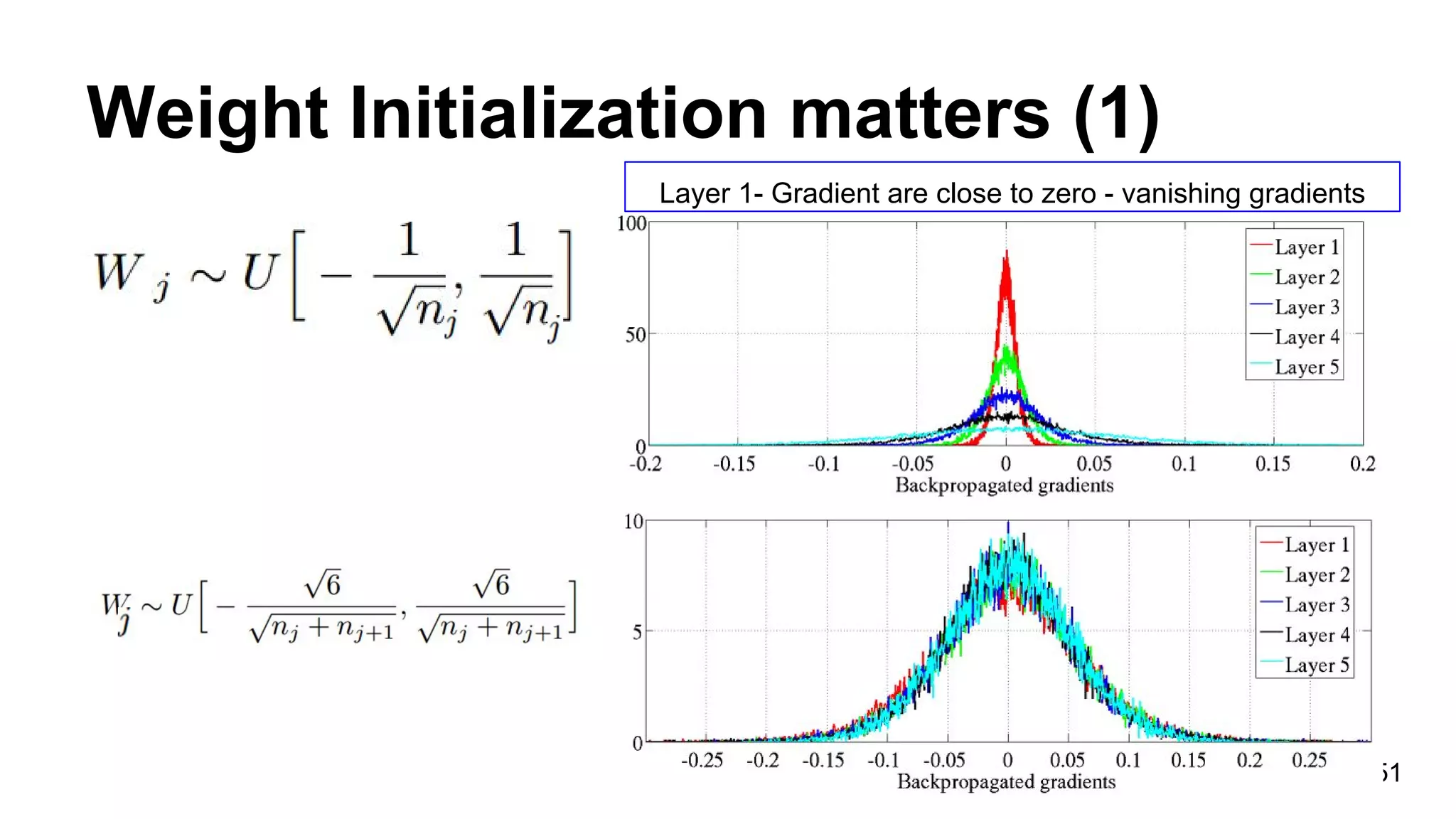

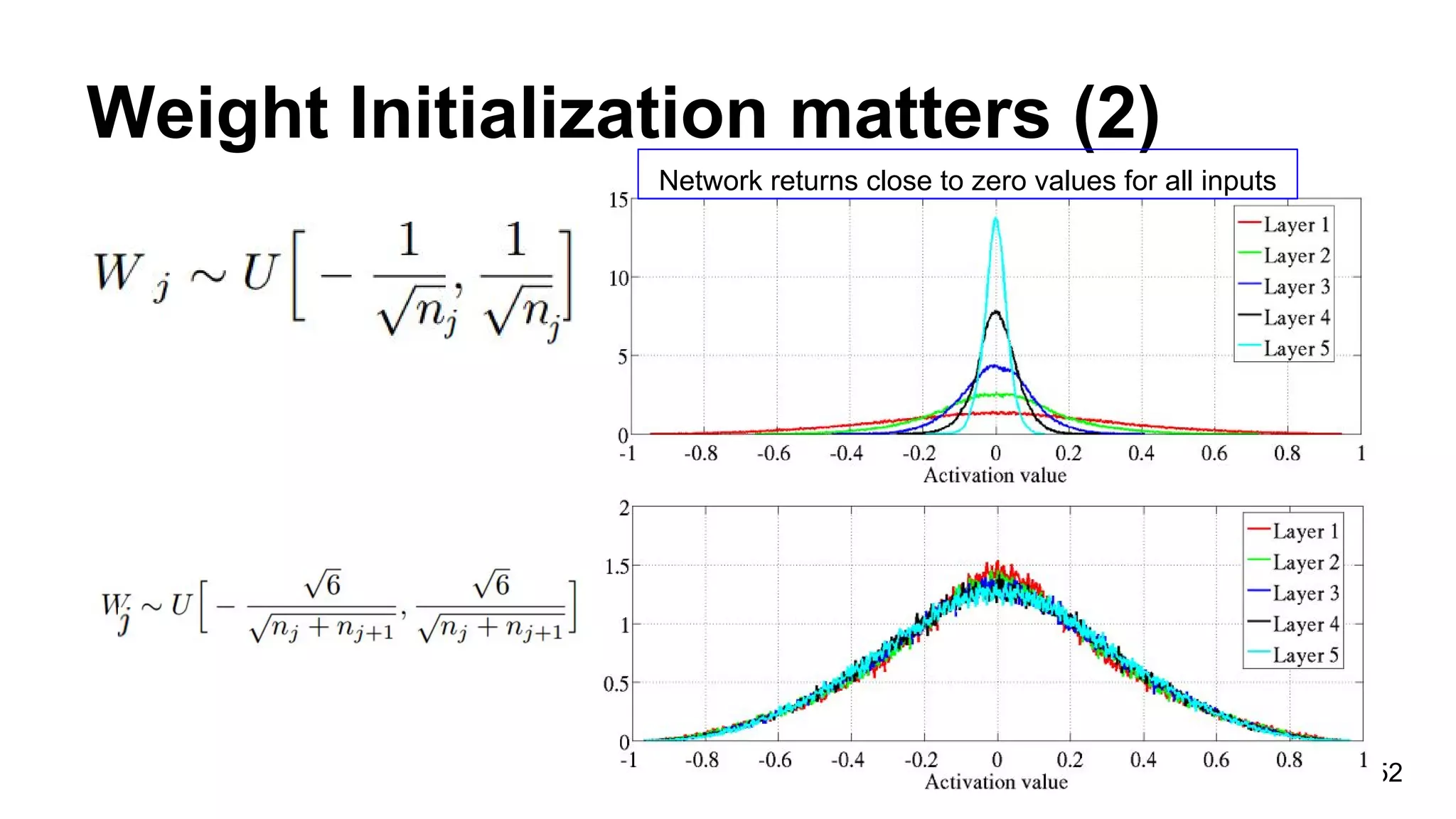

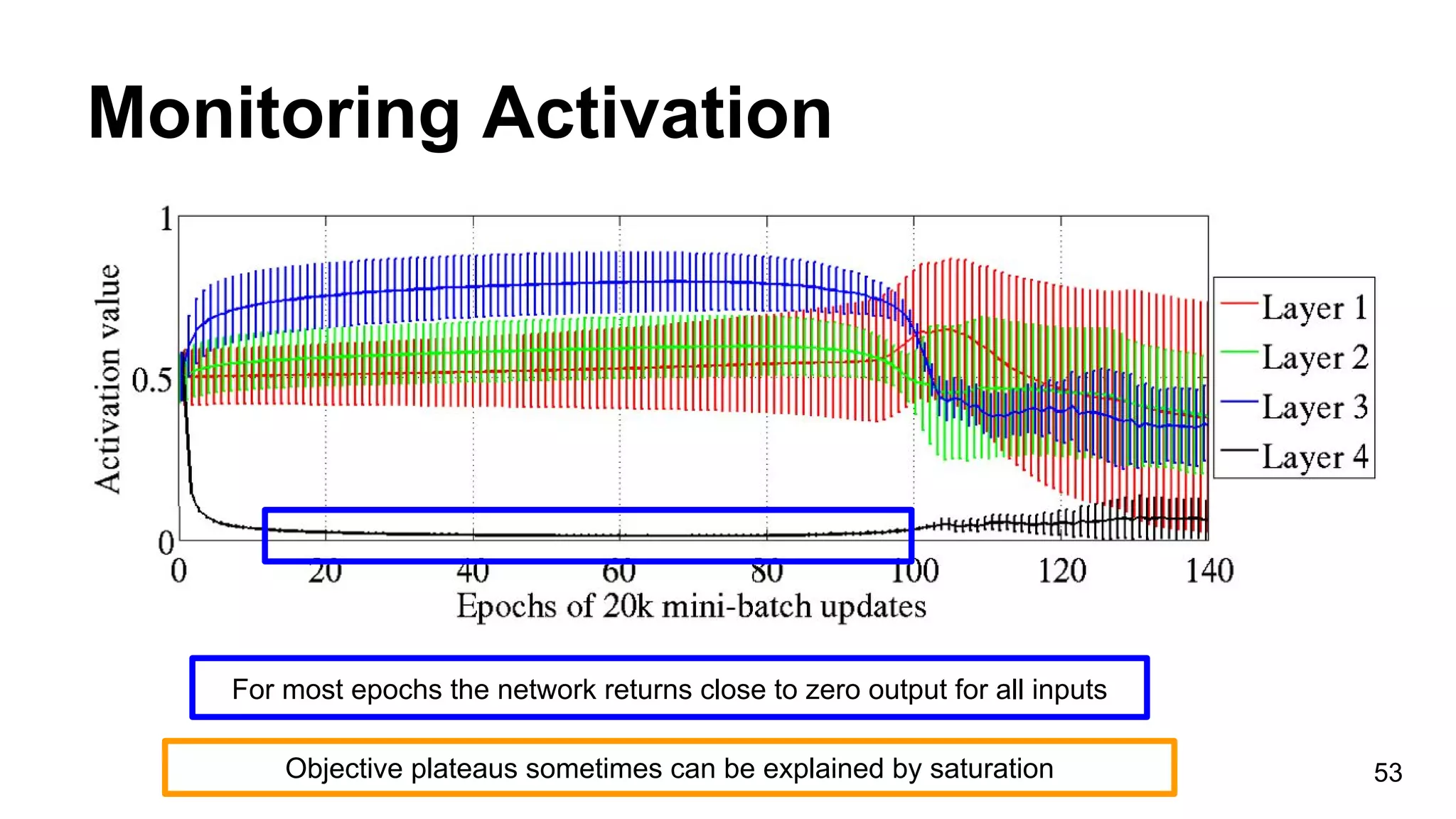

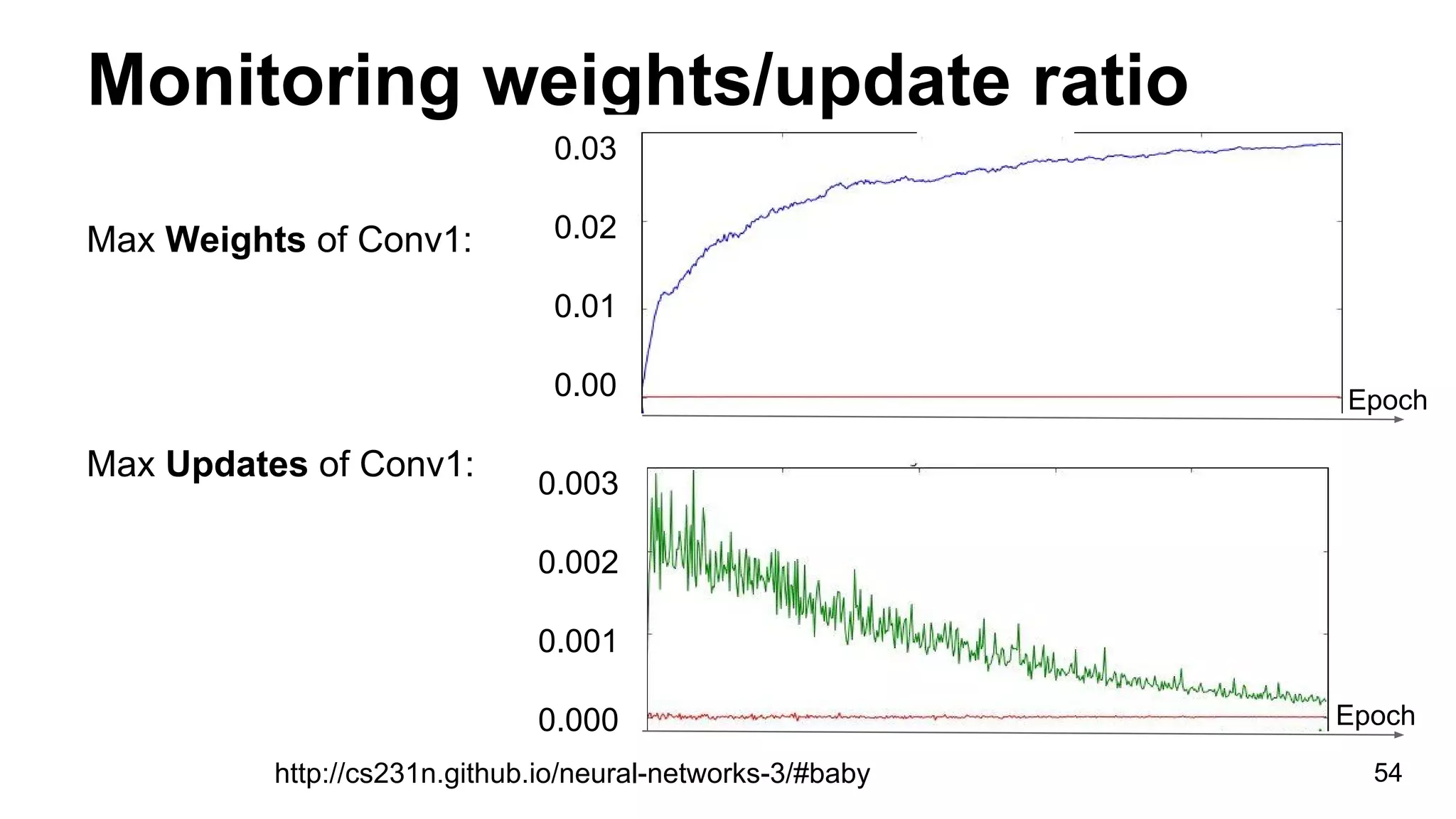

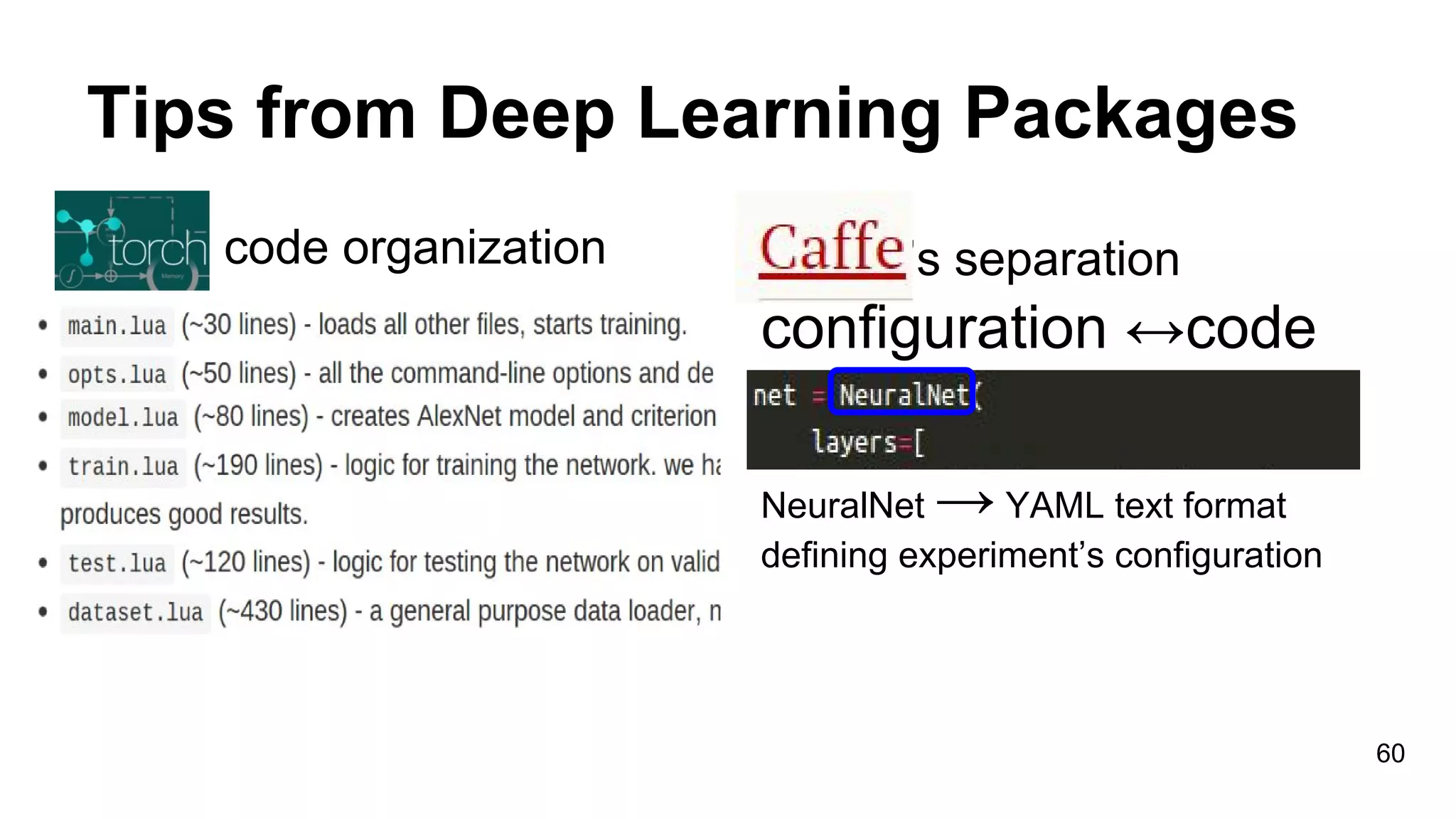

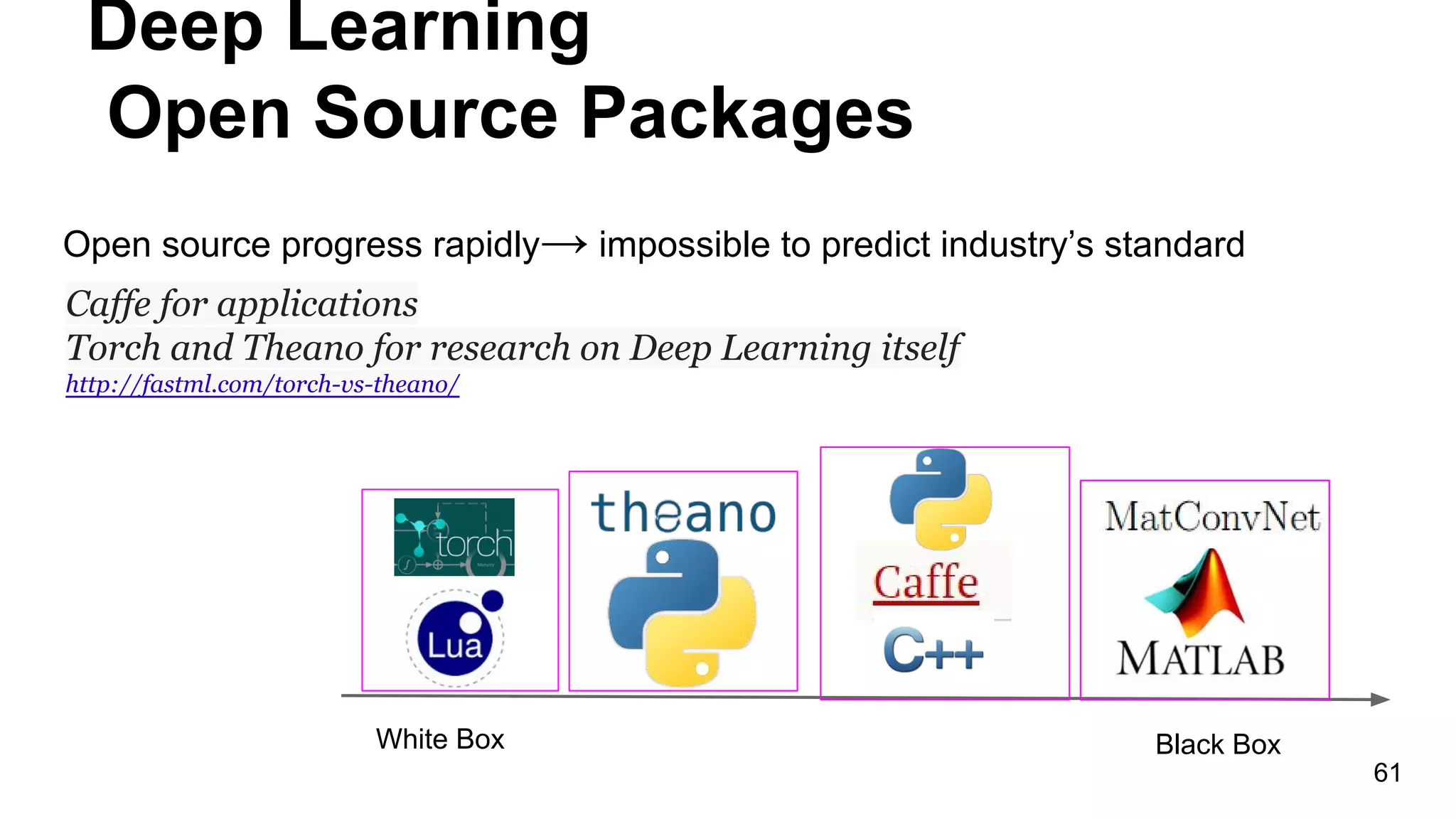

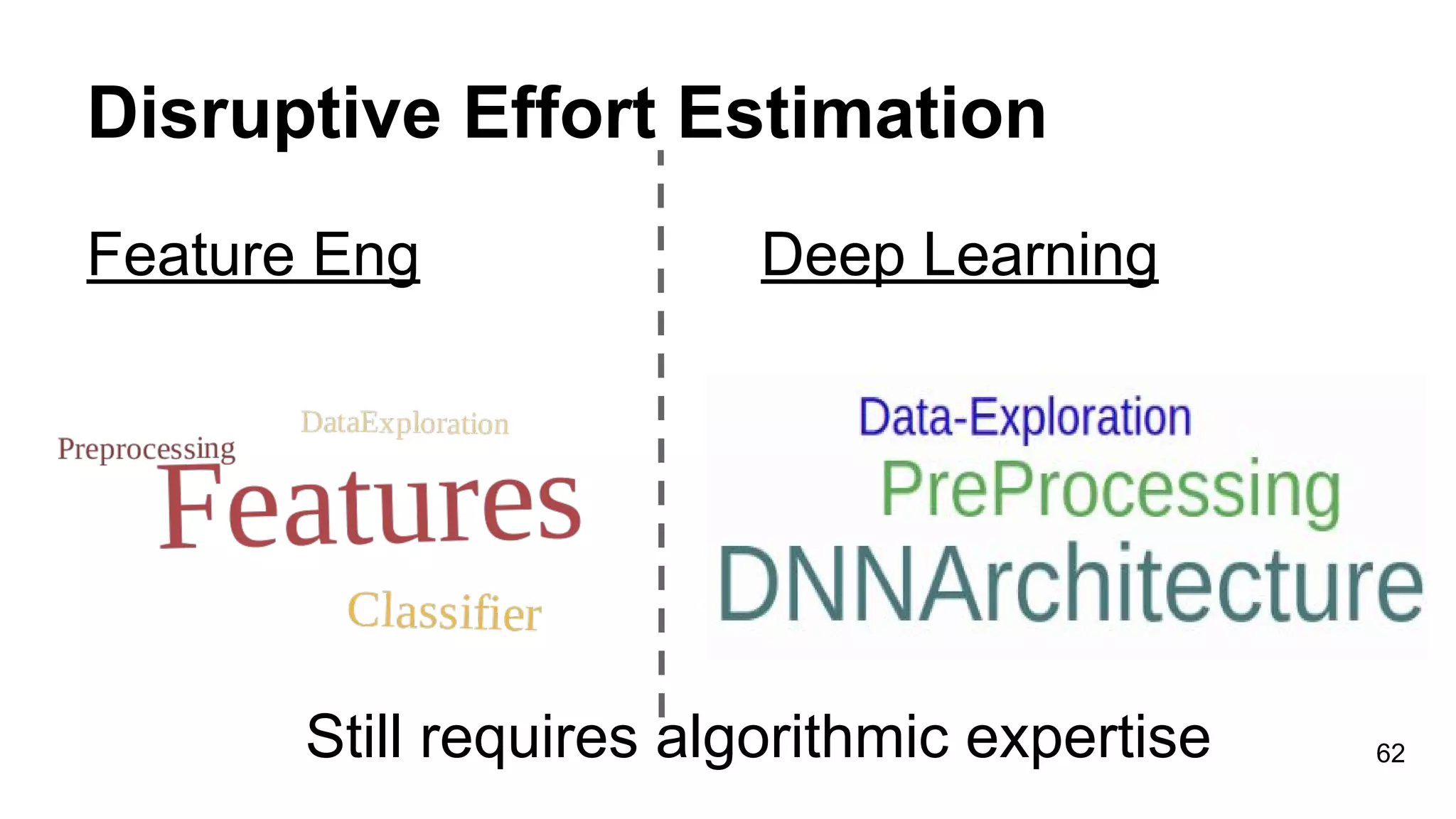

This document summarizes a presentation on deep learning in Python. It walks through training a deep neural network (DNN): analyzing the data, designing the architecture, choosing the optimization method, and running the training. It then covers ways to improve the DNN, such as data augmentation and monitoring how each layer trains. Finally, it reviews popular open-source deep learning packages usable from Python, such as Theano, Keras, and Caffe, and their uses in applications and research.
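Data augmentation enlarges a training set by generating perturbed copies of each example whose label stays the same. The following is a minimal, framework-free Python sketch that assumes grayscale images are stored as nested lists of pixel values. It applies two common label-preserving transforms: a random horizontal flip followed by a random crop. The function names (`horizontal_flip`, `random_crop`, `augment`, `augmented_batch`) are hypothetical illustrations. They do not come from the presentation or from the Theano, Keras, or Caffe APIs.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical representation: a grayscale image as rows of pixel values.
Image = List[List[float]]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left-to-right."""
    return [list(reversed(row)) for row in img]


def random_crop(img: Image, size: int, rng: random.Random) -> Image:
    """Cut a size x size window from a random position inside the image."""
    h, w = len(img), len(img[0])
    if size > h or size > w:
        raise ValueError("crop size is larger than the image")
    top = rng.randint(0, h - size)   # randint is inclusive on both ends
    left = rng.randint(0, w - size)
    return [row[left:left + size] for row in img[top:top + size]]


def augment(img: Image, crop_size: int, rng: random.Random) -> Image:
    """Produce one randomly perturbed copy: flip with probability 0.5, then crop."""
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    return random_crop(img, crop_size, rng)


def augmented_batch(
    images: Sequence[Image],
    labels: Sequence[int],
    crop_size: int,
    copies: int,
    seed: int = 0,
) -> Tuple[List[Image], List[int]]:
    """Expand a dataset with `copies` augmented versions of every image."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    out_x: List[Image] = []
    out_y: List[int] = []
    for img, label in zip(images, labels):
        for _ in range(copies):
            out_x.append(augment(img, crop_size, rng))
            out_y.append(label)  # flips and crops leave the label unchanged
    return out_x, out_y


if __name__ == "__main__":
    image = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # 4x4 toy image
    xs, ys = augmented_batch([image], [1], crop_size=3, copies=5)
    print(len(xs), len(xs[0]), len(xs[0][0]))  # prints "5 3 3"
```

Flips and crops suit tasks where mirroring or shifting does not change the class, which holds for many natural-image problems. For digits or text, a flip can change the meaning, so the choice of transforms should follow from the data analysis step.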