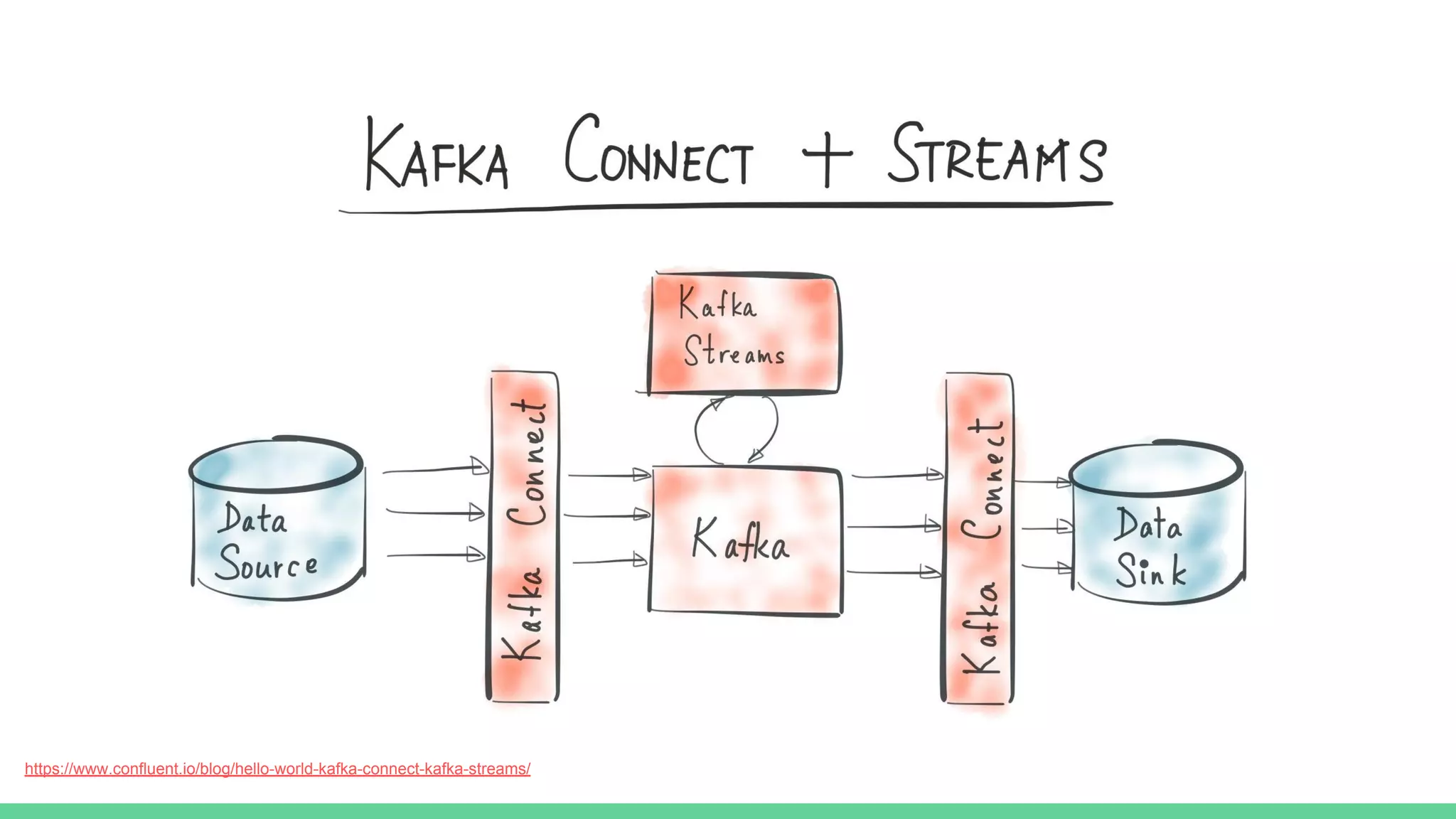

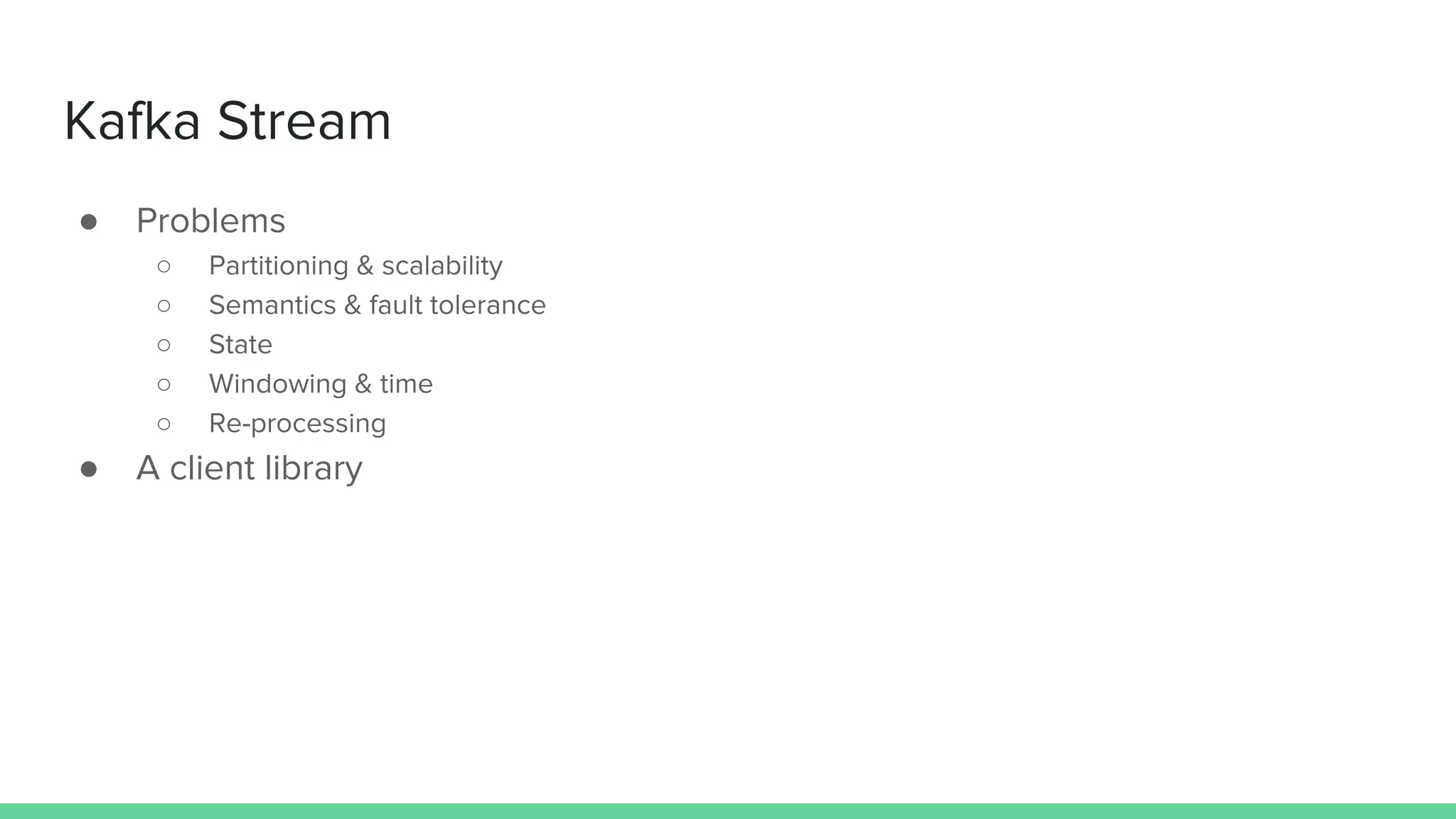

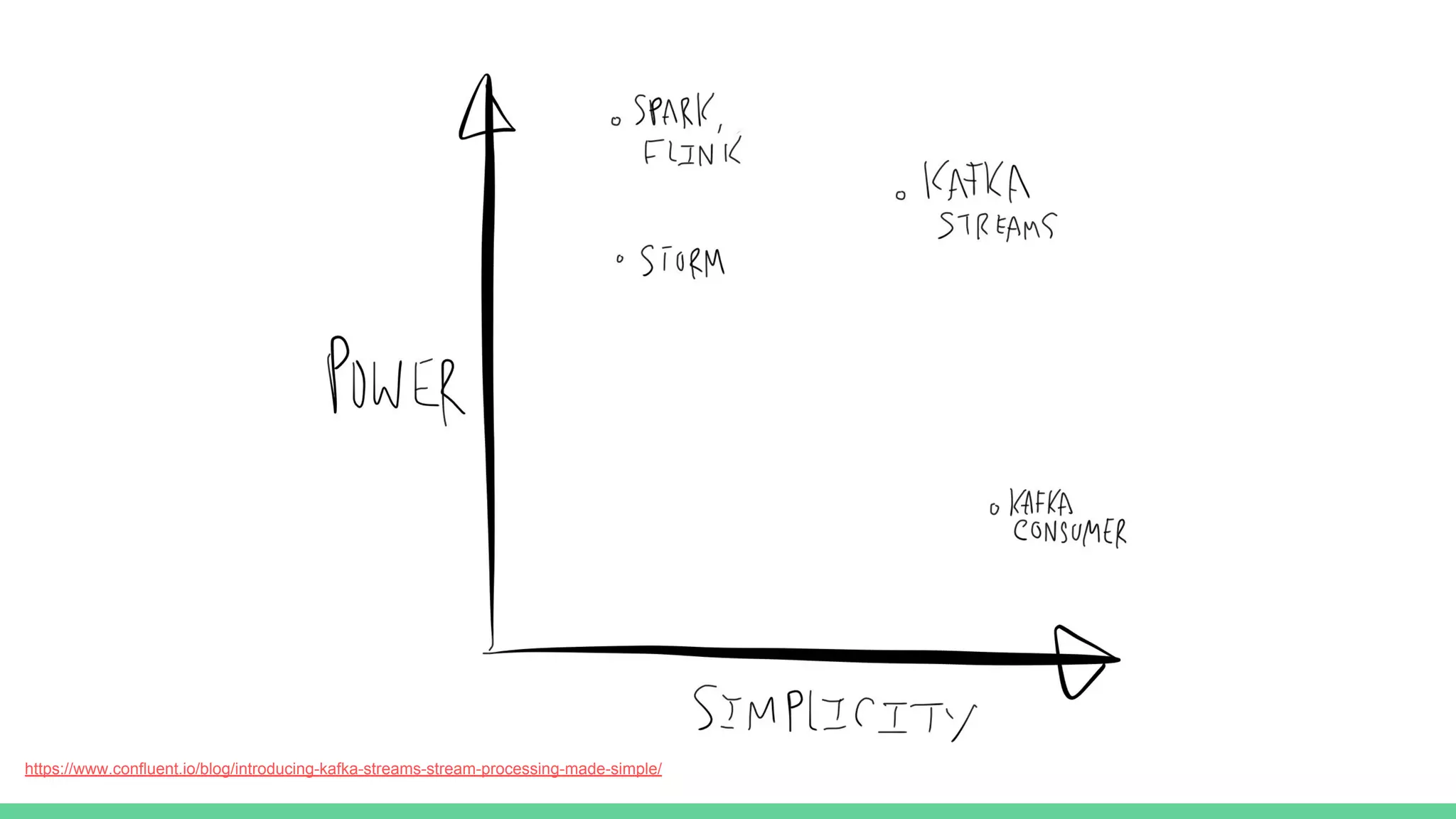

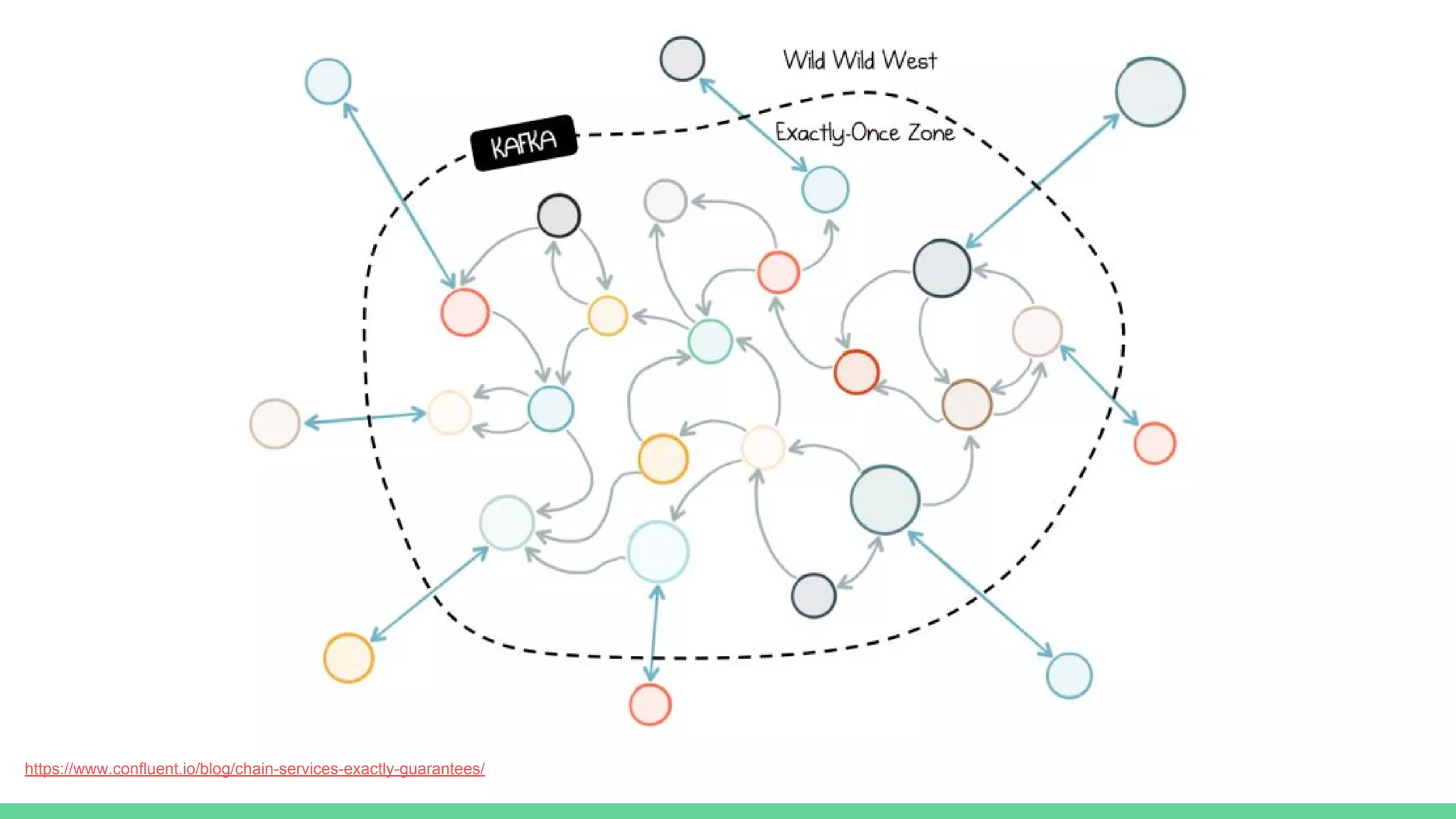

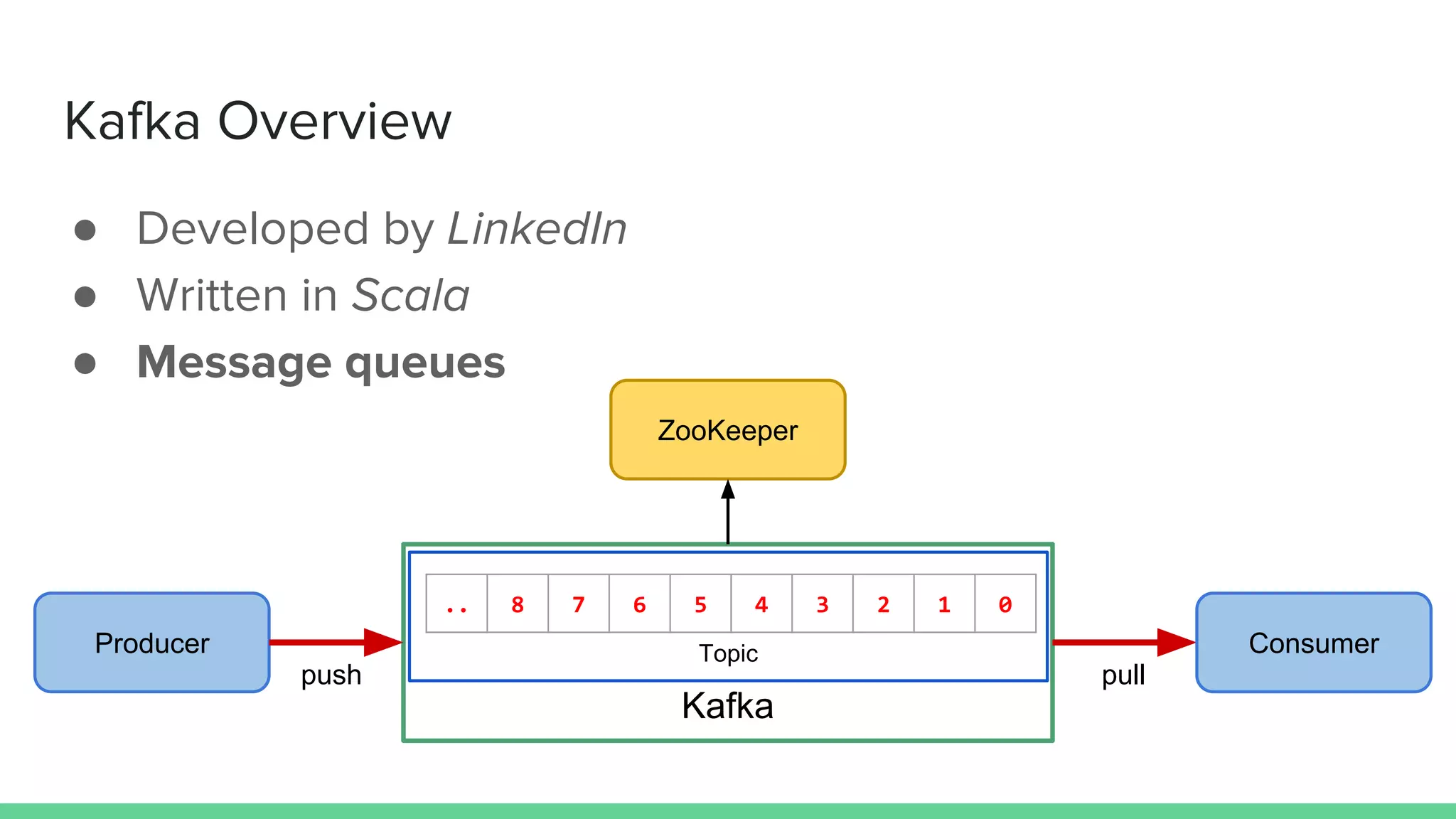

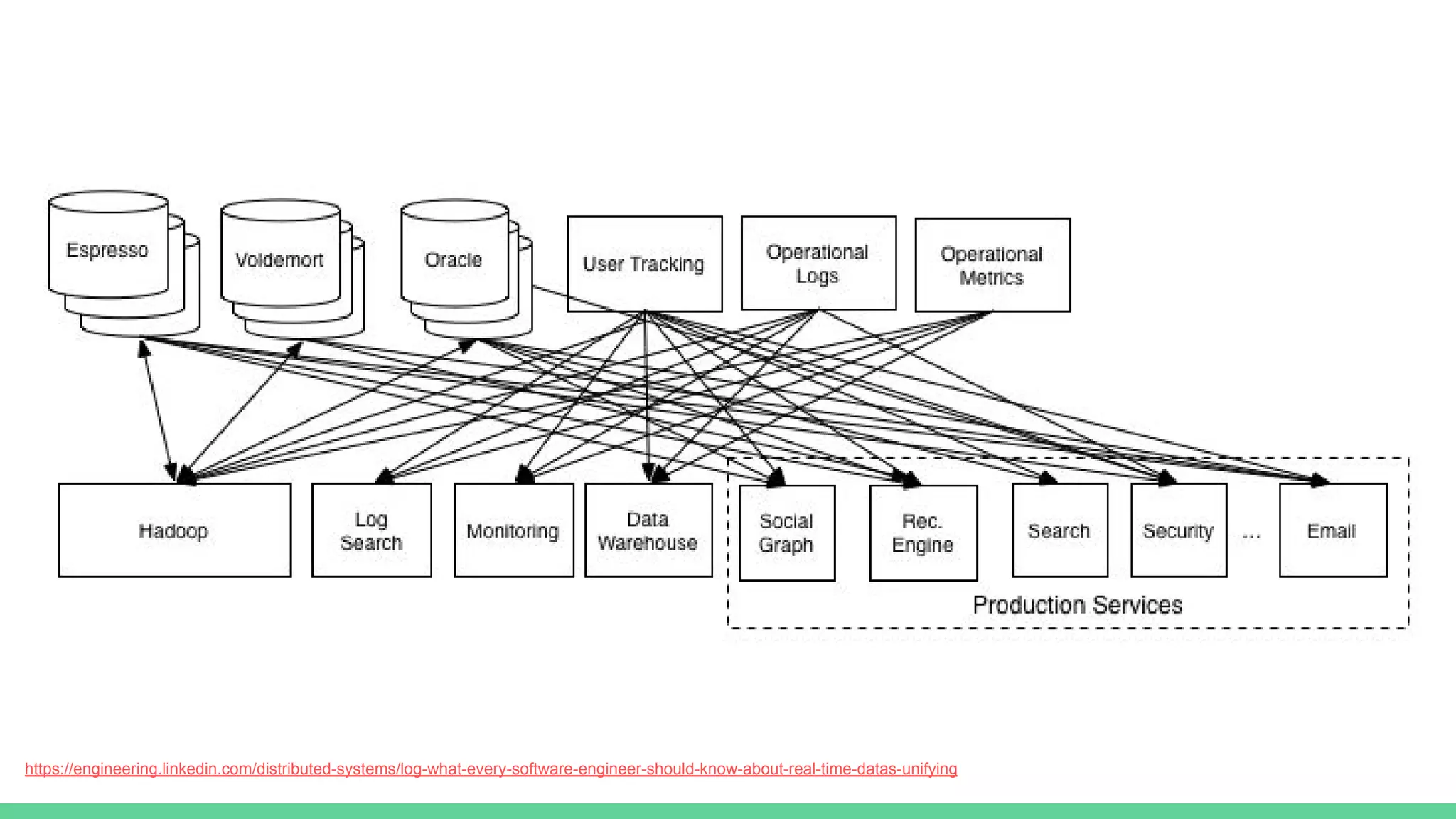

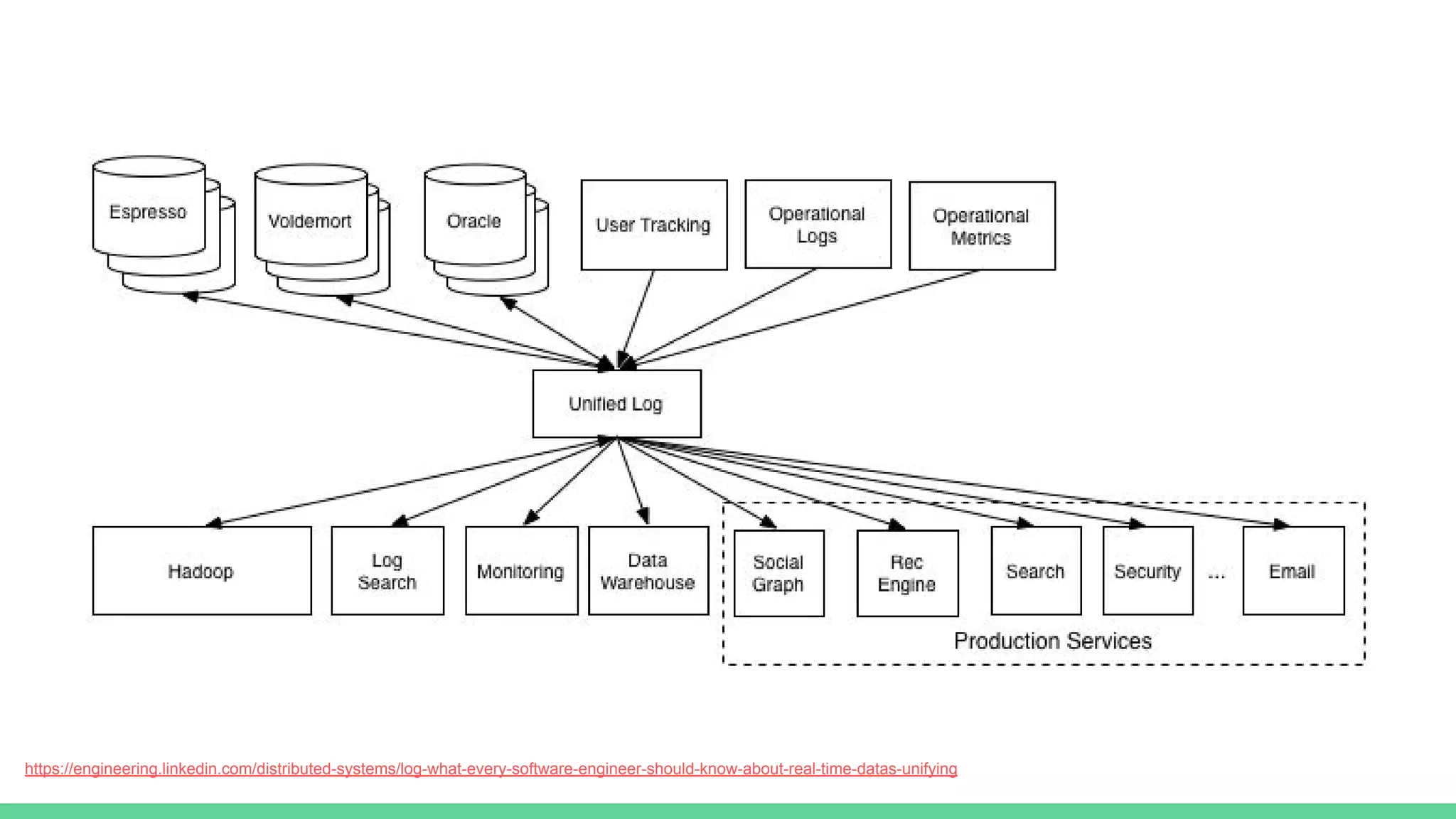

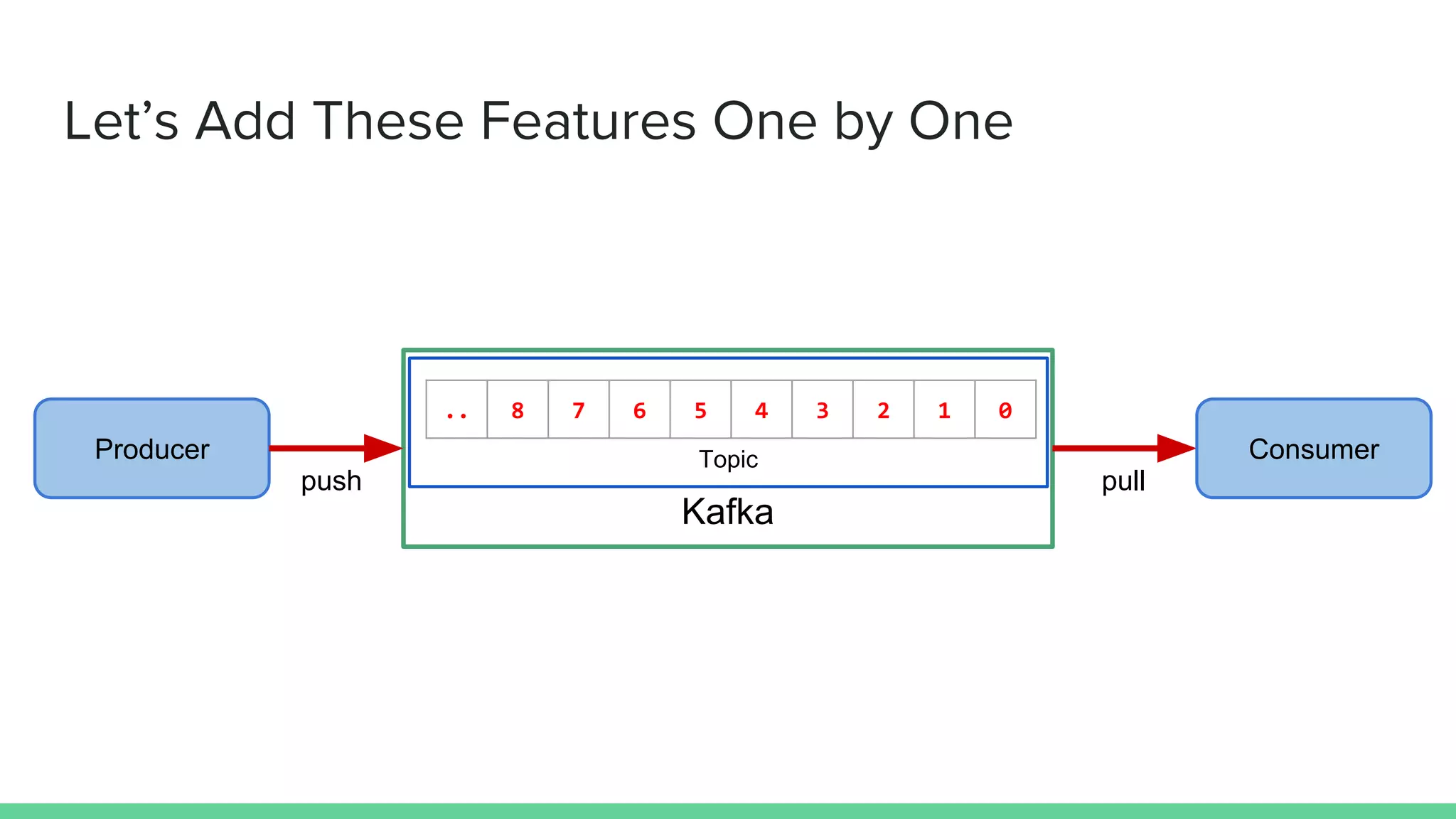

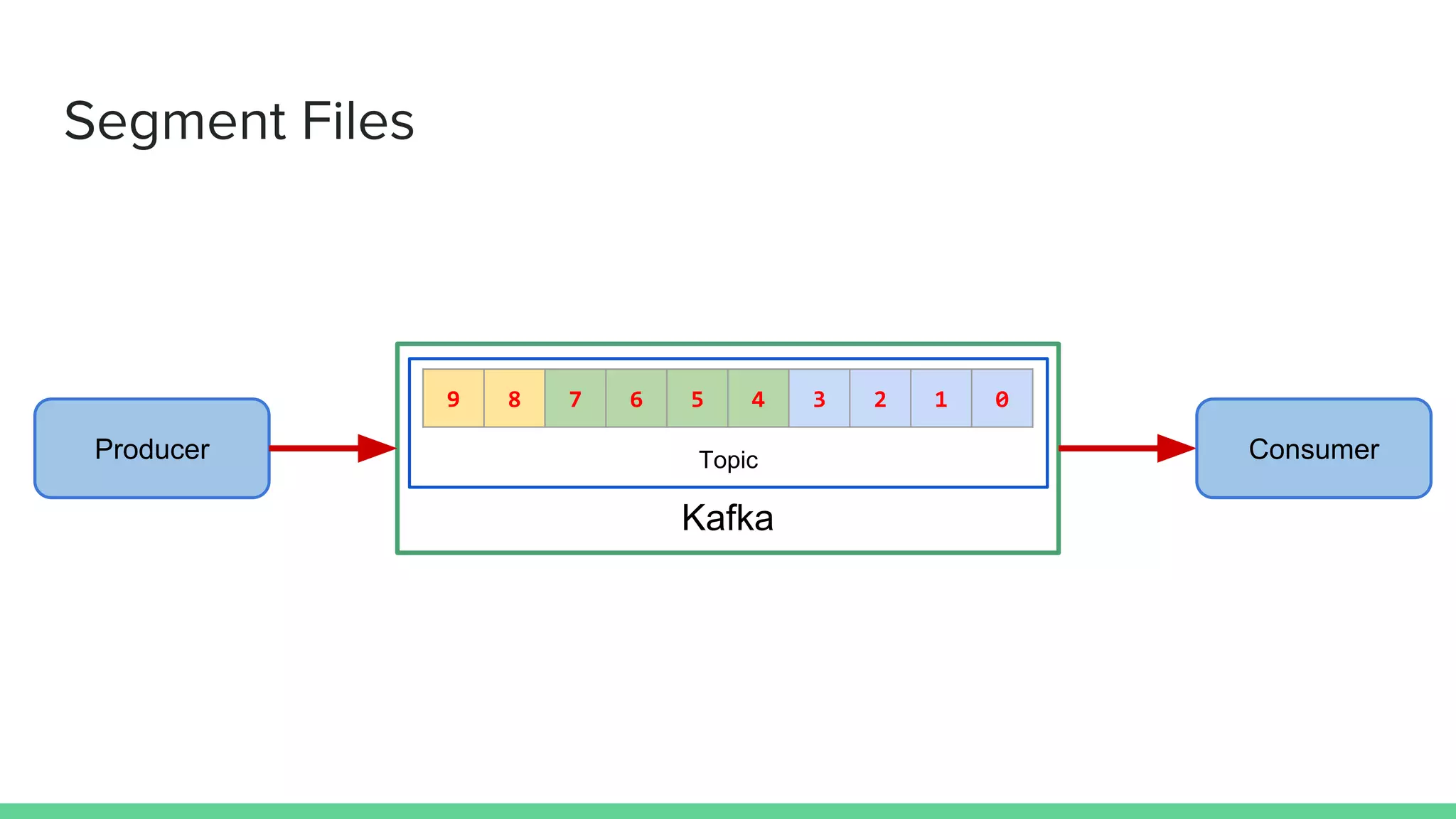

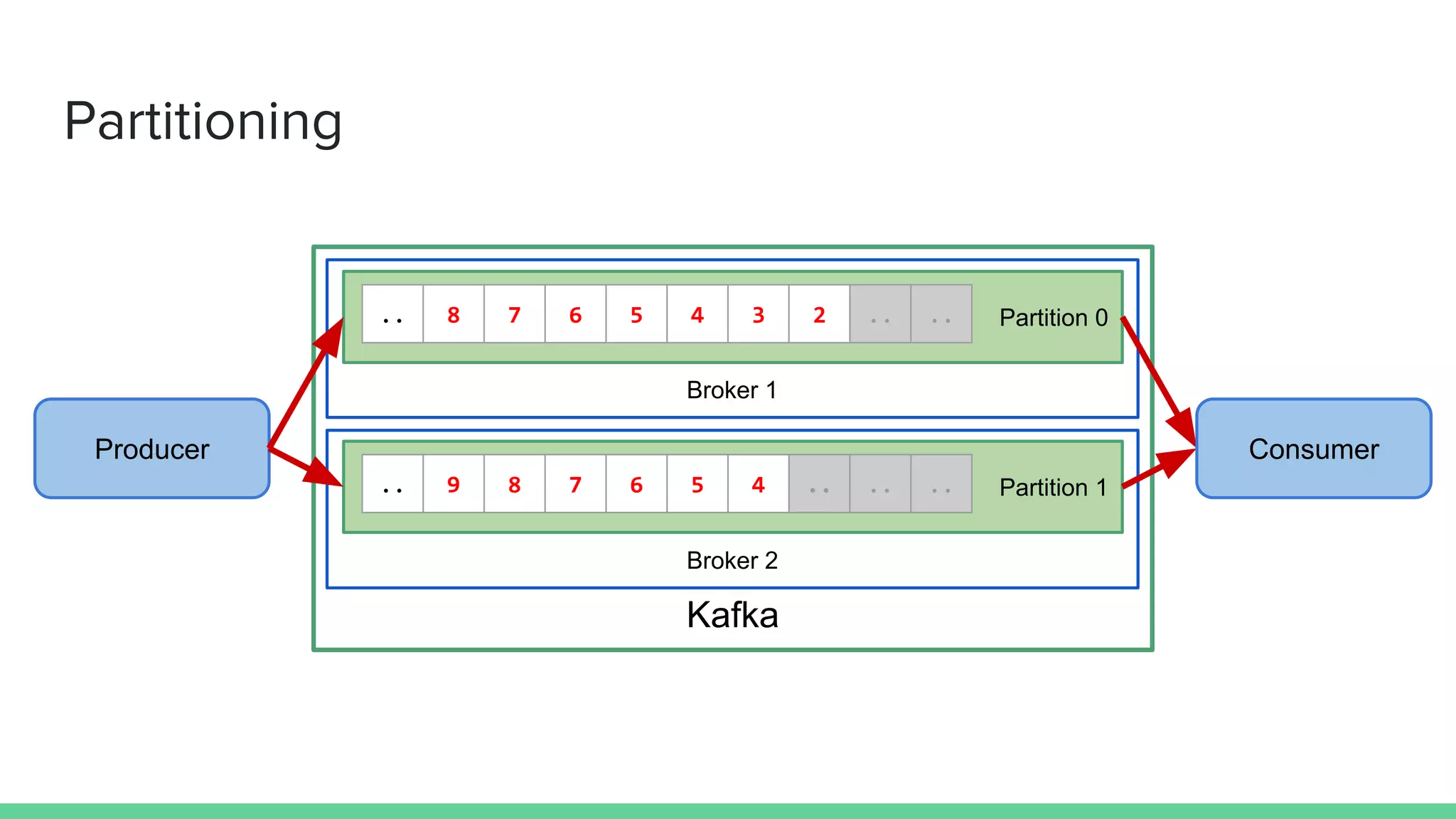

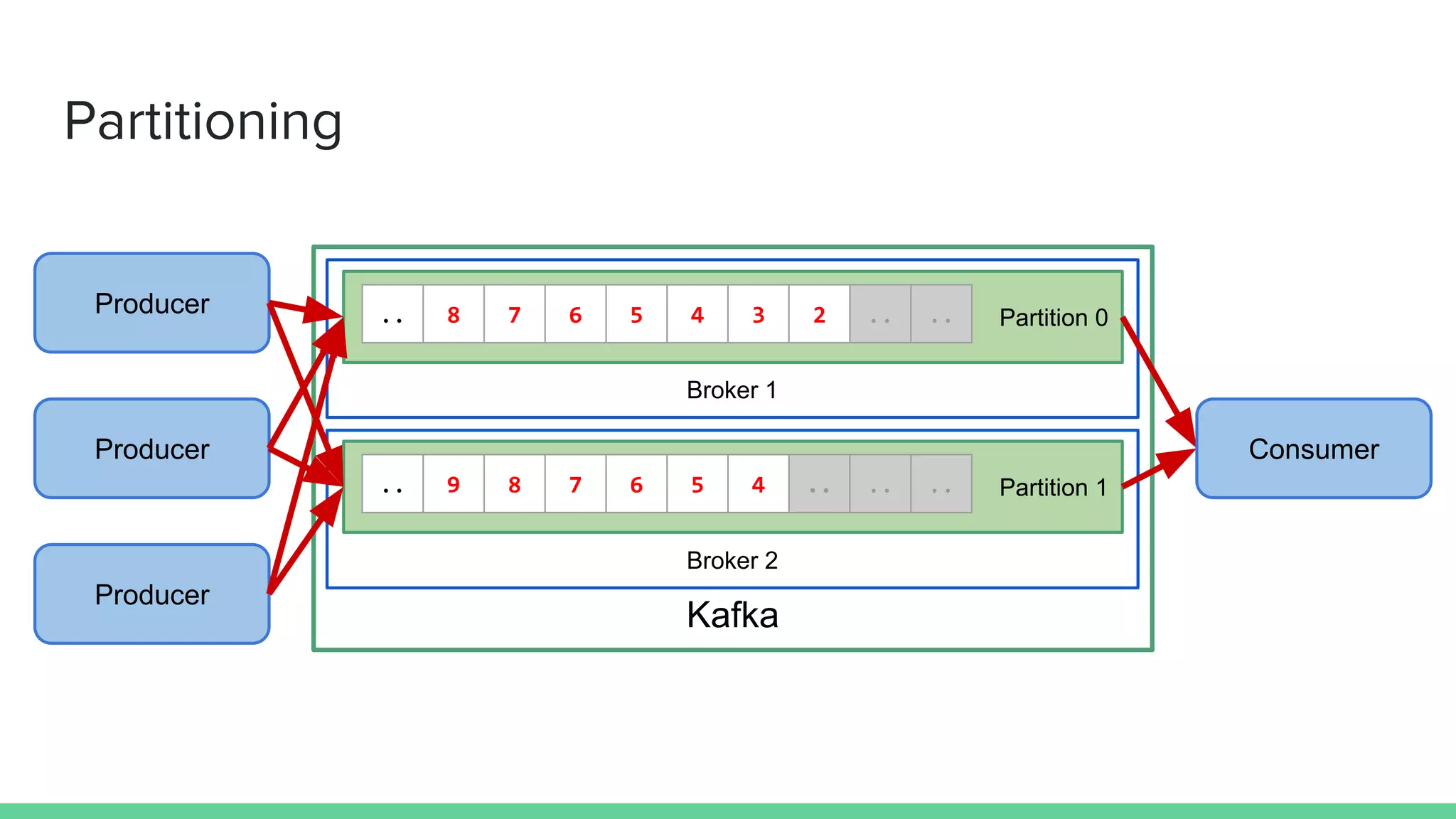

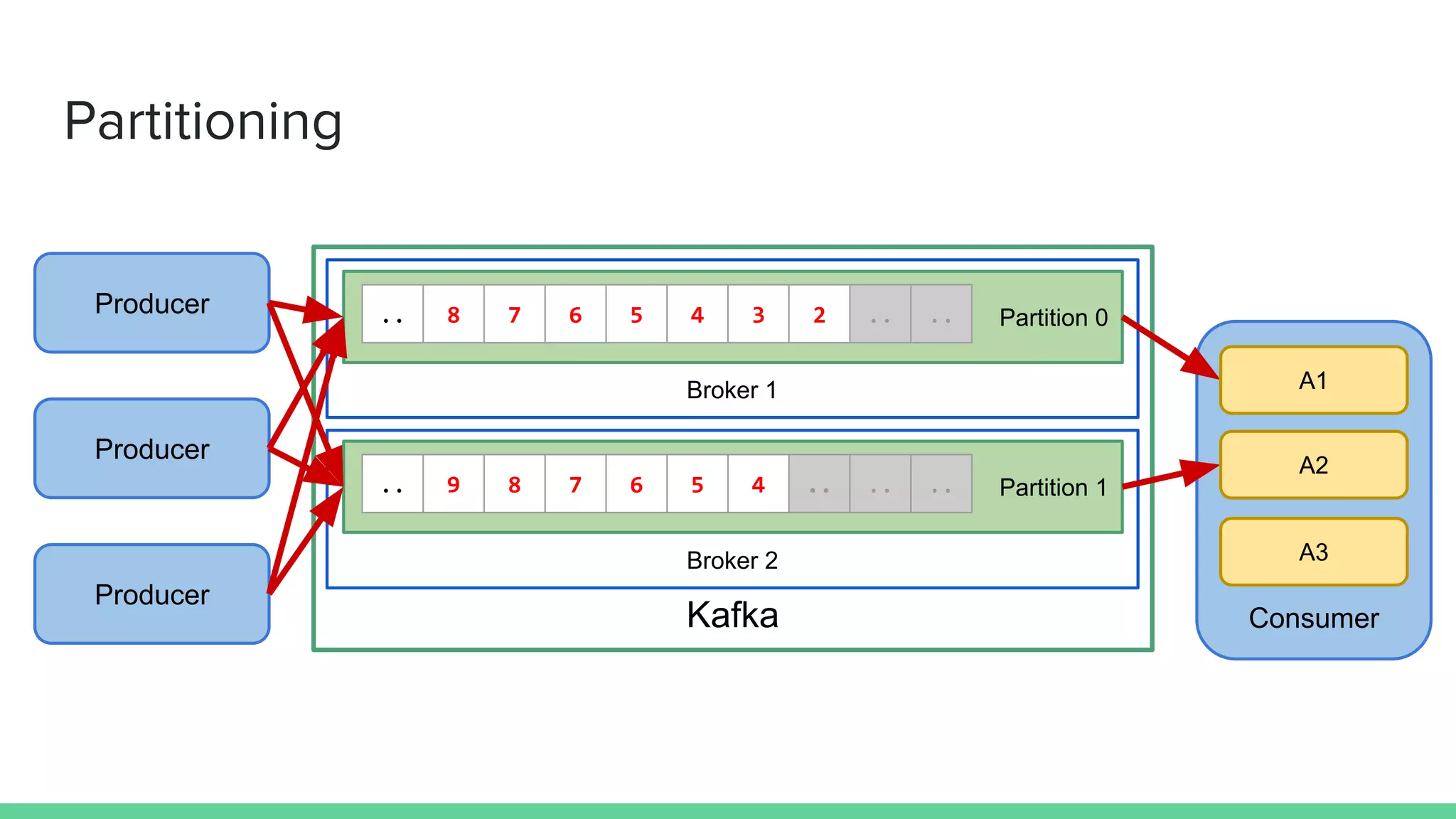

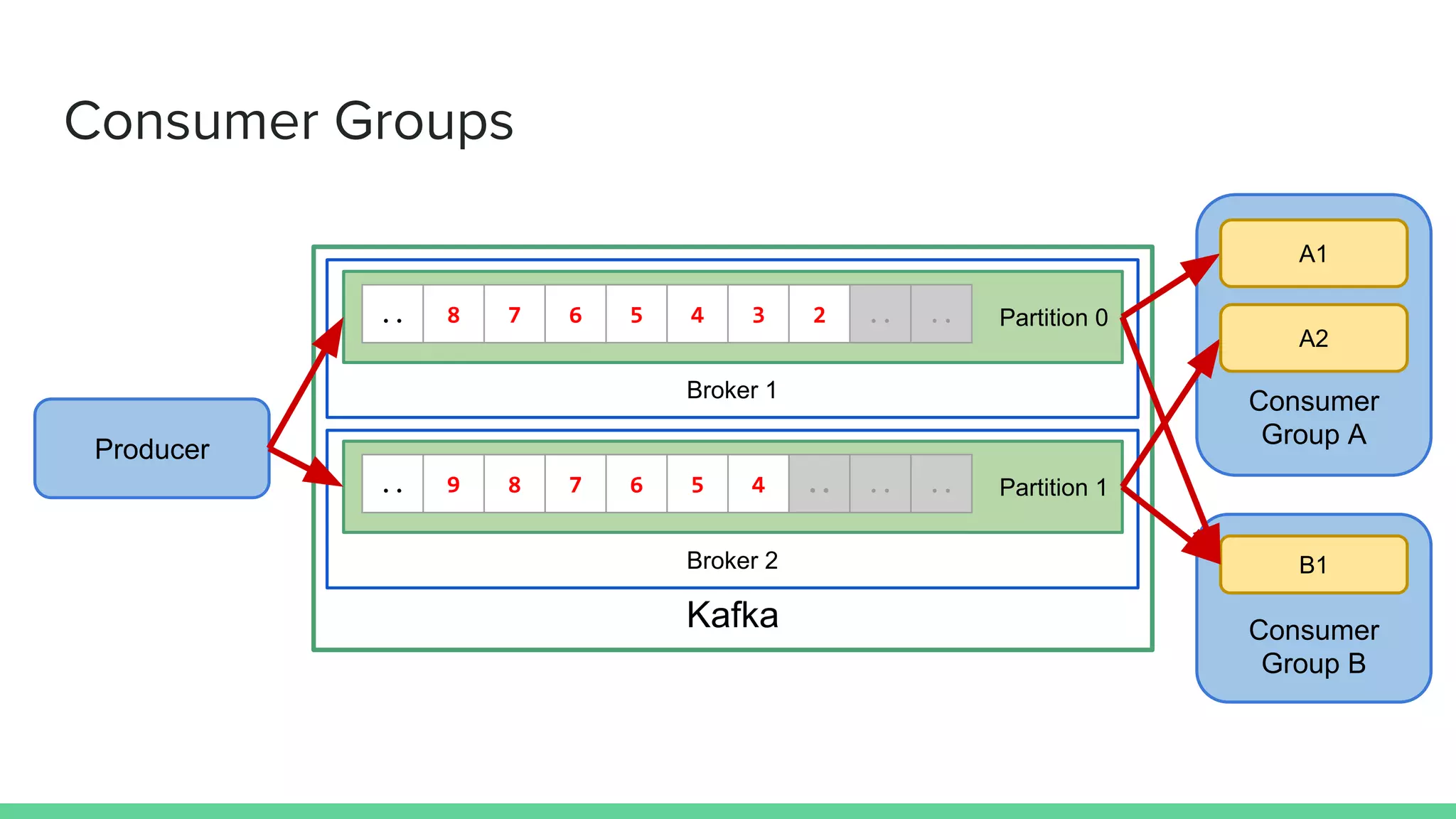

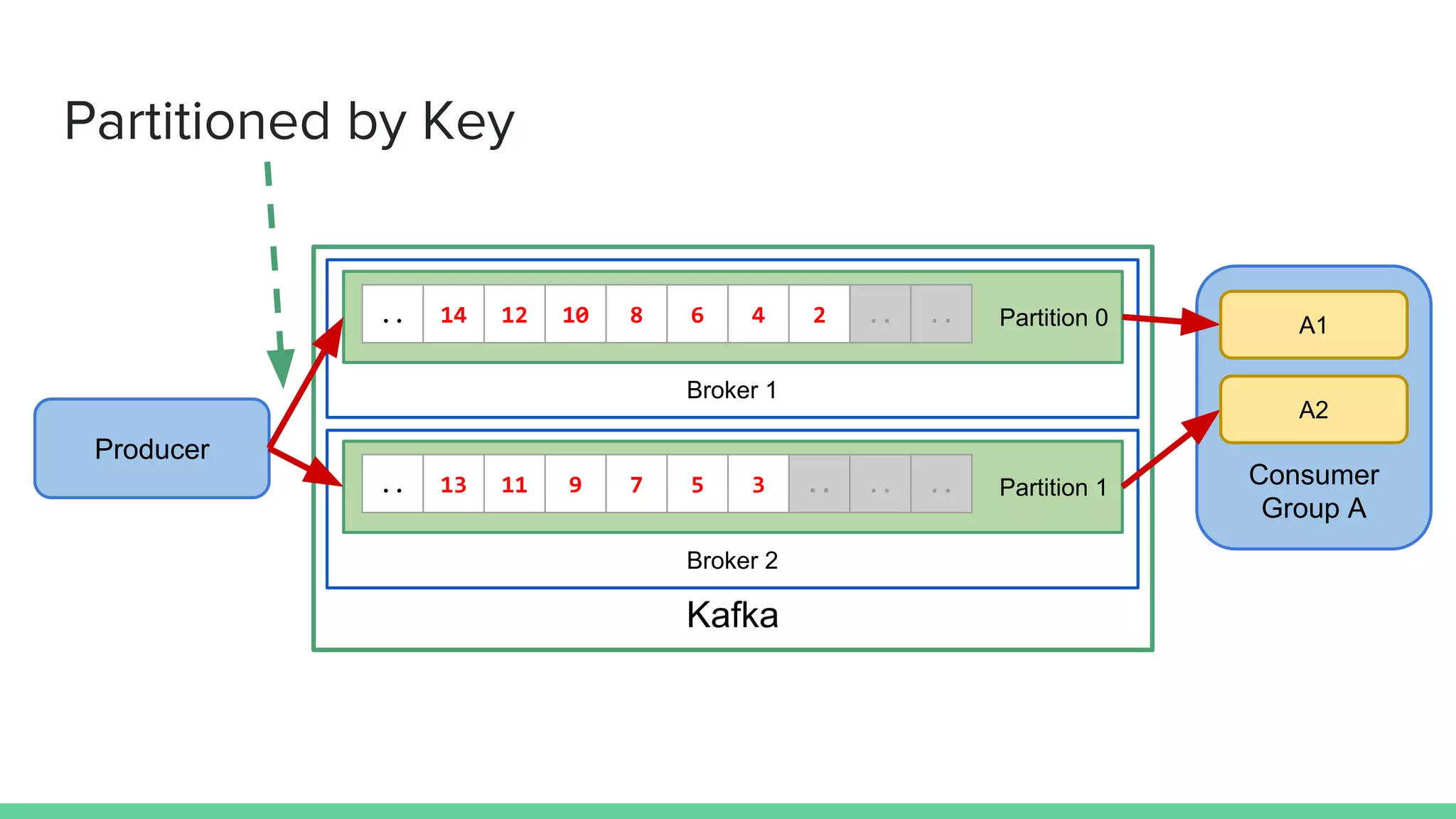

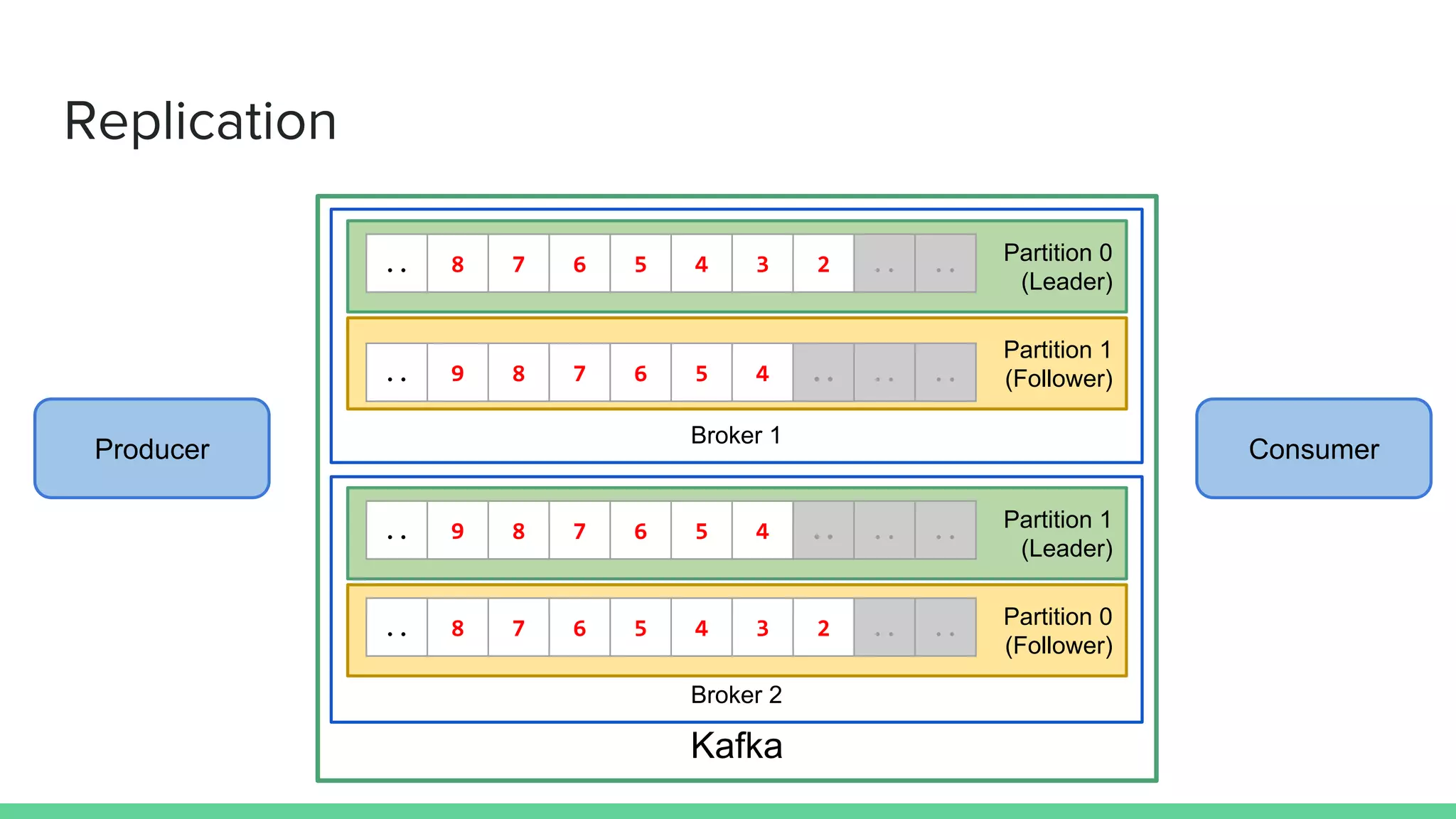

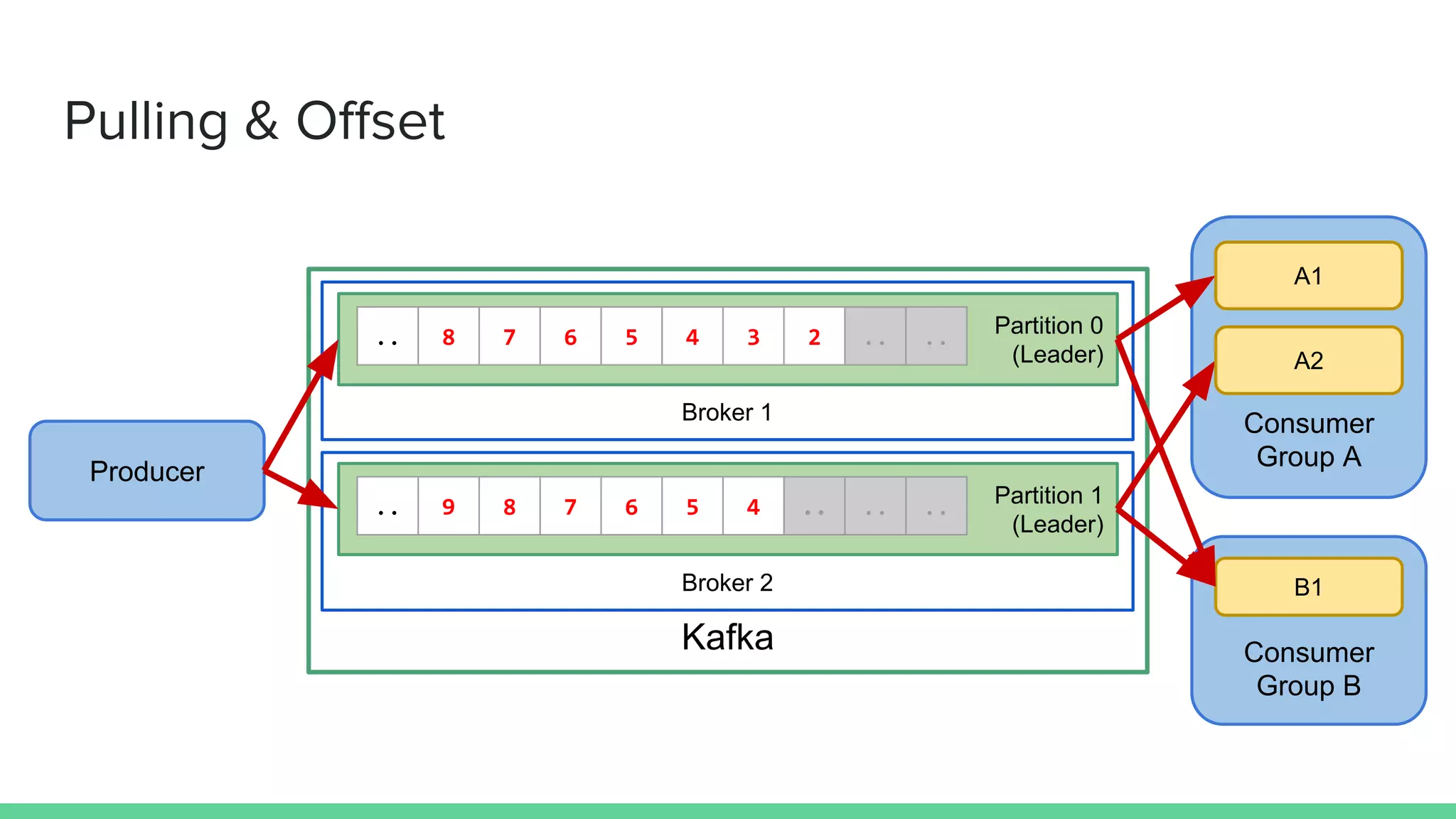

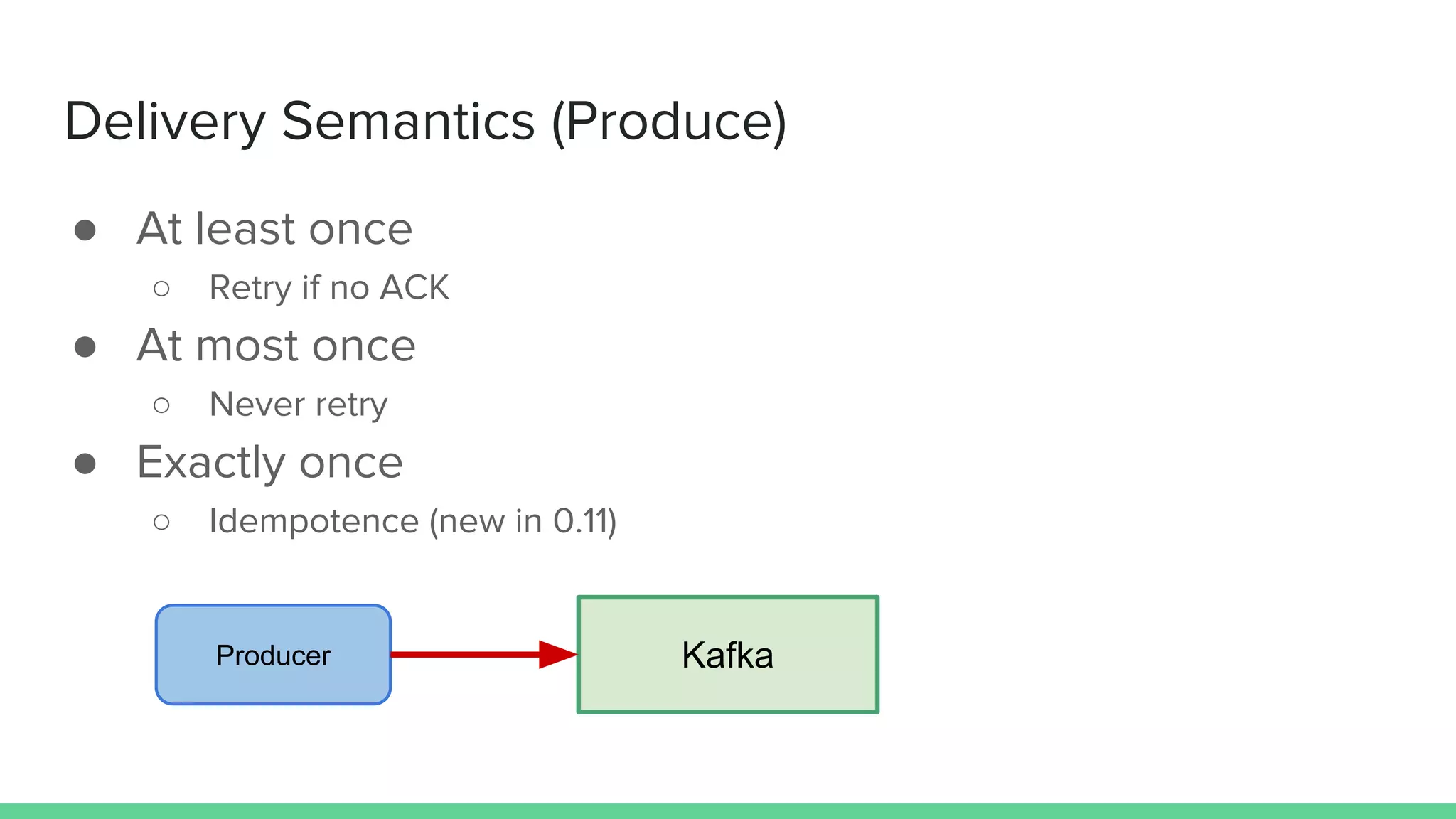

This document provides an introduction to Apache Kafka. It describes Kafka as a distributed messaging system that offers durability, scalability, publish-subscribe delivery, and ordering guarantees. It discusses key Kafka concepts: producers, consumers, topics, partitions, and brokers. It also summarizes use cases for Kafka and shows how to implement a producer and a consumer in Scala. Finally, it briefly outlines related tools, such as Kafka Connect and Kafka Streams, that build on the Kafka platform.
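To make the relationship between topics, partitions, offsets, and ordering concrete, here is a toy in-memory model in Scala. It is a simplified sketch, not how Kafka stores data. Partitions are plain buffers, and `String.hashCode` stands in for the murmur2 hash that Kafka's default partitioner applies to keyed records. `ToyTopic` and `Record` are hypothetical names.

```scala
import scala.collection.mutable

// Toy model of a Kafka topic (hypothetical, NOT Kafka's implementation).
// A topic has a fixed number of partitions; each partition is an
// append-only sequence in which a record's index is its offset.
final case class Record(key: String, value: String)

final class ToyTopic(val numPartitions: Int) {
  require(numPartitions > 0, "a topic needs at least one partition")

  // One independent append-only buffer per partition
  private val partitions =
    Vector.fill(numPartitions)(mutable.ArrayBuffer.empty[Record])

  // Same key -> same partition. Kafka's default partitioner hashes the
  // serialized key with murmur2; String.hashCode is a simplification.
  def partitionFor(key: String): Int =
    Math.floorMod(key.hashCode, numPartitions)

  // Append a record and return (partition, offset), similar in spirit
  // to the RecordMetadata a real producer gets back from send().
  def append(key: String, value: String): (Int, Long) = {
    val p = partitionFor(key)
    val log = partitions(p)
    log += Record(key, value)
    (p, (log.size - 1).toLong)
  }

  // Read one partition from a given offset onward, in append order
  def read(partition: Int, fromOffset: Long): Seq[Record] =
    partitions(partition).drop(fromOffset.toInt).toSeq
}
```

For example, appending `("user-42", "login")` and then `("user-42", "logout")` to a `new ToyTopic(3)` puts both records in the same partition with consecutive offsets. Kafka guarantees order only within a partition, so records with different keys that land in different partitions have no defined relative order.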

```scala
import java.util.concurrent.Future
import org.apache.kafka.clients.producer.{ProducerRecord, RecordMetadata}

// `producer` is a KafkaProducer[String, String] configured earlier
val f: Future[RecordMetadata] =
  producer.send(new ProducerRecord[String, String]("my-topic", "key", "value"))
f.get() // block until the broker acknowledges the write (synchronous send)
producer.close()
```
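The producer snippet assumes a `producer` that was created earlier. A minimal sketch of its configuration follows. `ProducerSettings` is a hypothetical helper, `localhost:9092` is an assumed broker address, and `acks=all` is one reasonable durability choice rather than something the slides specify. The keys are the standard Kafka producer config names written as plain strings, so the helper itself compiles without `kafka-clients` on the classpath.

```scala
import java.util.Properties

// Hypothetical helper building producer settings for String keys/values.
object ProducerSettings {
  def apply(bootstrapServers: String = "localhost:9092"): Properties = {
    val props = new Properties()
    // Broker(s) used for the initial connection (assumed address)
    props.setProperty("bootstrap.servers", bootstrapServers)
    // Serialize String keys and values, matching ProducerRecord[String, String]
    props.setProperty("key.serializer",
      "org.apache.kafka.common.serialization.StringSerializer")
    props.setProperty("value.serializer",
      "org.apache.kafka.common.serialization.StringSerializer")
    // Wait for all in-sync replicas to acknowledge each write
    props.setProperty("acks", "all")
    props
  }
}
```

With `kafka-clients` available, `new KafkaProducer[String, String](ProducerSettings())` yields a producer that the send/get/close snippet can use.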

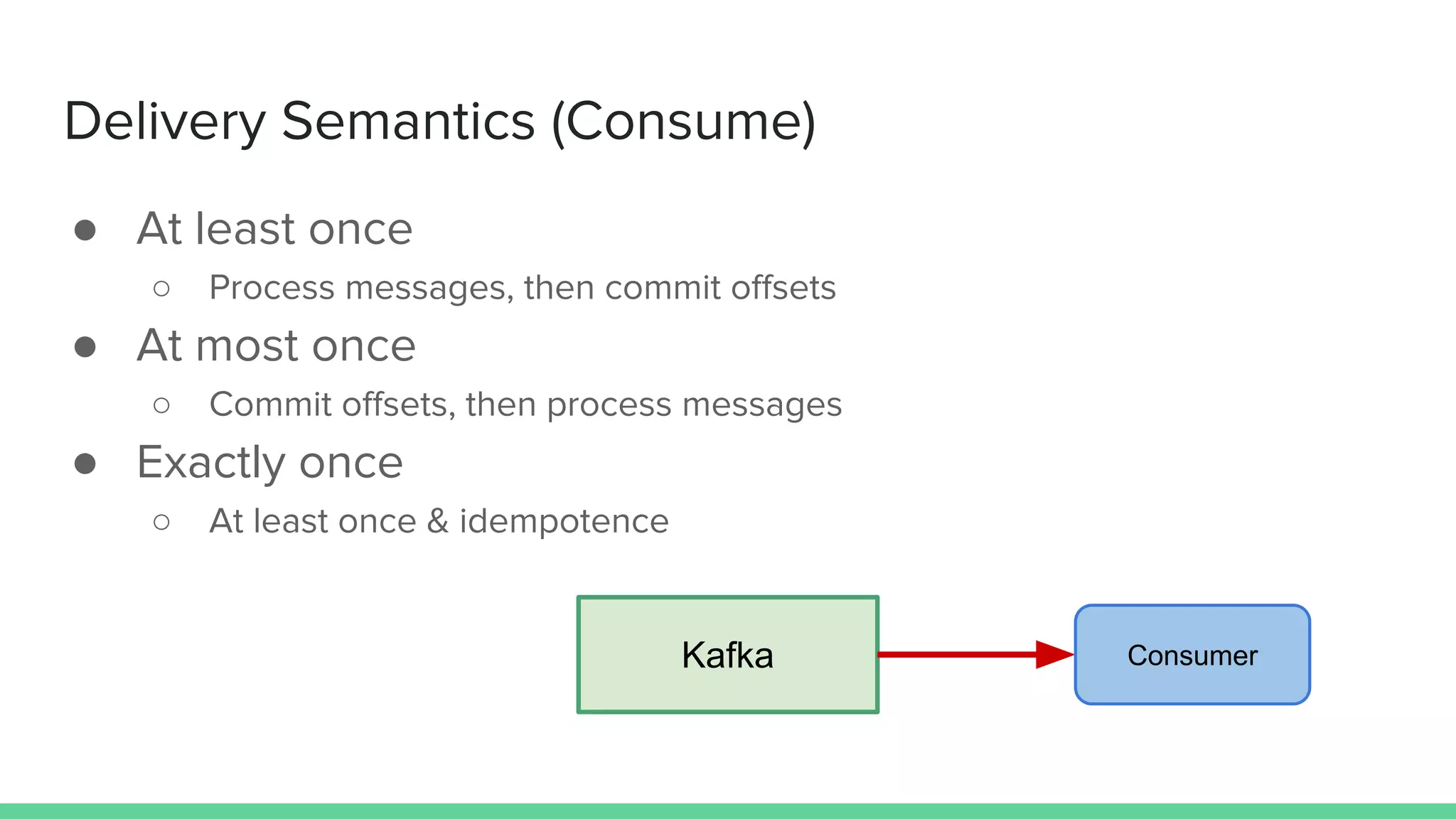

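```scala
import java.time.Duration
import java.util.Arrays
import scala.jdk.CollectionConverters._ // Scala 2.13+

// `consumer` is a KafkaConsumer[String, String] configured earlier
consumer.subscribe(Arrays.asList("my-topic"))
while (true) {
  // Long poll: wait up to 1 s for new records before returning
  val records = consumer.poll(Duration.ofMillis(1000))
  for (record <- records.asScala)
    println(s"${record.partition} ${record.offset} ${record.key} ${record.value}")
  consumer.commitSync() // commit the offsets returned by the last poll
}
```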
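Because the consumer loop commits offsets itself with `commitSync`, automatic offset commits should be turned off. A minimal sketch of matching settings follows, under the same kind of assumptions: `ConsumerSettings` is a hypothetical helper, `localhost:9092` is an assumed broker address, and `auto.offset.reset=earliest` is an illustrative choice. Keys are the standard Kafka consumer config names as plain strings.

```scala
import java.util.Properties

// Hypothetical helper building consumer settings for String keys/values.
object ConsumerSettings {
  def apply(groupId: String, bootstrapServers: String = "localhost:9092"): Properties = {
    val props = new Properties()
    props.setProperty("bootstrap.servers", bootstrapServers)
    // Consumers sharing a group.id divide the topic's partitions among them
    props.setProperty("group.id", groupId)
    props.setProperty("key.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    props.setProperty("value.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    // Offsets are committed explicitly with commitSync, so disable auto-commit
    props.setProperty("enable.auto.commit", "false")
    // With no committed offset for this group yet, start from the oldest record
    props.setProperty("auto.offset.reset", "earliest")
    props
  }
}
```

For example, `new KafkaConsumer[String, String](ConsumerSettings("my-group"))`, where `my-group` is an illustrative group name. Committing after each processed batch gives at-least-once delivery: if the consumer crashes before `commitSync`, the uncommitted records are delivered again after a restart.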