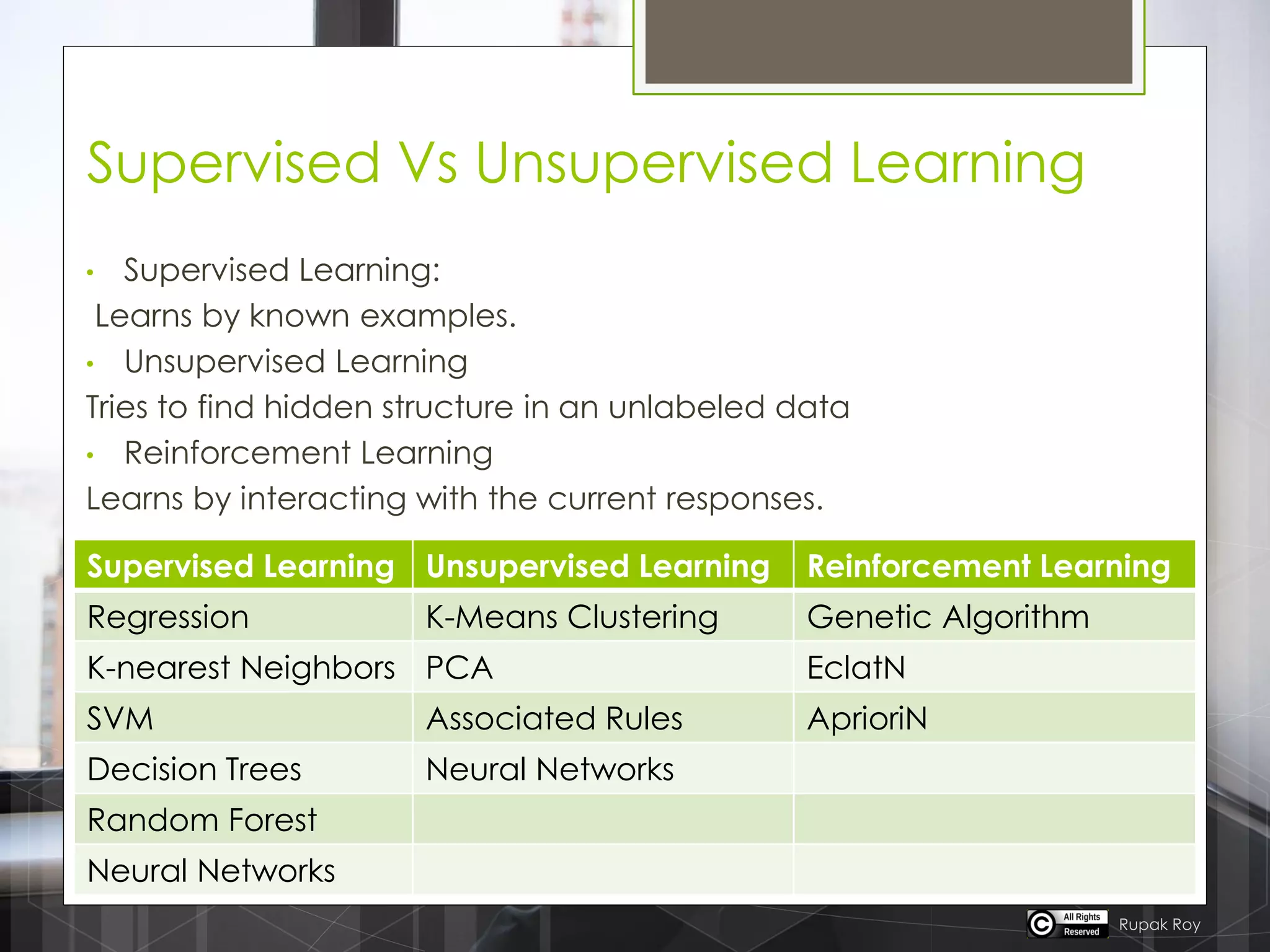

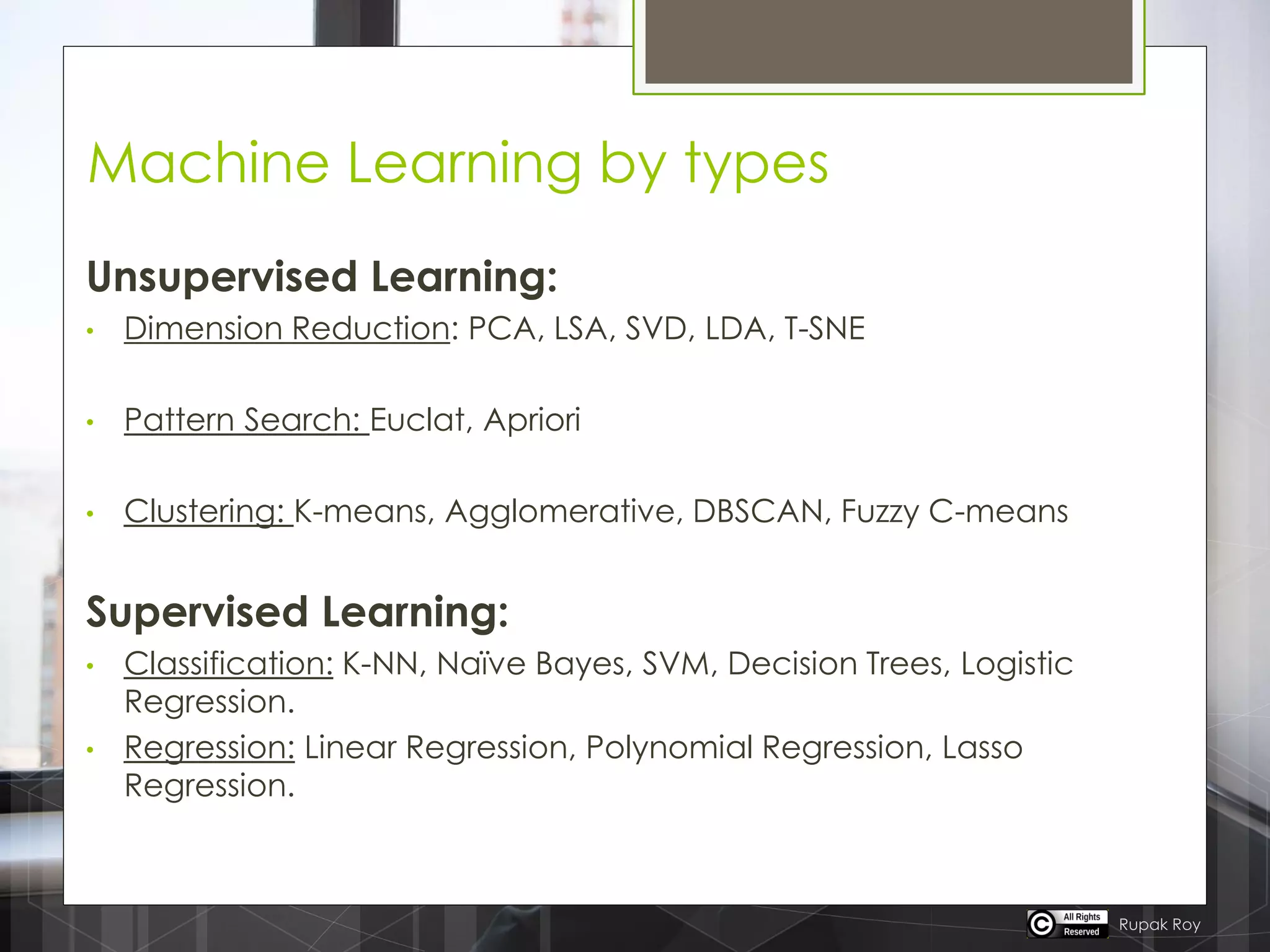

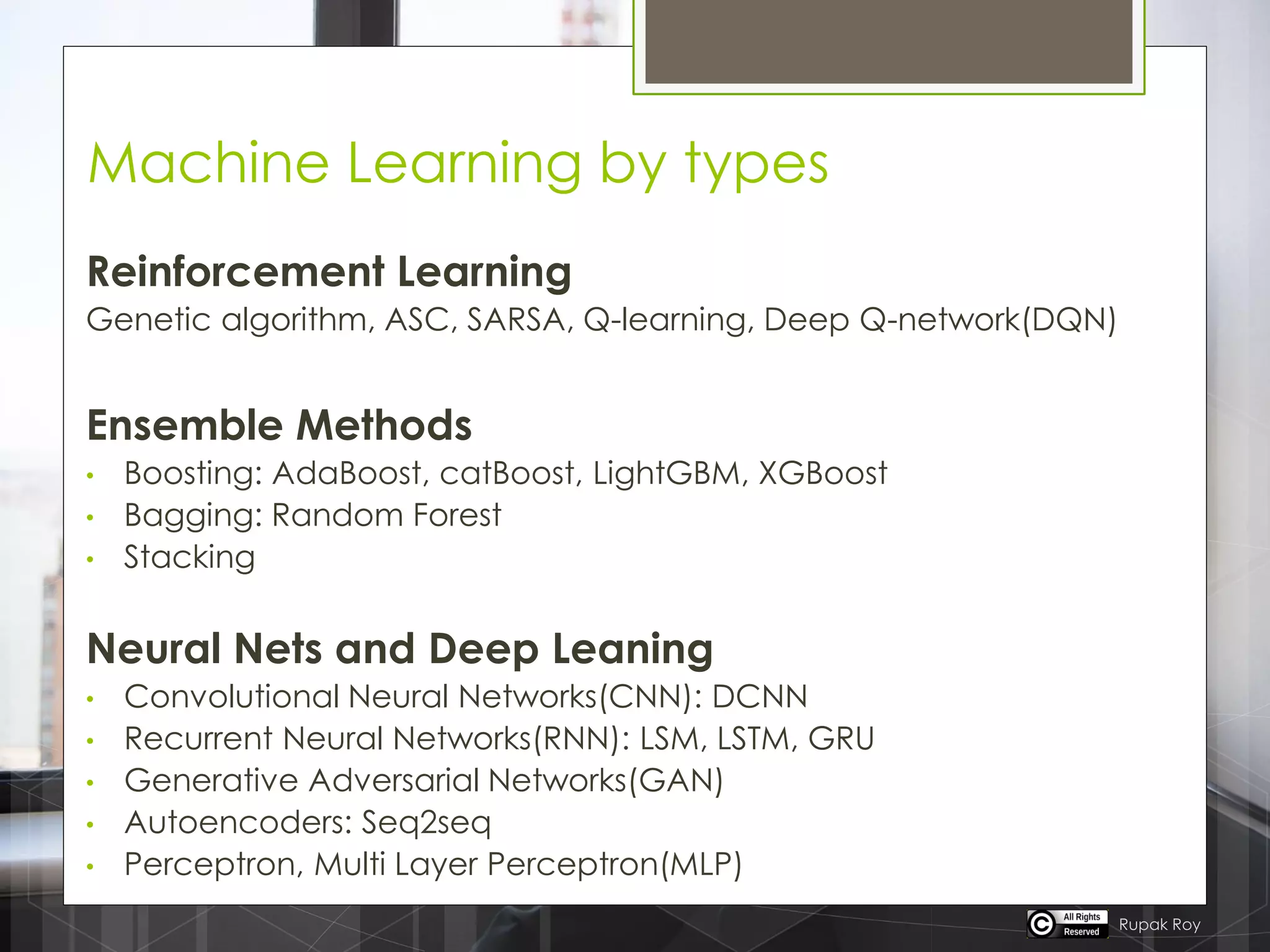

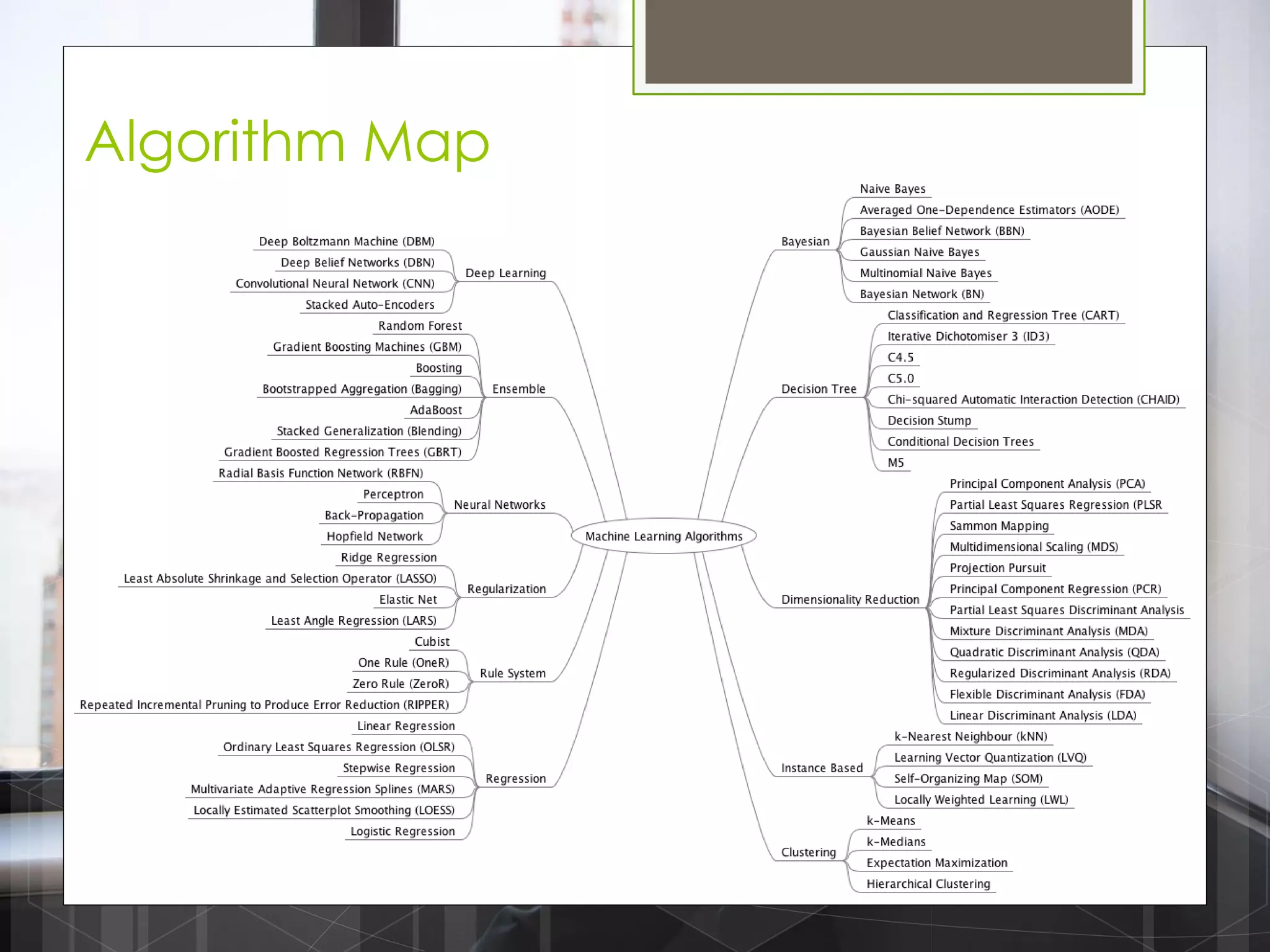

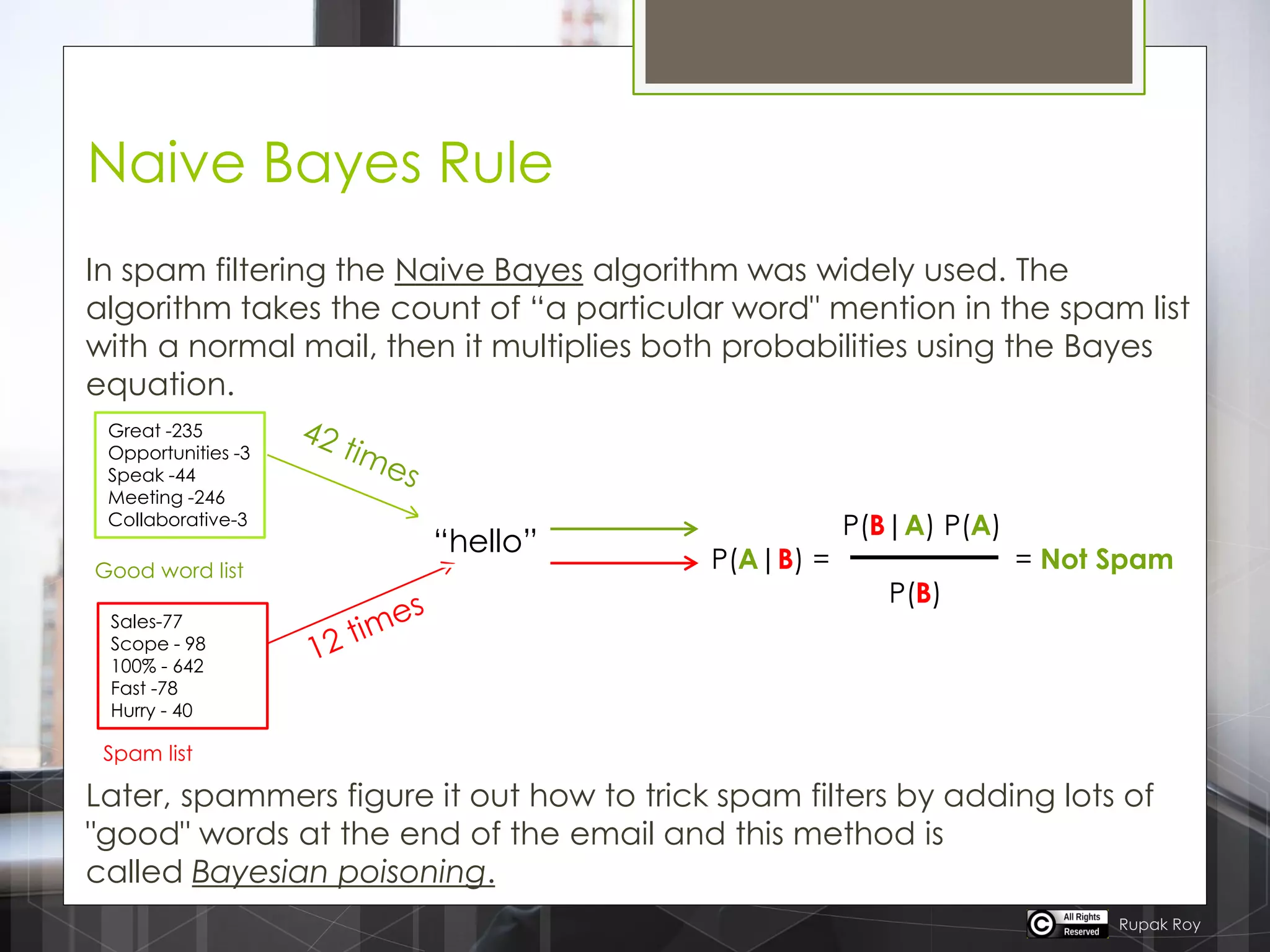

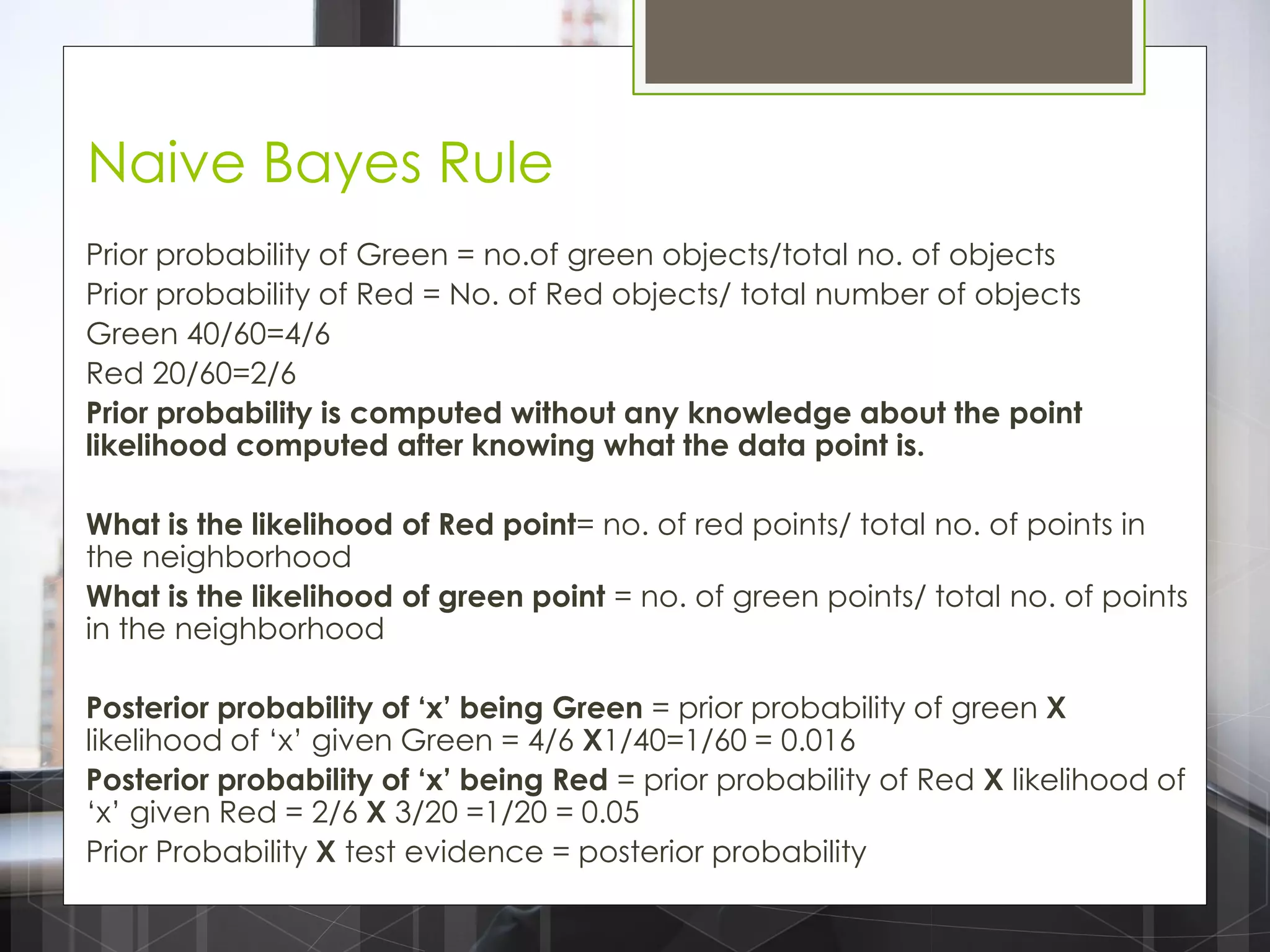

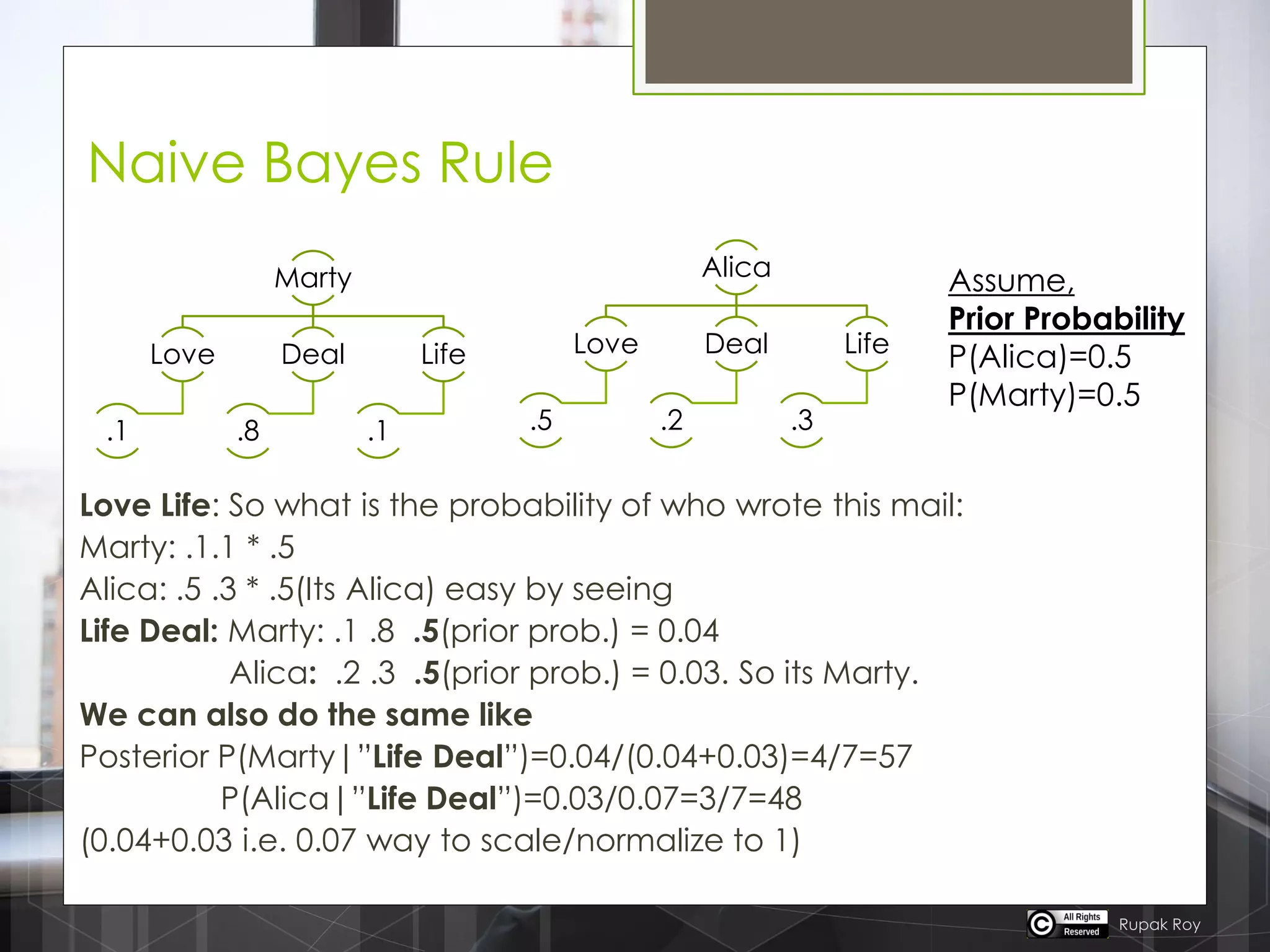

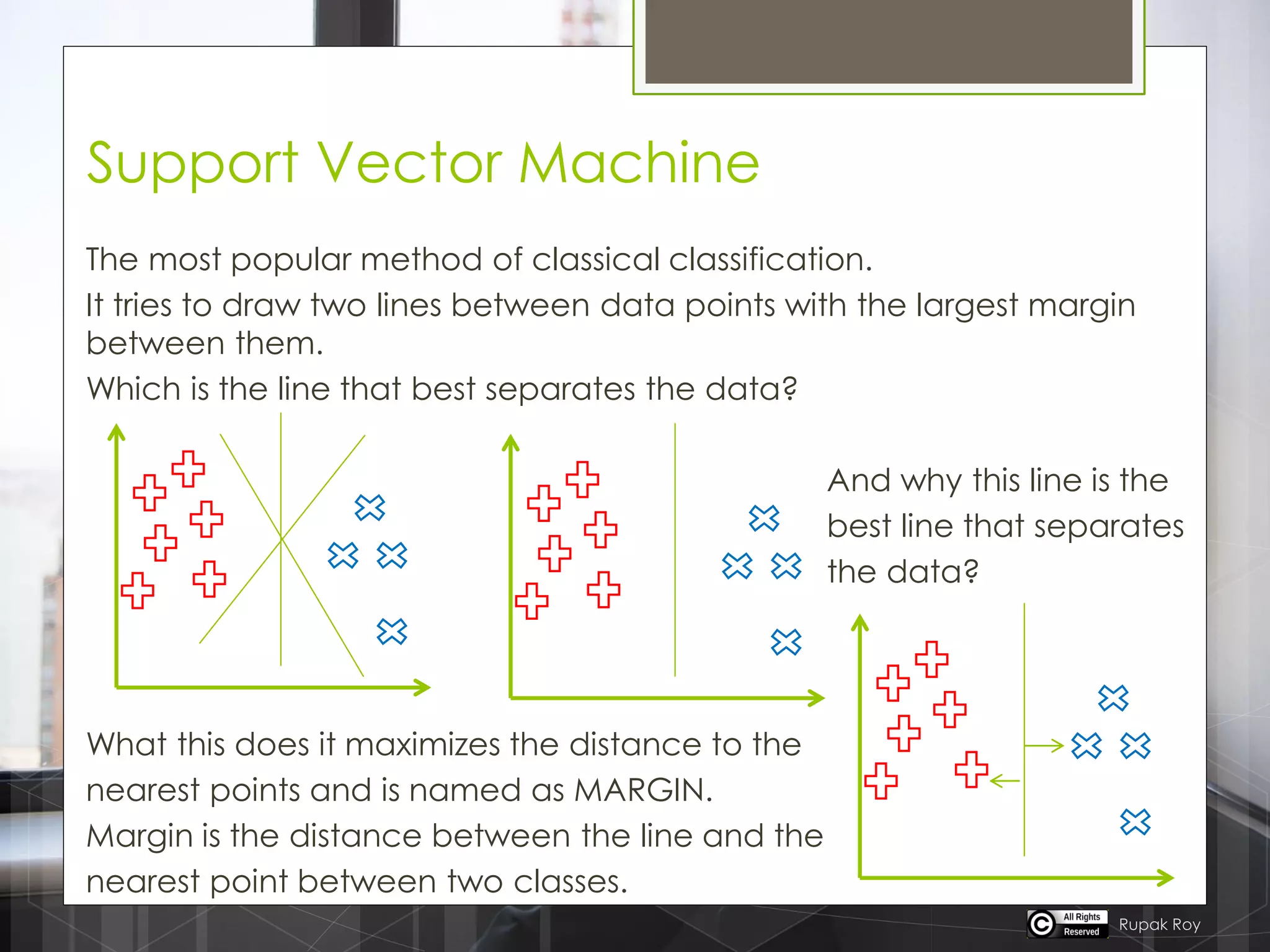

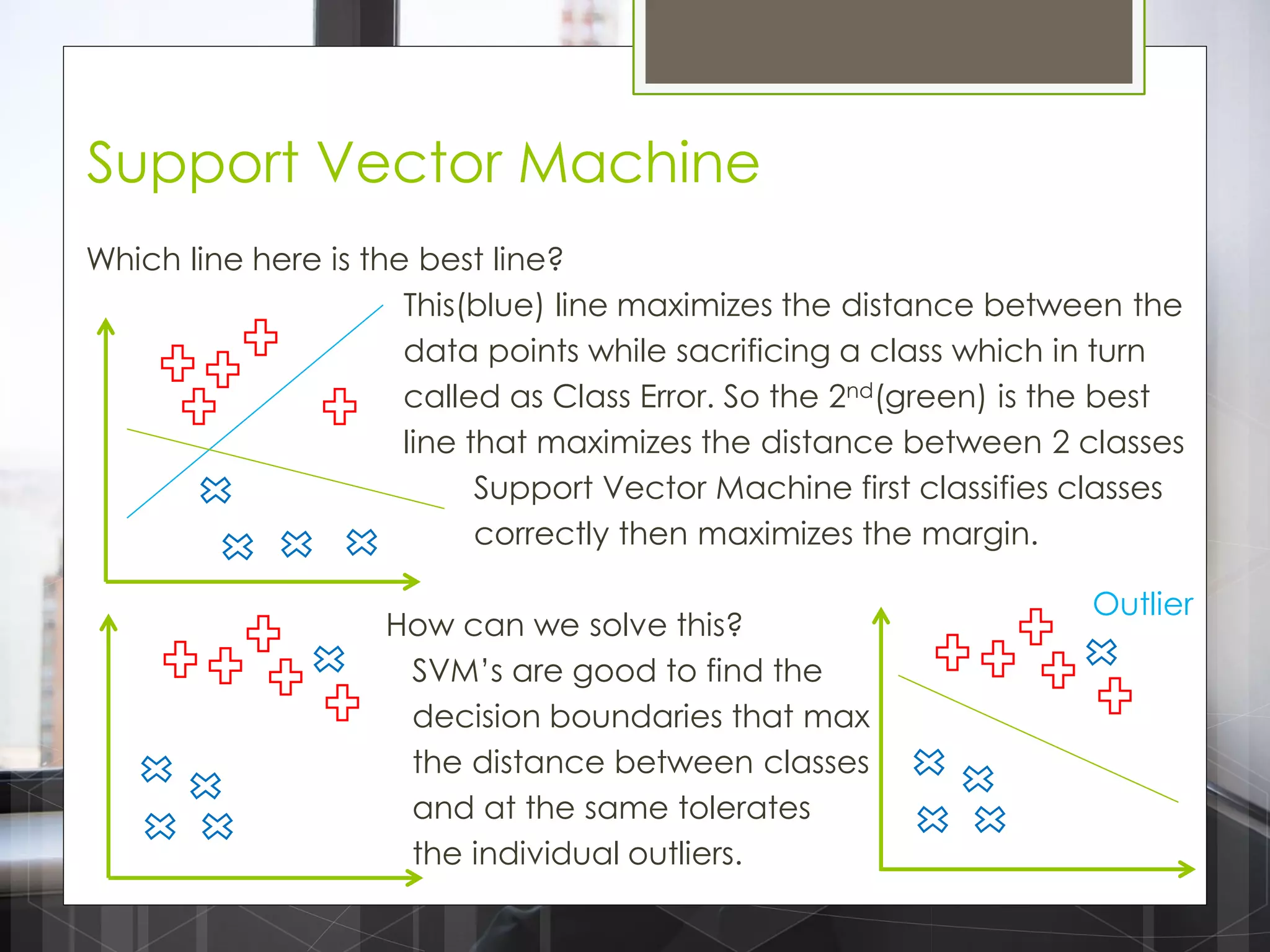

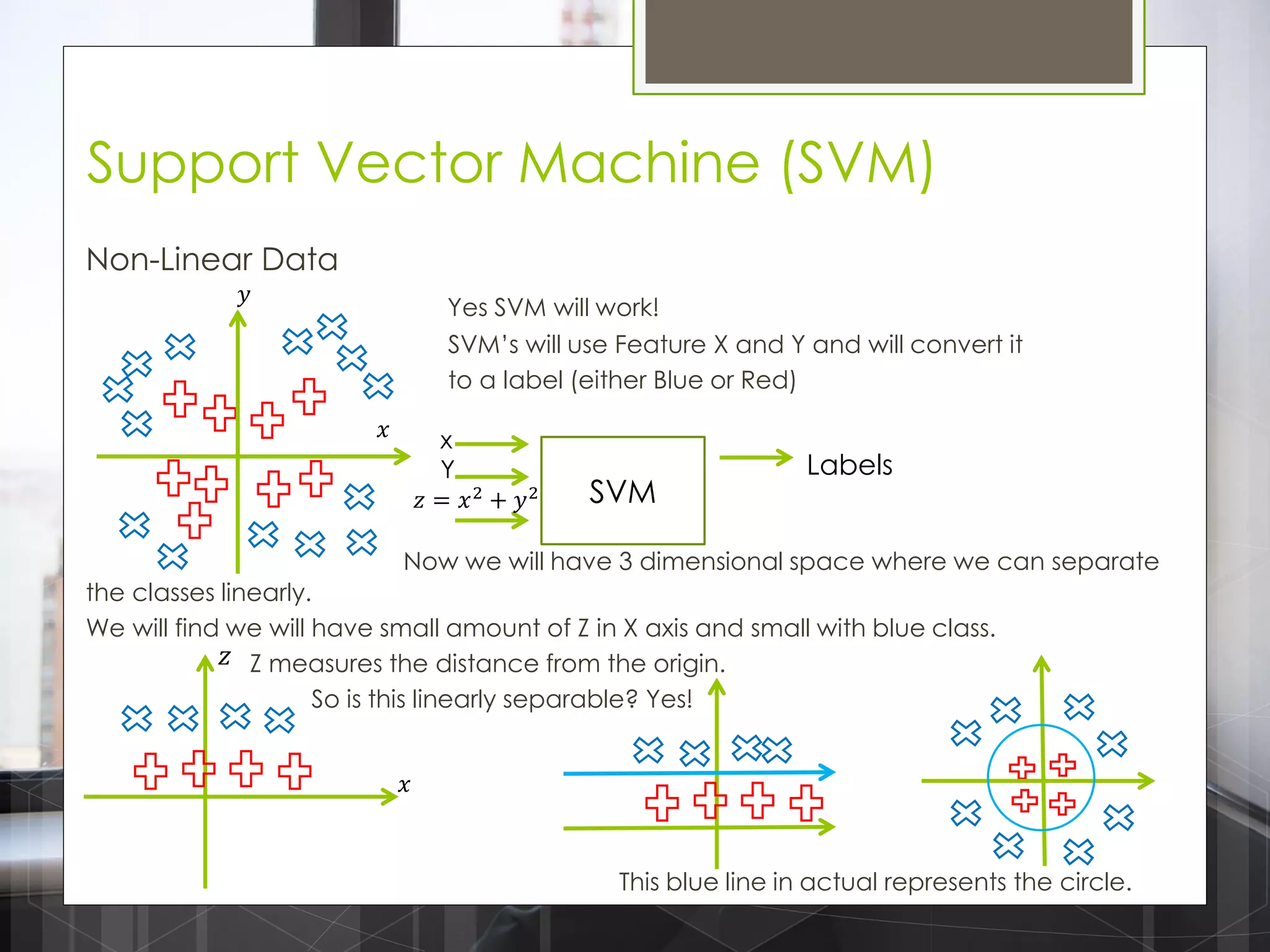

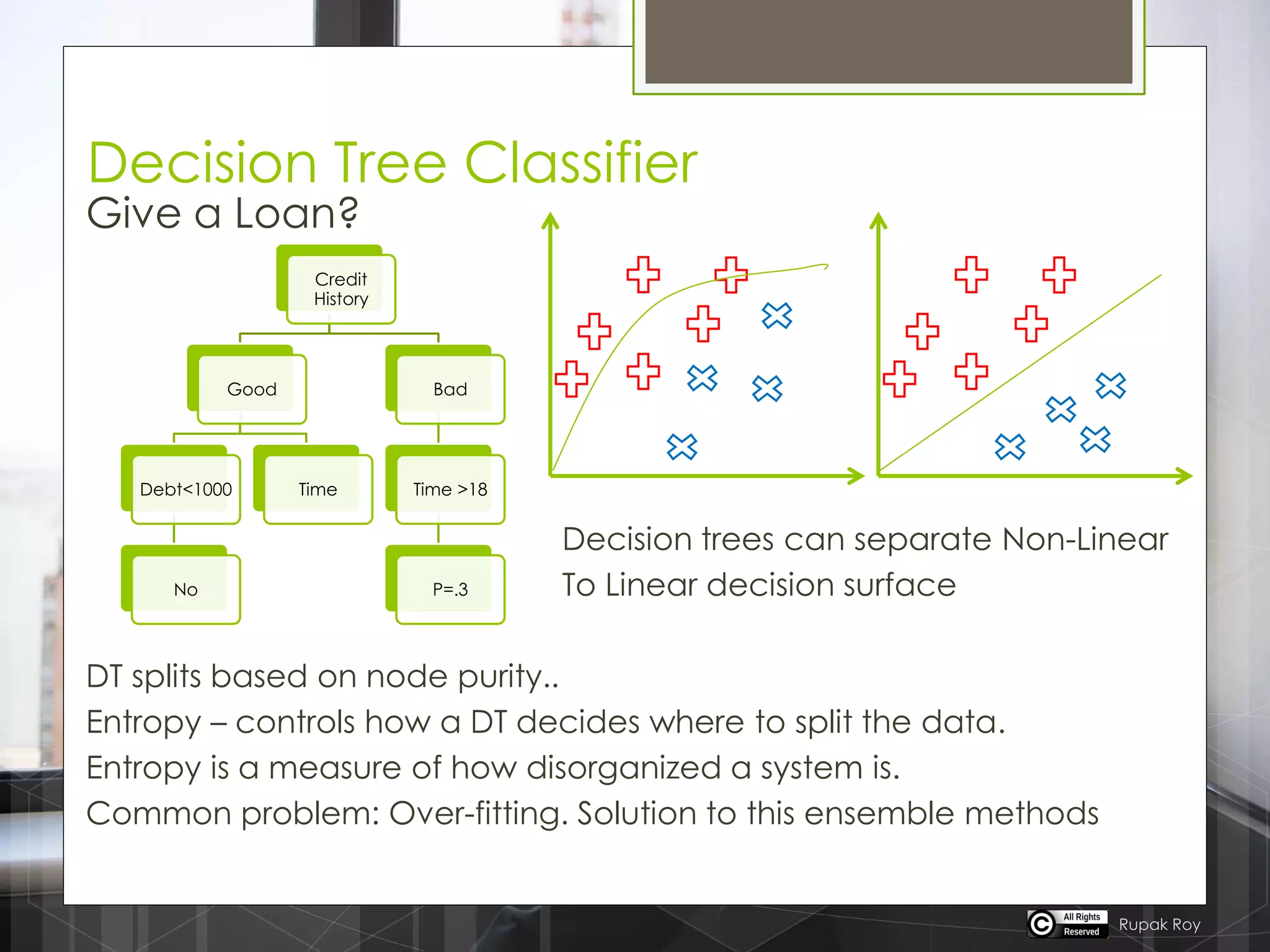

The document surveys machine learning algorithms in three categories (supervised, unsupervised, and reinforcement learning) and explains how each works and where it is applied. It covers Naive Bayes, Support Vector Machines, and Decision Trees in detail, along with PCA as a technique for dimensionality reduction. It also addresses two challenges in model training and performance: overfitting and the bias-variance trade-off.
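
To make the dimensionality-reduction idea concrete, here is a minimal sketch of PCA using only NumPy. It is not taken from the document: the function name `pca_reduce`, the synthetic data, and the choice of SVD as the way to compute the components are all assumptions made for illustration.

```python
import numpy as np


def pca_reduce(X, n_components):
    """Project the rows of X onto the top principal components.

    Hypothetical helper for illustration; not from the source document.
    """
    # Center each feature so the components describe variance, not the mean.
    X_centered = X - X.mean(axis=0)
    # SVD of the centered data: the rows of Vt are the principal directions,
    # ordered by decreasing singular value.
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    # Fraction of the total variance captured by each component.
    explained = (S ** 2) / np.sum(S ** 2)
    return X_centered @ components.T, explained[:n_components]


# Synthetic data (an assumption): 200 points in 3-D that lie close to a
# single line, plus a small amount of noise.
rng = np.random.default_rng(0)
t = rng.normal(size=(200, 1))
X = t @ np.array([[2.0, 1.0, -1.0]]) + 0.05 * rng.normal(size=(200, 3))

Z, ratio = pca_reduce(X, n_components=1)
print(Z.shape)  # (200, 1)
print(ratio)    # share of variance kept by the first component
```

Because the synthetic points vary almost entirely along one direction, a single component keeps nearly all of the variance, which is the situation in which reducing three features to one loses little information.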