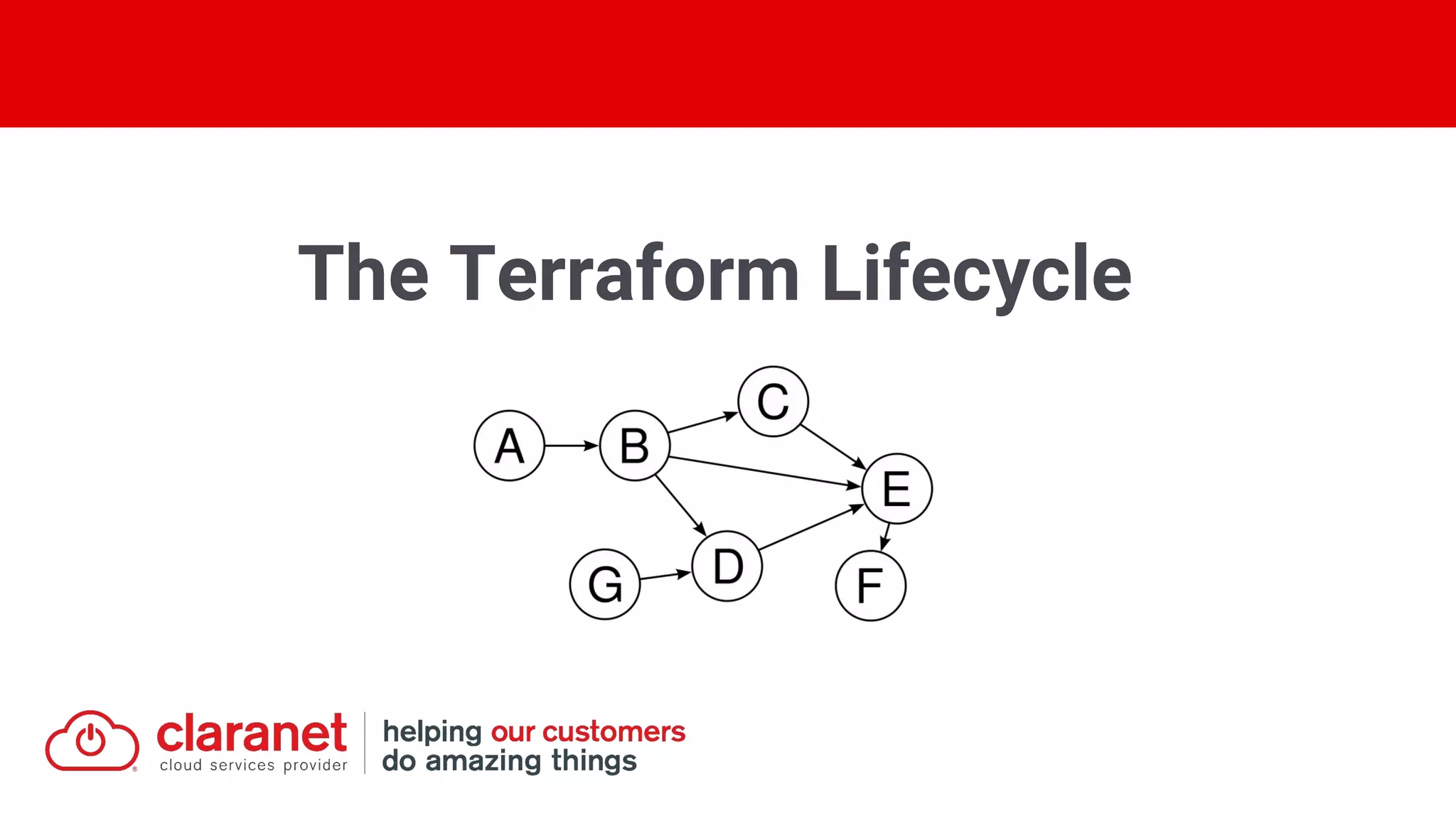

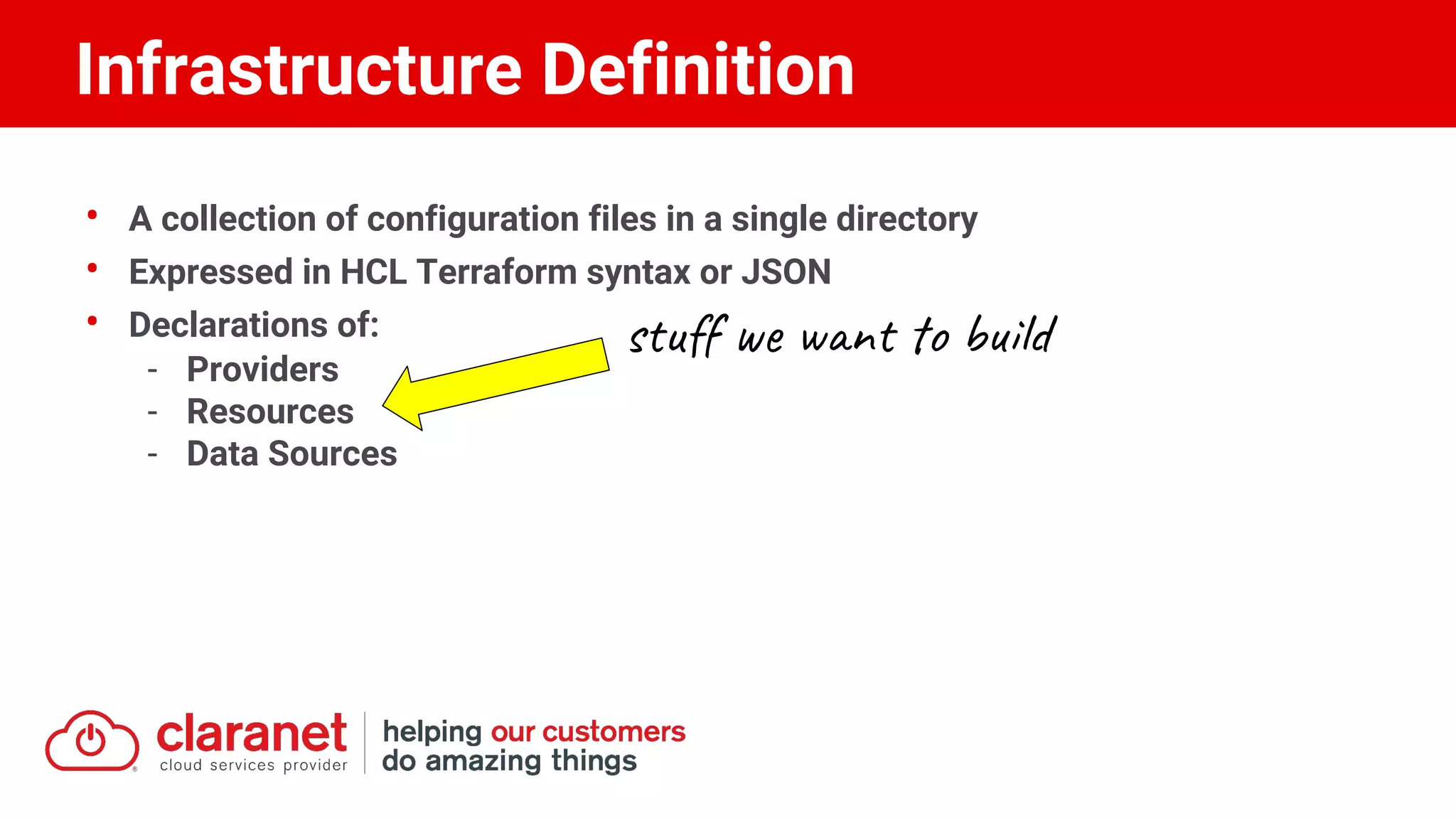

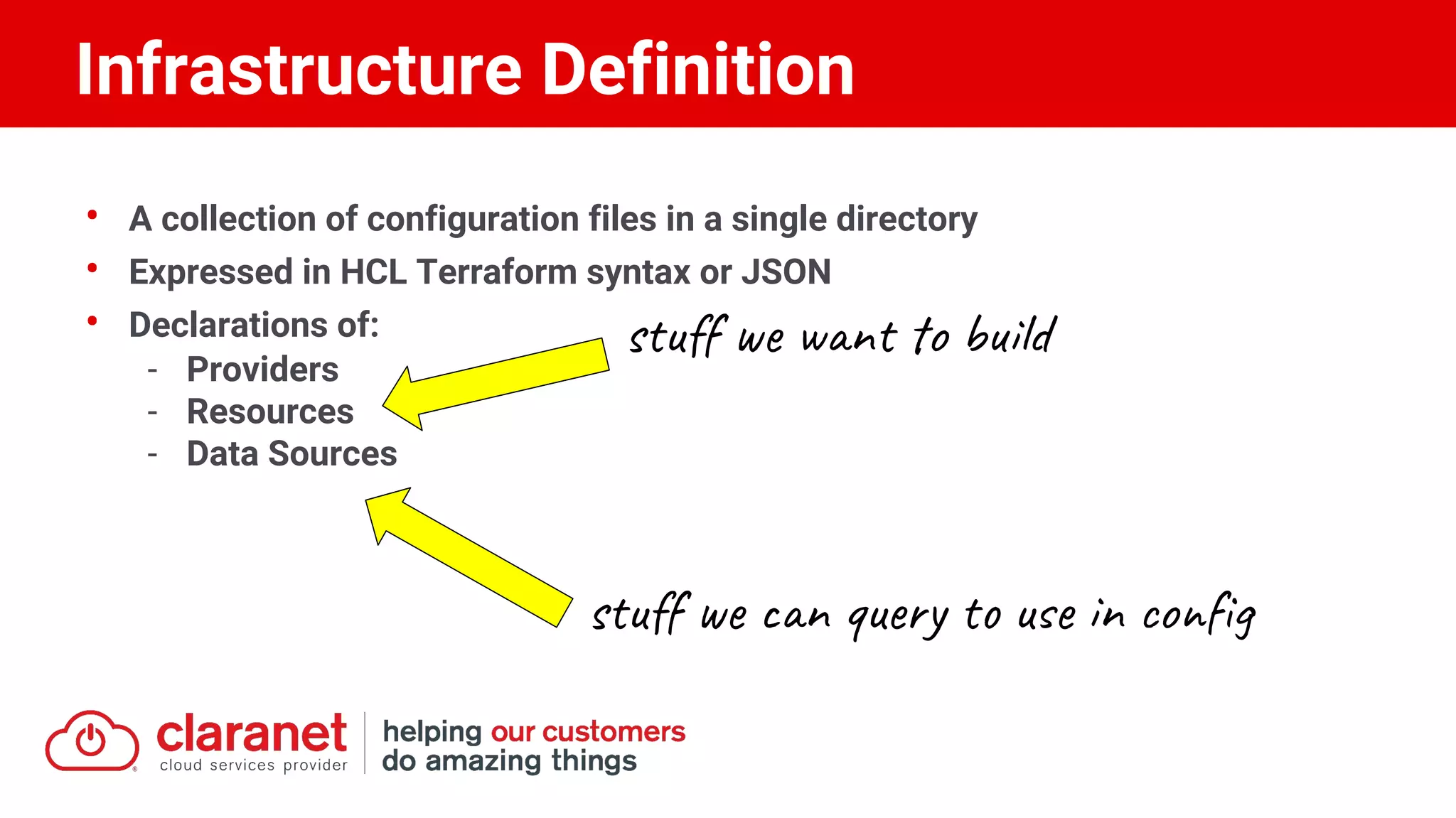

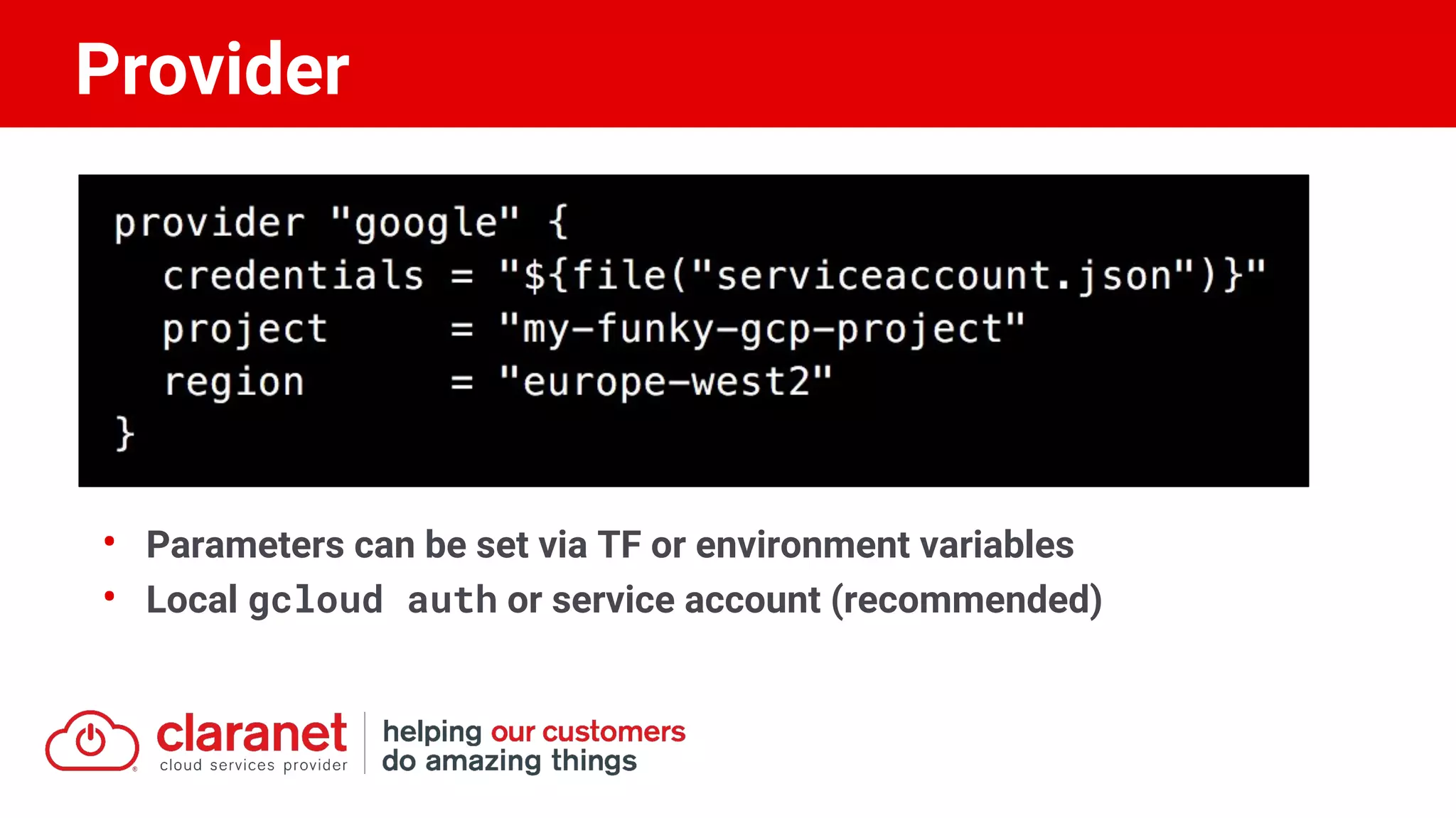

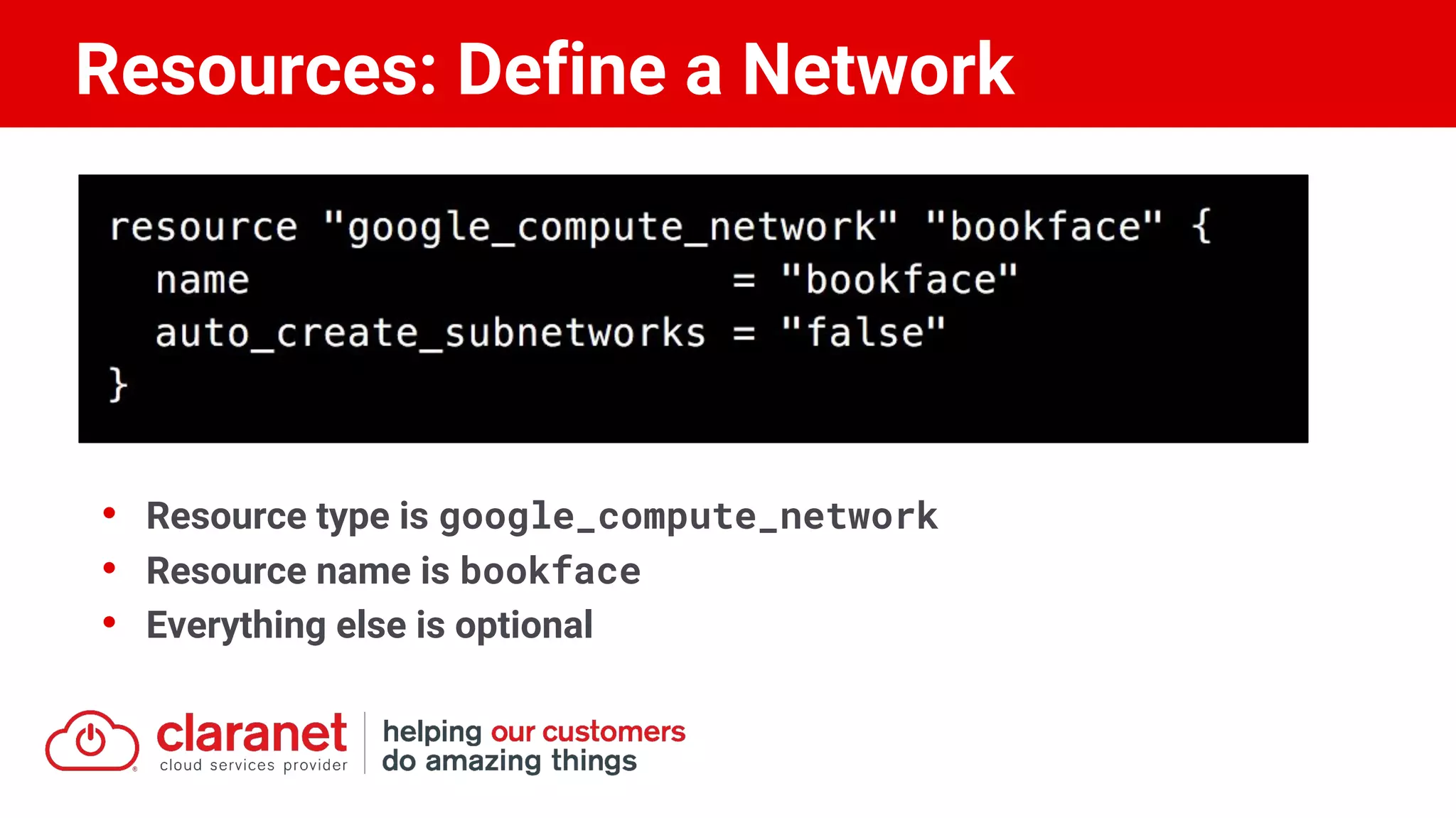

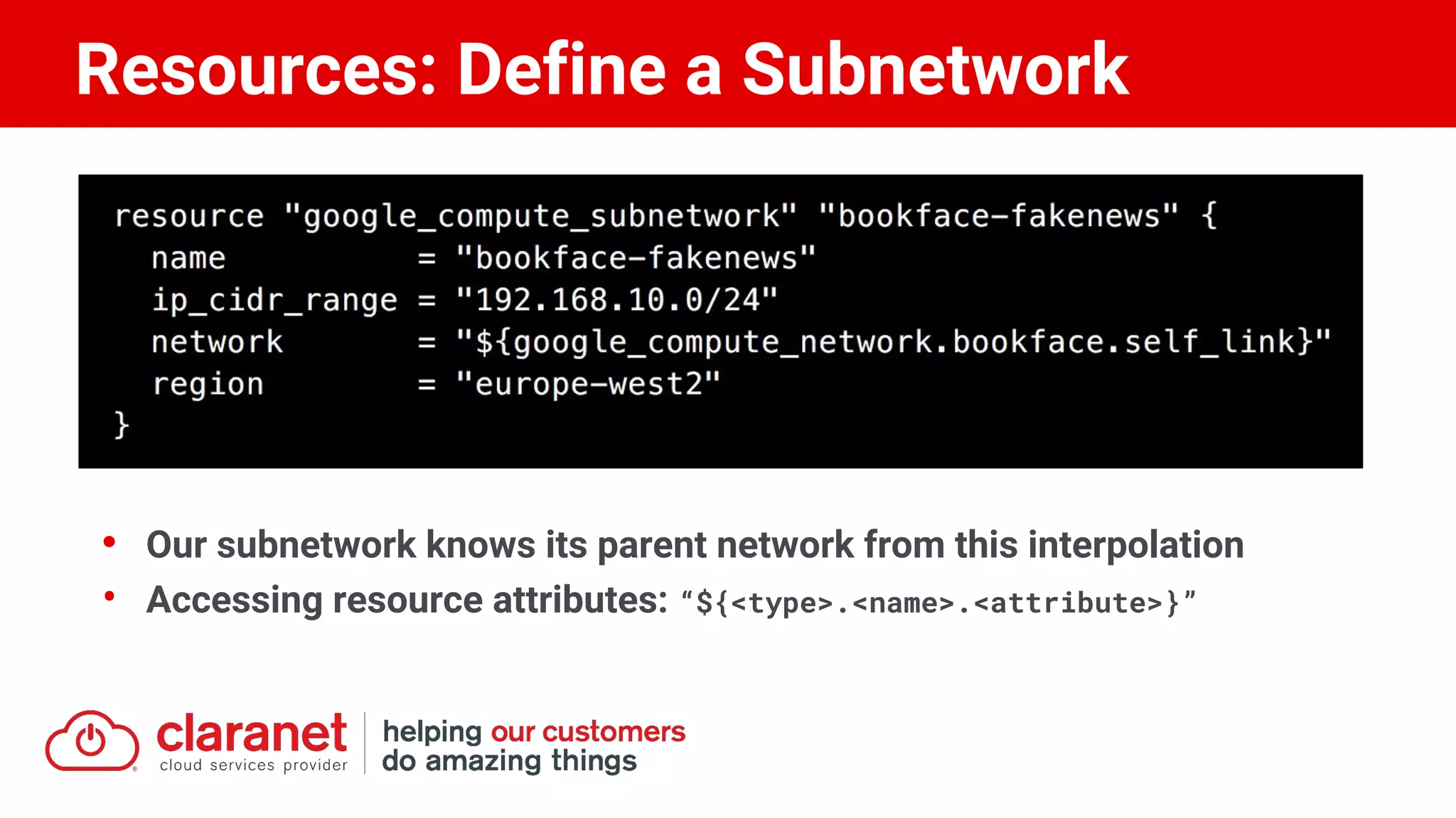

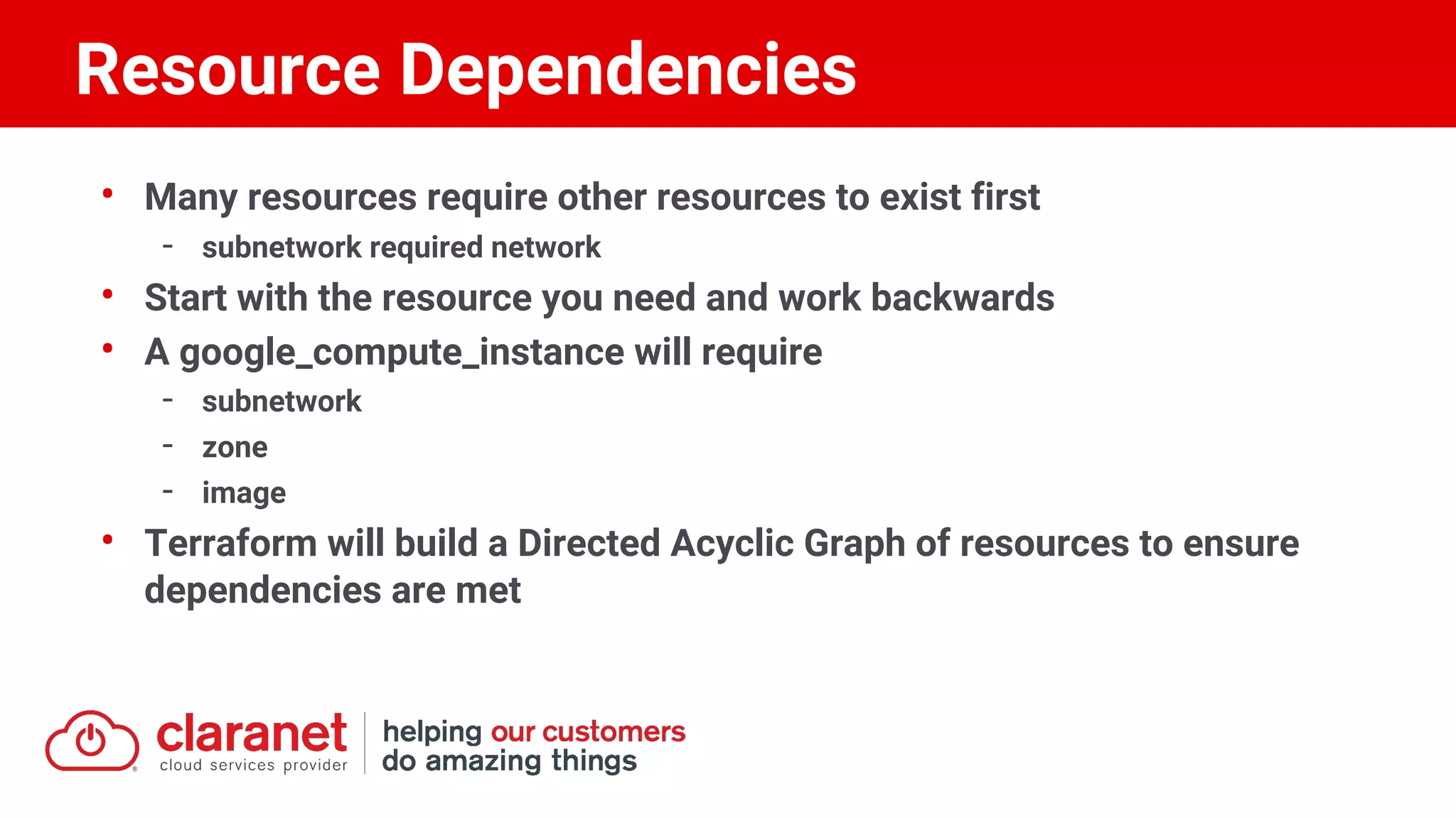

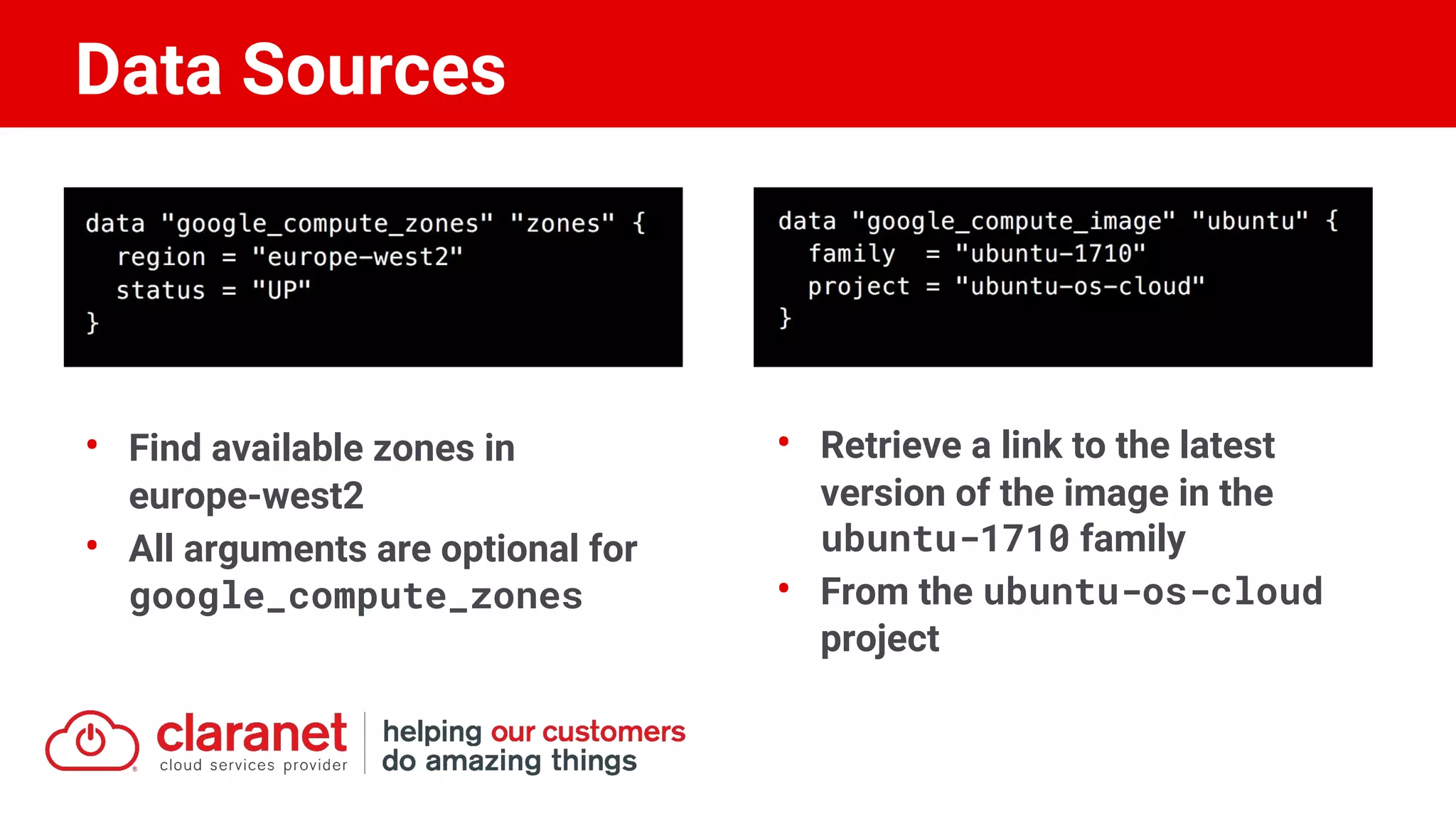

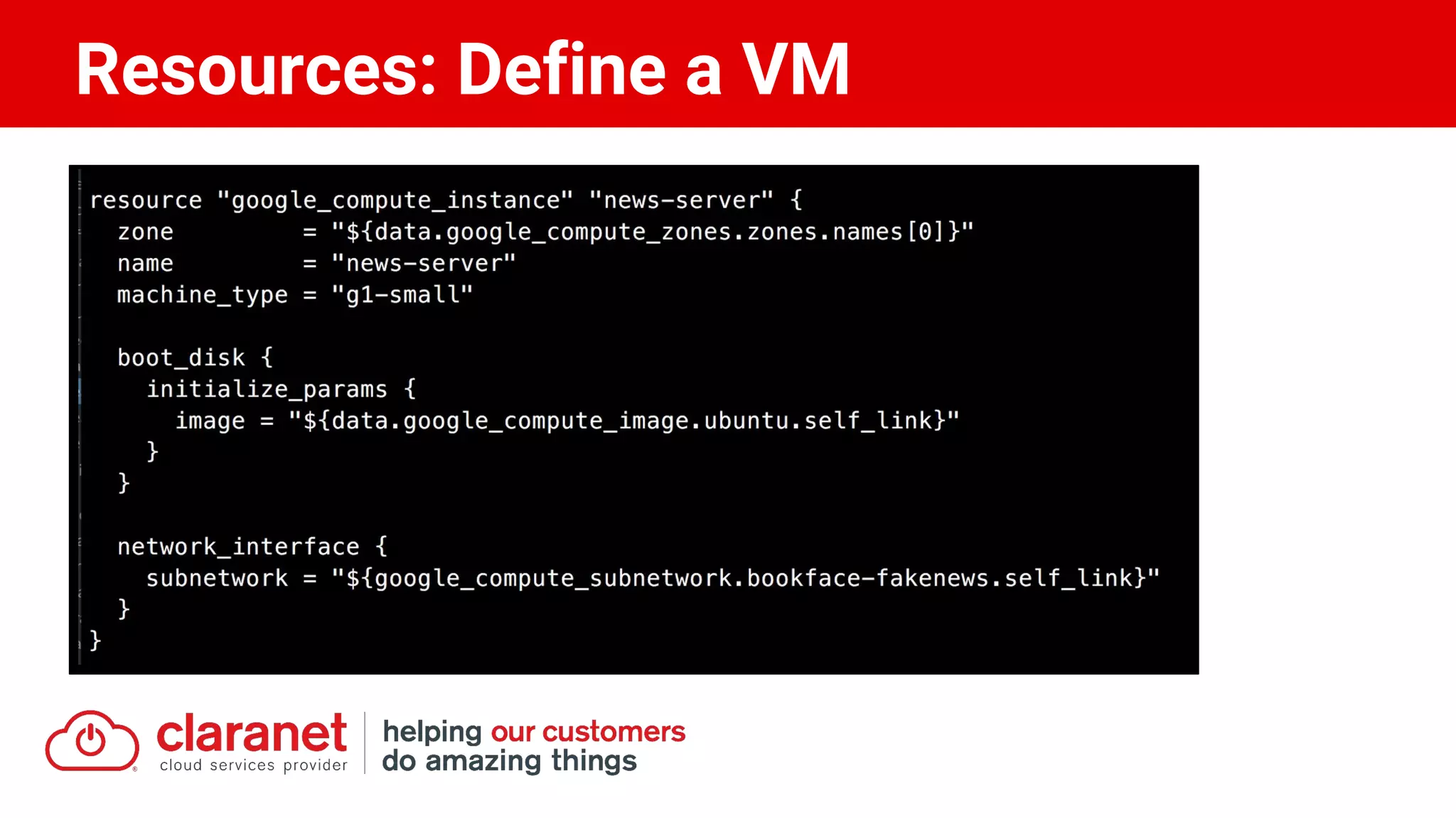

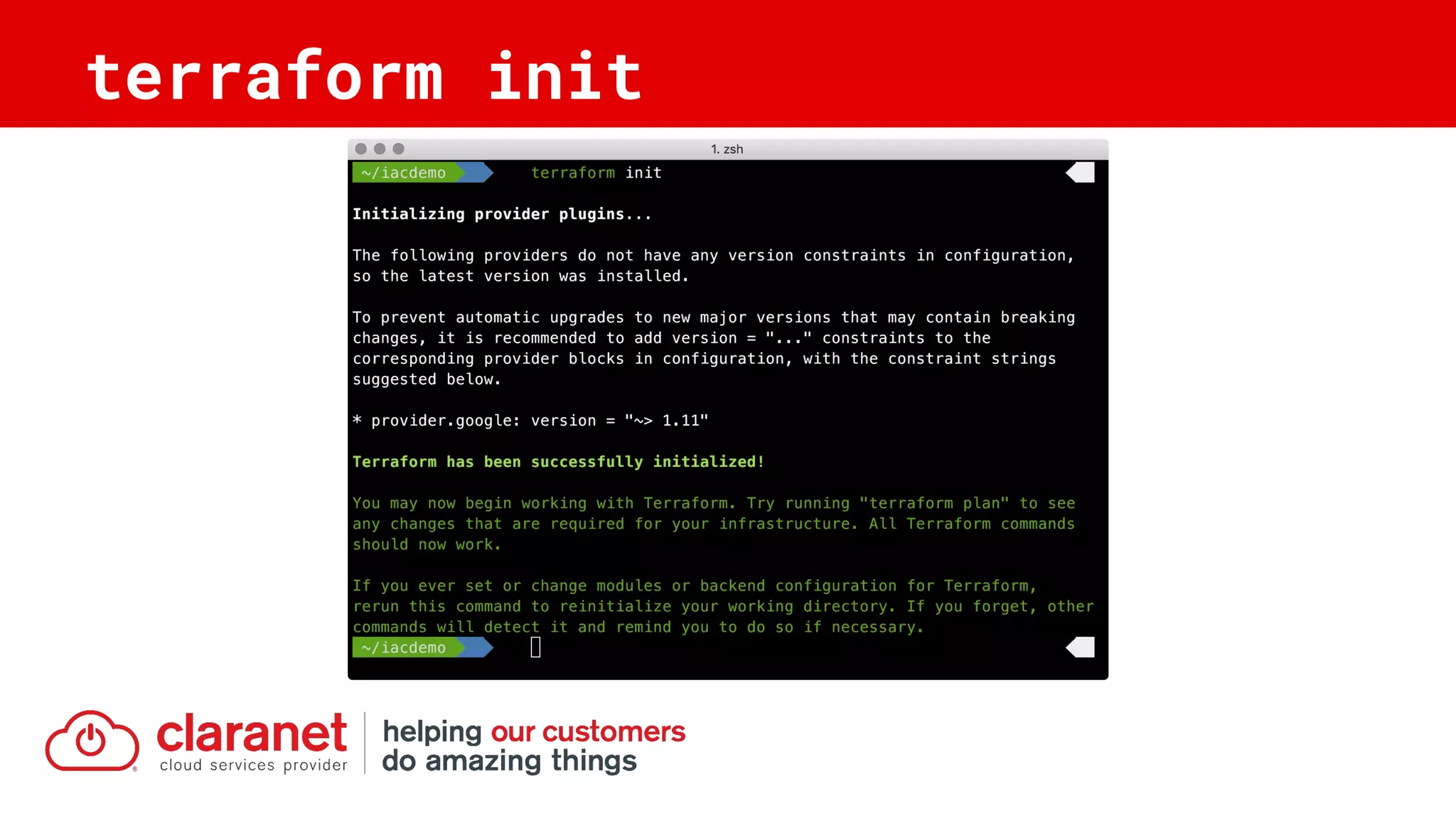

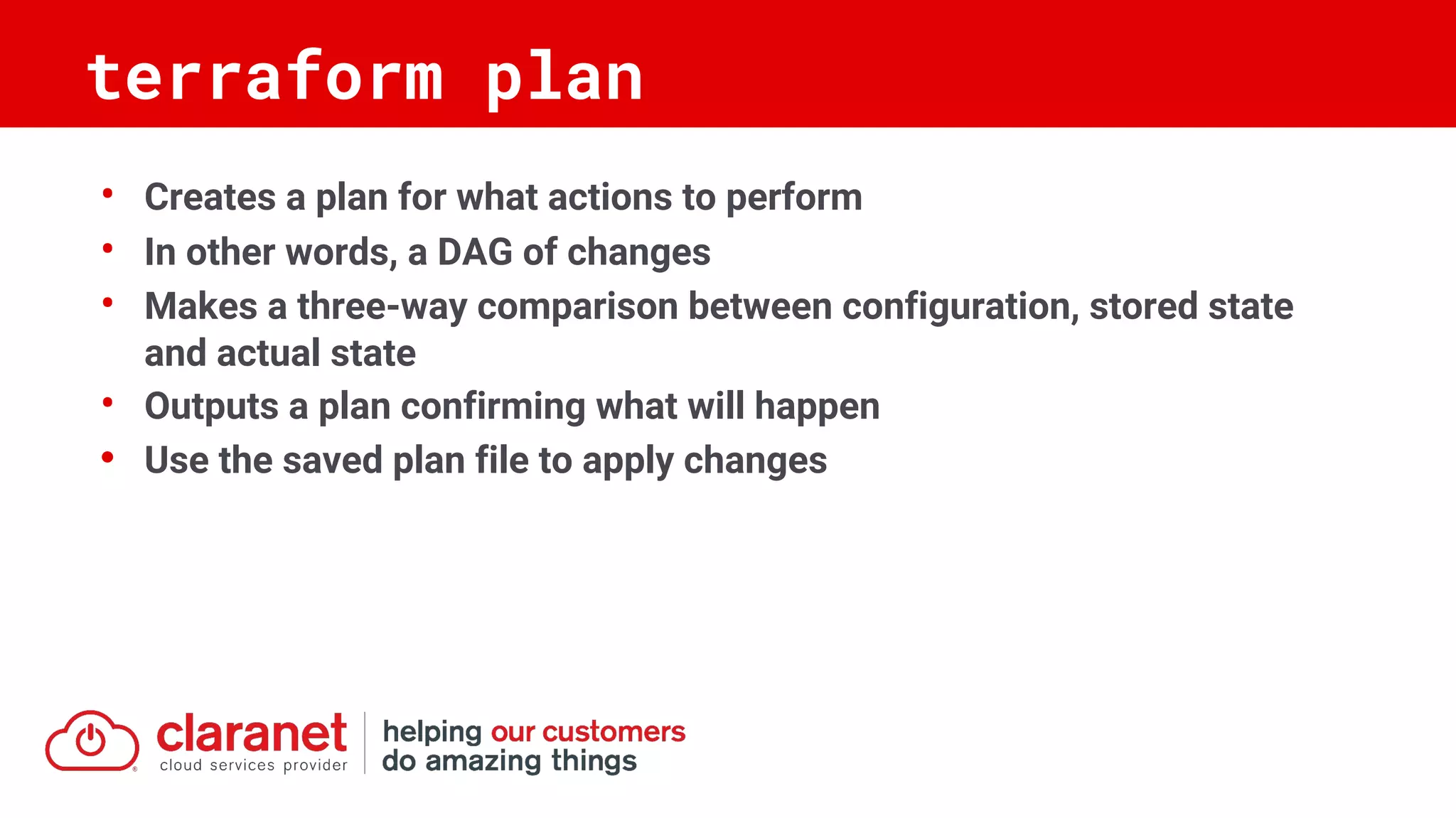

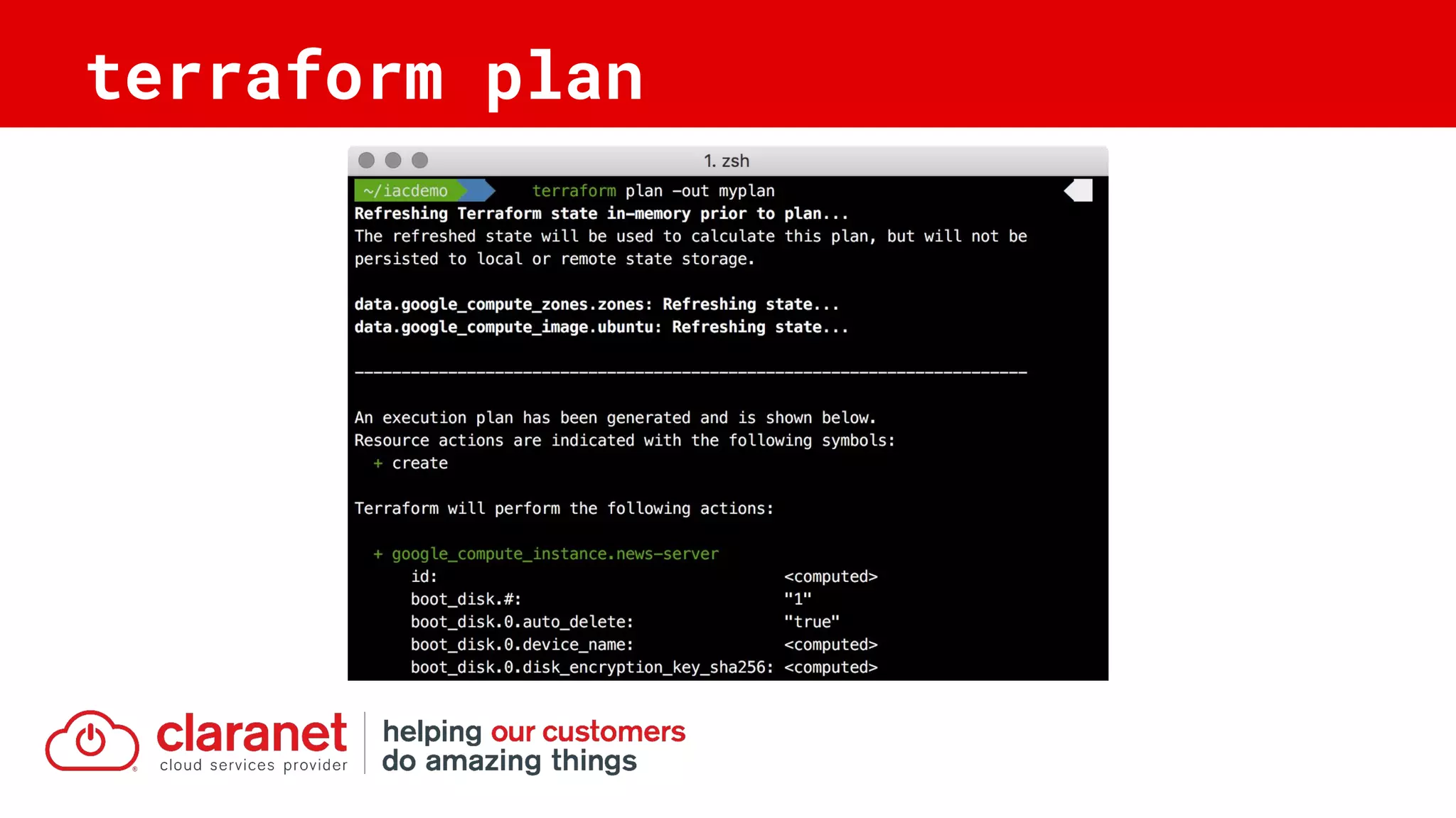

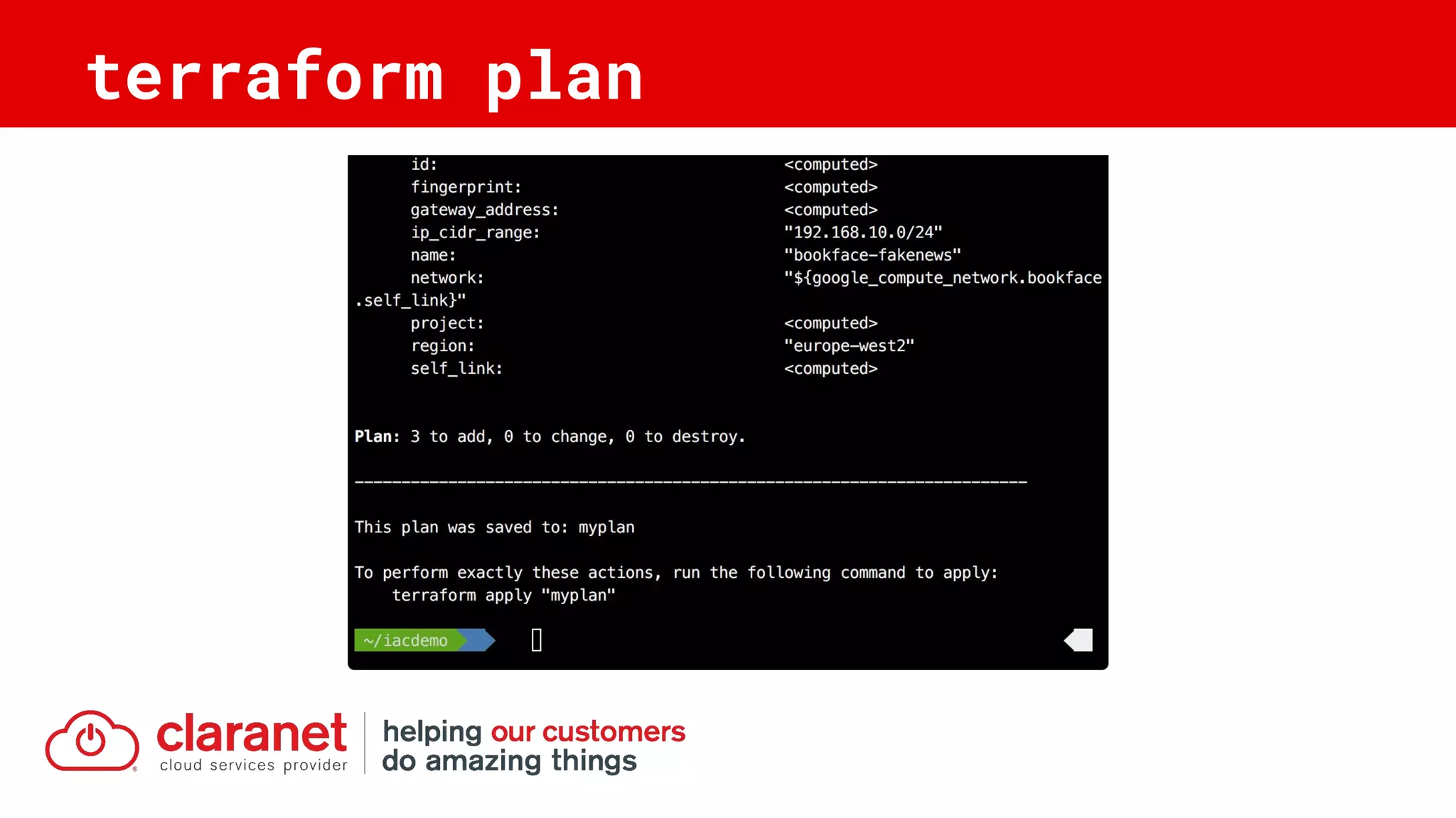

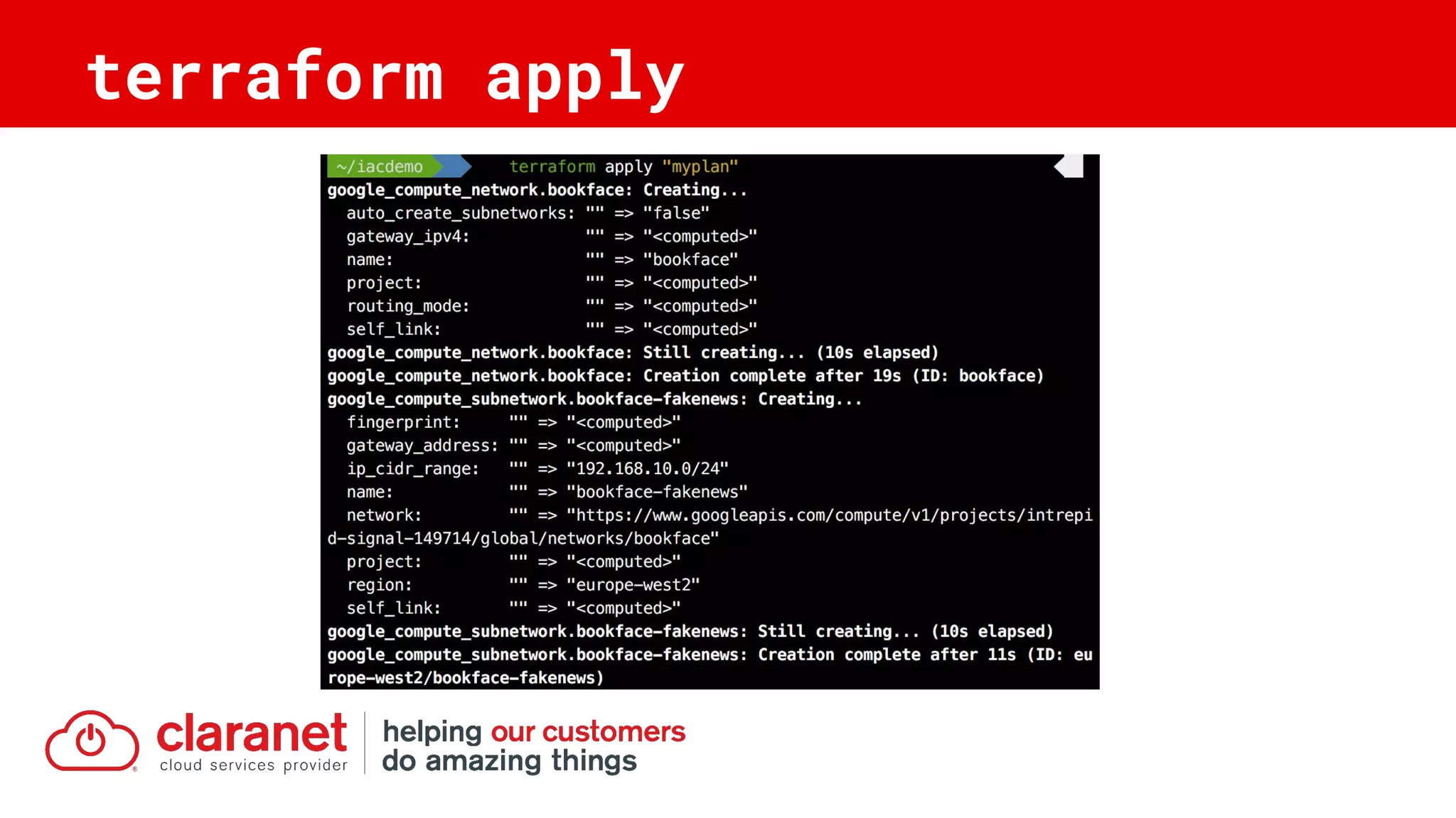

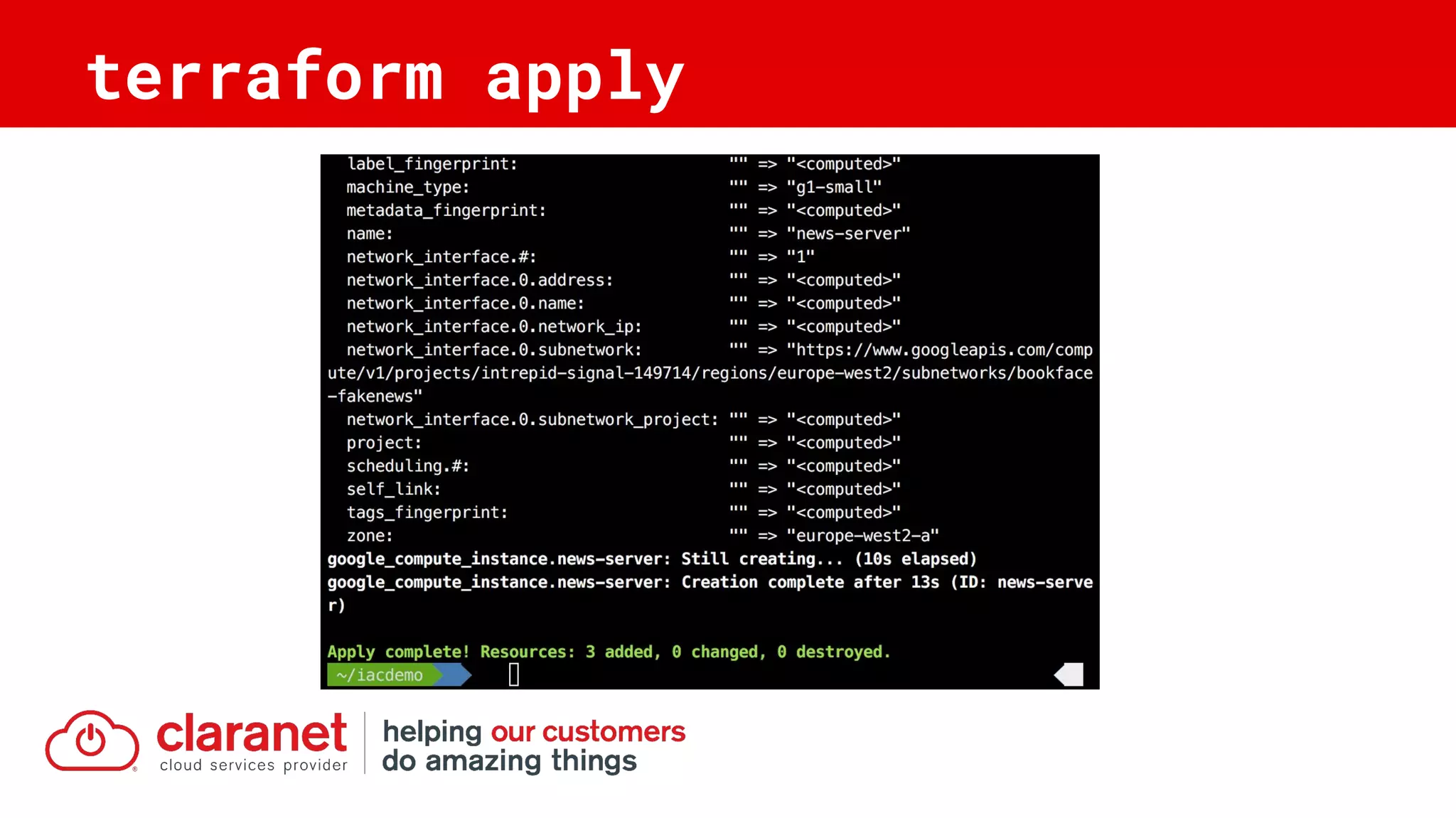

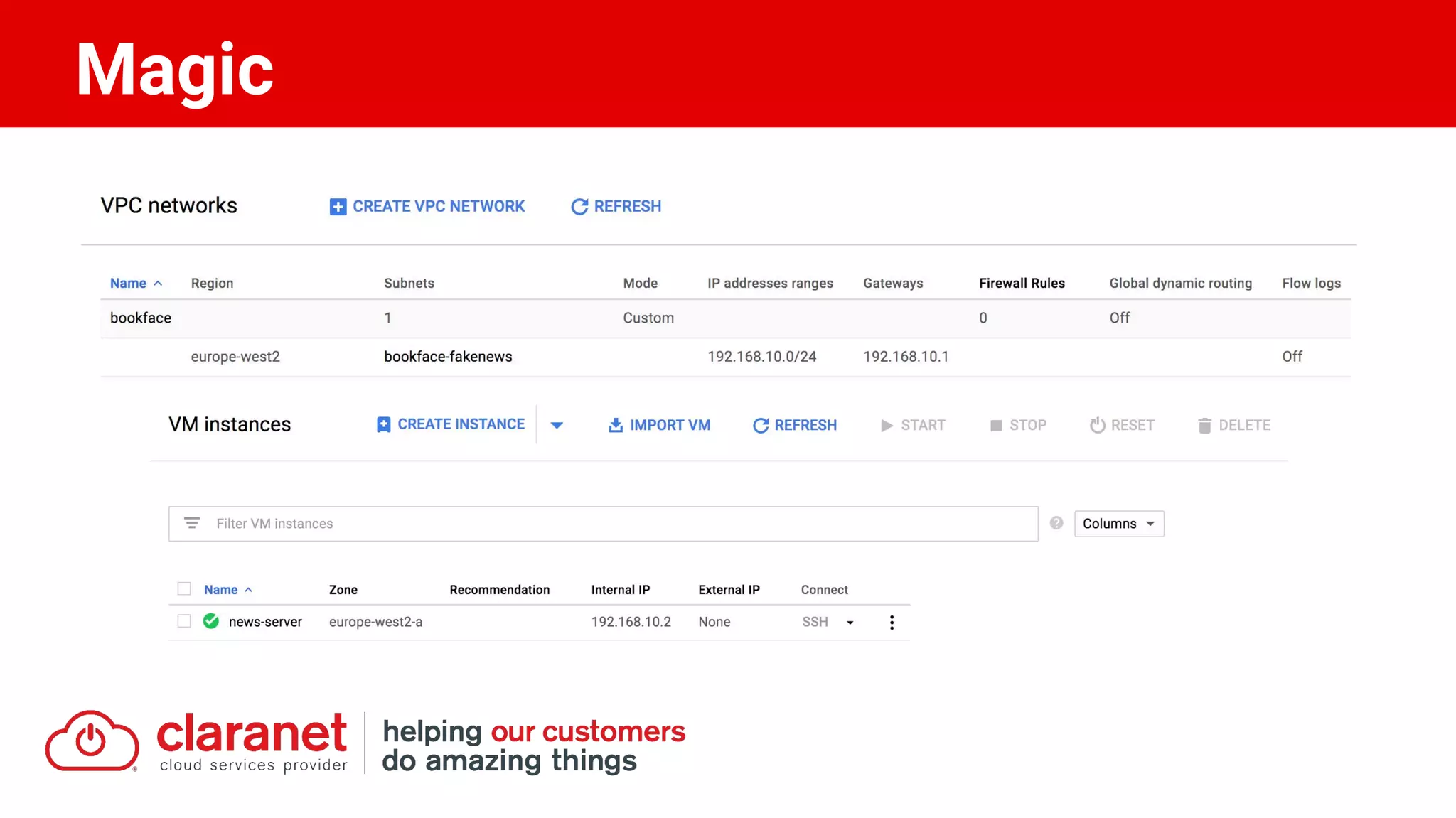

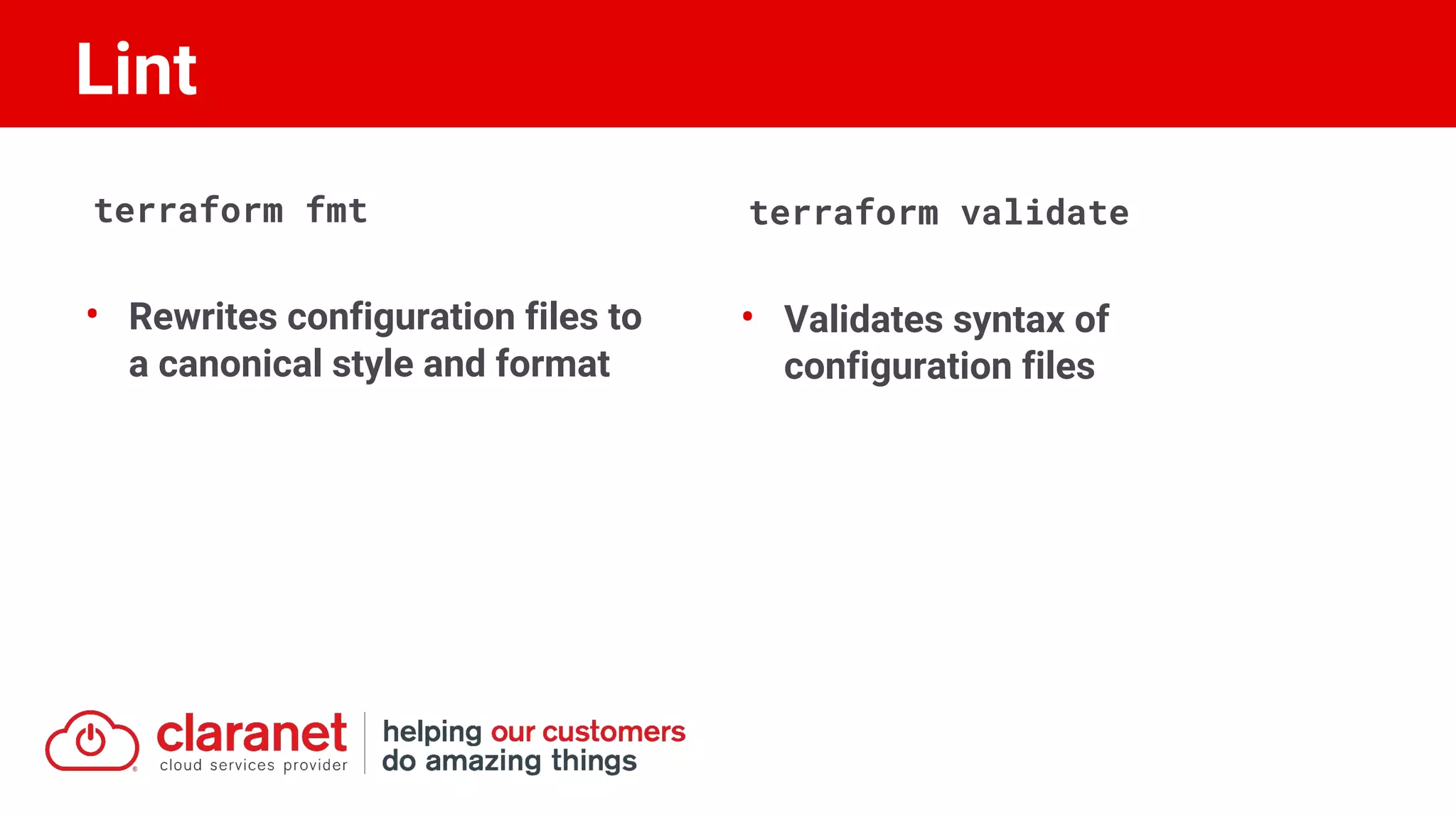

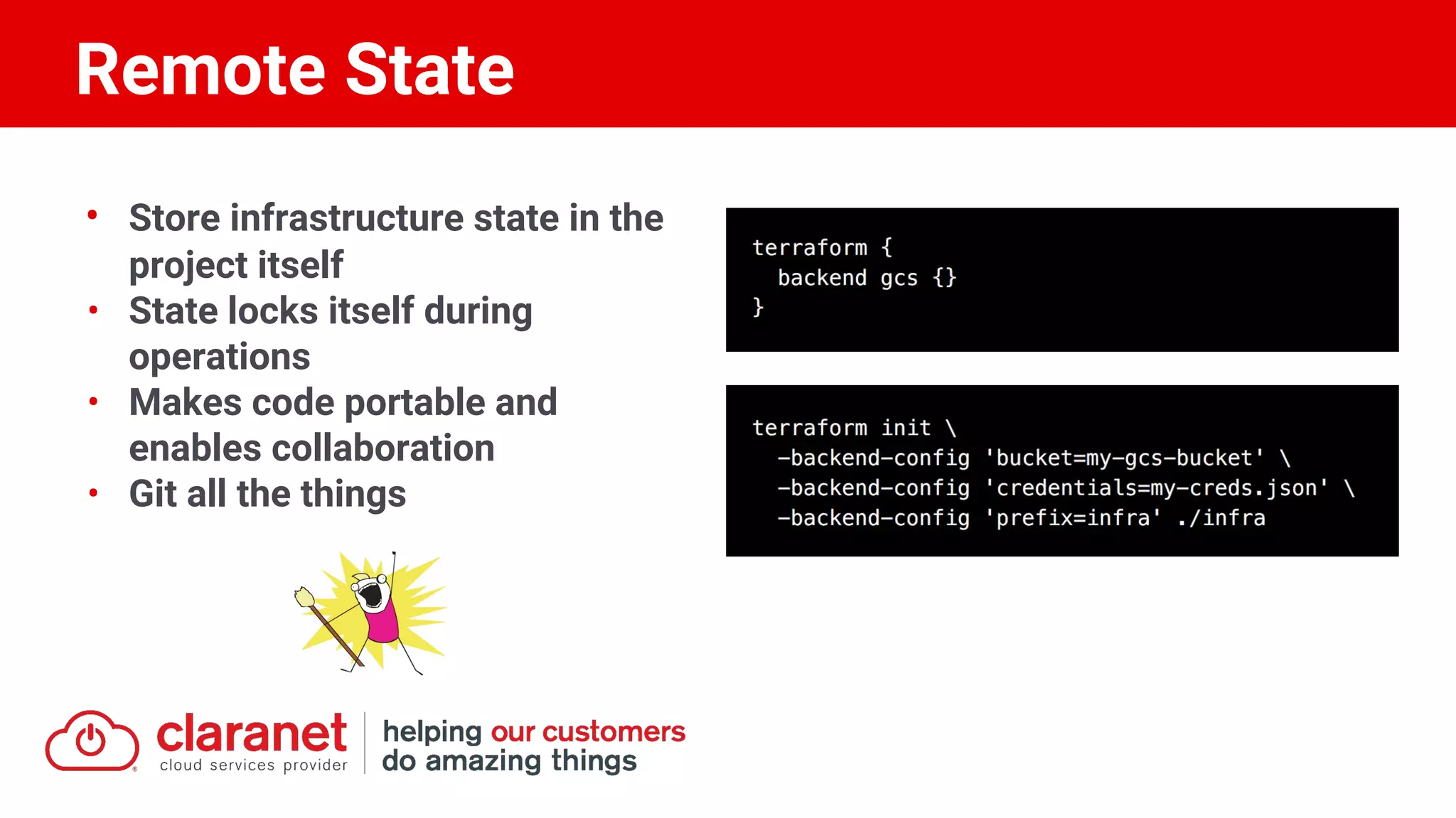

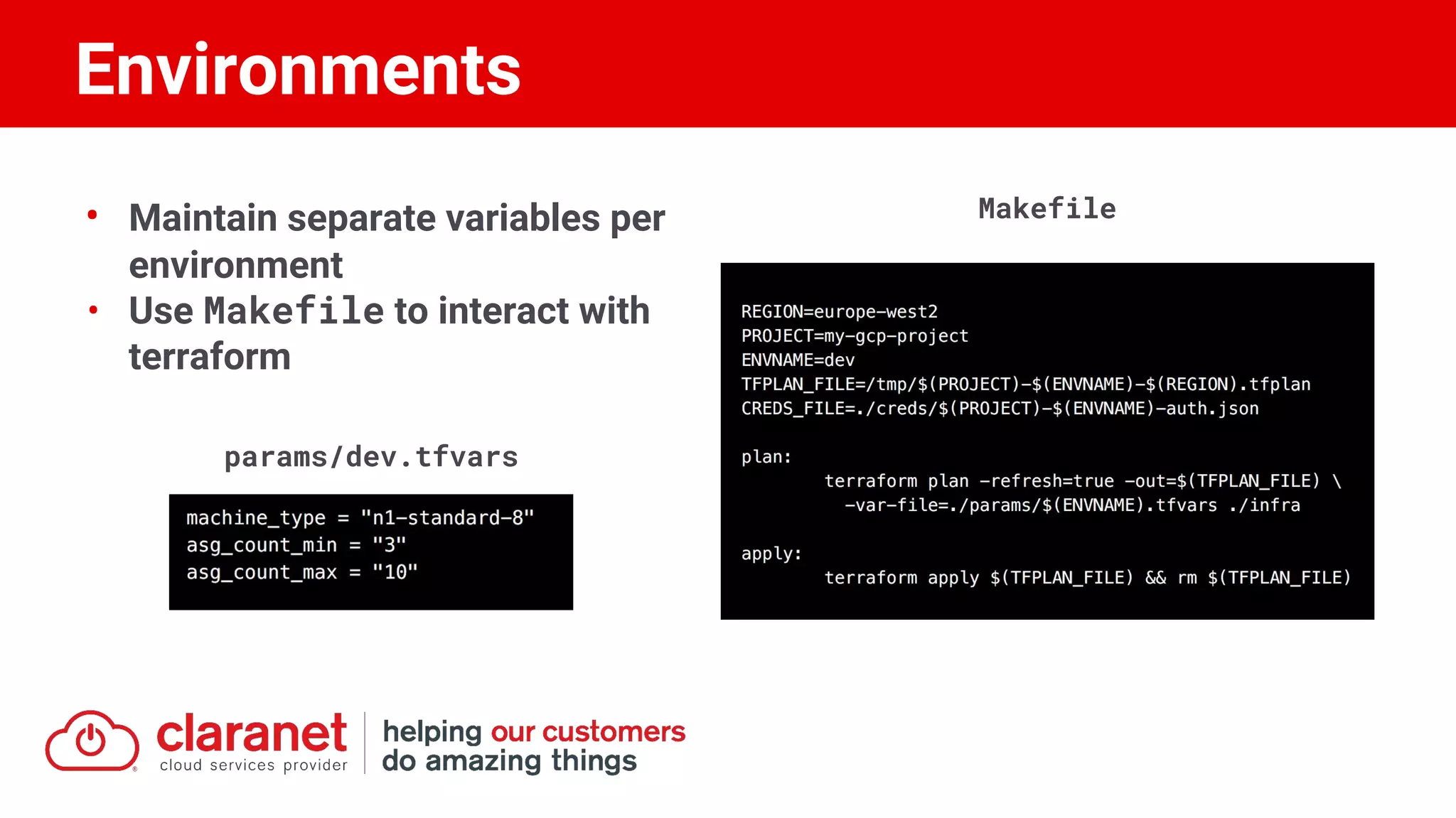

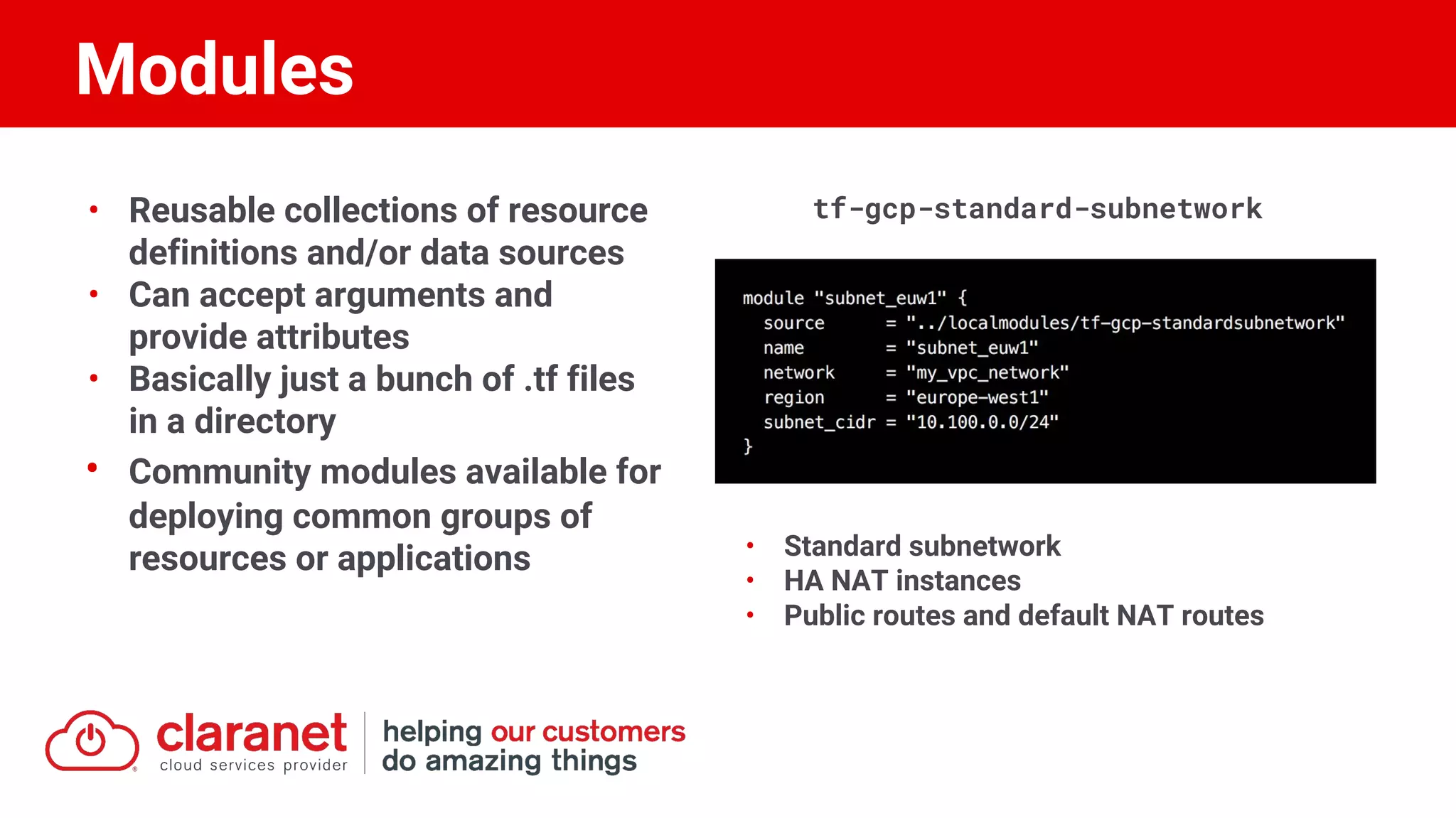

This document is a presentation by Tim Berry on Infrastructure as Code (IaC) with Terraform, covering the definition of IaC, the Terraform lifecycle, and best practices. It emphasizes that IaC is automated, modular, and auditable, and walks through the Terraform lifecycle phases: initialize, plan, apply, and destroy. The presentation also covers resource definitions, data sources, and the use of community modules to manage infrastructure in cloud environments.
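
The building blocks named above (a resource definition, a data source, and a community module) can be sketched in a single Terraform configuration. This is a minimal illustration, not the configuration from the slides: the choice of AWS as the provider, the region, the bucket and VPC names, and the CIDR ranges are all assumptions made for the example. The module shown is the publicly available `terraform-aws-modules/vpc/aws` module from the Terraform Registry.

```hcl
# Hypothetical example: the provider, region, names, and CIDR ranges below
# are assumptions for illustration and do not come from the presentation.
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # assumed region
}

# Data source: reads information that already exists; it creates nothing.
data "aws_availability_zones" "available" {
  state = "available"
}

# Resource definition: an object Terraform creates, updates, and destroys.
resource "aws_s3_bucket" "logs" {
  bucket = "example-iac-logs-bucket" # hypothetical; bucket names must be globally unique
}

# Community module: reusable configuration pulled from the Terraform Registry.
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0"

  name = "example-vpc" # hypothetical name
  cidr = "10.0.0.0/16"

  # Use the first two availability zones returned by the data source.
  azs            = slice(data.aws_availability_zones.available.names, 0, 2)
  public_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
}

# The lifecycle phases map onto four CLI commands, run in this order:
#   terraform init     # initialize: download providers and modules
#   terraform plan     # plan: preview the changes without making them
#   terraform apply    # apply: create or update the infrastructure
#   terraform destroy  # destroy: tear down everything this configuration manages
```

Because the configuration is plain text, it can be kept in version control and reviewed like any other code, which is what makes the approach auditable. Running `terraform plan` before each `terraform apply` shows the proposed changes before anything is modified.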