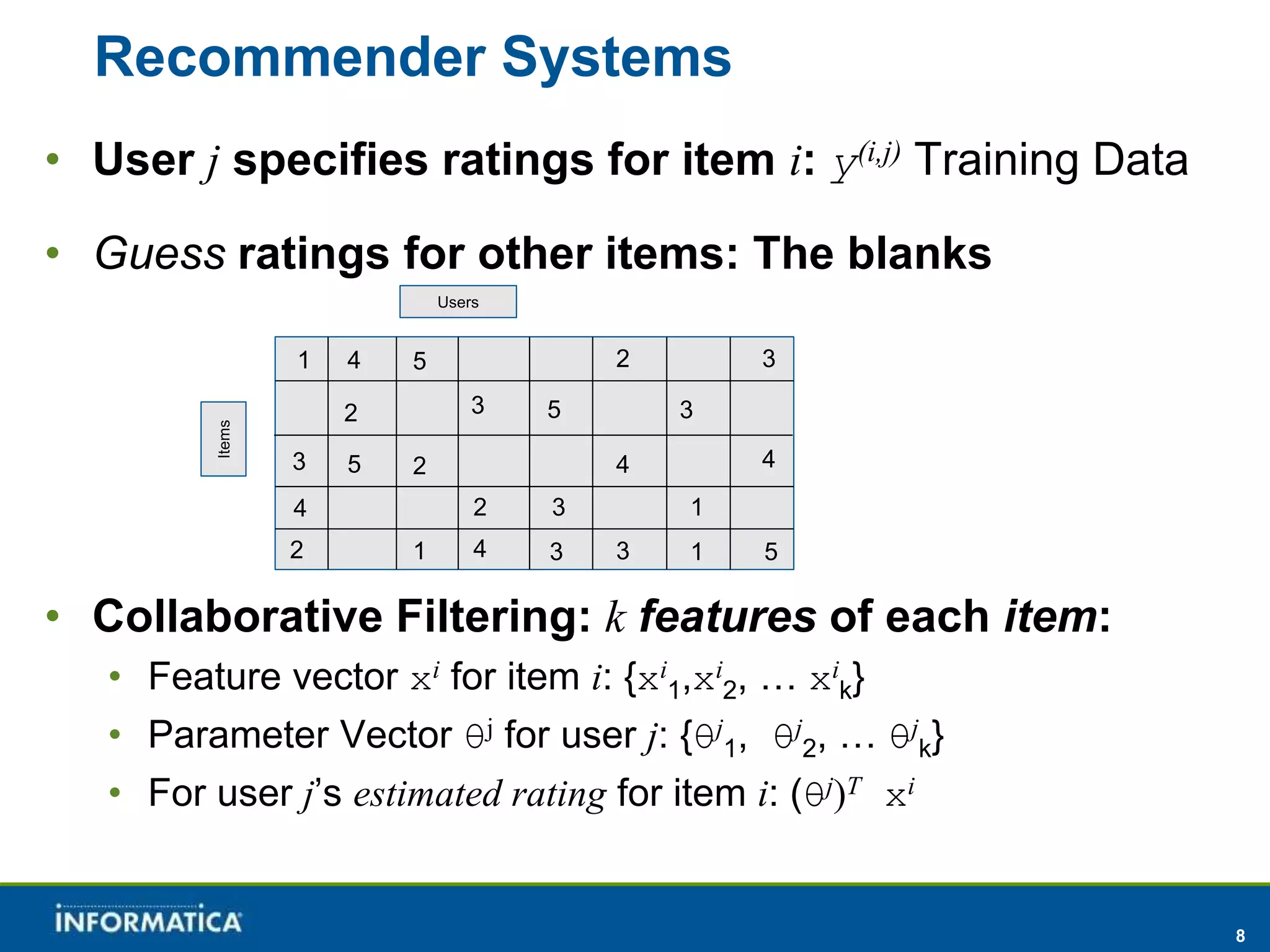

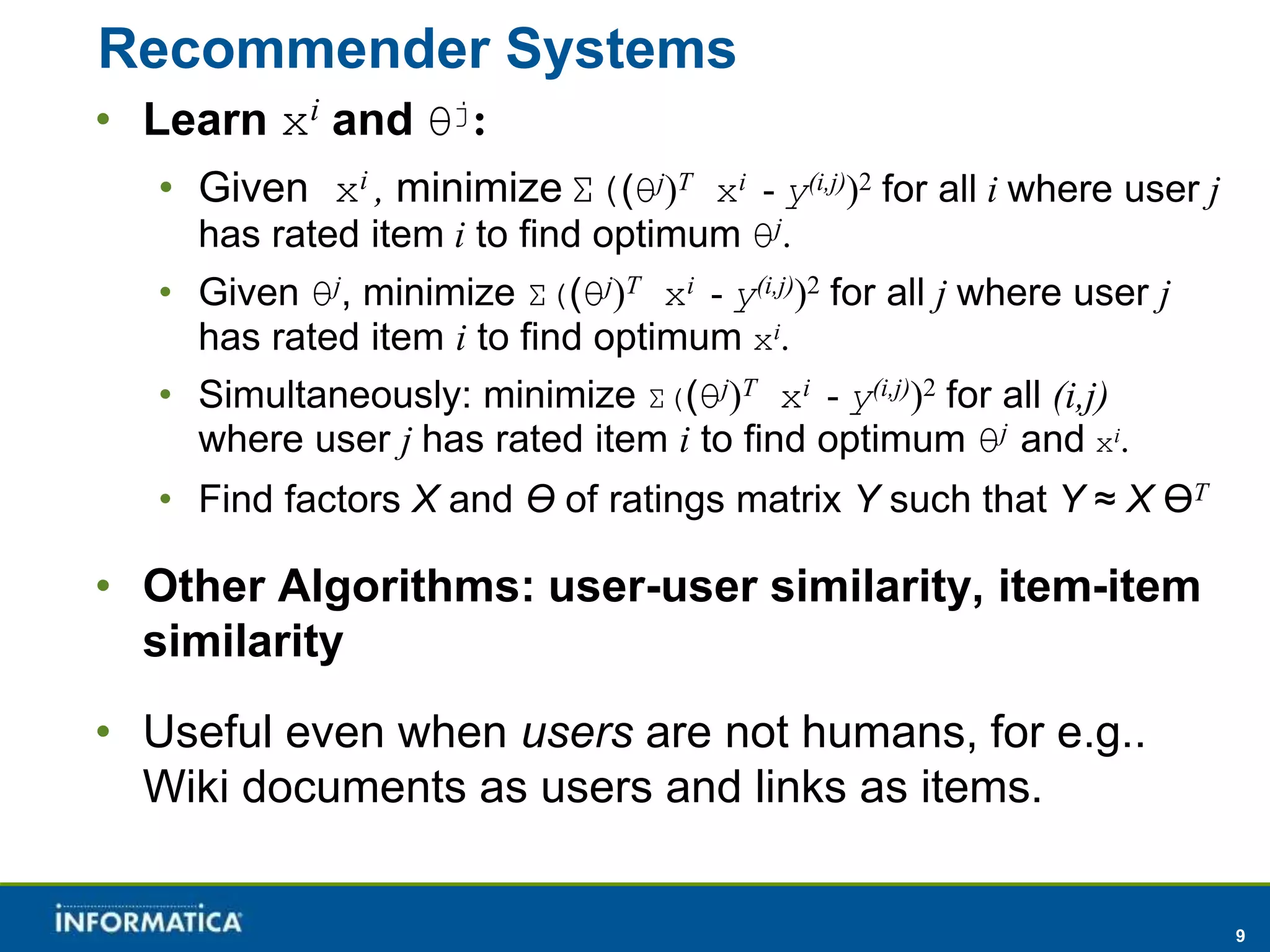

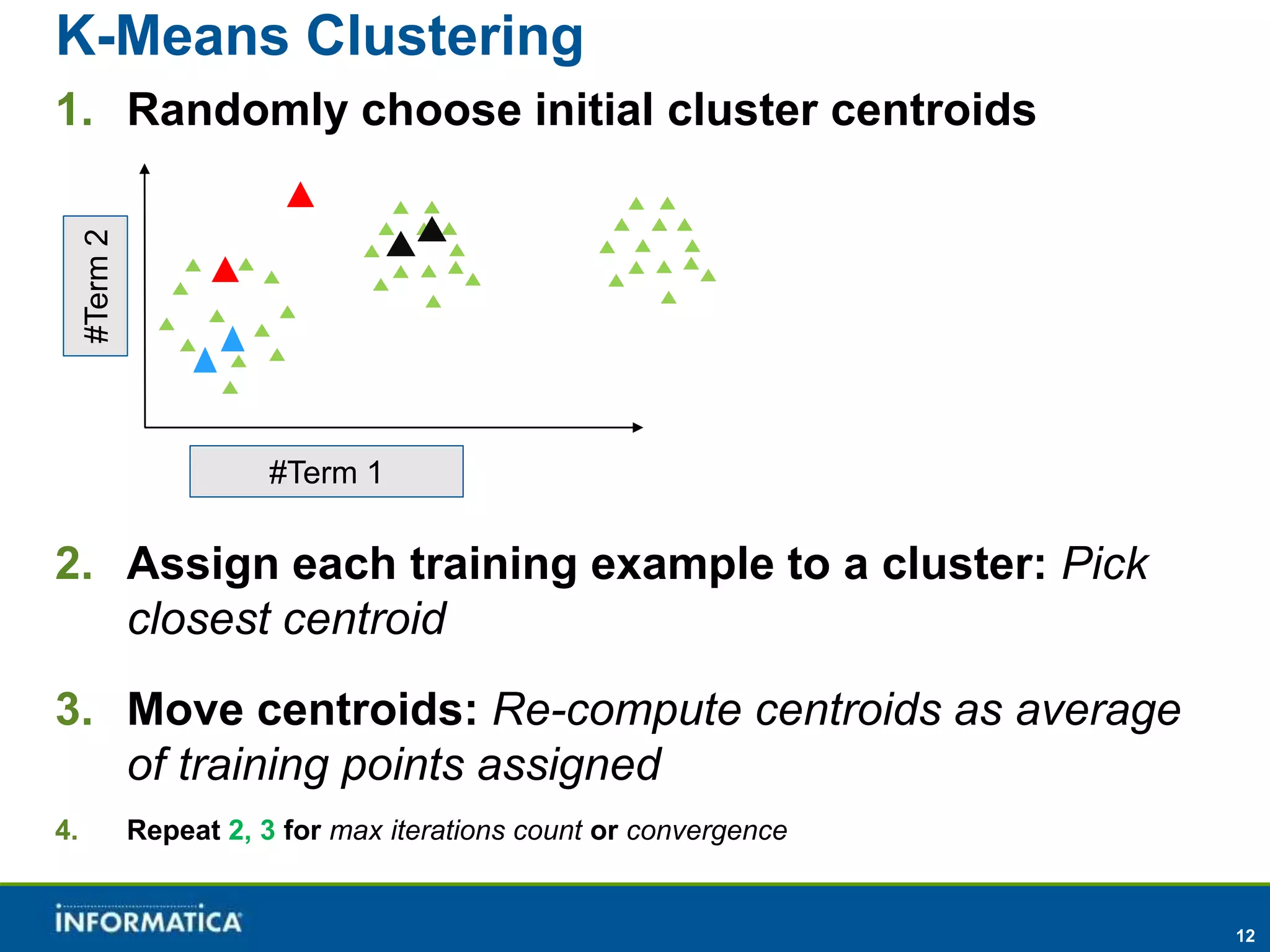

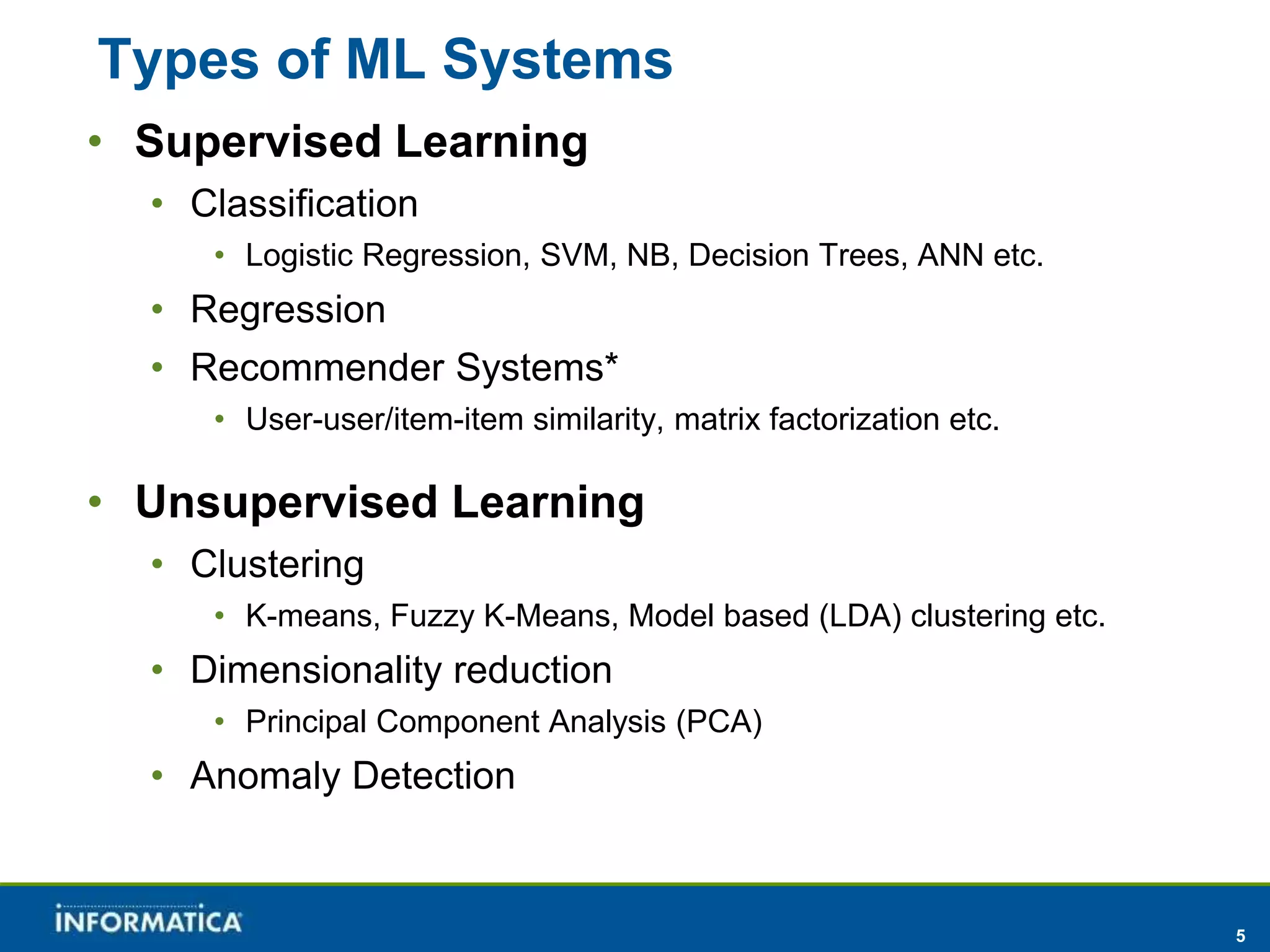

The document provides an introduction to machine learning, defining it as the process of improving task performance through experience and training. Key topics include methods of machine learning such as supervised and unsupervised learning, classification techniques like logistic regression, and applications in recommender systems and clustering. It also highlights popular tools and resources available for machine learning and its real-world applications, including speech recognition and image processing.

![7

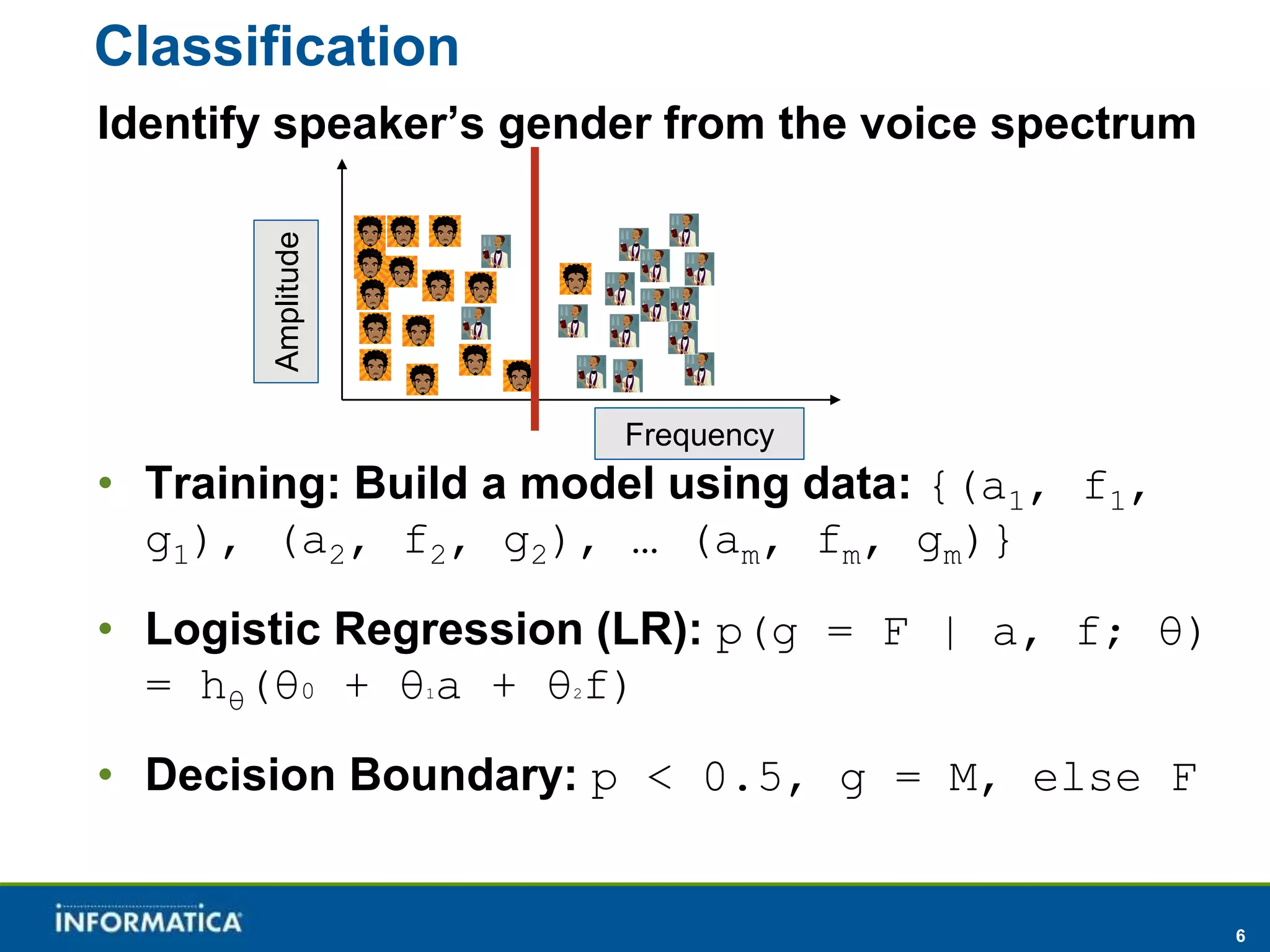

Logistic Regression

• If we let

• y = 1 when g = F, and y = 0 when g = M, and define

vector x = [a, f]

• and define a function hθ(x) = sigmoid(θT*x) where

sigmoid(z) = 1/(1+e-z). It represents probability

p(y=1|x,θ).

• Cost J(θ) = -Σ(y*log(h) + (1-y)*log(1-h)) -

λθTθ over all training examples for some λ.

• Optimization algorithm (gradient descent): Obtain θ which

minimizes J(θ).

• Try to fit model θ to cross validation data, vary λ for

optimum fitment.

• Test model θ against test data: hθ(x) ≥ 0.5, predict

gender = F, otherwise predict gender as M.](https://image.slidesharecdn.com/introtomachinelearning-141021044959-conversion-gate01/75/Introduction-to-machine_learning-7-2048.jpg)